Windows Azure and Cloud Computing Posts for 9/23/2013+

Top Stories This Week:

- Scott Guthrie (@scottgu) reported release of New Virtual Machine, Active Directory, Multi-Factor Auth, Storage, Web Site and Spending Limit Improvements on 9/26/2013 in the Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN section.

- Steven Martin (@stevemar_msft) announced that you can Deploy Pre-configured Oracle VMs on Windows Azure on the day that the Oracle World conference opened in San Francisco’s Moscone Center (see the Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN section).

- Paolo Salvatore (@babosbird) explained How to integrate Mobile Services with BizTalk Server via Service Bus on 9/27/2013 in the Windows Azure Service Bus, BizTalk Services and Workflow section.

| A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Caching, SQL Azure Database, and other cloud-computing articles. |

‡ Updated 9/28/2013 with new articles marked ‡

• Updated 9/26/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

‡ Tyler Doerksen (@tyler_gd) posted Quick Script: Copy All Blobs to New Storage Account Using PowerShell on 9/27/2013:

Here is a quick PowerShell script to copy blobs from different storage accounts on different subscriptions.

    Import-Module Azure

    $sourceAccount = 'myvids'
    $sourceKey = '@@@@@@@'
    $destAccount = 'destvids'
    $destKey = '@@@@@@@'
    $containerName = 'videos'

    $sourceContext = New-AzureStorageContext $sourceAccount $sourceKey
    $destContext = New-AzureStorageContext $destAccount $destKey

    $blobs = Get-AzureStorageBlob `
        -Context $sourceContext `
        -Container $containerName

    $copiedBlobs = $blobs | Start-AzureStorageBlobCopy `
        -DestContext $destContext `
        -DestContainer $containerName `
        -Verbose

    $copiedBlobs | Get-AzureStorageBlobCopyState

That will copy all of the blobs in the source container to the destination container.

Ricardo Villalobos (@ricvilla) posted Windows Azure Insider September 2013 – Hadoop and HDInsight: Big Data in Windows Azure on 9/25/2013:

For the September edition of the Windows Azure Insider MSDN magazine column, Bruno and I write about Big Data, the benefits of the MapReduce model, and HDInsight, the Windows Azure component that offers Hadoop-as-a-Service in the public cloud. We also show how to perform simple analytics against a public dataset using Java code and Hive.

The full article can be found here.

Enjoy!

Mariano Converti (@mconverti) reported New Windows Azure Media Services (WAMS) Asset Replicator release published on CodePlex in a 9/24/2013 post:

Last week, a new release of the Windows Azure Media Services (WAMS) Asset Replicator Tool was published on CodePlex. This September 2013 release includes the following changes:

- Code upgraded to use the latest Windows Azure Storage Client library (v2.1.0.0)

- Code upgraded to use the latest Windows Azure Media Services .NET SDK (v2.4.0.0)

- C# projects upgraded to target .NET Framework 4.5

- Cloud Service project upgraded to Windows Azure Tools v2.0

- NuGet package dependencies updated to their latest versions

- Support added to compare, replicate and verify FragBlob assets

- New approach to auto-replicate and auto-ignore assets based on metadata in the IAsset.AlternateId property using JSON format

As you can see, the most important changes are the last two items which I will go into more detail about below.

FragBlob support

FragBlob is a new storage format that will be used in an upcoming Windows Azure Media Services feature that is not yet available. In this new format, each Smooth Streaming fragment is written to storage as a separate blob in the asset’s container instead of grouping them together into Smooth Streaming PIFF files (ISMV’s/ISMA’s). Therefore, the Replicator has been updated to identify, compare, copy and verify this new FragBlob asset type.

Replicator metadata in IAsset.AlternateId property using JSON format

To decide whether or not an asset should be automatically replicated or ignored, the Replicator Tool needs to get some metadata from your assets. Currently the IAsset interface does not have a property to store custom metadata, so as a workaround the Replicator now uses the IAsset.AlternateId string property to store this metadata with a specific JSON format described below:

    {"alternateId":"my-custom-alternate-id","replicate":"No","data":"optional custom metadata"}

The following are the expected fields in the JSON format:

- alternateId: this is the actual Alternate Id value for the asset that is used to identify and track assets in both data centers.

- replicate: this is a three-state flag that the replicator will use to determine whether or not it should take automatic action for the asset. The possible values are:

- No: the asset will be automatically ignored

- Auto: the asset will be automatically replicated

- Manual: no automatic action will be taken for this asset

Important: If the replicate field is not included in the IAsset.AlternateId (or if this property is not set at all – null value), the default value is No (asset automatically ignored).

- data: this is an optional field that you can use to store additional custom metadata for the asset.
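To make the field rules above concrete, here is a minimal JavaScript sketch of how such a value could be interpreted. It is not the Replicator's own code (that lives in the C# extension methods); the helper name `parseReplicatorMetadata` is hypothetical, and the handling of non-JSON or unrecognized values is an assumption chosen to be consistent with the documented default of No.

```javascript
// Sketch only: mirrors the documented field rules, not the Replicator's C# code.
var REPLICATE_VALUES = ['No', 'Auto', 'Manual'];

// Hypothetical helper: turn the raw IAsset.AlternateId string into its fields.
function parseReplicatorMetadata(rawAlternateId) {
  var metadata = { alternateId: null, replicate: 'No', data: null };

  // Property not set at all (null value): documented default is 'No'.
  if (rawAlternateId === null || rawAlternateId === undefined) {
    return metadata;
  }

  var parsed;
  try {
    parsed = JSON.parse(rawAlternateId);
  } catch (e) {
    parsed = null;
  }

  // Assumption: a value that is not a JSON object is kept as a plain ID.
  if (parsed === null || typeof parsed !== 'object') {
    metadata.alternateId = String(rawAlternateId);
    return metadata;
  }

  if (typeof parsed.alternateId === 'string') {
    metadata.alternateId = parsed.alternateId;
  }
  // Missing replicate field falls back to 'No'; treating unknown values
  // the same way is an assumption.
  if (REPLICATE_VALUES.indexOf(parsed.replicate) !== -1) {
    metadata.replicate = parsed.replicate;
  }
  if (parsed.data !== undefined) {
    metadata.data = parsed.data;
  }
  return metadata;
}

var sample = '{"alternateId":"my-custom-alternate-id","replicate":"Auto","data":"optional custom metadata"}';
console.log(parseReplicatorMetadata(sample));
```

The three-state flag is kept as a string rather than a boolean so that Manual stays distinguishable from both automatic outcomes.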

The Replicator uses some extension methods for the IAsset interface to easily retrieve and set these values without having to deal with the JSON format. These extensions can be found in the Replicator.Core\Extensions\AssetUtilities.cs source code file.

Using these IAsset extension methods for the IAsset.AlternateId property, you can easily set the Replicator metadata in your media workflows as explained below:

- How to set the IAsset.AlternateId metadata for automatic replication

- How to set the IAsset.AlternateId metadata for manual replication

How to set the IAsset.AlternateId metadata for automatic replication

Once your asset is ready (for instance, after ingestion or a transcoding job) and you have successfully created an Origin locator for it, you need to set the alternateId and replicate fields as follows:

    // Set the alternateId to track the asset in both WAMS accounts.
    string alternateId = "my-custom-id";
    asset.SetAlternateId(alternateId);

    // Set the replicate flag to 'Auto' for automatic replication.
    asset.SetReplicateFlag(ReplicateFlag.Auto);

    // Update the asset to save the changes in the IAsset.AlternateId property.
    asset.Update();

By setting the replicate field to 'Auto', the Replicator will un-ignore the asset and automatically start copying it to the other WAMS account. When the copy operation is complete, both assets will be marked as verified if everything measures up OK; otherwise, it will report the differences/errors and the user will have to take manual action from the Replicator Dashboard (like manually forcing the copy again).

How to set the IAsset.AlternateId metadata for manual replication

Once your asset is ready and you have successfully created an Origin locator for it, you need to set the alternateId and replicate fields as follows:

    // Set the alternateId to track the asset in both WAMS accounts.
    // Make sure to use the same alternateId for the asset in the other WAMS account that you want to compare.
    string alternateId = "my-custom-id";
    asset.SetAlternateId(alternateId);

    // Set the replicate flag to 'Manual' for manual replication.
    asset.SetReplicateFlag(ReplicateFlag.Manual);

    // Update the asset to save the changes in the IAsset.AlternateId property.
    asset.Update();

By setting the replicate field to 'Manual', the Replicator will un-ignore the asset and check if there is an asset in the other WAMS account with the same alternateId field. If the Replicator finds one, it will compare both assets and mark them as verified if everything checks out OK; otherwise, it will report the differences and the user will have to take manual action from the Replicator Dashboard (like deleting one and forcing a copy of the other). This scenario is useful when comparing assets living in different WAMS accounts and generated from the same source.

Michael Collier (@MichaelCollier) described a PowerShell script to compute the Billable Size of Windows Azure Blobs in a 9/23/2013 post:

I recently came across a PowerShell script that I think will be very handy for many Windows Azure users. The script calculates the billable size of Windows Azure blobs in a container, or the entire storage account. You can get the script at http://gallery.technet.microsoft.com/Get-Billable-Size-of-32175802.

Let’s walk through using this script:

0. Prerequisites

- Windows Azure subscription. If you have MSDN, you can activate your Windows Azure benefits at http://bit.ly/140uAMt

- Windows Azure storage account

- Windows Azure PowerShell cmdlets (download and configure)

1. Select Your Windows Azure Subscription

    Select-AzureSubscription -SubscriptionName "MySubscription"

2. Update PowerShell Execution Policy

You should only need to do this if your PowerShell execution policy prohibits running unsigned scripts. More on execution policy.

    Set-ExecutionPolicy -Scope Process -ExecutionPolicy Bypass

3. Calculate Blob Size for an Entire Storage Account

    .\CalculateBlobCost.ps1 -StorageAccountName mystorageaccountname

    VERBOSE: Loading module from path 'C:\Program Files (x86)\Microsoft SDKs\Windows Azure\PowerShell\Azure\.\Microsoft.WindowsAzure.Management.SqlDatabase.dll'.
    VERBOSE: Loading module from path 'C:\Program Files (x86)\Microsoft SDKs\Windows Azure\PowerShell\Azure\.\Microsoft.WindowsAzure.Management.ServiceManagement.dll'.
    VERBOSE: Loading module from path 'C:\Program Files (x86)\Microsoft SDKs\Windows Azure\PowerShell\Azure\.\Microsoft.WindowsAzure.Management.Storage.dll'.
    VERBOSE: Loading module from path 'C:\Program Files (x86)\Microsoft SDKs\Windows Azure\PowerShell\Azure\.\Microsoft.WindowsAzure.Management.dll'.
    VERBOSE: 12:16:39 PM - Begin Operation: Get-AzureStorageAccount
    VERBOSE: 12:16:42 PM - Completed Operation: Get-AzureStorageAccount
    VERBOSE: 12:16:42 PM - Begin Operation: Get-AzureStorageKey
    VERBOSE: 12:16:45 PM - Completed Operation: Get-AzureStorageKey
    VERBOSE: Container 'deployments' with 4 blobs has a size of 15.01MB.
    VERBOSE: Container 'guestbook' with 4 blobs has a size of 0.00MB.
    VERBOSE: Container 'mydeployments' with 1 blobs has a size of 12.55MB.
    VERBOSE: Container 'test123' with 1 blobs has a size of 0.00MB.
    VERBOSE: Container 'vsdeploy' with 0 blobs has a size of 0.00MB.
    VERBOSE: Container 'wad-control-container' with 19 blobs has a size of 0.00MB.
    VERBOSE: Container 'wad-iis-logfiles' with 15 blobs has a size of 0.01MB.
    Total size calculated for 7 containers is 0.03GB.

4. Calculate Blob Size for a Specific Container within a Storage Account

    .\CalculateBlobCost.ps1 -StorageAccountName mystorageaccountname `
        -ContainerName deployments

    VERBOSE: Loading module from path 'C:\Program Files (x86)\Microsoft SDKs\Windows Azure\PowerShell\Azure\.\Microsoft.WindowsAzure.Management.SqlDatabase.dll'.
    VERBOSE: Loading module from path 'C:\Program Files (x86)\Microsoft SDKs\Windows Azure\PowerShell\Azure\.\Microsoft.WindowsAzure.Management.ServiceManagement.dll'.
    VERBOSE: Loading module from path 'C:\Program Files (x86)\Microsoft SDKs\Windows Azure\PowerShell\Azure\.\Microsoft.WindowsAzure.Management.Storage.dll'.
    VERBOSE: Loading module from path 'C:\Program Files (x86)\Microsoft SDKs\Windows Azure\PowerShell\Azure\.\Microsoft.WindowsAzure.Management.dll'.
    VERBOSE: 12:12:48 PM - Begin Operation: Get-AzureStorageAccount
    VERBOSE: 12:12:52 PM - Completed Operation: Get-AzureStorageAccount
    VERBOSE: 12:12:52 PM - Begin Operation: Get-AzureStorageKey
    VERBOSE: 12:12:54 PM - Completed Operation: Get-AzureStorageKey
    VERBOSE: Container 'deployments' with 4 blobs has a size of 15.01MB.
    Total size calculated for 1 containers is 0.01GB.

5. Calculate the Cost

The Windows Azure pricing calculator page should open immediately after the script executes. From there you can adjust the slider to the desired storage size, and view the standard price. The current price is $0.095 per GB for geo-redundant storage. So this one storage account is costing me only $0.0027 per month. I can handle that.
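As a rough cross-check of the numbers above, the arithmetic can be sketched in a few lines of JavaScript. This is not how CalculateBlobCost.ps1 itself works; the function names are hypothetical, the container sizes are copied from the verbose output, and the 1 GB = 1024 MB conversion is an assumption. Billable size and the pricing calculator's rounding may differ, so treat the result as an estimate only.

```javascript
// Container sizes in MB, copied from the verbose output above.
var containerSizesMb = [15.01, 0.00, 12.55, 0.00, 0.00, 0.00, 0.01];

// Hypothetical helper: sum per-container sizes and convert to GB.
// Assumption: 1 GB = 1024 MB.
function totalSizeGb(sizesMb) {
  var totalMb = sizesMb.reduce(function (sum, mb) {
    return sum + mb;
  }, 0);
  return totalMb / 1024;
}

// Hypothetical helper: flat monthly estimate at a single per-GB price.
function estimateMonthlyCost(sizeGb, pricePerGb) {
  return sizeGb * pricePerGb;
}

var sizeGb = totalSizeGb(containerSizesMb);
console.log('Total size: ' + sizeGb.toFixed(2) + ' GB');
// $0.095 per GB is the geo-redundant price quoted in the post.
console.log('Estimated monthly cost: $' + estimateMonthlyCost(sizeGb, 0.095).toFixed(4));
```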

<Return to section navigation list>


Windows Azure SQL Database, Federations and Reporting, Mobile Services

‡ David Pallman posted Getting Started with Mobility, Part 10: A Back-end in the Cloud with Windows Azure Mobile Services on 9/28/2013:

In this series of posts, we're looking at how to get started as a mobile developer. In Parts 1-9, we examined a variety of mobile platforms and client app development approaches (native, hybrid, web). We've seen a lot so far, but that's only the front-end; typically, a mobile app also needs a back-end. We'll now start looking at various approaches to providing a back-end for your mobile app. Here in Part 10 we'll look at Microsoft's cloud-based Mobile Back-end as a Service (MBaaS) offering, Windows Azure Mobile Services.

About Mobile Back-end as a Service (MBaaS) Offerings

To create a back-end for your mobile app(s), you're typically going to care about the following:

- A service layer, where you can put server-side logic

- Persistent data storage

- Authentication

- Push notifications

You could create a back-end for the above using many different platforms and technologies, and you could do so in a traditional data center or in a public cloud. You'd need to write a set of web services, create a database or data store of some kind, provide a security mechanism, and so on.

What's interesting is that today you can make a "build or buy" decision about your mobile back-end: several vendors and open source groups have decided to offer all of the above as a ready-to-use, out-of-box service. Microsoft's Windows Azure Mobile Services is an example of this. Of course, it doesn't do all of your work for you--you're still going to be responsible for supplying a data model and server-side logic. Nevertheless, MBaaS gives you a huge head start. MBaaS is especially valuable if you are mostly a mobile developer who wants to focus their time on the app and not a back-end implementation.

Windows Azure Mobile Services

Windows Azure Mobile Services "provides a scalable cloud backend for building Windows Store, Windows Phone, Apple iOS, Android, and HTML/JavaScript applications. Store data in the cloud, authenticate users, and send push notifications to your application within minutes." Specifically, that means you get the following:

- Authentication (to Facebook, Twitter, Microsoft, or Google accounts)

- Scripting for server-side logic

- Push notifications

- Logging

- Data storage

- Diagnostics

- Scalability

The service will also generate for you starter mobile clients for iOS, Android, Windows Phone, Windows 8, or HTML5. You can use these apps as your starting point, or as references for seeing how to hook up your own apps to connect to the service.

Pricing

So what does all this back-end goodness cost? At the time of this writing, there are Free, Standard ($25/month), and Premium ($199/month) tiers of pricing. You can read the pricing details here.

Training

We reference training resources throughout this post. A good place to start, though, is here:

Get Started with Mobile Services

Android iOS Windows Phone Windows 8 HTML5

Provisioning a Mobile Service

The first thing you'll notice about WAMS is the care that's been given to the developer experience, especially your first-time experience. Once you have a Windows Azure account, you'll go to azure.com, sign-in to the management portal, and navigate to the Mobile Services tab. From there, you're only a handful of clicks away from rapid provisioning of a mobile back-end.

1 Kick-off Provisioning

Click New > Mobile Service > Create to begin provisioning a mobile service.

Provisioning a Mobile Service

2 Define a Unique Name and Select a Database

On the first provisioning screen, you'll choose an endpoint name for your service, and either create a database or attach to one you've previously created in the cloud. The service offers a free 20MB SQL database. You'll also indicate which data center to allocate the service in (there are 8 worldwide, 4 in the U.S.).

Provisioning a Mobile Service - Screen 1

Provisioning a Mobile Service - Screen 2

3 Wait for Provisioning to Complete

Click the Checkmark button, and provisioning will commence. It's fast! In less than a minute your service will have been created.

Newly-provisioned Mobile Service Listed in Portal

4 Use the New Mobile Service Wizard

Click on your service to set it up. You'll be greeted with a wizard that walks you through. This is especially helpful if this is your first time using Windows Azure Mobile Services. On the first screen, you'll indicate which mobile platform you are targeting: Windows Store (Windows 8), Windows Phone 8, iOS, Android, or HTML5 (don't worry, you're not restricted to a single mobile platform and can come back and change this setting as often as you wish).

Setup Wizard, Screen 1

5 Generate a Mobile App that Uses your Service

Next, you can download an automatically generated app for the platform you've selected, pre-wired up to talk to the service you just provisioned. To do so, click the Create a New App link. This will walk you through 1) installing the SDK you need for your mobile project, 2) creating a database table, and 3) downloading and running your app. The app and database will initially be for a ToDo database, but you can amend the database and app to your liking once you're done with the wizard.

Generating a Mobile Client App for Android

In the next section, we'll review how to build and run the app that you generated, and how to view what's happening on the back end.

Building and Running a Generated Mobile App

Let's walk through building and running the To Do app the portal auto-generates for you. The mobile client download is a zip file, which you should save locally, Unblock, and extract to a local folder. Next, you can open the project and run it--it's that simple to get started.

Running the App

When you run the app, you'll see a simple ToDo app--one that is live, and uses your back-end in the cloud for data storage. Run the app and kick the tires by adding some tasks. Enter a task by entering its name and clicking Add. Delete an item by touching its checkbox.

To Do app running on Android phone

Viewing the Data

Now, back in the Windows Azure portal we can inspect the data that has been stored in the database in the cloud. Click on the Data link at the top of the Windows Azure portal for your mobile service, and you'll see what's in the ToDo table. It should match what you just entered using the mobile app.

Database Data in the Cloud

Dynamic Data

One of the great features of Windows Azure Mobile Services is its ability to dynamically adjust its data model. This allows you to change your mobile app's data structure in code, and the back-end database will automatically add new columns if it needs to--all by itself.

Get Started with Data in Mobile Services

Android iOS Windows Phone Windows 8 HTML5

Dynamic data is a great feature, but some people won't want it enabled, perhaps once you're all ready for production use. You can enable or disable the feature in the Configure page of the portal.

Server-side Logic

Windows Azure Mobile Services happens to use node.js, which means server-side logic is something you write in JavaScript.

Mobile Services Server Script Reference

You can have scripts associated with your database table(s), where operations like Insert, Update, Delete, or Read execute script code. You set these up on the Data page of the portal for your mobile service.

Database Action Scripts
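As an illustration of a table script, here is a minimal sketch of an insert script that validates an incoming item before storing it. The `insert(item, user, request)` shape, `request.execute()` and `request.respond()` follow the Mobile Services server script model; the `text` field is an assumption based on the generated ToDo table, and the local `statusCodes` object is only a stand-in for the global of the same name that the service supplies, so that the sketch also runs outside the portal.

```javascript
// Stand-in for the statusCodes global that Mobile Services provides to
// table scripts; defined here only so the sketch runs outside the service.
var statusCodes = { BAD_REQUEST: 400 };

// Insert script sketch for a ToDo-style table.
// Assumption: items carry a string field named 'text'.
function insert(item, user, request) {
  if (typeof item.text !== 'string' || item.text.trim().length === 0) {
    // Reject the request without touching the table.
    request.respond(statusCodes.BAD_REQUEST, 'The text of a todo item must not be empty.');
    return;
  }

  // Normalize the value, then let the default insert operation run.
  item.text = item.text.trim();
  request.execute();
}
```

When pasting a script like this into the portal, the stand-in `statusCodes` declaration would be dropped in favor of the built-in global.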

You can also set up scheduled scripts, which run on a schedule. On the Schedule page of the portal, click Create a Scheduled Job to define a scheduled job.

Creating a Scheduled Job

Once you've defined a scheduled job, you can access it in the portal to enter script code and enable or disable the job.

Setting a Job's Script Code

Authentication

You can authenticate against Microsoft accounts, Facebook, Twitter, or Google. This involves registering your app for authentication and configuring Mobile Services; restricting database table permissions to authenticated users; and adding authentication to the app.

Get Started with Authentication

Android iOS Windows Phone Windows 8 HTML5

Push Notifications

Push notifications support is provided for each mobile platform. Tutorials acquaint you with the registration and code steps needed to implement push notifications for each platform.

Get Started with Push Notifications

Android iOS Windows Phone Windows 8 HTML5

Summary

Windows Azure Mobile Services provides a fast and easy mobile back-end in the cloud. It offers the essential capabilities you need in a back end and supports the common mobile platforms. If you're comfortable expressing your server-side logic in node.js JavaScript, this is a compelling MBaaS to consider.

MBaaS is a new FLA (Five-Letter Acronym) AFAIK.

Bruno Terkaly (@brunoterkaly) produced a series of Windows Azure SQL Database (a.k.a., SQL Azure) hands-on-labs (HOLs) on 9/26/2013. Here are links:

A set of database-oriented posts

- How to create a Windows Azure Storage Account

- How to Export an On-Premises SQL Server Database to Windows Azure Storage

- How to Migrate an On-Premises SQL Server 2012 Database to Windows Azure SQL Virtual Machine

- How to Migrate an On-Premises SQL Server 2012 Database to Windows Azure SQL Database

- Setting up an Azure Virtual Machine For Developers with Visual Studio 2013 Ultimate and SQL Server 2012 Express

<Return to section navigation list>

Windows Azure Marketplace DataMarket, Cloud Numerics, Big Data and OData

Splunk (@splunk) now supports OData, according to a 9/27/2013 OData for Splunk announcement:

Ever wanted to be able to access your Splunk data from Excel or Tableau? This app provides an OData (http://www.odata.org) interface to your saved searches, which you can easily connect to with Excel, Tableau and a myriad of other programs.

This application is currently under private access, and works with Splunk 4.2x and above. If you would like access, please contact us at devinfo@splunk.com, or use the contact links on the site.

Release Notes

- Version: 0.5.3

- All versions

Now works with Excel 2013.

Brian Benz (@bbenz) reported OData v4.0 approved as Committee Specification by the OASIS Open Data Protocol Technical Committee on 9/13/2013 (missed when posted):

Microsoft Open Technologies, Inc is pleased to announce the approval and publication of OData Version 4.0 Committee Specification (CS) by the members of the OASIS Open Data Protocol (OData) Technical Committee. As we reported back in May, this brings OData 4.0 one step closer to becoming an OASIS Standard.

The Open Data Protocol (OData) uses REST-based data services to access and manipulate resources defined according to an Entity Data Model (EDM).

The Committee Specification is published in three parts: Part 1: Protocol defines the core semantics and facilities of the protocol. Part 2: URL Conventions defines a set of rules for constructing URLs to identify the data and metadata exposed by an OData service as well as a set of reserved URL query string operators. Part 3: Common Schema Definition Language (CSDL) defines an XML representation of the entity data model exposed by an OData service.
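To give a feel for what the URL conventions cover, here is a minimal JavaScript sketch that assembles a resource URL from a service root, an entity set and reserved query options such as `$filter` and `$top`. The helper name `buildODataUrl`, the service root and the `Products` entity set are hypothetical; the sketch only percent-encodes option values and does not validate them against the specification.

```javascript
// Hypothetical helper: build an OData resource URL from its parts.
// options maps system query option names (e.g. $filter, $top) to values.
function buildODataUrl(serviceRoot, entitySet, options) {
  // Avoid a double slash between the service root and the entity set.
  var root = serviceRoot.replace(/\/+$/, '');
  var pairs = Object.keys(options || {}).map(function (name) {
    return name + '=' + encodeURIComponent(String(options[name]));
  });
  return root + '/' + entitySet + (pairs.length ? '?' + pairs.join('&') : '');
}

// Example: the five cheapest-looking products from an imaginary service.
var url = buildODataUrl('https://example.org/odata/', 'Products', {
  $filter: 'Price lt 10',
  $top: 5
});
console.log(url);
```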

The CS also includes schemas, ABNF components, Vocabulary Components and the OData Metadata Service Entity Model.

You can also download a zip file of the complete package of each specification and related files here.

Join the OData Community

Here are some resources for those of you interested in using or implementing the OData protocol or contributing to the OData standard:

- Visit OData.org for information, content, videos, and documentation and to learn more about OData and the ecosystem of open data producer and consumer services.

- Join the OData.org mailing list.

- Check out the #OData discussion on twitter.

- Join the OASIS OData technical committee (OData TC) and contribute to the standard.

- Send comments on OData version 4.0 to the OASIS OData Technical Committee

Our congratulations to the OASIS OData Technical Committee on achieving this milestone! As always, we’re looking forward to continued collaboration with the community to develop OData into a formal standard through OASIS.

<Return to section navigation list>

Windows Azure Service Bus, BizTalk Services and Workflow

‡ Paolo Salvatore (@babosbird) described How to integrate Mobile Services with BizTalk Server via Service Bus on 9/27/2013:

Introduction

This sample demonstrates how to integrate Windows Azure Mobile Service with line of business applications, running on-premises or in the cloud, via BizTalk Server 2013, Service Bus Brokered Messaging and Service Bus Relayed Messaging. The Access Control Service is used to authenticate Windows Azure Mobile Services against the Windows Azure Service Bus. In this scenario, BizTalk Server 2013 can run on-premises or in a Virtual Machine on Windows Azure.

Scenario

This scenario extends the TodoList tutorial application. For more information, see the following resources:

- Get started with Mobile Services

- Get started with authentication in Mobile Services

- Register your apps to use a Microsoft Account login

A mobile service receives a new todo item in JSON format sent by an HTML5/JavaScript site, Windows Phone 8 or Windows Store app via HTTP POST method. The mobile service performs the following actions:

- Authenticates the user against the Microsoft, Facebook, Twitter, Google identity providers using the OAuth open protocol. For more information on this topic, see Troubleshooting authentication issues in Azure Mobile Services by Carlos Figueira.

- Validates the input data.

- Uses the access token issued by the identity provider as a key to query via REST the authentication provider and retrieve the user name. For more information on this topic, see Getting user information on Azure Mobile Services by Carlos Figueira.

- Retrieves the user address from BizTalk Server 2013 via Service Bus Relayed Messaging.

- Saves the new item to the TodoItem table on the Windows Azure SQL Database of the mobile service.

- Reads notification channels from the Channel table on the Windows Azure SQL Database of the mobile service.

- Sends push notifications to Windows Store Apps using the Windows Push Notification Service (WNS).

- Sends push notifications to Windows Phone 8 Apps using the Microsoft Push Notification Service (MPNS).

- Sends a notification to BizTalk Server 2013 via Service Bus Brokered Messaging.

Architecture

The following diagram shows the architecture of the solution.

Message Flow

- The client application (HTML5/JavaScript site, Windows Phone 8 app or Windows Store app) sends authentication credentials to the mobile service.

- The mobile service redirects the user to the page of the selected authentication provider, which validates the credentials (username and password) provided by the user and issues a security token.

- The mobile service returns its access token to the client application. The user sends a new todo item to the mobile service.

- The insert script for the TodoItem table handles the incoming call. The script validates the inbound data then invokes the authentication provider via REST using the request module (getUserName function).

- The script sends a request to the Access Control Service to acquire a security token necessary to be authenticated by the Service Bus Relay Service exposed by BizTalk Server via a WCF-BasicHttpRelay Receive Location. The mobile service uses the OAuth WRAP Protocol to acquire a security token from ACS (getAcsToken function). In particular, the server script sends a request to ACS using the https module. The request contains the following information:

- wrap_name: the name of a service identity within the Access Control namespace of the Service Bus Relay Service (e.g. owner)

- wrap_password: the password of the service identity specified by the wrap_name parameter.

- wrap_scope: this parameter contains the relying party application realm. In our case, it contains the http base address of the Service Bus Relay Service (e.g. http://paolosalvatori.servicebus.windows.net/)

- ACS issues and returns a security token. For more information on the OAuth WRAP Protocol, see How to: Request a Token from ACS via the OAuth WRAP Protocol.

- The insert script calls the getUserAddress function that performs the following actions:

- Extracts the wrap_access_token from the security token issued by ACS.

- Creates a SOAP envelope to invoke the Service Bus Relay Service. In particular, the Header contains a RelayAccessToken element which in turn contains the wrap_access_token returned by ACS in base64 format. The Body contains the payload for the call.

- Uses the request module to send the SOAP envelope to the Service Bus Relay Service. The Service Bus Relay Service validates and removes the security token, then forwards the request to BizTalk Server that processes the request and returns a response message containing the user address. See below for more details on this use case.

- The insert script calls the insertItem function that inserts the new item in the TodoItem table.

- The insert script retrieves from the Channel table the channel URI of the Windows Phone 8 and Windows Store apps to which to send a push notification (sendPushNotification function)

- The sendPushNotification function sends push notifications.

- The insert script calls the sendMessageToServiceBus function that uses the azure module to send a notification to BizTalk Server via a Windows Azure Service Bus queue.
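The token acquisition step in the flow above can be sketched as follows. This is not the sample's getAcsToken function: the helper names are hypothetical, the network call is left out, and the sketch only shows how the three wrap_ parameters could be form-encoded for the request body and how wrap_access_token could be pulled out of a form-encoded response. The response format is an assumption based on the OAuth WRAP profile.

```javascript
var querystring = require('querystring');

// Hypothetical helper: form-encode the three parameters listed above.
function buildWrapRequestBody(wrapName, wrapPassword, wrapScope) {
  return querystring.stringify({
    wrap_name: wrapName,
    wrap_password: wrapPassword,
    wrap_scope: wrapScope
  });
}

// Hypothetical helper: read wrap_access_token from a form-encoded response.
// Assumption: the response is a single urlencoded line of key=value pairs.
function extractWrapAccessToken(responseBody) {
  var fields = querystring.parse(responseBody);
  return fields.wrap_access_token || null;
}

// Placeholder credentials; the scope is the example address from the post.
var body = buildWrapRequestBody(
  'owner',
  'service-identity-password',
  'http://paolosalvatori.servicebus.windows.net/'
);
console.log(body);
```

In a server script the resulting body would be sent with the https module, as the post describes, and the returned token passed on to the function that calls the relay.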

Call BizTalk Server via Service Bus Relayed Messaging

The following diagram shows how BizTalk Server is configured to receive and process request messages sent by a mobile service via Service Bus Relay Service using a two-way request-reply message exchange pattern.

Message Flow

- The client application sends a new item to the mobile service.

- The insert script sends a request to the Access Control Service to acquire a security token necessary to be authenticated by the Service Bus Relay Service exposed by BizTalk Server via a WCF-BasicHttpRelay Receive Location. The mobile service uses the OAuth WRAP Protocol to acquire a security token from ACS (getAcsToken function). In particular, the server script sends a request to ACS using the https module. The request contains the following information:

- wrap_name: the name of a service identity within the Access Control namespace of the Service Bus Relay Service (e.g. owner)

- wrap_password: the password of the service identity specified by the wrap_name parameter.

- wrap_scope: this parameter contains the relying party application realm. In our case, it contains the http base address of the Service Bus Relay Service (e.g. http://paolosalvatori.servicebus.windows.net/).

- ACS issues and returns a security token. For more information on the OAuth WRAP Protocol, see How to: Request a Token from ACS via the OAuth WRAP Protocol.

- The insert script calls the getUserAddress function that performs the following actions:

- Extracts the wrap_access_token from the security token issued by ACS.

- Creates a SOAP envelope to invoke the Service Bus Relay Service. In particular, the Header contains a RelayAccessToken element which in turn contains the wrap_access_token returned by ACS in base64 format. The Body contains the payload for the call.

- Uses the request module to send the SOAP envelope to the Service Bus Relay Service. The Service Bus Relay Service validates and removes the security token, then forwards the request to BizTalk Server, which processes the request and returns a response message containing the user address. See below for more details on this use case.

- The Service Bus Relay Service validates and removes the security token, then forwards the request to one of the WCF-BasicHttpRelay Receive Locations exposed by BizTalk Server.

- The WCF-BasicHttpRelay Receive Location publishes the request message to the BizTalkServerMsgBoxDb.

- The message triggers the execution of a new instance of the GetUserAddress orchestration.

- The orchestration uses the user id contained in the request message to retrieve the user's address. For demo purposes, the orchestration generates a random address. The orchestration writes the response message to the BizTalkServerMsgBoxDb.

- The WCF-BasicHttpRelay Receive Location retrieves the message from the BizTalkServerMsgBoxDb.

- The receive location sends the response message back to the Service Bus Relay Service.

- The Service Bus Relay Service forwards the message to the mobile service.

- The mobile service saves the new item in the TodoItem table and sends the enriched item back to the client application.
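The token-acquisition steps in the flow above can be sketched as follows. The original sample does this in a Node.js server script (the getAcsToken function); this is a Python sketch of the same WRAP exchange with the network call left out. The function names are hypothetical, and the ACS endpoint shape (`https://<namespace>-sb.accesscontrol.windows.net/WRAPv0.9/`) is an assumption based on the usual Service Bus ACS naming convention, not something stated in the post.

```python
import base64
from urllib.parse import urlencode, parse_qs


def build_wrap_request(namespace, issuer_name, issuer_secret):
    """Return the (url, form_body) pair for an OAuth WRAP token request.

    The URL pattern is an assumption; the three wrap_* fields are the ones
    listed in the message flow above.
    """
    url = f"https://{namespace}-sb.accesscontrol.windows.net/WRAPv0.9/"
    body = urlencode({
        "wrap_name": issuer_name,          # service identity, e.g. "owner"
        "wrap_password": issuer_secret,    # password of that identity
        "wrap_scope": f"http://{namespace}.servicebus.windows.net/",
    })
    return url, body


def extract_access_token(response_body):
    """Pull wrap_access_token out of the form-encoded ACS response."""
    fields = parse_qs(response_body)
    return fields["wrap_access_token"][0]


def to_base64(token):
    """Base64-encode the token for use inside the RelayAccessToken header."""
    return base64.b64encode(token.encode("utf-8")).decode("ascii")
```

The body returned by `build_wrap_request` would be sent as an `application/x-www-form-urlencoded` POST over HTTPS; the base64 value is what the SOAP Header's RelayAccessToken element would carry.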

Call BizTalk Server via Service Bus Brokered Messaging

The following diagram shows how BizTalk Server is configured to receive and process request messages sent by a mobile service via a Service Bus queue using a one-way message exchange pattern.

Message Flow

- The client application sends a new item to the mobile service.

- The insert script calls the sendMessageToServiceBus function that performs the following actions:

- Creates an XML message using the xmlbuilder module.

- Uses the azure module to send the message to a Windows Azure Service Bus queue.

- BizTalk Server 2013 uses a SB-Messaging Receive Location to retrieve the message from the queue.

- The SB-Messaging Receive Location publishes the notification message to the BizTalkServerMsgBoxDb.

- The message triggers the execution of a new instance of the StoreTodoItem orchestration.

- The orchestration processes and transforms the incoming message and publishes a new message to the BizTalkServerMsgBoxDb.

- The message is consumed by a FILE Send Port.

- The FILE Send Port writes the message to the Out folder.
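The first step of the brokered flow, building the XML notification, can be sketched as below. The sample itself uses the Node.js xmlbuilder module; this Python version only illustrates the idea. The element names (`todoItem`, `userId`, `text`, `complete`) and the function name are hypothetical and do not come from the sample's schemas.

```python
import xml.etree.ElementTree as ET


def build_todo_item_xml(user_id, text, complete=False):
    """Serialize a todo item as a small XML document (hypothetical schema)."""
    root = ET.Element("todoItem")
    ET.SubElement(root, "userId").text = user_id
    ET.SubElement(root, "text").text = text
    ET.SubElement(root, "complete").text = "true" if complete else "false"
    # ElementTree escapes reserved characters such as < and & in text nodes.
    return ET.tostring(root, encoding="unicode")
```

The resulting string would be the message body sent to the Service Bus queue and later picked up by the SB-Messaging Receive Location.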

Prerequisites

- Visual Studio 2012 Express for Windows 8

- Windows Azure Mobile Services SDK for Windows 8

- and a Windows Azure account (get the Free Trial)

Building the Sample

Proceed as follows to set up the solution.

Create the Todo Mobile Service

Follow the steps in the tutorial to create the Todo mobile service.

- Log into the Management Portal.

At the bottom of the navigation pane, click +NEW.

Expand Mobile Service, then click Create.

This displays the New Mobile Service dialog.

In the Create a mobile service page, type a subdomain name for the new mobile service in the URL textbox and wait for name verification. Once name verification completes, click the right arrow button to go to the next page.

This displays the Specify database settings page.

Note: As part of this tutorial, you create a new SQL Database instance and server. You can reuse this new database and administer it as you would any other SQL Database instance. If you already have a database in the same region as the new mobile service, you can instead choose Use existing Database and then select that database. The use of a database in a different region is not recommended because of additional bandwidth costs and higher latencies.

In Name, type the name of the new database, then type Login name, which is the administrator login name for the new SQL Database server, type and confirm the password, and click the check button to complete the process.

Configure the application to authenticate users

This solution requires the user to be authenticated by an identity provider. Follow the instructions contained in the links below to configure the Mobile Service to authenticate users against one or more identity providers and follow the steps to register your app with that provider:

For more information, see:

- Get started with authentication in Mobile Services

- Register your apps to use a Microsoft Account login.

For your convenience, here are the steps to configure the application to authenticate users using a Microsoft Account login.

Log on to the Windows Azure Management Portal, click Mobile Services, and then click your mobile service.

Click the Dashboard tab and make a note of the Site URL value.

Navigate to the My Applications page in the Live Connect Developer Center, and log on with your Microsoft account, if required.

Click Create application, then type an Application name and click I accept.

This registers the application with Live Connect.

Click Application settings page, then API Settings and make a note of the values of the Client ID and Client secret.

Security Note

The client secret is an important security credential. Do not share the client secret with anyone or distribute it with your app.

In Redirect domain, enter the URL of your mobile service, and then click Save.

Back in the Management Portal, click the Identity tab, enter the Client ID and Client secret obtained in the previous step in the Microsoft account settings, and click Save.

Restrict permissions to authenticated users

In the Management Portal, click the Data tab, and then click the TodoItem table.

Click the Permissions tab, set all permissions to Only authenticated users, and then click Save. This will ensure that all operations against the TodoItem table require an authenticated user. This also simplifies the scripts in the next tutorial because they will not have to allow for the possibility of anonymous users.

Define server side scripts

Server scripts are registered in a mobile service and can be used to perform a wide range of operations on data being inserted and updated, including validation and data modification. In this sample, they are used to validate data, retrieve data from identity providers, send push notifications and communicate with BizTalk Server via Windows Azure Service Bus. For more information on server scripts, see the following resources:

- Windows Azure Mobile Services Server Scripts

- Server script example how tos

- Mobile Services server script reference

To use Windows Azure Service Bus, you need to use the Node.js azure package in server scripts. This package includes a set of convenience libraries that communicate with the storage REST services. For more information on the Node.js azure package, see the following resources:

Follow these steps to create server scripts:

- In the Management Portal, click the Data tab, and then click the TodoItem table.

- Click the Script tab and select the insert, update, read or del script from the drop-down list.

- Modify the code of the selected script to add your business logic to the function. …

Paolo continues with source code for the server scripts.

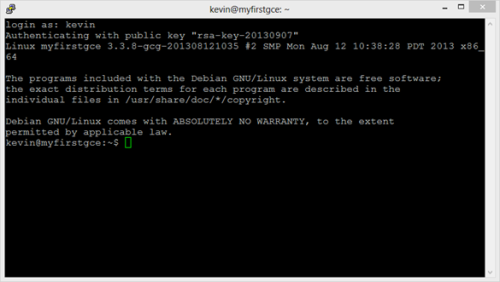

Configure Git Source Control

The source control support provides a Git repository as part of your mobile service, and it includes all of your existing Mobile Service scripts and permissions. You can clone that Git repository on your local machine, make changes to any of your scripts, and then easily deploy the mobile service to production using Git. This enables a really great developer workflow that works on any developer machine (Windows, Mac and Linux). To configure Git source control, proceed as follows:

- Navigate to the dashboard for your mobile service and select the Set up source control link:

- If this is your first time enabling Git within Windows Azure, you will be prompted to enter the credentials you want to use to access the repository:

- Once you configure this, you can switch to the CONFIGURE tab of your Mobile Service and you will see a Git URL you can use to access your repository:

You can use the GIT URL to clone the repository locally using Git from the command line:

cd C:\
mkdir Git
cd Git
git clone https://todolist.scm.azure-mobile.net/todolist.git

You can make changes to the code of server scripts and then upload changes to your mobile service using the following script.

git add -A .
git commit -m "Modified calculator server script"
git push

Visual Studio Solution

The Visual Studio solution includes the following projects:

BusinessLogic: contains helper classes used by BizTalk Server orchestrations.

Orchestrations: contains two orchestrations:

- GetUserAddress

- StoreTodoItem

Schemas: contains XML schemas for the messages exchanged by BizTalk Server with the Mobile Service via Windows Azure Service Bus.

Maps: contains the maps used by the BizTalk Server application.

HTML5: contains the HTML5/JavaScript client for the mobile service.

WindowsPhone8: contains the Windows Phone 8 app that can be used to test the mobile service.

WindowsStoreApp: contains the Windows Store app that can be used to test the mobile service.

NOTE: the WindowsStoreApp project uses the Windows Azure Mobile Services NuGet package. To reduce the size of the zip file, I deleted some of the assemblies from the packages folder. To repair the solution, make sure to right click the solution and select Enable NuGet Package Restore as shown in the picture below. For more information on this topic, see the following post.

BizTalk Server Application

Proceed as follows to create the TodoItem BizTalk Server application:

- Open the solution in Visual Studio 2012 and deploy the Schemas, Maps and Orchestration to create the TodoItem application.

- Open the Binding.xml file in the Setup folder and replace the [YOUR-SERVICE-BUS-NAMESPACE] placeholder with the name of your Windows Azure Service Bus namespace.

- Open the BizTalk Server Administration Console and import the binding file to create Receive Ports, Receive Locations and Send Ports.

- Open the WCF-BasicHttpRelay Receive Location, click the Configure button:

- Click the Edit button in the Access Control Service section under the Security tab.

- Define the ACS STS uri, Issuer Name and Issuer Secret:

- Open the SB-Messaging Receive Location, click the Configure button:

- Define the ACS STS uri, Issuer Name and Issuer Secret under the Authentication tab:

- Open the FILE Send Port and click the Configure button:

- Enter the path of the Destination folder where notification messages sent by the Mobile Service via Service Bus are stored:

HTML5/JavaScript Client

The following figure shows the HTML5/JavaScript application that you can use to test the mobile service.

Paolo continues with source code.

Conclusions

Mobile services can easily be extended to take advantage of the services provided by Windows Azure. This sample demonstrates how to integrate Windows Azure Mobile Services with line-of-business applications, running on-premises or in the cloud, via BizTalk Server 2013, Service Bus Brokered Messaging and Service Bus Relayed Messaging. The Access Control Service is used to authenticate Windows Azure Mobile Services against the Windows Azure Service Bus. In this scenario, BizTalk Server 2013 can run on-premises or in a Virtual Machine on Windows Azure. See also the following articles on Windows Azure Mobile Services:

Clemens Vasters (@clemensv) posted Blocking outbound IP addresses. Again. No. on 9/28/2013:

Just replied yet again to someone whose customer thinks they're adding security by blocking outbound network traffic to cloud services using IP-based allow-lists. They don't.

Service Bus and many other cloud services are multitenant systems that are shared across a range of customers. The IP addresses we assign come from a pool and that pool shifts as we optimize traffic from and to datacenters. We may also move clusters between datacenters within one region for disaster recovery, should that be necessary. The reason why we cannot give every feature slice an IP address is also that the world has none left. We’re out of IPv4 address space, which means we must pool workloads.

The last points are important ones and also show how antiquated the IP-address lockdown model is relative to current practices for datacenter operations. Because of the IPv4 shortage, pools get acquired and traded and change. Because of automated and semi-automated disaster recovery mechanisms, we can provide service continuity even if clusters or datacenter segments or even datacenters fail, but a client system that’s locked to a single IP address will not be able to benefit from that. As the cloud system packs up and moves to a different place, the client stands in the dark due to its firewall rules. The same applies to rolling updates, which we perform using DNS switches.

The state of the art of no-downtime datacenter operations is that workloads are agile and will move as required. The place where you have stability is DNS.

Outbound Internet IP lockdowns add nothing in terms of security because workloads increasingly move into multitenant systems or systems that are dynamically managed as I’ve illustrated above. As there is no warning, the rule may be correct right now and pointing to a foreign system the next moment. The firewall will not be able to tell. The only proper way to ensure security is by making the remote system prove that it is the system you want to talk to and that happens at the transport security layer. If the system can present the expected certificate during the handshake, the traffic is legitimate. The IP address per-se proves nothing. Also, IP addresses can be spoofed and malicious routers can redirect the traffic. The firewall won’t be able to tell.

With most cloud-based services, traffic runs via TLS. You can verify the thumbprint of the certificate against the cert you can either set yourself, or obtain from the vendor out-of-band, or acquire by hitting a documented endpoint (in Windows Azure Service Bus, it's the root of each namespace). With our messaging system in Service Bus, you are furthermore encouraged to use any kind of cryptographic mechanism to protect payloads (message bodies). We do not evaluate those for any purpose. We evaluate headers and message properties for routing. Neither of those are logged beyond having them in the system for temporary storage in the broker.
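A minimal sketch of that thumbprint check, using only Python's standard library, might look like this. The function names are hypothetical, and the SHA-1 thumbprint format is an assumption (it is the form Windows certificate tools usually display); the pinned value would come from the vendor or from your own configuration.

```python
import hashlib
import hmac
import socket
import ssl


def fetch_der_certificate(host, port=443, timeout=10.0):
    """Open a TLS connection and return the server certificate in DER form."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert(binary_form=True)


def thumbprint(der_bytes):
    """Return the upper-case hex SHA-1 digest of a DER-encoded certificate."""
    return hashlib.sha1(der_bytes).hexdigest().upper()


def matches_pinned(der_bytes, pinned):
    """Compare a certificate's thumbprint with a pinned value.

    Colons and spaces in the pinned value are ignored so that the usual
    display formats can be pasted in directly.
    """
    normalized = pinned.replace(":", "").replace(" ", "").upper()
    return hmac.compare_digest(thumbprint(der_bytes), normalized)
```

A caller would pass the bytes from `fetch_der_certificate("<your-namespace>.servicebus.windows.net")` to `matches_pinned` and refuse to proceed on a mismatch, independent of which IP address DNS resolved to.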

The server having access to Service Bus should have outbound Internet access based on the server’s identity or the running process’s identity. This can be achieved using IPSec between the edge and the internal system. Constraining it to the Microsoft DC ranges is possible, but those ranges shift and expand without warning.

The bottom line here is that there is no way to make outbound IP address constraints work with cloud systems or high availability systems in general.

<Return to section navigation list>

Windows Azure Access Control, Active Directory, Identity and Workflow

• Steven Martin (@stevemar_msft) posted Announcing General Availability of Windows Azure Multi-Factor Authentication to the Windows Azure Team blog on 9/26/2013:

Identity and access management is an anchor for security and top of mind for enterprise IT departments. It is key to extending anytime, anywhere access to employees, partners, and customers. Today, we are pleased to announce the General Availability of Windows Azure Multi-Factor Authentication - delivering increased access security and convenience for IT and end users.

Multi-Factor Authentication quickly enables an additional layer of security for users signing in from around the globe. In addition to a username and password, users may authenticate via:

- An application on their mobile device.

- Automated voice call.

- Text message with a passcode.

It’s easy and meets user demand for a simple sign-in experience.

Windows Azure Multi-Factor Authentication can be configured in minutes for the many applications that require additional security, including:

- On-Premises VPNs, Web Applications, and More -- Run the Multi-Factor Authentication Server on your existing hardware or in a Windows Azure Virtual Machine. Synchronize with your Windows Server Active Directory for automated user set up.

- Cloud Applications like Windows Azure, Office 365, and Dynamics CRM -- Enable Multi-Factor Authentication for Windows Azure AD identities with the flip of a switch, and users will be prompted to set up multi-factor the next time they sign-in.

- Custom Applications -- Use our SDK to build Multi-Factor Authentication phone call and text message authentication into your application’s sign-in or transaction processes.

Windows Azure Multi-Factor Authentication offers two pricing options: $2 per user per month or $2 for 10 authentications. Visit the pricing page to learn more.
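As a rough way to compare the two options, the arithmetic can be sketched as below. It assumes the per-authentication option is charged pro rata at $2 per 10 authentications (the post does not say whether usage is billed in whole blocks of 10), and the function names are hypothetical.

```python
PER_USER_MONTHLY = 2.00   # dollars per enabled user per month (from the post)
PER_TEN_AUTHS = 2.00      # dollars per 10 authentications (from the post)


def per_user_cost(users):
    """Monthly cost under per-user pricing."""
    return users * PER_USER_MONTHLY


def per_auth_cost(authentications):
    """Monthly cost under per-authentication pricing (pro rata assumption)."""
    return authentications / 10 * PER_TEN_AUTHS


def cheaper_option(users, auths_per_user):
    """Name the cheaper option for a given number of users and usage level."""
    by_user = per_user_cost(users)
    by_auth = per_auth_cost(users * auths_per_user)
    if by_user == by_auth:
        return "equal"
    return "per-user" if by_user < by_auth else "per-authentication"
```

Under these assumptions the break-even point is 10 authentications per user per month: below that, per-authentication pricing costs less; above it, per-user pricing does.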

For details on enabling Windows Azure Multi-Factor Authentication, please visit Scott Guthrie’s blog and check out this video. To get started with the new Multi-Factor Authentication service, visit the Windows Azure Management Portal and let us know what you think at @WindowsAzure!

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

• Scott Guthrie (@scottgu) reported release of Windows Azure: New Virtual Machine, Active Directory, Multi-Factor Auth, Storage, Web Site and Spending Limit Improvements in a 9/26/2013 post:

This week we released some great updates to Windows Azure. These new capabilities include:

- Compute: New 2-CPU Core 14 GB RAM instance option

- Virtual Machines: Support for Oracle Software Images, Management Operations on Stopped VMs

- Active Directory: Richer Directory Management and General Availability of Multi-Factor Authentication Support

- Spending Limit: Reset your Spending Limit, Virtual Machines are no longer deleted if it is hit

- Storage: New Storage Client Library 2.1 Released

- Web Sites: IP and Domain Restriction Now Supported

All of these improvements are now available to use immediately. Below are more details about them.

Compute: New 2-CPU Core 14 GB RAM instance

This week we released a new memory-intensive instance for Windows Azure. This new instance, called A5, has two CPU cores and 14 gigabytes (GB) of RAM and can be used with Virtual Machines (both Windows and Linux) and Cloud Services:

You can begin using this new A5 compute option immediately. Additional information on pricing can be found in the Cloud Services and Virtual Machines sections of our pricing details pages on the Windows Azure website.

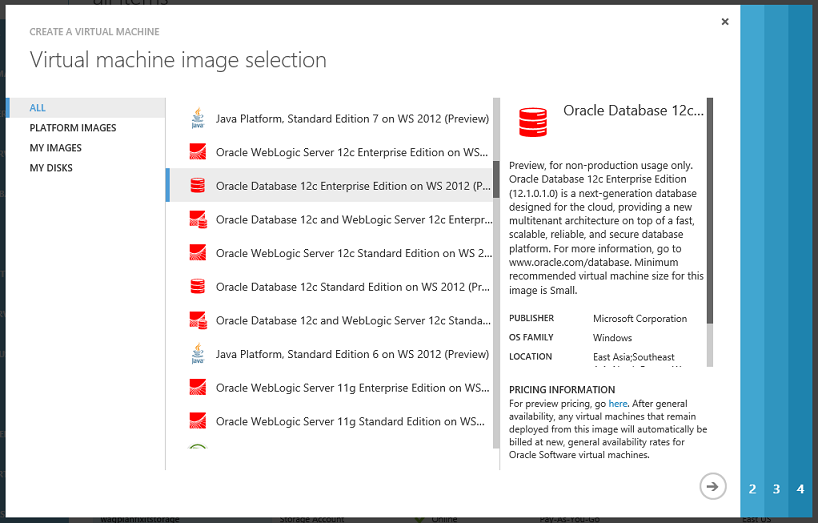

Virtual Machines: Support for Oracle Software Images

Earlier this summer we announced a strategic partnership between Microsoft and Oracle, and that we would enable support for running Oracle software in Windows Azure Virtual Machines.

Starting today, you can now deploy pre-configured virtual machine images running various combinations of Oracle Database, Oracle WebLogic Server, and Java Platform SE on Windows, with licenses for the Oracle software included. These ready-to-deploy Oracle software images enable rapid provisioning of cost-effective cloud environments for development, testing, deployment, and easy scaling of enterprise applications. The images can now be easily selected in the standard “Create Virtual Machine” wizard within the Windows Azure Management Portal:

During preview, these images are offered for no additional charge on top of the standard Windows Server VM rate. After the preview period ends, these Oracle images will be billed based on the total number of minutes the VMs run in a month. With Oracle license mobility, existing Oracle customers that are already licensed on Oracle software also have the flexibility to deploy them on Windows Azure.

To learn more about Oracle on Windows Azure, visit windowsazure.com/oracle and read the technical walk-through documentation for the Oracle Images.

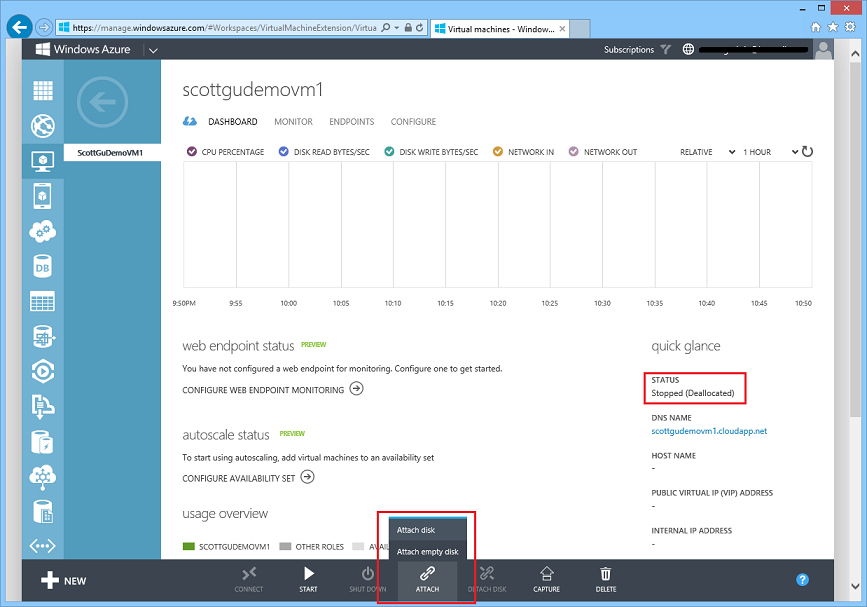

Virtual Machines: Management Operations on Stopped VMs

Starting with this week’s release, it is now possible to perform management operations on stopped/de-allocated Virtual Machines. Previously a VM had to be running in order to do operations like change the VM size, attach and detach disks, configure endpoints and load balancer/availability settings. Now it is possible to do all of these on stopped VMs without having to boot them:

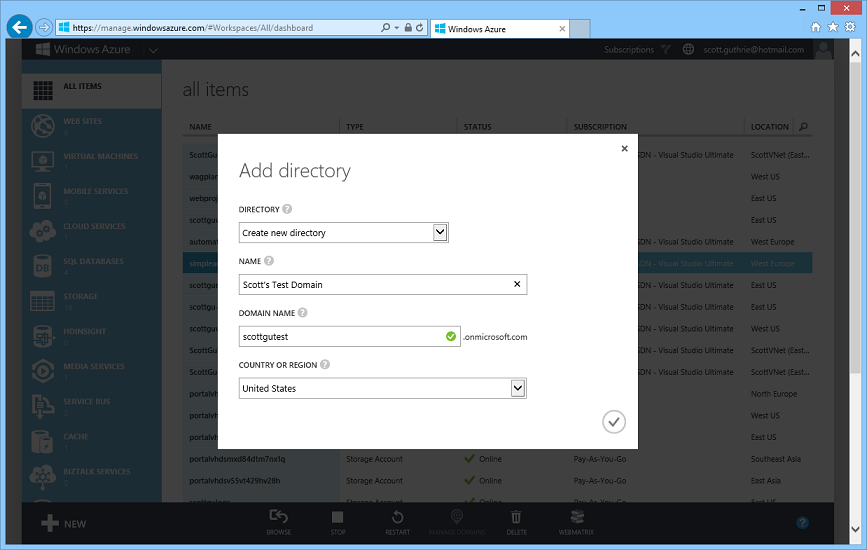

Active Directory: Create and Manage Multiple Active Directories

Starting with this week’s release it is now possible to create and manage multiple Windows Azure Active Directories in a single Windows Azure subscription (previously only one directory was supported and once created you couldn’t delete it). This is useful both for development/test scenarios as well as for cases where you want to have separate directory tenants or synchronize with different on-premises domains or forests.

Creating a New Active Directory

Creating a new Active Directory is now really easy. Simply select New->Application Services->Active Directory->Directory within the management portal:

When prompted configure the directory name, default domain name (you can later change this to any custom domain you want – e.g. yourcompanyname.com), and the country or region to use:

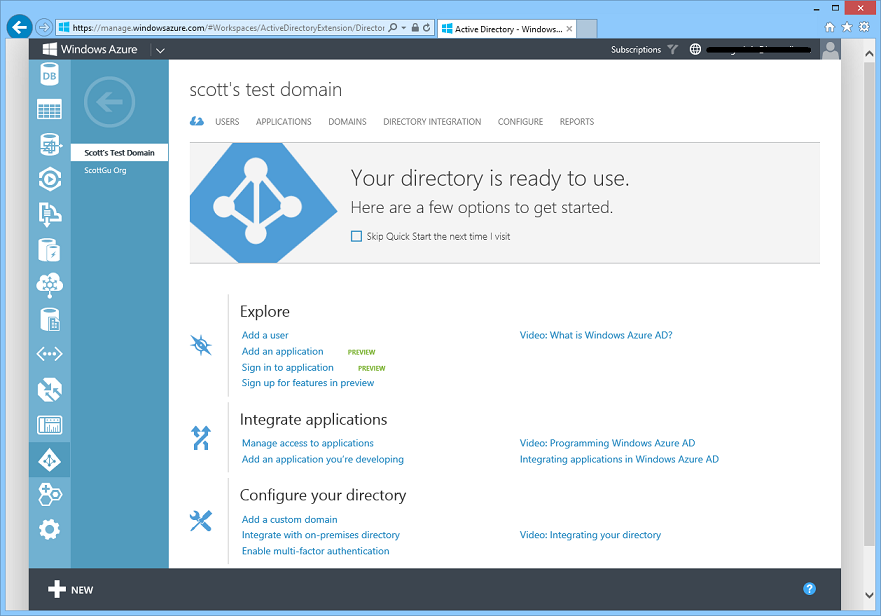

In a few seconds you’ll have a new Active Directory hosted within Windows Azure that is ready to use for free:

You can run and manage your Windows Azure Active Directories entirely in the cloud, or alternatively sync them with an on-premises Active Directory deployment - which allows you to automatically synchronize all of your on-premises users into your Active Directory in the cloud. This latter option is very powerful, and ensures that any time you add or remove a user in your on-premises directory it is automatically reflected in the cloud as well.

You can use your Windows Azure Active Directory to manage identity access to custom applications you run and host in the cloud (and there is new support within ASP.NET in the VS 2013 release that makes building these SSO apps on Windows Azure really easy). You can also use Windows Azure Active Directory to securely manage the identity access of cloud based applications like Office 365, SalesForce.com, and other popular SaaS solutions.

Additional New Features

In addition to enabling the ability to create multiple directories in a single Windows Azure subscription, this week’s release also includes several additional usability enhancements to the Windows Azure Active Directory management experience:

- With this week’s release, we have added the ability to change the name of a directory after it’s created (previously it was fixed at creation time).

- As an administrator of a directory, you can now add users from another directory of which you’re a member. This is useful, for example, in the scenario where there are other members of your production directory who will need to collaborate on an application that is under development or testing in a non-production environment. A user can be a member of up to 20 directories.

- If you use a Microsoft account to access Windows Azure, and you use a different organizational account to manage another directory, you may find it convenient to manage that second directory with your Microsoft account. With this release, we’ve made it easier to configure a Microsoft account to manage an existing Active Directory. Now you can configure this even if the Microsoft account already manages a directory, and even if the administrator account for the other directory doesn’t have a subscription to Windows Azure. This is a common scenario when the administrator account for the other directory was created during signup for Office 365 or another Microsoft service.

- In this release, we’ve also added support to enable developers to delete single tenant applications that they’ve added to their Windows Azure AD. To delete an application, open the directory in which the application was added, click on the Applications tab, and click Delete on the command bar. An application can be deleted only when External Access is set to ‘Off’ on the configure tab.

As always, if there are aspects of these new Azure AD experiences that you think are great, or things that drive you crazy, let us know by posting in our forum on TechNet.

Active Directory: General Availability of Multi-Factor Authentication Service

With this week’s release we are excited to ship the general availability release of a great new service: the Windows Azure Multi-Factor Authentication (MFA) Service. Windows Azure Multi-Factor Authentication is a managed service that makes it easy to securely manage user access to Windows Azure, Office 365, Intune, Dynamics CRM and any third party cloud service that supports Windows Azure Active Directory. You can also use it to securely control access to your own custom applications that you develop and host within the cloud.

Windows Azure Multi-Factor Authentication can also be used with on-premises scenarios. You can optionally download our new Multi-Factor Authentication Server for Windows Server Active Directory and use it to protect on-premises applications as well.

Getting Started

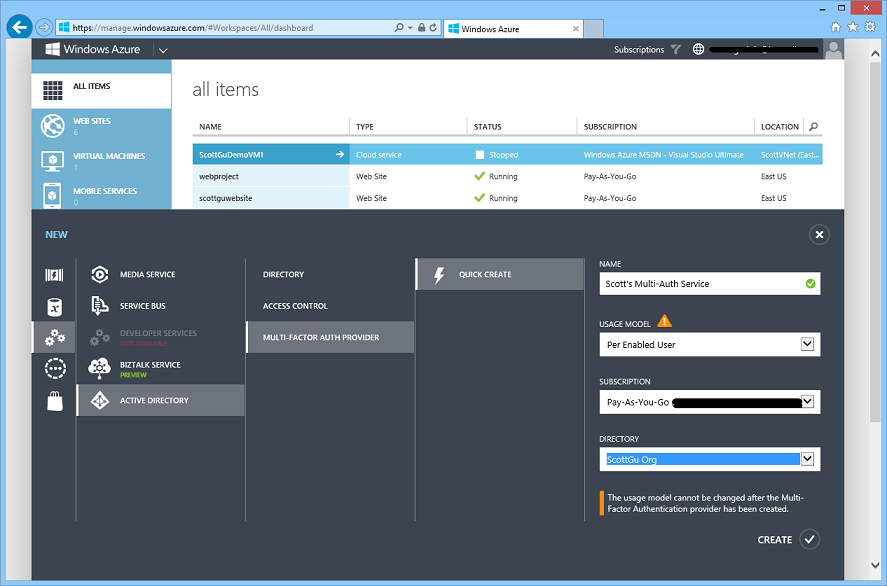

To enable multi-factor authentication, sign-in to the Windows Azure Management Portal and select New->Application Services->Active Directory->Multi-Factor Auth Provider and choose the “Quick Create” option. When you create the service you can point it at your Windows Azure Active Directory and choose from one of two billing models (per user pricing, or per authentication pricing):

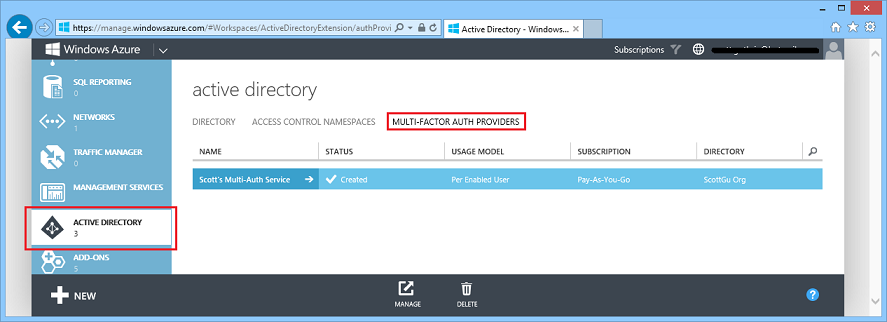

Once created the Windows Azure Multi-Factor Authentication service will show up within the “Multi-Factor Auth Providers” section of the Active Directory extension:

You can then manage which users in your directory have multi-factor authentication enabled by drilling into the “Users” tab of your Active Directory and then clicking the “Manage Multi-Factor Auth” button:

Once multi-factor authentication is enabled for a user within your directory they will be able to use a variety of secondary authentication techniques including verification via a mobile app, phone call, or text message to provide additional verification when they login to an app or service. The management and tracking of this is handled automatically for you by the Windows Azure Multi-Factor Authentication Service.

Learn More

You can learn more about today’s release from this 6 minute video on Windows Azure Multi-Factor Authentication.

Here are some additional videos and tutorials to learn even more:

- Turn Multi-Factor Authentication on for Windows Azure Active Directory and Office365 – view video

- Add Multi-Factor Authentication to on-premises applications – view video

- Build Multi-Factor Authentication into your applications – view video

- Alex & Steve’s blog post Building Multi-Factor Authentication to Your Applications using the SDK

Start making your applications and systems more secure with multi-factor authentication today! And give us your feedback and feature requests via the MFA forum.

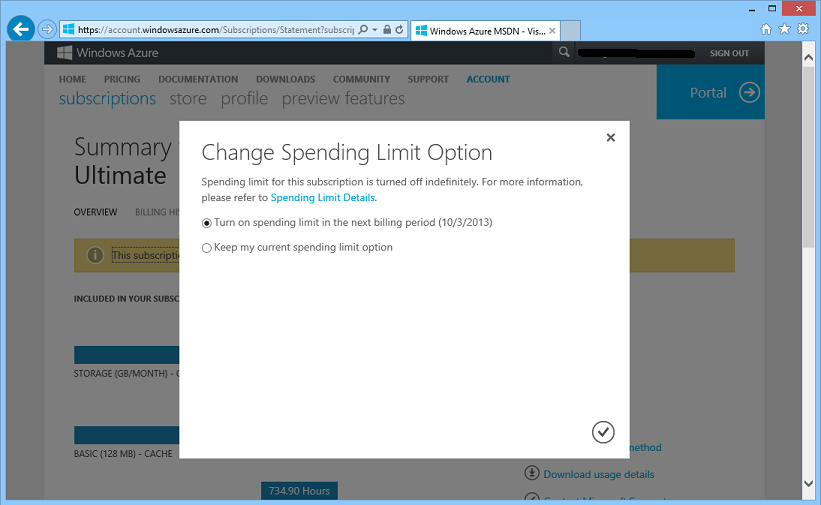

Billing: Reset your Spending Limit on MSDN subscriptions

When you sign-up for Windows Azure as a MSDN customer you automatically get a MSDN subscription created for you that enables deeply discounted prices and free “MSDN credits” (up to $150 each month) that you can spend on any resources within Windows Azure. I blogged some details about this last week.

By default MSDN subscriptions in Windows Azure are created with what is called a “Spending Limit” which ensures that if you ever use up all of the MSDN credits you still don’t get billed – as the subscription will automatically suspend when all of the free credits are gone (ensuring your bill is never more than $0).

You can optionally remove the spending limit if you want to use more than the free credits and pay any overage on top of them. Prior to this week, though, once the spending limit was removed there was no way to re-instate it for the next billing cycle.

Starting with this week’s release you can now:

- Remove the spending limit only for the current billing cycle (ideal if you know that it is a one time spike)

- Remove the spending limit indefinitely if you expect to continue to have higher usage in future

- Reset/Turn back on the spending limit from the next billing cycle forward in case you’ve already turned it off

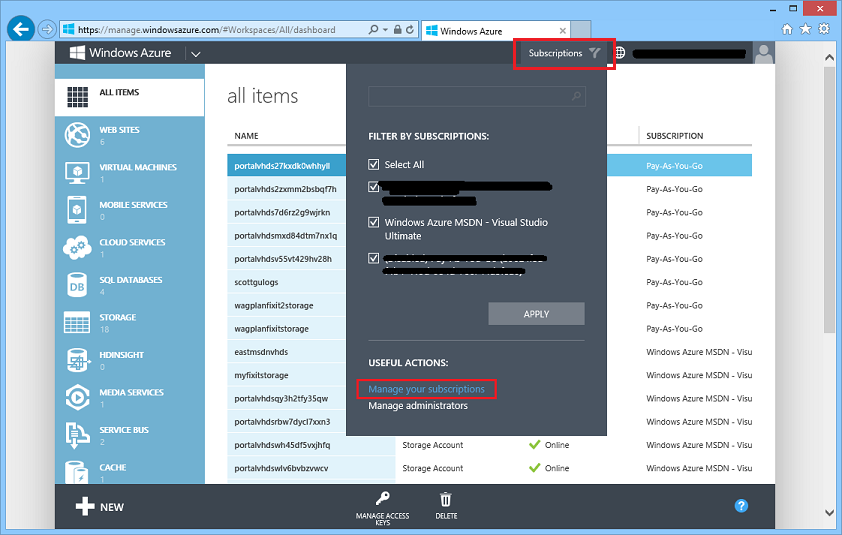

To enable or reset your spending limit, click the “Subscription” button in the top of the Windows Azure Management Portal and then click the “Manage your subscriptions” link within it:

This will take you to the Windows Azure subscription management page (which lists all of the Windows Azure subscriptions you have active). Click your MSDN subscription to see details on your account – including usage data on how much services you’ve used on it:

Above you can see usage data on my personal MSDN subscription. I’ve done a lot of talks recently and have used up my free $150 credits for the month and have $23.64 in overages. I was able to go above $0 on the subscription because I’ve turned off my spending limit (this is indicated in the text I’ve highlighted in red above).

If I want to reapply the spending limit for the next billing cycle (which starts on October 3rd) I can now do so by clicking the “Click here to change the spending limit option” link. This will bring up a dialog that makes it really easy for me to re-activate the spending limit starting the next billing cycle:

We hope this new flexibility to turn the spending limit on and off enables you to use your MSDN benefits even more, and provides you with confidence that you won’t inadvertently do something that causes you to have to pay for something you weren’t expecting to.

Billing: Subscription suspension no longer deletes Virtual Machines

In addition to supporting the re-enablement of the spending limit, we also made an improvement this week so that if your MSDN (or BizSpark or Free trial) subscription does trigger the spending limit we no longer delete the Virtual Machines you have running.

Previously, Virtual Machines deployed in suspended subscriptions would be deleted when the spending limit was passed (the data drives would be preserved – but the VM instances themselves would be deleted). Now when a subscription is disabled, VMs deployed inside it will simply move into the stopped de-provision state we recently introduced (which allows a VM to stop without incurring any billing).

This allows the Virtual Machines to be quickly restarted with all the previously attached disks and endpoints when a fresh monetary credit is applied or the subscription is converted into a paid subscription. As a result, customers don’t have to worry about losing their Virtual Machines when spending limits are reached, and they can quickly return back to business by re-starting their VMs immediately.

Storage: New .NET Storage Client Library 2.1 Release

Earlier this month we released a major update of our Windows Azure Storage Client Library for .NET. The new 2.1 release includes a ton of awesome new features and capabilities:

- Improved Performance

- Async Task<T> support

- IQueryable<T> Support for Tables

- Buffer Pooling Support

- .NET Tracing Integration

- Blob Stream Improvements

- And a lot more…

Read this detailed blog post about the Storage Client Library 2.1 Release from the Windows Azure Storage Team to learn more. You can install the Storage Client Library 2.1 release and start using it immediately using NuGet.

Web Sites: IP and Domain Restriction Now Supported

This month we have also enabled the IP and Domain Restrictions feature of IIS to be used with Windows Azure Web Sites. This provides an additional security option that can also be used in combination with the recently enabled dynamic IP address restriction (DIPR) feature (http://blogs.msdn.com/b/windowsazure/archive/2013/08/27/confirming-dynamic-ip-address-restrictions-in-windows-azure-web-sites.aspx).

Developers can use IP and Domain Restrictions to control the set of IP addresses, and address ranges, that are either allowed or denied access to their websites. With Windows Azure Web Sites developers can enable/disable the feature, as well as customize its behavior, using web.config files located in their website.

There is an overview of the IP and Domain Restrictions feature from IIS available here: http://www.iis.net/configreference/system.webserver/security/ipsecurity. A full description of individual configuration elements and attributes is available here: http://msdn.microsoft.com/en-us/library/ms691353(v=vs.90).aspx

The example configuration snippet below shows an ipSecurity configuration that only allows access from addresses within the range specified by the combination of the ipAddress and subnetMask attributes. Setting allowUnlisted to false means that only those individual addresses, or address ranges, explicitly specified by a developer will be allowed to make HTTP requests to the website. Setting the allowed attribute to true in the child add element indicates that the address and subnet together define an address range that is allowed to access the website.

<system.webServer>
  <security>
    <ipSecurity allowUnlisted="false" denyAction="NotFound">
      <add allowed="true" ipAddress="123.456.0.0" subnetMask="255.255.0.0"/>
    </ipSecurity>
  </security>
</system.webServer>

If a request is made to a website from an address outside of the allowed IP address range, then an HTTP 404 not found error is returned as defined in the denyAction attribute.
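The allow/deny decision described above can be sketched in a few lines of Python. This is only an illustration of the matching logic, not how the IIS module is implemented: the function names, the "first matching rule wins" simplification, and the 10.20.0.0 range are assumptions made for the example.

```python
import ipaddress


def is_allowed(client_ip, rules, allow_unlisted=False):
    """Return True if client_ip may access the site.

    rules is a list of (ip_address, subnet_mask, allowed) tuples that mirror
    the <add> child elements. In this sketch the first matching rule wins.
    """
    addr = ipaddress.ip_address(client_ip)
    for ip, mask, allowed in rules:
        # An address plus a dotted subnet mask defines a range, e.g. a /16.
        network = ipaddress.ip_network(f"{ip}/{mask}", strict=False)
        if addr in network:
            return allowed
    # No rule matched: fall back to the allowUnlisted setting.
    return allow_unlisted


def status_for(client_ip, rules, allow_unlisted=False):
    # denyAction="NotFound" corresponds to answering denied requests with 404.
    return 200 if is_allowed(client_ip, rules, allow_unlisted) else 404


# Hypothetical range for illustration: 10.20.0.0 with mask 255.255.0.0.
RULES = [("10.20.0.0", "255.255.0.0", True)]

print(status_for("10.20.5.7", RULES))  # address inside the allowed range
print(status_for("10.21.0.1", RULES))  # address outside the allowed range
```

With allowUnlisted set to false, any address that matches no rule is denied, which is why the second call falls through to the 404 case.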

One final note: just like the companion DIPR feature, Windows Azure Web Sites ensures that the client IP addresses “seen” by the IP and Domain Restrictions module are the actual IP addresses of Internet clients making HTTP requests.

Summary

Today’s release includes a bunch of great features that enable you to build even better cloud solutions. If you don’t already have a Windows Azure account, you can sign-up for a free trial and start using all of the above features today. Then visit the Windows Azure Developer Center to learn more about how to build apps with it.

• Philip Fu posted [Sample Of Sep 25th] How to control Windows Azure VM with the REST API to the Microsoft All-In-One Code Framework blog on 9/25/2013:

Sample Download : http://code.msdn.microsoft.com/How-to-program-control-838bd90b

Windows Azure PowerShell isn't the only way to operate a Windows Azure IaaS virtual machine. You can also use the Service Management API to achieve the same result.

This sample will use GET/POST/DELETE requests to operate the virtual machine.
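As a rough idea of what such requests look like, here is a minimal Python sketch that builds (but does not send) a POST for a role operation. It is not taken from the sample. The URL layout, the `x-ms-version` value, and the XML body are written from memory of the Service Management REST API and should be treated as assumptions to verify against the official reference. All names in the usage lines are hypothetical, and the management-certificate authentication that a real call needs is omitted.

```python
import urllib.request

BASE = "https://management.core.windows.net"
NS = "http://schemas.microsoft.com/windowsazure"


def role_operation_request(subscription_id, service, deployment, role, operation):
    """Build a POST request for a VM role operation without sending it.

    operation is an operation type name such as "ShutdownRoleOperation"
    (assumed naming; check the API reference before relying on it).
    """
    url = (f"{BASE}/{subscription_id}/services/hostedservices/{service}"
           f"/deployments/{deployment}/roleinstances/{role}/Operations")
    body = (f'<{operation} xmlns="{NS}">'
            f"<OperationType>{operation}</OperationType>"
            f"</{operation}>").encode("utf-8")
    headers = {"x-ms-version": "2013-06-01", "Content-Type": "application/xml"}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Hypothetical identifiers, for illustration only.
req = role_operation_request("my-subscription-id", "my-service", "my-deployment",
                             "vm1", "ShutdownRoleOperation")
print(req.get_method(), req.full_url)
```

Building the request separately from sending it keeps the sketch runnable without credentials. An actual call would attach the client certificate through an `ssl.SSLContext` before opening the request.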

You can find more code samples that demonstrate the most typical programming scenarios by using Microsoft All-In-One Code Framework Sample Browser or Sample Browser Visual Studio extension. They give you the flexibility to search samples, download samples on demand, manage the downloaded samples in a centralized place, and be notified automatically about sample updates. If this is the first time you have heard about Microsoft All-In-One Code Framework, please watch the introduction video on Microsoft Showcase, or read the introduction on our homepage http://1code.codeplex.com/.

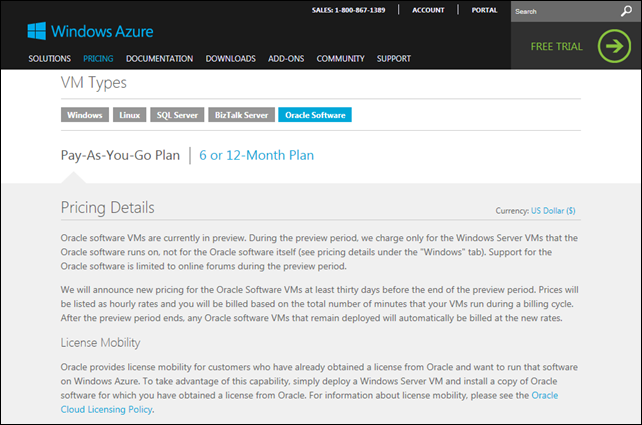

Steven Martin (@stevemar_msft) announced that you can Deploy Pre-configured Oracle VMs on Windows Azure on 9/23/2013:

Building on the recent announcement of a strategic partnership between Microsoft and Oracle, today we are making a number of popular Oracle software configurations available through the Windows Azure image gallery. Effective immediately, customers can deploy pre-configured virtual machine images running various combinations of Oracle Database, Oracle WebLogic Server, and Java Platform SE on Windows Server, with licenses for the Oracle software included. During the preview, these images are offered for no additional charge beyond the regular compute costs. After the preview period ends, Oracle images will be billed based on the total number of minutes VMs run in a month; details on the VM pricing will be announced at a later date.

These ready-to-deploy images enable rapid provisioning of cost-effective cloud environments for development and testing as well as easy scaling of enterprise Oracle applications. With Oracle license mobility, existing customers who are licensed on Oracle software can now deploy on Windows Azure and take advantage of powerful management features, cross-platform tools and automation capabilities.

Additionally, Oracle now offers Oracle Linux, Oracle Linux with Oracle Database, and Oracle Linux with WebLogic Server in the Windows Azure image gallery for customers who are licensed to use these products.

To get started with Oracle on Windows Azure, visit www.windowsazure.com/oracle and the technical walk-through documentation for Oracle Images. Don’t forget to tell us what you think at @WindowsAzure!

The Windows Azure Team posted Pricing Details for Oracle Software VMs on 9/23/2013:

The Windows Azure Technical Support (WATS) Team answered Why did my Azure VM restart? on 9/23/2013:

An unexpected restart of an Azure VM is an issue that commonly results in a customer opening a support incident to determine the cause of the restart. Hopefully the explanation below provides details to help understand why an Azure VM could have been restarted.

Windows Azure updates the host environment approximately once every 2-3 months to keep the environment secure for all applications and virtual machines running on the platform. This update process may result in your VM restarting, causing downtime to your applications/services hosted by the Virtual Machines feature. There is no option or configuration to avoid these host updates. In addition to platform updates, Windows Azure service healing occurs automatically when a problem with a host server is detected and the VMs running on that server are moved to a different host. When this occurs, you lose connectivity to the VM during the service healing process. After the service healing process is completed and you reconnect to the VM, you will likely find an event log entry indicating a VM restart (either graceful or unexpected). Because of this, it is important to configure your VMs to handle these situations in order to avoid downtime for your applications/services.

To ensure high availability of your applications/services hosted in Windows Azure Virtual Machines, we recommend using multiple VMs with availability sets. VMs in the same availability set are placed in different fault domains and update domains so that planned updates, or unexpected failures, will not impact all the VMs in that availability set. For example, if you have two VMs and configure them to be part of an availability set, when a host is being updated, only one VM is brought down at a time. This will provide high availability since you have one VM available to serve the user requests during the host update process. Mark Russinovich has posted a great blog post which explains Windows Azure Host updates in detail. Managing the high availability is detailed here.
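The two-VM example can be illustrated with a small Python sketch. It models update domains only, and it assumes a simple round-robin placement. The function names and VM names are made up for the illustration, and the platform's real placement logic is not described in the post.

```python
def assign_update_domains(vm_names, domain_count):
    """Spread VMs round-robin across update domains (a simplification)."""
    domains = {d: [] for d in range(domain_count)}
    for i, name in enumerate(vm_names):
        domains[i % domain_count].append(name)
    return domains


def available_during_update(domains, updating_domain):
    """Return the VMs still serving requests while one domain is updated."""
    return [vm
            for domain, vms in domains.items()
            if domain != updating_domain
            for vm in vms]


# Two hypothetical VMs in one availability set with two update domains.
domains = assign_update_domains(["web1", "web2"], domain_count=2)
for d in domains:
    print(f"updating domain {d}: available = {available_during_update(domains, d)}")
```

Because the host update walks through one update domain at a time, at least one of the two VMs is always left running in this model. A single VM has no such guarantee.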

While availability sets help provide high availability for your VMs, we recognize that proactive notification of planned maintenance is a much-requested feature, particularly to help prepare in a situation where you have a workload that is running on a single VM and is not configured for high availability. While this type of proactive notification of planned maintenance is not currently provided, we encourage you to provide comments on this topic so we can take the feedback to the product teams.

Cory Fowler (@SyntaxC4) posted Important: Update to Default PHP Runtime on Windows Azure Web Sites on 9/24/2013:

In the coming weeks, Windows Azure Web Sites will update the default PHP version from PHP 5.3 to PHP 5.4. PHP 5.3 will continue to be available as a non-default option. Customers who have not explicitly selected a PHP version for their site and wish the site to continue to run using PHP 5.3 can select this version at any time from the Windows Azure Management Portal, Windows Azure Cross Platform Command Line Tools, or Windows Azure PowerShell Cmdlets. The Windows Azure Web Sites team will also start onboarding PHP 5.5 as an option in the near future.

Explicitly Selecting a PHP version in Windows Azure Web Sites

If you wish to continue to run PHP 5.3 in your Windows Azure Web Site, follow one of the options below to explicitly set the PHP runtime of your site.

Selecting the PHP version from the Windows Azure Management Portal

After logging into the Windows Azure Management Portal, click on the Web Sites navigation item from the left hand menu.

Select the Web Site you wish to set the PHP Version for, then click the arrow to navigate to the details screen.

Click on the CONFIGURE tab.

Ensure the value selected beside the PHP Version label is 5.3.

Perform any action that requires a save, which indicates that the PHP 5.3 selection is intentional and not just a reflection of the current platform default:

- Add an App Setting

- Temporarily toggle to PHP 5.4 or OFF

- Enable Application or Site Diagnostics

- Add/Change the Default documents

Click on the Save button in the command bar at the bottom of the portal.
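The reason the save matters can be pictured with a tiny Python model. It assumes that a site with no saved selection simply follows the platform default, as the steps above imply. The function and site names are hypothetical, and the post does not describe how the setting is actually stored.

```python
def effective_php_version(explicit_version, platform_default):
    """A site with no saved selection follows the platform default."""
    if explicit_version is not None:
        return explicit_version
    return platform_default


# Hypothetical sites: one with a saved 5.3 selection, one without.
sites = {"pinned-site": "5.3", "unpinned-site": None}

for default in ("5.3", "5.4"):
    resolved = {name: effective_php_version(version, default)
                for name, version in sites.items()}
    print(f"platform default {default}: {resolved}")
```

In this model only the unpinned site changes runtime when the default moves from 5.3 to 5.4.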

Selecting the PHP version from the Windows Azure Cross Platform Command Line Tools

Run the following command from your terminal of choice. Be sure that the Windows Azure Cross-Platform CLI Tools are installed and the appropriate subscription is selected.

azure site set --php-version 5.3 <site-name>

Selecting the PHP version from the Windows Azure PowerShell Cmdlets

Run the following command from a PowerShell console. Be sure that the Windows Azure PowerShell Cmdlets are installed and the appropriate subscription is selected.

Set-AzureWebsite -PhpVersion 5.3 -Name <site-name>
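If you have several sites to pin, the cross-platform CLI command shown earlier could be generated once per site. The Python sketch below only builds and prints the command lines. The helper name and the site names are hypothetical, and actually running the commands assumes the CLI tools are installed and a subscription is selected.

```python
import shlex


def php_pin_commands(site_names, version="5.3"):
    """Return one `azure site set` argument list per site, without running it."""
    return [["azure", "site", "set", "--php-version", version, name]
            for name in site_names]


# Hypothetical site names, for illustration only.
for argv in php_pin_commands(["contoso-blog", "contoso-shop"]):
    print(shlex.join(argv))
    # To execute for real: subprocess.run(argv, check=True)
```

Keeping each command as an argument list avoids shell quoting issues if a site name ever contains unusual characters.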

Kevin Remde (@KevinRemde) reported BREAKING NEWS: A new “memory intensive” VM size in Windows Azure on 9/24/2013:

In case you haven’t noticed, Microsoft has added a new virtual machine size available in Windows Azure. To go along with our really big “A6” and “A7” sizes, there is now an “A5” machine size…

So, if you don’t have a need for so many processors, but need a bigger chunk of RAM, you’re in luck.

For more information, please refer to the Cloud Services or Virtual Machines sections of the Pricing Details webpages.

Andy Cross (@andygareweb) described Diagnosing a Windows Azure Website Github integration error in a 9/24/2013 post:

Yesterday I experienced an issue when trying to integrate a Windows Azure Website with Github. Specifically, my code would deploy from the master branch, but if I chose a specific other branch called ‘prototype’ I received a fetch error in the Windows Azure Management Portal:

This error has been reported to the team and I’m sure will be rectified so nobody else will run into it, but at Cory Fowler’s (@syntaxC4) prompting I wanted to document the steps I took to debug this as these steps may be useful to anyone struggling to debug a Windows Azure Website integration.

Scenario

In my scenario I had a project with a series of subfolders in my github repo. The project has progressed from a prototype to a full build but we were required to persist the prototype for design reference. We could have created ‘prototype’ without changing the solution structure, but as in all real world scenarios the requirement to leave the prototype available emerged only when we removed it and had changed the URL structure. We were only happy to continue working on new code if we could label the prototype or somehow leave it in a static state while the codebase moved on. This requirement is easily tackled by Windows Azure Websites and its Github integration; we changed the solution structure to have subfolders, created a new branch ‘prototype’ and continued our main work in ‘master’. Our ‘master’ branch has the additional benefit of having the prototype available for reference and quick applications of code change if we want to pivot our approach.

We then created two Windows Azure Websites (for free, wow!). In order to allow Windows Azure Websites to deploy the correct code for each, we created a .deployment file in each repository. In this .deployment file we inform Windows Azure Websites (through its Kudu deployment mechanism) that it should perform a custom deployment.

For the ‘master’ branch we want to deploy the /client folder, which involves a simple .deployment file containing

[config]
project = client

For the ‘prototype’ branch we want to deploy the /prototype folder, which involves a simple .deployment file containing

[config]
project = prototype

As you can see, these two branches then can evolve independently (although the prototype should be static).
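A .deployment file is a small INI-style file, so one quick way to sanity-check it locally is to parse it. The sketch below uses Python's standard configparser purely as a stand-in, since Kudu has its own parser. The helper name is hypothetical, and the file contents are the two shown above with `[config]` and `project` on separate lines.

```python
import configparser


def deployment_project(text):
    """Return the `project` value from the [config] section of a .deployment file."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return parser["config"]["project"]


MASTER = "[config]\nproject = client\n"
PROTOTYPE = "[config]\nproject = prototype\n"

print(deployment_project(MASTER))      # folder deployed from 'master'
print(deployment_project(PROTOTYPE))   # folder deployed from 'prototype'
```

If the section header or the key is missing, the lookup raises a KeyError, which makes a malformed file easy to spot before pushing.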

Problems Start