Windows Azure and Cloud Computing Posts for 7/23/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

•• Updated 7/28/2012 at 8:00 AM PDT with new articles marked ••.

• Updated 7/26/2012 at 12:15 PM PDT with new articles marked •. I believe this post sets a new length record.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

•• Brent Stineman (@BrentCodeMonkey) explained The “traffic cop” pattern in a 7/27/2012 post:

So I like design patterns but don’t follow them closely. Problem is that there are too many names and it’s just so darn hard to find them. But one “pattern” I keep seeing an ask for is the ability to have something that only runs once across a group of Windows Azure instances. This can surface as a one-time startup task, or it could be the need to have something that runs constantly so that, if one instance fails, another can realize this and pick up the work.

This latter example is often referred to as a “self-electing controller”. At the root of this is a pattern I’ve taken to calling a “traffic cop”. This mini-pattern involves having a unique resource that can be locked, and the process that gets the lock has the right of way. Hence the term “traffic cop”. In the past, aka my “mainframe days”, I used this with systems where I might be processing work in parallel and needed to make sure that a sensitive block of code could prevent a parallel process from executing it while it was already in progress. Critical when you have apps that are doing things like self-incrementing unique keys.

In Windows Azure, the most common way to do this is to use a Windows Azure Storage blob lease. You’d think this comes up often enough that there’d be a post on how to do it already, but I’ve never really run across one. That is until today. Keep reading!

But before I dig into the meat of this, a couple footnotes… First is a shout out to my buddy Neil over at the Convective blog. I used Neil’s Azure Cookbook to help me with the blob leasing stuff. You can never have too many reference books in your Kindle library. Secondly, the Windows Azure Storage team is already working on some enhancements for the next Windows Azure .NET SDK that will give us some more ‘native’ ways of doing blob leases. These include taking advantage of the newest features of the 2012-02-12 storage version. So the leasing techniques I have below may change in an upcoming SDK.

Blob based Traffic Cop

Because I want to get something that works for Windows Azure Cloud Services, I’m going to implement my traffic cop using a blob. But if you wanted to do this on-premises, you could just as easily get an exclusive lock on a file on a shared drive. So we’ll start by creating a new Cloud Service, add a worker role to it, and then add a public class to the worker role called “BlobTrafficCop”.

Shell this class out with a constructor that takes a CloudPageBlob, a property that we can test to see if we have control, and methods to Start and Stop control. This shell should look kind of like this:

    class BlobTrafficCop
    {
        public BlobTrafficCop(CloudPageBlob blob) { }

        public bool HasControl
        {
            get { return true; }
        }

        public void Start(TimeSpan pollingInterval) { }

        public void Stop() { }
    }

Note that I’m using a CloudPageBlob. I specifically chose this over a block blob because I wanted to call out something. We could create a 1 TB page blob and won’t be charged for 1 byte of storage unless we put something into it. In this demo, we won’t be storing anything, so I can create a million of these traffic cops and will only incur bandwidth and transaction charges. Now the amount I’m saving here isn’t even significant enough to be a rounding error. So just note this down as a piece of trivia you may want to use some day. It should also be noted that the size you set in the call to the Create method is arbitrary but MUST be a multiple of 512 (the size of a page). If you set it to anything that’s not a multiple of 512, you’ll receive an invalid argument exception.
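The multiple-of-512 rule can be checked before any storage call is made. The following is a minimal Python sketch, not part of Brent's post; the helper names `round_up_to_page` and `is_valid_page_blob_size` are hypothetical, and the code checks only the page-size rule described above.

```python
PAGE_SIZE = 512  # size of one page in a page blob, in bytes


def round_up_to_page(size_in_bytes: int) -> int:
    """Return the smallest multiple of PAGE_SIZE that is >= size_in_bytes."""
    if size_in_bytes < 0:
        raise ValueError("size must be non-negative")
    return ((size_in_bytes + PAGE_SIZE - 1) // PAGE_SIZE) * PAGE_SIZE


def is_valid_page_blob_size(size_in_bytes: int) -> bool:
    """Check only the multiple-of-512 rule; other service limits are not modeled."""
    return size_in_bytes >= 0 and size_in_bytes % PAGE_SIZE == 0


requested = 1000                      # not a multiple of 512
aligned = round_up_to_page(requested)  # rounded up to the next page boundary
```

Rounding up rather than rejecting is a design choice for this sketch; a caller that wants the same failure mode as the SDK could raise an error when `is_valid_page_blob_size` returns `False`.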

I’ll start putting some guts into this by doing a null argument check in my constructor and also saving the parameter to a private variable. The real work starts when I create three private helper methods to work with the blob lease: GetLease, RenewLease, and ReleaseLease. …

Brent continues with the source code for the three private helper methods.
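Brent's helper methods are not reproduced here. To illustrate the idea only, the following Python sketch simulates the pattern in memory. It is not Brent's code and makes no storage calls: `FakeLeasableBlob`, `FakeClock`, `TrafficCop`, and `poll` are hypothetical names, and the 60-second lease duration is an assumption for the simulation. The lease rules are also simplified (for example, an expired lease cannot be renewed here).

```python
import time
import uuid


class FakeClock:
    """Manually advanced clock so the example is deterministic."""
    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now


class FakeLeasableBlob:
    """In-memory stand-in for a blob with an exclusive, expiring lease."""
    def __init__(self, lease_seconds=60.0, clock=None):
        self._lease_seconds = lease_seconds
        self._clock = clock if clock is not None else time.monotonic
        self._lease_id = None
        self._expires_at = 0.0

    def _expired(self):
        return self._lease_id is None or self._clock() >= self._expires_at

    def acquire_lease(self):
        """Return a new lease ID, or None if someone else holds an active lease."""
        if not self._expired():
            return None
        self._lease_id = str(uuid.uuid4())
        self._expires_at = self._clock() + self._lease_seconds
        return self._lease_id

    def renew_lease(self, lease_id):
        """Extend the lease only if lease_id is the current, unexpired lease."""
        if self._expired() or lease_id != self._lease_id:
            return False
        self._expires_at = self._clock() + self._lease_seconds
        return True

    def release_lease(self, lease_id):
        if lease_id == self._lease_id:
            self._lease_id = None


class TrafficCop:
    """Whoever holds the lease has the right of way."""
    def __init__(self, blob):
        if blob is None:
            raise ValueError("blob is required")
        self._blob = blob
        self._lease_id = None

    @property
    def has_control(self):
        return self._lease_id is not None

    def poll(self):
        """Renew the lease if held, otherwise try to acquire it.

        Intended to be called once per polling interval.
        """
        if self._lease_id is not None and self._blob.renew_lease(self._lease_id):
            return True
        self._lease_id = self._blob.acquire_lease()
        return self.has_control

    def stop(self):
        if self._lease_id is not None:
            self._blob.release_lease(self._lease_id)
            self._lease_id = None


clock = FakeClock()
blob = FakeLeasableBlob(lease_seconds=60.0, clock=clock)
instance_a, instance_b = TrafficCop(blob), TrafficCop(blob)
instance_a.poll()   # instance A gets the lease first
instance_b.poll()   # instance B is refused while A's lease is active
```

If instance A stops polling (for example, because it crashed), its lease expires and the next `poll()` from instance B takes over, which is the "self-electing controller" behavior described above.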

• Valery Mizonov and Seth Manheim wrote Windows Azure Table Storage and Windows Azure SQL Database - Compared and Contrasted for MSDN in July 2012. From the first few sections:

Reviewers: Brad Calder, Jai Haridas, Paolo Salvatori, Silvano Coriani, Prem Mehra, Rick Negrin, Stuart Ozer, Michael Thomassy, Ewan Fairweather

This topic compares two types of structured storage that Windows Azure supports: Windows Azure Table Storage and Windows Azure SQL Database, the latter formerly known as “SQL Azure.” The goal of this article is to provide a comparison of the respective technologies so that you can understand the similarities and differences between them. This analysis can help you make a more informed decision about which technology best meets your specific requirements.

Introduction

When considering data storage and persistence options, Windows Azure provides a choice of two cloud-based technologies: Windows Azure SQL Database and Windows Azure Table Storage.

Windows Azure SQL Database is a relational database service that extends core SQL Server capabilities to the cloud. Using SQL Database, you can provision and deploy relational database solutions in the cloud. The benefits include managed infrastructure, high availability, scalability, a familiar development model, and data access frameworks and tools -- similar to that found in the traditional SQL Server environment. SQL Database also offers features that enable migration, export, and ongoing synchronization of on-premises SQL Server databases with Windows Azure SQL databases (through SQL Data Sync).

Windows Azure Table Storage is a fault-tolerant, ISO 27001 certified NoSQL key-value store. Windows Azure Table Storage can be useful for applications that must store large amounts of nonrelational data, and need additional structure for that data. Tables offer key-based access to unschematized data at a low cost for applications with simplified data-access patterns. While Windows Azure Table Storage stores structured data without schemas, it does not provide any way to represent relationships between the data.

Despite some notable differences, Windows Azure SQL Database and Windows Azure Table Storage are both highly available managed services with a 99.9% monthly SLA.

Table Storage vs. SQL Database

Similar to SQL Database, Windows Azure Table Storage stores structured data. The main difference between SQL Database and Windows Azure Table Storage is that SQL Database is a relational database management system based on the SQL Server engine and built on standard relational principles and practices. As such, it provides relational data management capabilities through Transact-SQL queries, ACID transactions, and stored procedures that are executed on the server side.

Windows Azure Table Storage is a flexible key/value store that enables you to build cloud applications easily, without having to lock down the application data model to a particular set of schemas. It is not a relational data store and does not provide the same relational data management functions as SQL Database (such as joins and stored procedures). Windows Azure Table Storage provides limited support for server-side queries, but does offer transaction capabilities. Additionally, different rows within the same table can have different structures in Windows Azure Table Storage. This schema-less property of Windows Azure Tables also enables you to store and retrieve simple relational data efficiently.
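As a rough illustration of the schema-less, key-based model described above, here is a Python sketch using an in-memory stand-in rather than the real service. `FakeTable` and its methods are hypothetical; the partition-key and row-key pair mirrors how the Table service addresses entities, but nothing here calls a storage API.

```python
class FakeTable:
    """In-memory stand-in: (partition_key, row_key) -> dict of properties."""

    def __init__(self):
        self._rows = {}

    def insert(self, partition_key, row_key, **properties):
        key = (partition_key, row_key)
        if key in self._rows:
            raise KeyError(f"entity {key} already exists")
        self._rows[key] = dict(properties)

    def get(self, partition_key, row_key):
        """Key-based lookup of a single entity."""
        return self._rows[(partition_key, row_key)]

    def query_partition(self, partition_key):
        """Return all entities in one partition, ordered by row key."""
        return [
            self._rows[key]
            for key in sorted(self._rows)
            if key[0] == partition_key
        ]


table = FakeTable()
# Two entities in the same table with different sets of properties.
table.insert("customers", "c1", name="Ada", email="ada@example.com")
table.insert("customers", "c2", name="Bob", loyalty_points=120)

names = [entity["name"] for entity in table.query_partition("customers")]
```

Note what is absent: there is no join between entities and no declared schema, which is the trade-off the excerpt describes.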

If your application stores and retrieves large data sets that do not require rich relational capabilities, Windows Azure Table Storage might be a better choice. If your application requires data processing over schematized data sets and is relational in nature, SQL Database might better suit your needs. There are several other factors you should consider before deciding between SQL Database and Windows Azure Table Storage. Some of these considerations are listed in the next section.

Technology Selection Considerations

When determining which data storage technology fits the purpose for a given solution, solution architects and developers should consider the following recommendations.

As a solution architect/developer, consider using Windows Azure Table Storage when:

- Your application must store significantly large data volumes (expressed in multiple terabytes) while keeping costs down.

- Your application stores and retrieves large data sets and does not have complex relationships that require server-side joins, secondary indexes, or complex server-side logic.

- Your application requires flexible data schema to store non-uniform objects, the structure of which may not be known at design time.

- Your business requires disaster recovery capabilities across geographical locations in order to meet certain compliance needs. Windows Azure tables are geo-replicated between two data centers hundreds of miles apart on the same continent. This replication provides additional data durability in the case of a major disaster.

- You need to store more than 150 GB of data without the need for implementing sharding or partitioning logic.

- You need to achieve a high level of scaling without having to manually shard your dataset.

As a solution architect/developer, consider using Windows Azure SQL Database when:

- Your application requires data processing over schematized, highly structured data sets with relationships.

- Your data is relational in nature and requires the key principles of the relational data programming model to enforce integrity using data uniqueness rules, referential constraints, and primary or foreign keys.

- Your data volumes might not exceed 150 GB per single unit of colocated data sets, which often translates into a single database. However, you can partition your data across multiple sets to go beyond the stated limit. Note that this limit is subject to change in the future.

- Your existing data-centric application already uses SQL Server and you require cloud-based access to structured data by using existing data access frameworks. At the same time, your application requires seamless portability between on-premises and Windows Azure.

- Your application plans to leverage T-SQL stored procedures to perform computations within the data tier, thus minimizing round trips between the application and data storage.

- Your application requires support for spatial data, rich data types, and sophisticated data access patterns through consistent query semantics that include joins, aggregation, and complex predicates.

- Your application must provide visualization and business intelligence (BI) reporting over data models using out-of-the-box reporting tools.

Note: Many Windows Azure applications can take advantage of both technologies. Therefore, it is recommended that you consider using a combination of these options. …
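A few of the considerations above can be condensed into a rule-of-thumb helper. This Python sketch is illustrative only and is not from the MSDN article: the `Workload` fields and the `suggest_store` function are hypothetical, it covers only a handful of the listed criteria, and the 150 GB figure is the limit quoted above, which the article says may change.

```python
from dataclasses import dataclass

SQL_DATABASE_LIMIT_GB = 150  # per-database limit quoted in the excerpt


@dataclass
class Workload:
    data_size_gb: float
    needs_joins_or_stored_procs: bool = False
    needs_referential_integrity: bool = False
    needs_flexible_schema: bool = False
    can_shard_manually: bool = False


def suggest_store(w: Workload) -> str:
    """Return 'sql_database', 'table_storage', 'both', or 'either'."""
    relational = w.needs_joins_or_stored_procs or w.needs_referential_integrity
    too_big_for_one_db = (
        w.data_size_gb > SQL_DATABASE_LIMIT_GB and not w.can_shard_manually
    )
    if relational and too_big_for_one_db:
        # Relational needs plus volume beyond one database: consider combining.
        return "both"
    if relational:
        return "sql_database"
    if too_big_for_one_db or w.needs_flexible_schema:
        return "table_storage"
    return "either"


suggestion = suggest_store(Workload(data_size_gb=500, needs_flexible_schema=True))
```

A real selection would also weigh cost, geo-replication, reporting, and portability, as the lists above point out.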

The post continues with many tables of detailed selection criteria.

For a discussion of this topic in an OData context, see Glenn Gailey’s article in the Marketplace DataMarket, Social Analytics, Big Data and OData section below.

Bruno Terkaly (@brunoterkaly) described How to create a custom blob manager using Windows 8, Visual Studio 2012 RC, and the Azure SDK 1.7 in a 7/24/2012 post:

Programmatically managing blobs

- This post has two main objectives: (1) Educate you that you can host web content very economically; (2) Show you how you can create your own blob management system in the cloud.

- Download the source to my VS 2012 RC project:

Download The Source

https://skydrive.live.com/embed?cid=98B7747CD2E738FB&resid=98B7747CD2E738FB%212850&authkey=AAWxTD4cCiYKJ60

Free Trial Account

http://www.windowsazure.com/en-us/pricing/free-trial/?WT.mc_id=A733F5829

- Hosting web content as a blob on Windows Azure is powerful. To start with, it is extremely economical because it doesn't require you to host a web server yourself. Secondly, blobs are replicated three times, so the content stays available, with SLA support.

- I use Windows Azure-hosted blobs for my blog. I store html, javascript, and style sheets. I manage video content as well. You can see my article in MSDN Magazine for further details.

See my article in MSDN Magazine, Democratizing Video Content with Windows Azure Media Services

http://msdn.microsoft.com/en-us/magazine/jj133821.aspx

- I could store anything I want. When you visit my blog, you are pulling content from Windows Azure storage services.

- You can dynamically create content and then upload to Azure. I'll show you how to upload the web page as a blob.

- But that web page can be dynamically created based on a database. The code I am about to show is infinitely flexible. You could adapt it to manage all your content programmatically.

- I will illustrate with the latest tools and technologies, as of July 2012. This means we will use:

- Windows 8

- Visual Studio 2012 RC

- Azure SDK and Tooling 1.7

- I assume you have an Azure Account (free trials are available)

2 main blob types

- The storage service offers two types of blobs, block blobs and page blobs.

- You specify the blob type when you create the blob.

- You can store text and binary data in either of the two types of blobs:

- Block blobs, which are optimized for streaming.

- Page blobs, which are optimized for random read/write operations and which provide the ability to write to a range of bytes in a blob.

- Windows Azure Blob storage is a service for storing large amounts of unstructured data that can be accessed from anywhere in the world via HTTP or HTTPS.

- A single blob can be hundreds of gigabytes in size, and a single storage account can contain up to 100TB of blobs.

- Common uses of Blob storage include:

- Serving images or documents directly to a browser

- Storing files for distributed access

- Streaming video and audio

- Performing secure backup and disaster recovery

- Storing data for analysis by an on-premise or Windows Azure-hosted service

- Once the blob has been created, its type cannot be changed, and "it can be updated only by using operations appropriate for that blob type", i.e., writing a block or list of blocks to a block blob, and writing pages to a page blob.

- All blobs reflect committed changes immediately.

- Each version of the blob has a unique tag, called an ETag, that you can use with access conditions to assure you only change a specific instance of the blob.

- Any blob can be leased for exclusive write access.

- When a blob is leased, only calls that include the current lease ID can modify the blob or (for block blobs) its blocks.

- You can assign attributes to blobs and then query those attributes within their corresponding container using LINQ.

- Blobs allow you to write bytes to specific offsets. You can enjoy typical read/write block-oriented operations.

- Note following attributes of blob storage:

- Storage Account

- All access to Windows Azure Storage is done through a storage account. This is the highest level of the namespace for accessing blobs. An account can contain an unlimited number of containers, as long as their total size is under 100TB.

- Container

- A container provides a grouping of a set of blobs. All blobs must be in a container. An account can contain an unlimited number of containers. A container can store an unlimited number of blobs.

- Blob

- A file of any type and size.

- "A single block blob can be up to 200GB in size". "Page blobs, another blob type, can be up to 1TB in size", and are more efficient when ranges of bytes in a file are modified frequently. For more information about blobs, see Understanding Block Blobs and Page Blobs.

- URL format

- Blobs are addressable using the following URL format:

- http://<storage account>.blob.core.windows.net/<container>/<blob>
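The URL format can be expressed as a small helper. This is a Python sketch under the assumption that a blob address is made up of the storage account name, the container name, and the blob name, in that order; `blob_url` is a hypothetical function, not an SDK call.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_name: str, https: bool = True) -> str:
    """Build the public address of a blob from its three name parts."""
    scheme = "https" if https else "http"
    # quote() percent-encodes characters such as spaces but keeps "/" intact,
    # so blob names with virtual folders still work.
    return f"{scheme}://{account}.blob.core.windows.net/{container}/{quote(blob_name)}"


url = blob_url("brunoblogcontent", "blobcalendarcontent", "key_links.html")
```

The example arguments are the account, container, and blob names that appear in the blob address quoted later in this excerpt.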

Web Pages as blobs

- As I explained, what I am showing is how I power my blog with Windows Azure [1]. My main blog page starts with an <iframe>[2][3]. This tag lets you embed an html page within an html page. My post is basically a bunch of iframes glued together. One of those iframes is a menu I have for articles I have created. It really is a bunch of metro-styled hyperlinks.

- As I said before, this post is about how I power my blog[1]. This post is about generating web content and storing it as a web page blob up in a MS data center. The left frame on my blog is nothing more than an iframe with a web page.[2][3]

- The name of the web page is key_links.html. Key_links.html is generated locally, then uploaded to blob storage.

- The pane on the left here that says Popular Posts is just an embedded web page that is stored as a blob on Windows Azure. I upload the blob through a Windows 8 Application that I am about to build for you.

- The actual one that I use is slightly more complicated. It leverages a SQL Server database that has the source for the content you see in Popular Posts.

- For my blog, all I do is keep a database up to date. The custom app we are writing generates a custom web page, based on the SQL Server data that I previously entered.

- My app then simply loops through the rows in the SQL server database table and generates that colorful grid you see labeled Popular Posts.

- You can see my blob stored here:

- https://brunoblogcontent.blob.core.windows.net/blobcalendarcontent/key_links.html

Dynamically created based on SQL Server Data

- You can navigate directly to my blob content.

- https://brunoblogcontent.blob.core.windows.net/blobcalendarcontent/key_links.html

- The point here is that Key_links.html is generated based on entries in a database table

- You could potentially store the entries in the cloud as well using SQL Database (formerly SQL Azure)

- This post will focus on how you would send key_links.html and host it in the Windows Azure Storage Service

- Here you can see the relationship between the table data and the corresponding HTML content.

- The metro-like web interface you see up there is generated dynamically by a Windows 8 application. We will not do dynamic creation here.

- I used Visual Studio 2012 RC to write the Windows 8 application. To upload the blob, all I needed was the Windows Azure SDK and Tooling. …
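The "loop through the rows and generate a page" step can be sketched as follows. This Python example is not Bruno's Windows 8 application: it uses an in-memory SQLite database as a stand-in for SQL Server, the `popular_posts` table, its columns, and the sample rows are hypothetical, and the upload to blob storage is left out.

```python
import html
import sqlite3


def build_links_page(rows):
    """Turn (title, url) rows into a small HTML page of hyperlinks."""
    items = "\n".join(
        f'    <li><a href="{html.escape(url, quote=True)}">{html.escape(title)}</a></li>'
        for title, url in rows
    )
    return (
        "<!DOCTYPE html>\n<html>\n<body>\n  <ul>\n"
        f"{items}\n"
        "  </ul>\n</body>\n</html>\n"
    )


# Stand-in database with hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE popular_posts (title TEXT, url TEXT)")
conn.executemany(
    "INSERT INTO popular_posts VALUES (?, ?)",
    [
        ("Blobs & Tables", "http://example.com/a?x=1&y=2"),
        ("Getting Started", "http://example.com/start"),
    ],
)
rows = conn.execute("SELECT title, url FROM popular_posts ORDER BY title").fetchall()
conn.close()

page = build_links_page(rows)  # this string is what would be uploaded as key_links.html
```

Escaping titles and URLs with `html.escape` matters because the content comes from a database and ends up in a publicly served page.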

Denny Lee (@dennylee) posted a Power View Tip: Scatter Chart over Time on the X-Axis and Play Axis post on 7/24/2012:

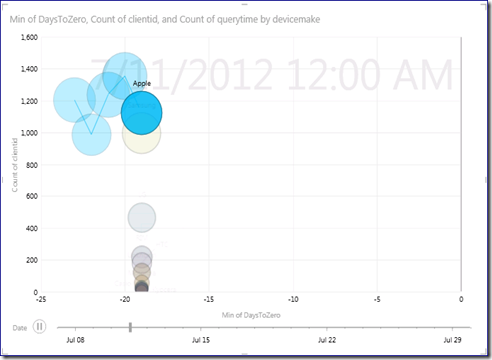

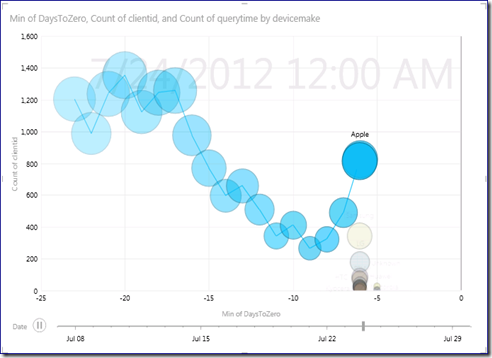

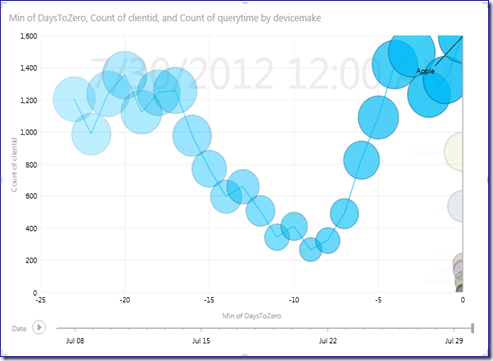

As you have seen in many Power View demos, you can run the Scatter Chart over time by placing date/time onto the Play Axis. This is pretty cool and it allows you to see trends over time on multiple dimensions. But how about if you want to see time also on the x-axis?

For example, let’s take the Hive Mobile Sample data as noted in my post: Connecting Power View to Hadoop on Azure. As noted in Office 2013 Power View, Bing Maps, Hive, and Hadoop on Azure … oh my!, you can quickly create Power View reports right out of Office 2013.

Scenario

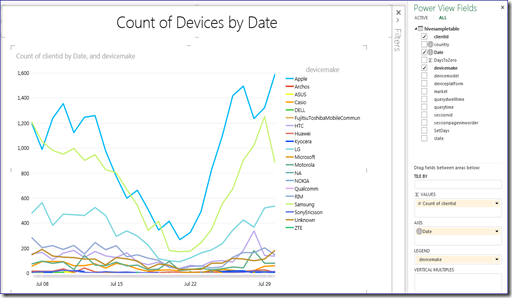

In this scenario, I’d like to see the number of devices on the y-axis, date on the x-axis, broken out by device make. This can be easily achieved using a column bar chart.

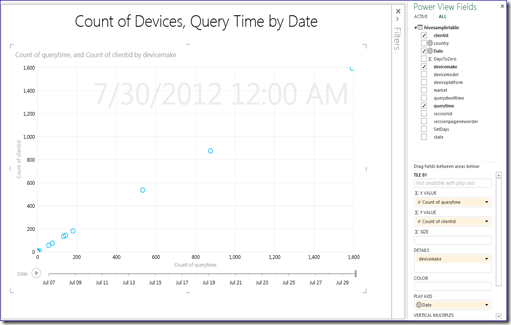

Yet, if I wanted to add another dimension to this, such as the number of calls (QueryTime), the only way to do this without tiling is to use the Scatter Chart. Yet, this will not yield the results you may like seeing either.

It does have a Play Axis of Date, but while the y-axis has count of devices (count of ClientID), the x-axis is the count of QueryTime – it’s a pretty lackluster chart. Moving Count of QueryTime to the Bubble Size makes it more colorful but now all the data is stuck near the y-axis. When you click on the play-axis, the bubbles only move up and down the y-axis.

Date on X-Axis and Play Axis

So to solve the problem, the solution is to put the date on both the x-axis and the play axis. Yet, the x-axis only allows numeric values – i.e. you cannot put a date into it. So how do you get around this limitation?

What you can do is create a new calculated column:

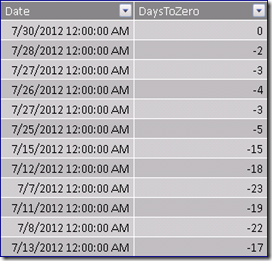

DaysToZero = -1 * (max([date]) - [date])

For each row, this calculates the number of days between that row's [date] and the latest date in the [date] column, max([date]), expressed as zero or a negative number, as noted below.

As you can see, the max([date]) is 7/30/2012 and the [DaysToZero] column has the value of -1 * datediff(dd, [Date], max([Date])).
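To make the arithmetic concrete, here is the same calculation as a short Python sketch. It is not Power View or DAX code; `days_to_zero` is a hypothetical helper and the sample dates are made up, apart from the 7/30/2012 maximum mentioned above.

```python
from datetime import date


def days_to_zero(dates):
    """For each date, return -(latest date - date) in whole days."""
    latest = max(dates)
    return [-(latest - d).days for d in dates]


sample = [date(2012, 7, 28), date(2012, 7, 29), date(2012, 7, 30)]
offsets = days_to_zero(sample)  # the latest date maps to 0, earlier dates to negatives
```

Because the result is numeric and increases with time, it can sit on a numeric x-axis while the original date drives the play axis.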

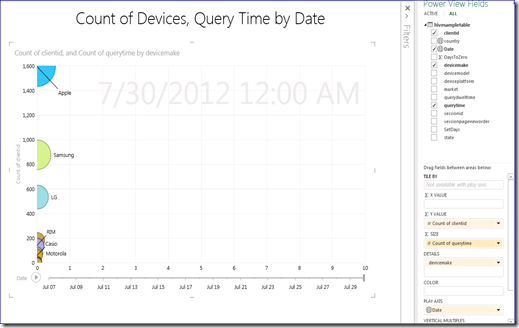

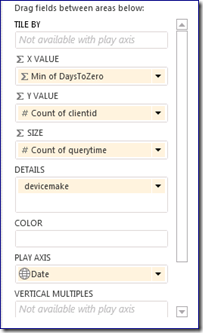

Once you have created the [DaysToZero] column, you can then place this column onto the x-axis of your Scatter Chart. Below is the scatter chart configuration.

With this configuration, you can see events occur over time when running the play axis as noted in the screenshots below.

Paul Miller (@paulmiller) described his GigaOM Pro report on Hadoop and cluster management in a 7/23/2012 post to his Cloud of Data blog:

My latest piece of work for GigaOM Pro just went live. Scaling Hadoop clusters: the role of cluster management is available to GigaOM Pro subscribers, and was underwritten by StackIQ.

Thanks to everyone who took the time to speak with me during the preparation of this report.

As the blurb describes,

From Facebook to Johns Hopkins University, organizations are coping with the challenge of processing unprecedented volumes of data. It is possible to manually build, run and maintain a large cluster and to use it to run applications such as Hadoop. However, many of the processes involved are repetitive, time-consuming and error-prone. So IT managers (and companies like IBM and Dell) are increasingly turning to cluster-management solutions capable of automating a wide range of tasks associated with cluster creation, management and maintenance.

This report provides an introduction to Hadoop and then turns to more-complicated matters like ensuring efficient infrastructure and exploring the role of cluster management. Also included is an analysis of different cluster-management tools from Rocks to Apache Ambari and how to integrate them with Hadoop.

Compulsory picture of an elephant as it’s a Hadoop story provided by Flickr user Brian Snelson.


<Return to section navigation list>

SQL Azure Database, Federations and Reporting

No significant articles today.

<Return to section navigation list>

Marketplace DataMarket, Social Analytics, Big Data and OData

•• Scott M. Fulton (@SMFulton3) asked Big Data: What Do You Think It Is? in a 7/27/2012 article for the ReadWriteCloud:

"Big Data" is the technology that is supposedly reshaping the data center. Sure, the data center isn't as fun a topic as the iPad, but without the data center supplying the cloud with apps, iPads wouldn't be nearly as much fun either. Big Data is also the nucleus of a new and growing industry, injecting a much-needed shot of adrenaline in the business end of computing. It must be important; in March President Obama made it a $200 million line item in the U.S. Federal Budget. But what the heck is Big Data?

With hundreds of millions of taxpayer dollars behind it, with billions in capital and operating expenditures invested in it, and with a good chunk of ReadWriteWeb's space and time devoted to it, well, you'd hope that we all pretty much knew what Big Data actually was. But a wealth of new evidence, including an Oracle study reported by RWW's Brian Proffitt last week, a CapGemini survey uncovered by Sharon Fisher also last week, and now a Harris Interactive survey commissioned by SAP, all indicate a disturbing trend: Both businesses and governments may be throwing money at whatever they may think Big Data happens to be. And those understandings may depend on who their suppliers are, who's marketing the concept to them and how far back they began investigating the issue.

That even the companies investing in Big Data have a relatively poor understanding of it may be blamed only partly on marketing. To date, the Web has done a less-than-stellar job at explaining what Big Data is all about. "The reality is, when I looked at these survey results, the first thing I said was, wow. We still don't have people who have a common definition of Big Data, which is a big problem," said Steve Lucas, executive vice president for business analytics at SAP.

The $500 Million Pyramid

The issue is that many companies are just now facing the end of the evolutionary road for traditional databases, especially now that accessibility through mobile apps by thousands of simultaneous users has become a mandate. The Hadoop framework, which emerged from an open source project out of Yahoo and has become its own commercial industry, presented the first viable solution. But Big Data is so foreign to the understanding customers have already had about their own data centers, that it's no wonder surveys are finding their strategies spinning off in various directions.

"What I found surprising about the survey results was that 18% of small and medium-sized businesses under $500 million [in revenue] per year think of Big Data as social- and machine-generated," Lucas continued. "Smaller companies are dealing with large numbers of transactions from their Web presence, with mobile purchases presenting challenges for them. Larger companies have infrastructure to deal with that. So they’re focused ... on things like machine-generated data, cell phones, devices, sensors, things like that, as well as social data."

Snap Judgment

Harris asked 154 C-level executives from U.S.-based multi-national companies last April a series of questions, one of them being to simply pick the definition of "Big Data" that most closely resembled their own strategies. The results were all over the map. While 28% of respondents agreed with "Massive growth of transaction data" (the notion that data is getting bigger) as most like their own concepts, 24% agreed with "New technologies designed to address the volume, variety, and velocity challenges of big data" (the notion that database systems are getting more complex). Some 19% agreed with the "requirement to store and archive data for regulatory and compliance," 18% agreed with the "explosion of new data sources," while 11% stuck with "Other."

All of these definition choices seem to strike a common theme that databases are evolving beyond the ability of our current technology to make sense of it all. But when executives were asked questions that would point to a strategy for tackling this problem, the results were just as mixed.

When SAP's Lucas drilled down further, however, he noticed the mixture does tend to tip toward one side or the other, with the fulcrum being the $500 million revenue mark. Companies below that mark (about 60% of total respondents), Lucas found, are concentrating on the idea that Big Data is being generated by Twitter and social feeds. Companies above that mark may already have a handle on social data, and are concentrating on the problem of the wealth of data generated by the new mobile apps they're using to connect with their customers - apps with which the smaller companies aren't too familiar yet.

"That slider scale may change the definition above or below that $500 million in revenue mark for the company based on their infrastructure, their investment, and their priorities," Lucas said. "They also pointed out that the cloud is a critical part of their Big Data strategy. I took that as a big priority."

Final Jeopardy

So what's the right answer? Here is an explanation of "Big Data" that, I believe, applies to anyone and everyone:

Database technologies have become bound by business logic that fails to scale up. This logic uses inefficient methods for accessing and manipulating data. But those inefficiencies were always masked by the increasing speed and capability of hardware, coupled with the declining price of storage. Sure, it was inefficient, but up until about 2007, nobody really noticed or cared.

The inefficiencies were finally brought into the open when new applications found new and practical uses for extrapolating important results (often the analytical kind) from large amounts of data. The methods we'd always used for traditional database systems could not scale up. Big Data technologies were created to enable applications that could scale up, but more to the point, they addressed the inefficiencies that had been in our systems for the past 30 years - inefficiencies that had little to do with size or scale but rather with laziness, our preference to postpone the unpleasant details until they really became bothersome.

Essentially, Big Data tools address the way large quantities of data are stored, accessed and presented for manipulation or analysis. They do replace something in the traditional database world - at the very least, the storage system (Hadoop), but they may also replace the access methodology.

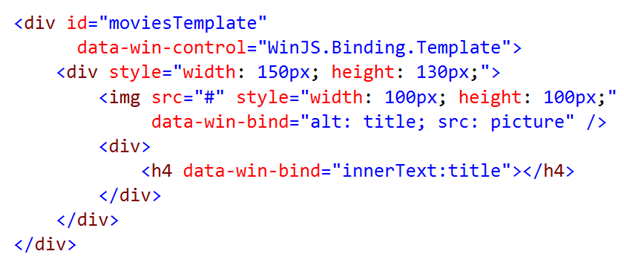

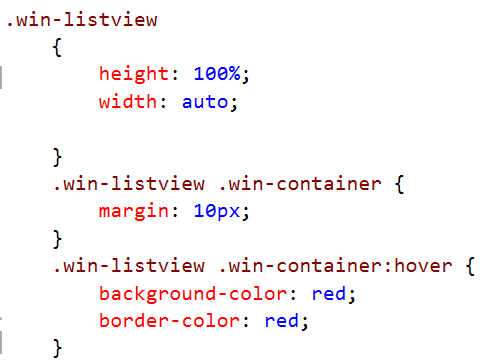

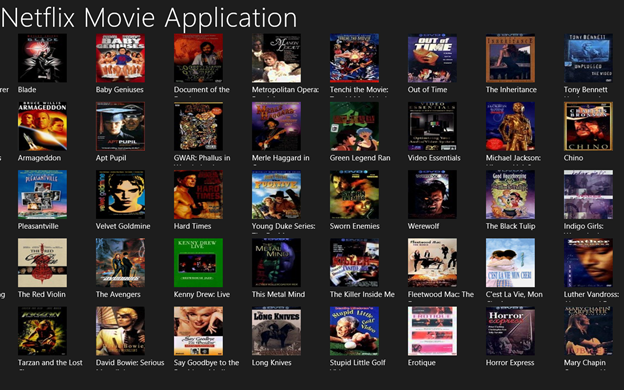

•• Dhananjay Kumar (@debug_mode) described Working with OData and WinJS ListView in a Metro Application in a 7/27/2012 post:

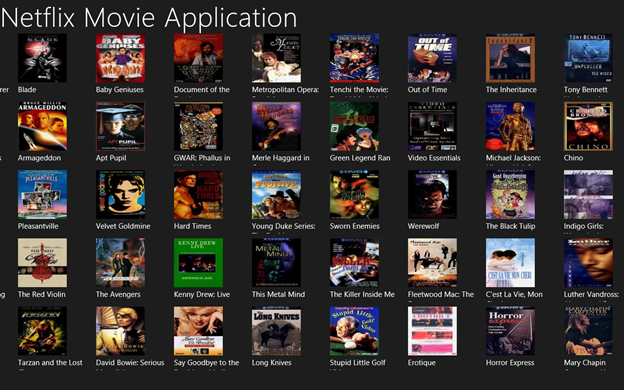

In this post we will see how to consume the Netflix OData feed in an HTML-based Metro application. By the end of this post, we should have movie information displayed as shown below.

Netflix exposes all of its movie information as OData, and the feed is publicly available at the following location:

http://odata.netflix.com/Catalog/

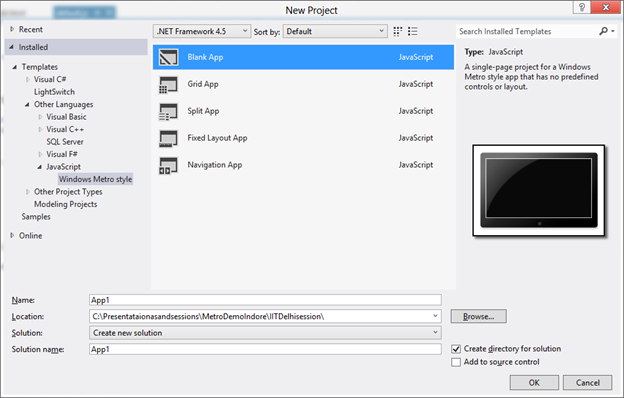

Essentially we will pull movie information from Netflix and bind it to the WinJS ListView control. We will start by creating a blank application.

In the code-behind (default.js), define a variable of type WinJS List.

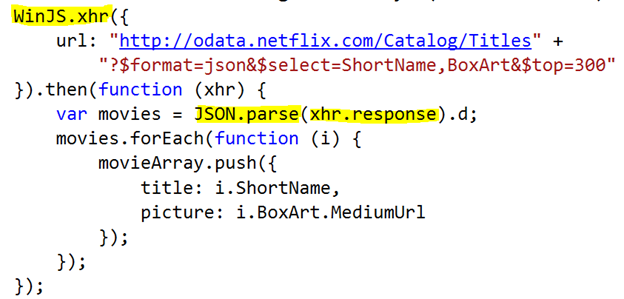

Now we need to fetch the movie details from Netflix. For this we will use the xhr function of WinJS, passing the URL of the OData feed as its parameter.

In the above code snippet, we are performing the following tasks:

- Making a call to the Netflix OData feed using the WinJS.xhr function.

- Passing the exact URL of the OData endpoint as the input parameter to the xhr function.

- Applying a projection and requesting JSON format in the URL itself.

- Once the JSON data is fetched from the Netflix server, parsing it and pushing the individual items into the WinJS list.
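The steps above (build a URL with a projection and a JSON format option, then parse the response into individual items) can be sketched without WinJS. This Python example is not Dhananjay's code and makes no network call: the `Titles` entity set, the `Name` and `ReleaseYear` properties, the helper names, and the sample payload are assumptions for illustration. `$select`, `$top`, and `$format` are standard OData query options.

```python
import json
from urllib.parse import urlencode


def build_odata_url(service_root, entity_set, select=None, top=None):
    """Compose an OData query URL that asks for JSON and an optional projection."""
    params = {"$format": "json"}
    if select:
        params["$select"] = ",".join(select)
    if top is not None:
        params["$top"] = str(top)
    # Keep "$" and "," readable instead of percent-encoding them.
    query = urlencode(params, safe="$,")
    return f"{service_root.rstrip('/')}/{entity_set}?{query}"


def extract_items(payload_text):
    """Return the list of entities from a JSON payload wrapped in "d".

    Handles both a bare list under "d" and a "results" list inside "d".
    """
    d = json.loads(payload_text)["d"]
    return d["results"] if isinstance(d, dict) else d


url = build_odata_url(
    "http://odata.netflix.com/Catalog/", "Titles",
    select=["Name", "ReleaseYear"], top=5,
)

# Hypothetical response body standing in for what the service would return.
sample_payload = '{"d": {"results": [{"Name": "Example Movie", "ReleaseYear": 1999}]}}'
movies = []
for item in extract_items(sample_payload):
    movies.append({"title": item["Name"], "year": item["ReleaseYear"]})
```

In the WinJS version, the loop at the end corresponds to pushing each parsed item into the list that backs the ListView.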

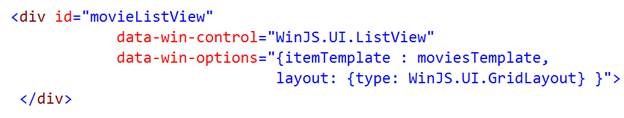

We now have the data. Next, let us create a ListView control. You can create a WinJS ListView as follows. Put the code below in default.html.

In the above code we are simply creating a WinJS ListView and setting some basic attributes such as layout and itemTemplate. Now we need to create the item template. A template formats the data and controls how it will be displayed. A template can be created as follows.

In the above code, we are binding data from the data source to different HTML elements. Finally, in the code-behind we need to set the data source of the ListView.

Before you run the application, add some CSS to make the ListView more immersive. Put the CSS below in default.css.

Now go ahead and run the application. You should get the expected output, as follows.

…

Dhananjay continues with the consolidated code for the application.

• Glenn Gailey (@ggailey777) analyzed Windows Azure Storage: SQL Database versus Table Storage in a 7/26/2012 post:

I wrote an article for Windows Azure a while back called Guidance for OData in Windows Azure, where I described options for hosting OData services in Windows Azure. The easiest way to do this is to create a hosted WCF Data Service in Windows Azure that uses EF to access a SQL Database instance as the data source. This lets you access and change data stored in the cloud by using OData. Of course, another option is to simply store data directly in the Windows Azure Table service, since this Azure-based service already speaks OData. Using the Table service is less work to set up, but storing data there is fairly different from storing data in SQL Database tables.

Note #1: As tempting as it may seem, do not make the Table service into a data source for WCF Data Services. The Table service context is really just the WCF Data Services client context—and it doesn’t have the complete support for composing all OData queries.

Leaving the discussion of an OData service aside, there really is a fundamental question when it comes to storing data in Windows Azure.

Note #2: The exception is for BLOBs. Never, ever store blobs anywhere but in the Windows Azure Blob service. Even with WCF Data Services, you should store blobs in the Blob service and then implement the streaming provider that is backed by the Blob service.

Fortunately, some of the guys that I work with have just published a fabulous new article that addresses just this SQL Database versus Tables service dilemma, and they do the comparison in exquisite detail. I encourage you to check out this new guidance content.

Windows Azure Table Storage and Windows Azure SQL Database - Compared and Contrasted

This article even compares costs of both options. If you are ever planning to store data in Windows Azure—this article is very much worth your time to read.

For the above article, see the Windows Azure Blob, Drive, Table, Queue and Hadoop Services section above.

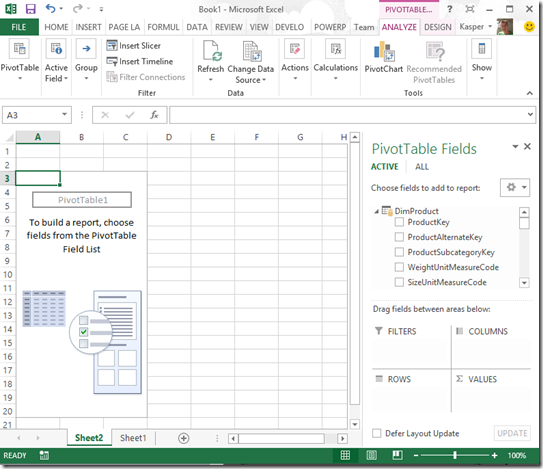

• Kasper de Jonge (@Kjonge) described Implementing Dynamic Top X via slicers in Excel 2013 using DAX queries and Excel Macros, which is of interest to Windows Azure Marketplace DataMarket and OData users, on 7/25/2012:

Our First Post on Excel 2013 Beta!

Guest post by… Kasper de Jonge!

Notes from Rob: yes, THAT Kasper de Jonge. We haven’t seen him around here much, ever since he took over the Rob Collie Chair at Microsoft. (As it happens, “de Jonge” loosely translated from Dutch means “of missing in action from this blog.” Seriously. You can look it up.)

- Excel 2013 public preview (aka beta) is out, which means that now we’re not only playing around with PowerPivot V2 and Power View V1, but now we have another new set of toys to take for a spin. I am literally running out of computers – I’m now running five in my office. Kasper is here to talk about Excel 2013.

- I’ve been blessed with a number of great guest posts in a row, and there’s already one more queued up from Colin. This has given me time to seclude myself in the workshop and work up something truly frightening in nature that I will spring on you sometime next week. But in the meantime, I hand the microphone to an old friend.

Back to Kasper…

Inspired by all the great blog posts on PowerPivotPro about Dynamic Top X reports, I decided to try solving the problem using Excel 2013. As you might have heard, the Excel 2013 Preview was released this week; check this blog post to read more about it.

The trick that I am going to use is based on my other blog post that I created earlier: Implementing histograms in Excel 2013 using DAX query tables and PowerPivot. The beginning is the same so I reuse parts of that blog post in this blog.

In this case we want to get the top X products by sum of salesAmount sliced by year (using AdventureWorks). To get started I import the data into Excel. As you might know you no longer need to separately install PowerPivot. Excel 2013 now by default contains our xVelocity in-memory engine and the PowerPivot add-in when you install Excel. When you import data from sources to Excel they will be available in our xVelocity in-memory engine.

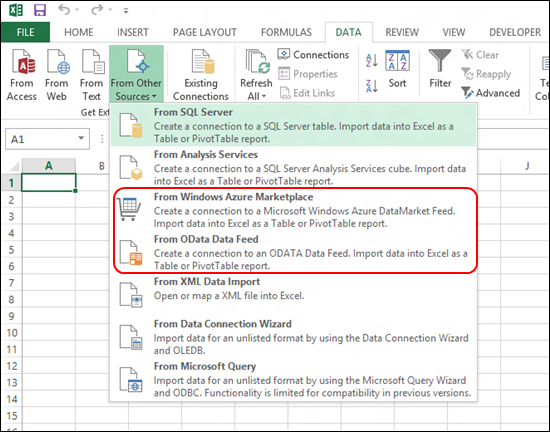

I start by opening Excel 2013, go to the data tab and import from SQL Server:

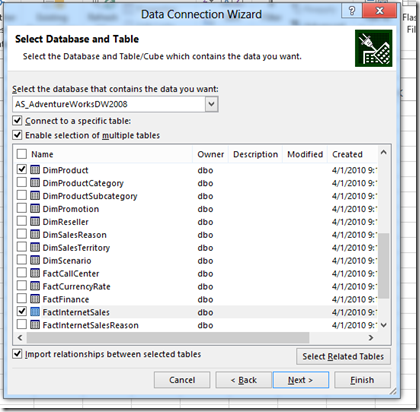

I connect to the database server and database and select the tables DimProduct, DimDate and FactInternetSales:

The key here is to select the checkbox “Enable selection of multiple tables”. As soon as you select it, the tables are imported into the xVelocity in-memory engine. Press Finish and the import starts.

When the import is completed you can select what you want to do with the data. I selected PivotTable:

Now I get the PivotTable:

I am not actually going to use the PivotTable; I need a way to get the top-selling products by Sum of SalesAmount. The first thing I want to do is create a Sum of SalesAmount measure using the PowerPivot add-in. With Excel 2013 you get the PowerPivot add-in together with Excel; all you need to do is enable it.

Click on File, Options, select Add-ins and Manage COM Add-ins. Press Go.

Now select PowerPivot and press OK:

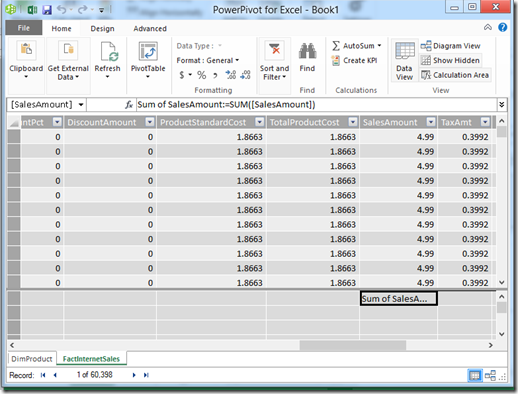

Notice that the PowerPivot tab is now available in the ribbon. Click on Manage to manage the model.

Select the FactInternetSales table, and the SalesAmount column, click AutoSum on the ribbon.

This will create the measure Sum of SalesAmount in the model.

Next up is creating a table that will give us the top 10 Products by Sum of SalesAmount.

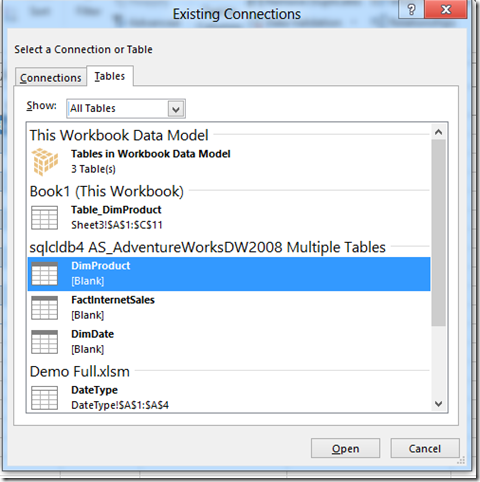

There is no way to get this using a PivotTable, so this is where the fun starts. I am going to use a new Excel feature called a DAX query table. It is a hidden feature in Excel 2013, but a very useful one! Let's go back to Excel, select the Data tab, click on Existing Connections and select Tables:

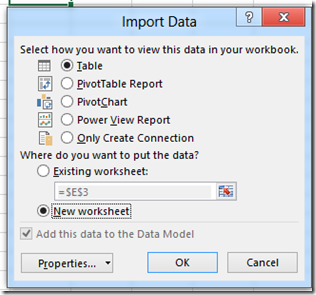

Double click on DimProduct and select Table and New worksheet:

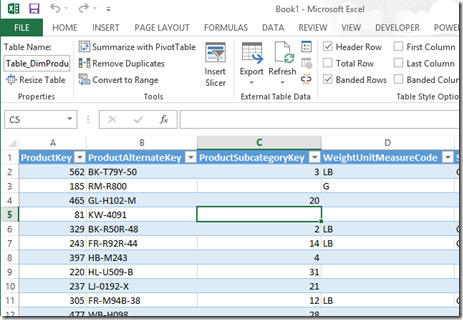

This will add the table to the Excel worksheet as an Excel table:

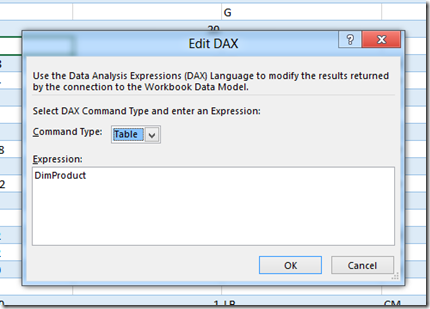

Now this is where the fun starts. Right-click on the table, select Table, then select Edit DAX (oh yes!).

This will open a hidden-away Excel dialog without any features like autocomplete:

But it will allow us to create a table based on a DAX query that points to the underlying Model. What I have done is create a DAX query that gives us the top 10 products filtered by a year and paste it in the Expression field. When you use a DAX query you need to change the command type to DAX.

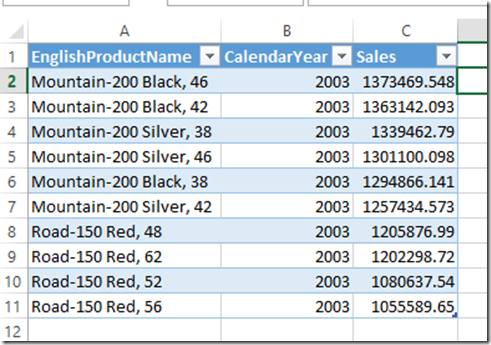

This is the query that will give us the top 10 products by Sum of SalesAmount filtered by Year.:

EVALUATE

ADDCOLUMNS(

TOPN(10,

FILTER(CROSSJOIN(VALUES(DimProduct[Englishproductname])

,VALUES(DimDate[CalendarYear]))

, DimDate[CalendarYear] = 2003

&& [Sum of SalesAmount] > 0

)

, [Sum of SalesAmount])

,”Sales”, [Sum of SalesAmount])

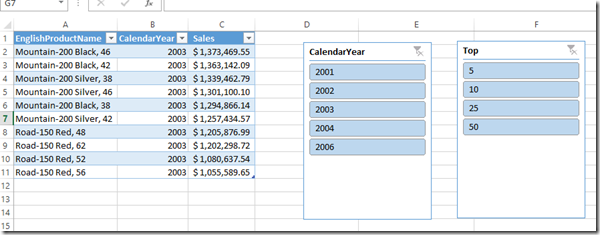

ORDER BY [Sum of SalesAmount] DESCThis results in the following table:

Since DAX queries don’t give formatted results back (unlike MDX), we need to format Sales ourselves using Excel formatting. Now here comes an interesting question: how do we get this to react to input from outside? There is no way to create slicers that are connected to a table, so we need to find a way to work around this.

Since this is now a native Excel feature, we can actually program these objects using an Excel macro, and that is what we are going to do. But first we add two slicers to the workbook: one for the years and one for the Top X value that I want the user to select.

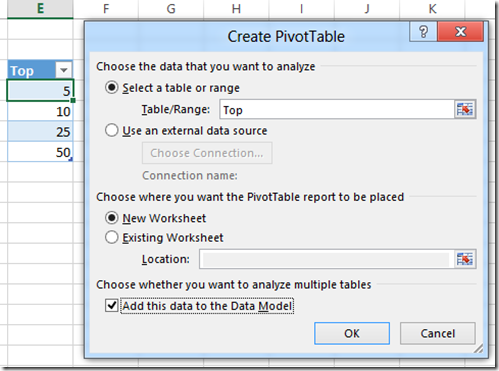

I created a small table in Excel that contains the Top X values and pushed it to the model. To do that I selected the table, clicked Insert on the ribbon, then PivotTable, and selected “Add this data to the Data Model”:

After that I created both slicers, both based on model tables: click Insert, Slicer, select “Data Model” and double-click on Tables in Workbook Data Model.

Now how do we get the query we used in the table to change based on the slicer selection? First I changed the name of the table to “ProdTable” and the name of the sheet to “TopProducts”.

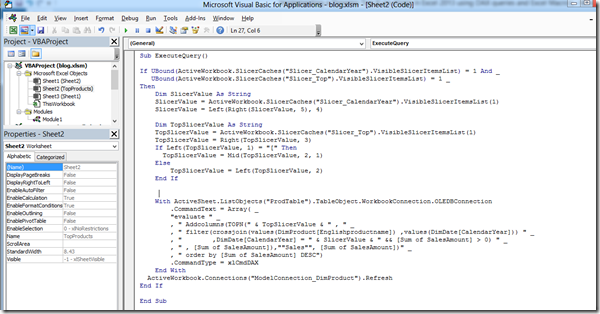

Next I wrote a macro that gets the values from the slicers, creates a DAX query on the fly and refreshes the connection to update the table. I added a procedure to the code for the sheet:

By the way, I learned this by starting the macro recorder and clicking around in Excel. Just try it, you’ll love it.

This is the macro I wrote to change the DAX query of the table based on the slicer values and refresh the table (disclaimer: it is neither foolproof nor perfect code):

    Sub ExecuteQuery()
        'Make sure only one value is selected in both slicers
        If UBound(ActiveWorkbook.SlicerCaches("Slicer_CalendarYear").VisibleSlicerItemsList) = 1 And _
           UBound(ActiveWorkbook.SlicerCaches("Slicer_Top").VisibleSlicerItemsList) = 1 _
        Then
            'Get the slicer values from Slicer CalendarYear
            Dim SlicerValue As String
            SlicerValue = ActiveWorkbook.SlicerCaches("Slicer_CalendarYear").VisibleSlicerItemsList(1)
            SlicerValue = Left(Right(SlicerValue, 5), 4)

            'Get the slicer values from Slicer Top
            Dim TopSlicerValue As String
            TopSlicerValue = ActiveWorkbook.SlicerCaches("Slicer_Top").VisibleSlicerItemsList(1)
            TopSlicerValue = Right(TopSlicerValue, 3)
            If Left(TopSlicerValue, 1) = "[" Then
                TopSlicerValue = Mid(TopSlicerValue, 2, 1)
            Else
                TopSlicerValue = Left(TopSlicerValue, 2)
            End If

            'Load the new DAX query in the table ProdTable
            With ActiveSheet.ListObjects("ProdTable").TableObject.WorkbookConnection.OLEDBConnection
                .CommandText = Array( _
                    "evaluate " _
                    , " Addcolumns(TOPN(" & TopSlicerValue & " , " _
                    , " filter(crossjoin(values(DimProduct[Englishproductname]) ,values(DimDate[CalendarYear])) " _
                    , " ,DimDate[CalendarYear] = " & SlicerValue & " && [Sum of SalesAmount] > 0) " _
                    , " , [Sum of SalesAmount]),""Sales"", [Sum of SalesAmount])" _
                    , " order by [Sum of SalesAmount] DESC")
                .CommandType = xlCmdDAX
            End With

            'Refresh the connection (it might be hard to find the connection name).
            'If you cannot find it, use Macro recording
            ActiveWorkbook.Connections("ModelConnection_DimProduct").Refresh
        End If
    End Sub
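To make the string handling in the macro easier to follow, here is a small Python sketch of the same slicing logic. It assumes the slicer items arrive as MDX-style unique names such as `[DimDate].[CalendarYear].&[2003]`; that format, and the `TopX` and `Top` names used in the usage note, are assumptions, since the post does not show the raw slicer values.

```python
def year_from_slicer_item(item):
    """Mirror Left(Right(item, 5), 4): keep the last five characters, drop the ']'."""
    return item[-5:][:4]


def top_from_slicer_item(item):
    """Mirror the macro's handling of one- and two-digit Top X values."""
    tail = item[-3:]        # "[5]" for one digit, "10]" for two digits
    if tail[0] == "[":
        return tail[1:2]    # Mid(tail, 2, 1)
    return tail[:2]         # Left(tail, 2)


def build_dax(top_n, year):
    """Join the same text fragments the macro passes to CommandText."""
    parts = [
        "evaluate ",
        " Addcolumns(TOPN(" + top_n + " , ",
        " filter(crossjoin(values(DimProduct[Englishproductname]) ,values(DimDate[CalendarYear])) ",
        " ,DimDate[CalendarYear] = " + year + " && [Sum of SalesAmount] > 0) ",
        ' , [Sum of SalesAmount]),"Sales", [Sum of SalesAmount])',
        " order by [Sum of SalesAmount] DESC",
    ]
    return "".join(parts)
```

Under the assumed format, `top_from_slicer_item("[TopX].[Top].&[10]")` yields `"10"` and `year_from_slicer_item("[DimDate].[CalendarYear].&[2003]")` yields `"2003"`. The fixed-width slicing only works for four-digit years and Top X values of one or two digits, which matches the macro's own limits.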

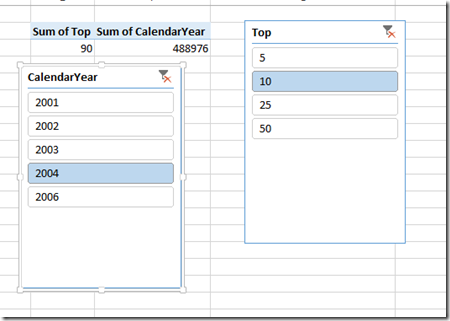

Unfortunately there is no way to react to a slicer click event or anything like it. I decided to use a Worksheet_Change event, which raises another issue: how do we get a worksheet to change on a slicer click?

I decided to create a PivotTable that I connect to the slicers, so that clicking a slicer changes the PivotTable, and to hide it behind the slicers:

Now we end up with this worksheet:

The last thing we need to do is connect the worksheet change event to the procedure we created. I added another procedure to the code for the worksheet. It checks whether something has changed on the TopProducts sheet and, if so, runs the query to refresh the table.

    Private Sub Worksheet_Change(ByVal Target As Range)
        If ActiveSheet.Name = "TopProducts" Then
            Call ExecuteQuery
        End If
    End Sub

And that is all there is to it. This allows us to get a dynamic top X based on slicers. Of course, you can now do whatever you want with the results, such as using them in a graph.

Hope you enjoyed Excel 2013 and all the new features that it brings, like macros against the underlying Model and DAX query tables right in Excel!

• The Office Developer Center published ProjectData - Project 2013 OData service reference on 7/16/2012 (missed when posted):

The Open Data Protocol (OData) reference for Project Server 2013 Preview documents the entity data model (EDM) for the ProjectData service and shows how to use LINQ queries and REST queries to get report data.

Applies to: Project Server 2013 Preview

ProjectData is a WCF Data Service, also known as an OData service. The ProjectData service is implemented with the OData V3 libraries.

The ProjectData service enables REST queries and a variety of OData client libraries to make both online and on-premises queries of reporting data from a Project Web App instance. For example, you can directly use a REST query in web browsers, or use JavaScript to build web apps and client apps for mobile devices, tablets, PCs, and Mac computers. Client libraries are available for JavaScript, the Microsoft .NET Framework, Microsoft Silverlight, Windows Phone 7, and other languages and environments. In Project Server 2013 Preview, the ProjectData service is optimized to create pivot tables, pivot charts, and PowerView reports for business intelligence by using the Excel 2013 Preview desktop client and Excel Services in SharePoint.

For information about a task pane app for Office that runs in Project Professional 2013 Preview and uses JavaScript and JQuery to get reporting data from the ProjectData service, see the Task pane apps in Project Professional section in What's new for developers in Project 2013.

You can access the ProjectData service through a Project Web App URL. The XML structure of the EDM is available through http://ServerName/ProjectServerName/_api/ProjectData/$metadata. To view a feed that contains the collection of projects, for example, you can use the following REST query in a browser: http://ServerName/ProjectServerName/_api/ProjectData/Projects. When you view the webpage source in the browser, you see the XML data for each project, with properties of the Project entity type that the ProjectData service exposes.

The EDM of the ProjectData service is an XML document that conforms to the OData specification. The EDM shows the entities that are available in the reporting data and the associations between entities. The EDM includes the following two Schema elements:

- The Schema element for the ReportingData namespace defines EntityType elements and Association elements:

  - EntityType elements: Each entity type, such as Project and Task, specifies the set of properties, including navigation properties, that are available for that entity. For example, task properties include the task name, task GUID, and project name for that task. Navigation properties define how a query for an entity such as Project is able to navigate to other entities or collections, such as Tasks within a project. Navigation properties define the start role and end role, where roles are defined in an Association element.

  - Association elements: An association relates one entity to another by endpoints. For example, in the Project_Tasks_Task_Project association, Project_Tasks is one endpoint that relates a Project entity to the tasks within that project. Task_Project is the other endpoint, which relates a Task entity to the project in which the task resides.

- The Schema element for the Microsoft.Office.Project.Server namespace includes just one EntityContainer element, which contains the child elements for entity sets and association sets. The EntitySet element for Projects represents all of the projects in a Project Web App instance; a query of Projects can get the collection of projects that satisfy a filter or other options in a query.

An AssociationSet element is a collection of associations that define the primary keys and foreign keys for relationships between entity collections. Although the ~/ProjectData/$metadata query results include the AssociationSet elements, they are used internally by the OData implementation for the ProjectData service, and are not documented.

For a Project Web App instance that contains a large number of entities, such as assignments or tasks, you should limit the data returned in at least one of the following ways. If you don't limit the data returned, the query can take a long time and affect server performance, or you can run out of memory on the client device.

Use a $filter URL option, or use $select to limit the data. For example, the following query filters by project start date and returns only four fields, in order of the project name (the query is all on one line):

    http://ServerName/ProjectServerName/_api/ProjectData/Projects?
        $filter=ProjectStartDate gt datetime'2012-01-01T00:00:00'&
        $orderby=ProjectName&
        $select=ProjectName,ProjectStartDate,ProjectFinishDate,ProjectCost

Get an entity collection by using an association. For example, the following query internally uses the Project_Assignments_Assignment_Project association to get all of the assignments in a specific project (all on one line):

    http://ServerName/ProjectServerName/_api/ProjectData
        /Projects(guid'263fc8d7-427c-e111-92fc-00155d3ba208')/Assignments

Do multiple queries to return data one page at a time, by using the $top and $skip URL options in a loop. For example, the following query gets issues 11 through 20 for all projects, in order of the resource who is assigned to the issue (all on one line):

    http://ServerName/ProjectServerName/_api/ProjectData
        /Issues?$skip=10&$top=10&$orderby=AssignedToResource

For information about query string options such as $filter, $orderby, $skip, and $top, see OData: URI conventions.
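The paging loop described above can be sketched as follows. This is a minimal illustration rather than code from the reference: `fetch_all` and `page_url` are hypothetical helper names, the HTTP call is abstracted behind a caller-supplied `fetch_page` function, and `fake_fetch` merely simulates a server holding 25 issues.

```python
from urllib.parse import quote

# Placeholder service root, as written in the reference.
BASE = "http://ServerName/ProjectServerName/_api/ProjectData"


def page_url(entity_set, skip, top, orderby):
    """Build the URL for one page of an entity set."""
    return f"{BASE}/{entity_set}?$skip={skip}&$top={top}&$orderby={quote(orderby)}"


def fetch_all(fetch_page, entity_set, orderby, page_size=10):
    """Collect every entity by requesting one page at a time.

    `fetch_page` takes a URL and returns the list of entities on that page.
    """
    results, skip = [], 0
    while True:
        page = fetch_page(page_url(entity_set, skip, page_size, orderby))
        results.extend(page)
        if len(page) < page_size:  # a short page means nothing is left
            return results
        skip += page_size


# Stand-in for a real HTTP request: serves slices of 25 numbered issues.
requested = []


def fake_fetch(url):
    requested.append(url)
    options = dict(pair.split("=") for pair in url.split("?")[1].split("&"))
    start, count = int(options["$skip"]), int(options["$top"])
    return list(range(25))[start:start + count]


issues = fetch_all(fake_fetch, "Issues", "AssignedToResource")
```

Keeping a stable `$orderby` across requests matters here: without it, the server is free to return rows in a different order on each call, and pages could overlap or skip entities.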

Note

The ProjectData service does not implement the $links query option or the $expand query option. Excel 2013 Preview internally uses the Association elements and the AssociationSet elements in the entity data model to help create associations between entities, for pivot tables and other constructs.

Chris Webb (@Technitrain) described Consuming OData feeds from Excel Services 2013 in PowerPivot in a 7/24/2012 post:

In yesterday’s post I showed how you could create surveys in the Excel 2013 Web App, and mentioned that I would have liked to consume the data generated by a survey via the new Excel Services OData API but couldn’t get it working. Well, after a good night’s sleep and a bit more tinkering I’ve been successful so here’s the blog post I promised!

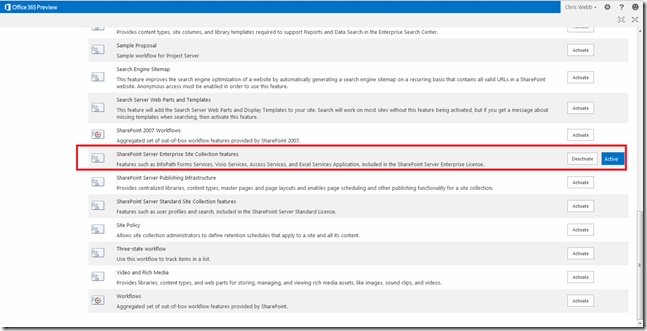

First of all, what did I need to do to get this working? Well, enable Excel Services for a start, duh. This can be done by going to Settings, then Site collection features, and activating SharePoint Server Enterprise Site Collection features:

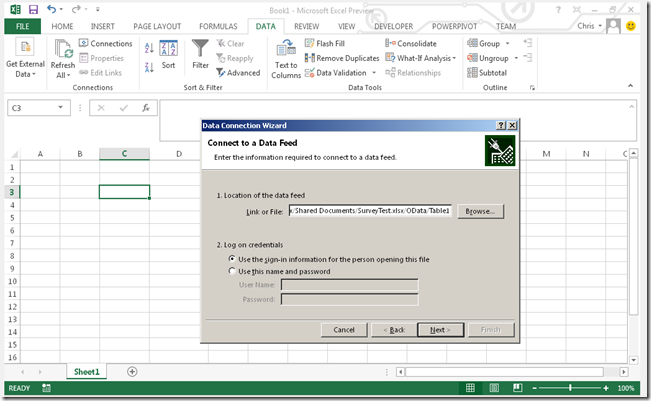

With that done, and making sure that my permissions are all in order, I can go into Excel, start the OData feed import wizard (weirdly, the PowerPivot equivalent didn’t work) and enter the URL for the table in my worksheet (called Table1, helpfully):

Here’s what the URL for the Survey worksheet I created in yesterday’s post looks like:

https://mydomain.sharepoint.com/_vti_bin/ExcelRest.aspx/Shared%20Documents/SurveyTest.xlsx/OData/Table1

(There’s much more detail on how OData requests for Excel Services can be constructed here.)
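As a rough sketch of how such a URL is put together, the Python helper below assembles it from the site, document library, workbook and table names, percent-encoding each path segment. The function name `excel_odata_url` is hypothetical; the URL layout is taken from the example above.

```python
from urllib.parse import quote


def excel_odata_url(site, library, workbook, table):
    """Assemble an Excel Services OData URL for one table in a workbook."""
    # Encode each segment separately so spaces become %20 but '/' separators stay.
    path = "/".join(quote(part) for part in (library, workbook))
    return f"{site}/_vti_bin/ExcelRest.aspx/{path}/OData/{quote(table)}"


url = excel_odata_url(
    "https://mydomain.sharepoint.com",
    "Shared Documents",
    "SurveyTest.xlsx",
    "Table1",
)
```

Encoding the segments is what turns the "Shared Documents" library name into the `Shared%20Documents` seen in the URL above.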

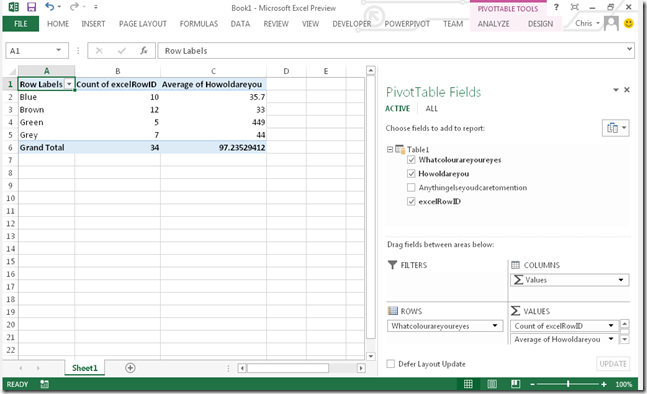

And bingo, the data from my survey is loaded into Excel/PowerPivot and I can query it quite happily. Nothing to it.

In a way it’s a good thing I’m writing about this as a separate post because I’m a big fan of OData and I believe that the Excel Services OData API is a big deal. It’s going to be useful for a lot more than consuming data from surveys: I can imagine it could be used for simple budgeting solutions where managers input values on a number of spreadsheets, which are then pulled together into a PowerPivot model for reporting and analysis; I can also imagine it being used for simple MDM scenarios where dimension tables are held in Excel so users can edit them easily.

There are some obvious dangers with using Excel as a kind of database in this way, but there are also many advantages, most of which I outlined in my earlier discussions of data stores that are simultaneously human readable and machine readable (see here and here). I can see it as being the glue for elaborate multi-spreadsheet-based solutions, although it’s still fairly clunky and some of the ideas I saw in Project Dirigible last year are far in advance of what Excel 2013 offers now. It’s good to see Microsoft giving us an API like this though and I’m sure we’ll see some very imaginative uses for it in the future.

<Return to section navigation list>

Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

•• Haishi Bai (@HaishiBai2010) explained Enterprise Integration Patterns with Windows Azure Service Bus (1) – Dynamic Router in a 7/27/2012 post:

The book Enterprise Integration Patterns by Gregor Hohpe et al. has been sitting on my desk for several years now, and I’ve always planned to write a library that turns those patterns into easy-to-use building blocks for .NET developers. However, the notion of writing a MOM system by myself doesn’t sound quite plausible, even though I’ve taken on some insane projects from time to time.

On the other hand, the idea is so fascinating that I keep looking for opportunities to actually start the project. This is why I was really excited when I first learned about Windows Azure Service Bus. Many of the patterns, including (but not limited to) Message Filter, Content-Based Router, and Competing Consumers, are either supported out of the box or can be implemented with easy customizations. However, I want more. I want ALL the patterns in the book to be supported and easy to apply to applications. So, after a couple of years of waiting, I finally started the project to implement Enterprise Integration Patterns.

About the project

The project is open source and can be accessed here. I’m planning to add more patterns to the project on a monthly basis (as I DO, maybe surprisingly, have other things to attend to). I’d encourage you, my dear readers, to participate in the project if you find it interesting. You can always send me comments and feedback at hbai@microsoft.com. At the time of writing, the code is in its infancy and needs lots of work. For example, one missing part is configuration support, which will make constructing processing pipelines cleaner than it is now. It will be improved over time. Before using the test console application in the solution, you need to modify the app.config file to use your own Windows Azure Service Bus namespace information.

Extended Windows Azure Service Bus constructs

As this is the first post of the series, I’ll spend some time explaining the basic entities used in this project, and then give you an overview of the overall architecture.

Processing Units

Windows Azure Service Bus provides two types of “pipes”, queues and topics, which are fundamental building blocks of a messaging system. Customization points, such as filters, are provided on these pipes so you can control how they are linked into processing pipelines. This works perfectly for many of the simple patterns. However, for more complex patterns, the pipes are linked in more convoluted ways, and very often the connectors among the pipes assume enough significance to be separate entities. In this project I’m introducing the “Processing Unit” as a separate entity to provide greater flexibility and capability in building processing pipelines. A Processing Unit can be linked to a number of inbound channels, outbound channels and control channels. It’s self-contained, so it can be hosted in any process: on-premises or in the cloud, in cloud services or console applications, or wherever else. In most cases you can easily scale out Processing Units by simply hosting more instances. In addition, Processing Units support events for event-driven scenarios.

Channel

Channels are the “pipes” in a messaging system. These are where your messages flow from one place to another. Channels connect Processing Units, forming a Processing Pipeline.

Processing Pipeline

A Processing Pipeline comprises Processing Units and Channels. It provides a management boundary so that you can construct and manage messaging routes more easily. I need to point out that a Processing Pipeline is more a management entity than a runtime entity. Parts of a Processing Pipeline can be (and usually are) scattered across different networks, machines and processes.

Processing Unit Host

A Processing Unit Host, as its name suggests, hosts Processing Units. It manages the lifecycle of the hosted Processing Units and governs overall system resource consumption.

Pattern 1: Dynamic Router

Problem

Message routing can be achieved by a topic/subscription channel with filters that control which recipients get which messages. In other words, messages are broadcast to all potential recipients before they are filtered by receiver-side filters. If we only want to route messages to specific recipients, Message Routers are more efficient because only one message is sent, to the designated recipient. Furthermore, routing criteria may be dynamic, requiring the routing Processing Unit to take in feedback from recipients so that it can make informed decisions when routing new messages.

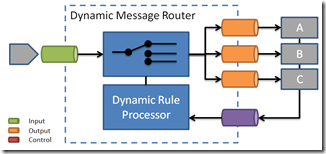

Pattern

The Dynamic Router pattern is well defined in Gregor’s book, so I won’t bother to repeat the book here, other than stealing a diagram from it (see below). What makes this pattern interesting is that the recipients are provided with a feedback channel so that they can report their updated states back to the router to affect future routing decisions. As you can see in the following diagram, the rule processor (as well as its associated storage, which is not shown in the diagram) doesn’t belong to any specific channel, so it should exist as a separate entity.

In my implementation the Dynamic Message Router is implemented as a Processing Unit, which is hosted independently of the message queues. Specifically, the DynamicRouter class, which derives from the root class ProcessingUnit, defines a virtual PickOutputChannel method that returns an index to the channel that is to be selected for the next message. The default implementation of this method doesn’t take feedback into consideration. Instead, it simply selects output channels in a round-robin fashion:

    protected virtual int PickOutputChannel(ChannelMessageEventArgs e)
    {
        mChannelIndex = OutputChannels.Count > 0 ? (mChannelIndex + 1) % OutputChannels.Count : 0;
        return mChannelIndex;
    }

Obviously the method can be overridden in subclasses to implement more sophisticated routing logic. In addition, the Processing Unit can subscribe to MessageReceviedOnChannel on its ControlChannels property to collect feedback from recipients. The architecture of the project doesn’t mandate any feedback format or storage choice; you are pretty much free to implement whatever fits the situation.

Sample Scenario – dynamic load-balancing

In this sample scenario, we’ll implement a Dynamic Message Router that routes messages to the least-pressured recipients using a simple greedy load-balancing algorithm. The pressure of a recipient is defined as its current workload. When the router routes a new message, it always sends it to the recipient with the least workload. This load-balancing scheme often yields better results than round-robin load-balancing. The following screenshot shows the result of running such a scenario. Here we have 309 seconds’ worth of work to be distributed to 2 nodes. With round-robin, this particular work set takes 161 seconds to finish. With greedy load-balancing, the two nodes took 156 seconds to finish all the tasks, which is pretty close to the theoretical minimum processing time of 154.8 seconds. Note that it’s virtually impossible to achieve the optimum result, as the calculation assumes any single task can be split freely without any penalty.

This scenario uses the GreedyDynamicRouter class, which is a subclass of DynamicRouter. The class subscribes to the MessageReceivedOnchannel event of its ControlChannels collection to collect recipient feedback, which is encoded in message headers sent back from the recipients. As I mentioned earlier, the architecture doesn’t mandate any feedback format; the recipients and the router need to negotiate a common format offline, or use the “well known” headers, as the GreedyDynamicRouter implementation does. This implementation keeps all its knowledge about the recipients in memory, but obviously it can be extended to use external storage or distributed caching for the purpose.
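The difference between the two routing strategies can be illustrated with a small, self-contained Python sketch. It is a simplification and not the project's code: it treats a recipient's workload as the total seconds assigned so far, whereas the real router learns the current workload from feedback on its control channels. The task durations are made up for the example.

```python
def round_robin(tasks, node_count):
    """Assign tasks to nodes in rotation and return each node's total load."""
    loads = [0] * node_count
    for i, seconds in enumerate(tasks):
        loads[i % node_count] += seconds
    return loads


def greedy(tasks, node_count):
    """Assign each task to the currently least-loaded node."""
    loads = [0] * node_count
    for seconds in tasks:
        target = loads.index(min(loads))  # ties go to the lowest index
        loads[target] += seconds
    return loads


tasks = [9, 2, 8, 3, 7, 1]              # made-up task durations in seconds
rr_finish = max(round_robin(tasks, 2))  # time until the busiest node is done
greedy_finish = max(greedy(tasks, 2))
lower_bound = sum(tasks) / 2            # assumes tasks could be split freely
```

For this work set round-robin finishes after 24 seconds and the greedy assignment after 17, against a lower bound of 15. Like the figures in the post, the greedy result approaches the bound without reaching it because tasks cannot be split.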

Summary

In this post we had a brief overview of the overall architecture of the Enterprise Integration Patterns project, and we also went through the first implemented pattern, Dynamic Router. We are starting with the harder patterns in this project so that we can validate and improve the overall infrastructure during the earlier phases of the project. At this point I haven’t decided which pattern to go after next, but message transformation patterns and other routing patterns are high on the list. See you next time!

•• Vittorio Bertocci (@vibronet, pictured in the middle below) described Identity & Windows Azure AD at TechEd EU Channel9 Live in a 7/27/2012 post:

Is it a month already? Oh boy, apparently I am in full time-warp mode…

Anyhow: as you’ve read, about one month ago I flew to Amsterdam for a very brief 2-day stint at TechEd Europe (pic or it didn’t happen). The morning of the day I was going to fly back, I was walking by the O’Reilly booth… which happened to be in front of the Channel9 Live stage.

My good friend Carlo spotted me, and invited me for a totally impromptu chat for the opening of the Channel9 Live broadcast from TechEd Europe! Who would have thought; luckily I shaved that morning. I enjoyed those ~30 minutes immensely. Carlo and Joey are fantastic anchors, and very smart guys who asked all the right questions. If you want a high-level overview of Windows Azure AD (say a 100 level) and you don’t mind my heavy accent, then you’ll be happy to know that today the recording of the event just showed up on Channel9’s home page!

If, when watching the video, you get the impression that it’s just a chat between old friends rather than an interview, that’s because that is exactly what it is.

•• See the Windows Azure and Office 365 article by Scott Guthrie (@scottgu) in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

Manu Cohen-Yashar (@ManuKahn) described Running WIF Relying parties in Windows Azure in a 7/22/2012 post:

When running in a multi-server environment like Windows Azure, you must make sure the cookies generated by WIF are encrypted with the same pair of keys so that all servers can open them.

Encrypt cookies using RSA

In Windows Azure, the default cookie encryption mechanism (which uses DPAPI) is not appropriate because each instance has a different key. This would mean that a cookie created by one web role instance would not be readable by another web role instance. This could lead to service failures, effectively causing denial of service. To solve this problem you should use a cookie encryption mechanism that uses a key shared by all the web role instances. The following code, written in global.asax, shows how to replace the default SessionSecurityTokenHandler object and configure it to use the RsaEncryptionCookieTransform class:

    void Application_Start(object sender, EventArgs e)
    {
        FederatedAuthentication.ServiceConfigurationCreated += OnServiceConfigurationCreated;
    }

    private void OnServiceConfigurationCreated(object sender, ServiceConfigurationCreatedEventArgs e)
    {
        // Replace the default DPAPI-based transforms with RSA transforms that use the
        // service certificate, so every role instance can read the session cookie.
        List<CookieTransform> sessionTransforms = new List<CookieTransform>(new CookieTransform[]
        {
            new DeflateCookieTransform(),
            new RsaEncryptionCookieTransform(e.ServiceConfiguration.ServiceCertificate),
            new RsaSignatureCookieTransform(e.ServiceConfiguration.ServiceCertificate)
        });
        SessionSecurityTokenHandler sessionHandler = new SessionSecurityTokenHandler(sessionTransforms.AsReadOnly());
        e.ServiceConfiguration.SecurityTokenHandlers.AddOrReplace(sessionHandler);
    }

Next, upload the certificate to the hosted service and declare it in the LocalMachine certificate store of the running role.

Failing to do the above will generate the following exception when running a relying party in Windows Azure: "InvalidOperationException: ID1073: A CryptographicException occurred when attempting to decrypt the cookie using the ProtectedData API". It means that decryption with DPAPI failed, which makes sense because the DPAPI key is tied to the machine it is running on.

After changing the encryption policy (like so), make sure to delete all existing cookies; otherwise you will get the following exception: "CryptographicException: ID1014: The signature is not valid. The data may have been tampered with." It means that an old DPAPI cookie is being processed by the new RSA policy, which will obviously fail.
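The shared-key requirement can be seen in miniature with plain RSA from the .NET base class library. This is only a sketch under stated assumptions, not WIF's actual implementation: `SharedKeyCookieDemo`, `NewKey`, `Protect`, and `TryUnprotect` are hypothetical names, and the payload is encrypted directly with RSA-OAEP for brevity, so it must stay small. Each `NewKey()` call stands in for a key that only one role instance holds, much like a per-machine DPAPI key.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: illustrates why every instance needs the same key pair.
public static class SharedKeyCookieDemo
{
    // Generates a fresh 2048-bit RSA key pair (stand-in for one instance's private key).
    public static RSAParameters NewKey()
    {
        using (RSA rsa = RSA.Create())
        {
            rsa.KeySize = 2048;
            return rsa.ExportParameters(true);
        }
    }

    // Encrypts a small cookie payload with the public half of the given key.
    public static byte[] Protect(RSAParameters key, string payload)
    {
        using (RSA rsa = RSA.Create())
        {
            rsa.ImportParameters(key);
            return rsa.Encrypt(Encoding.UTF8.GetBytes(payload), RSAEncryptionPadding.OaepSHA1);
        }
    }

    // Tries to decrypt the cookie; returns false when this key cannot open it.
    public static bool TryUnprotect(RSAParameters key, byte[] cookie, out string payload)
    {
        using (RSA rsa = RSA.Create())
        {
            rsa.ImportParameters(key);
            try
            {
                payload = Encoding.UTF8.GetString(rsa.Decrypt(cookie, RSAEncryptionPadding.OaepSHA1));
                return true;
            }
            catch (CryptographicException)
            {
                payload = string.Empty;
                return false;
            }
        }
    }
}
```

A cookie protected with a key that every instance has imported can be opened by any of them. A cookie protected with a key that only one instance holds cannot be opened elsewhere, which is analogous to the DPAPI failure described above.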

Richard Seroter (@rseroter) described Installing and Testing the New Service Bus for Windows in a 7/17/2012 post (missed when published):

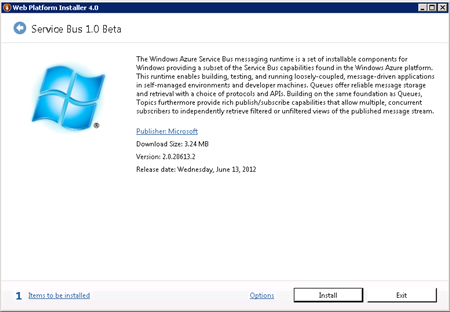

Yesterday, Microsoft kicked out the first public beta of the Service Bus for Windows [Server] software. You can use this to install and maintain Service Bus queues and topics in your own data center (or laptop!). See my InfoQ article for a bit more info. I thought I’d take a stab at installing this software on a demo machine and trying out a scenario or two.

To run the Service Bus for Windows, you need a Windows Server 2008 R2 (or later) box, SQL Server 2008 R2 (or later), IIS 7.5, PowerShell 3.0, .NET 4.5, and a pony. Ok, not a pony, but I wasn’t sure if you’d read the whole list. The first thing I did was spin up a server with SQL Server and IIS.

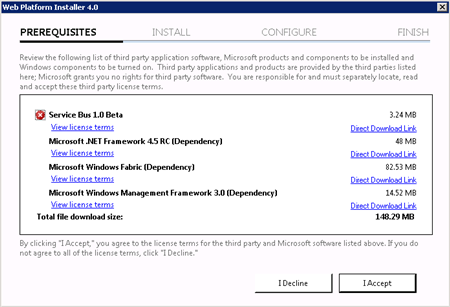

Then I made sure that I installed SQL Server 2008 R2 SP1. Next, I downloaded the Service Bus for Windows executable from the Microsoft site. Fortunately, this kicks off the Web Platform Installer, so you do NOT have to manually go hunt down all the other software prerequisites.

The Web Platform Installer checked my new server and saw that I was missing a few dependencies, so it nicely went out and got them.

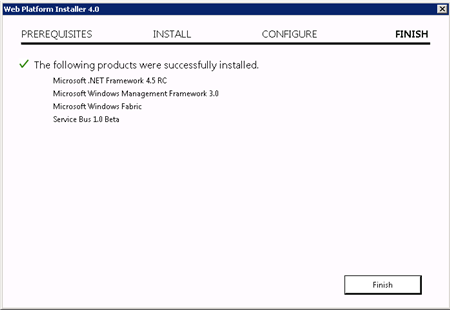

After the obligatory server reboots, I had everything successfully installed.

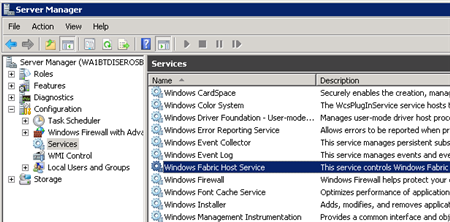

I wanted to see what this bad boy installed on my machine, so I first checked the Windows Services and saw the new Windows Fabric Host Service.

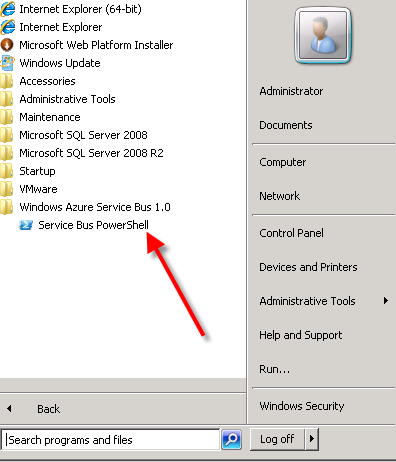

I didn’t have any databases installed in SQL Server yet, no sites in IIS, but did have a new Windows permissions Group (WindowsFabricAllowedUsers) and a Service Bus-flavored PowerShell command prompt in my Start Menu.

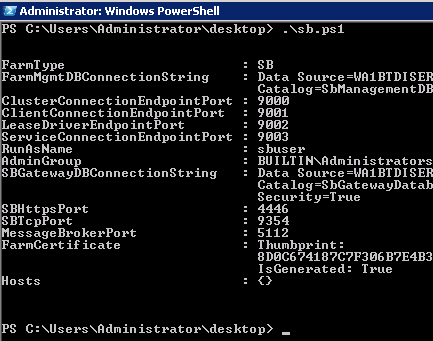

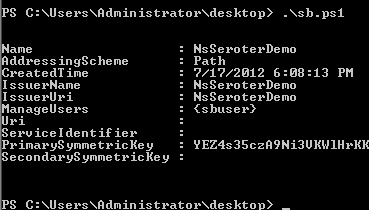

Following the configuration steps outlined in the Help documents, I executed a series of PowerShell commands to set up a new Service Bus farm. The first command which actually got things rolling was New-SBFarm:

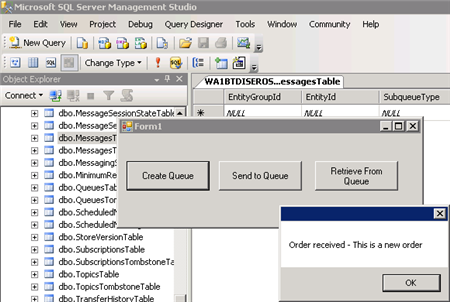

    $SBCertAutoGenerationKey = ConvertTo-SecureString -AsPlainText -Force -String [new password used for cert]

    New-SBFarm -FarmMgmtDBConnectionString 'Data Source=.;Initial Catalog=SbManagementDB;Integrated Security=True' `
        -PortRangeStart 9000 -TcpPort 9354 `
        -RunAsName 'WA1BTDISEROSB01\sbuser' -AdminGroup 'BUILTIN\Administrators' `
        -GatewayDBConnectionString 'Data Source=.;Initial Catalog=SbGatewayDatabase;Integrated Security=True' `
        -CertAutoGenerationKey $SBCertAutoGenerationKey `
        -ContainerDBConnectionString 'Data Source=.;Initial Catalog=ServiceBusDefaultContainer;Integrated Security=True';

When this finished running, I saw the confirmation in the PowerShell window:

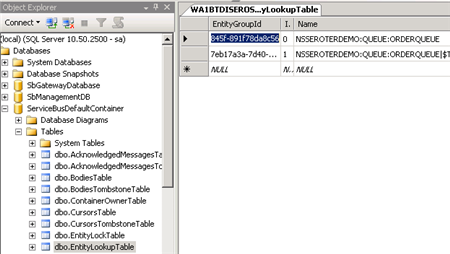

But more importantly, I now had databases in SQL Server 2008 R2.

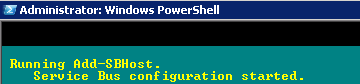

Next up, I needed to actually create a Service Bus host. According to the docs about the Add-SBHost command, the Service Bus farm isn’t considered running, and can’t offer any services, until a host is added. So, I executed the necessary PowerShell command to inflate a host.

    $SBCertAutoGenerationKey = ConvertTo-SecureString -AsPlainText -Force -String [new password used for cert]
    $SBRunAsPassword = ConvertTo-SecureString -AsPlainText -Force -String [password for sbuser account];

    Add-SBHost -FarmMgmtDBConnectionString 'Data Source=.;Initial Catalog=SbManagementDB;Integrated Security=True' `
        -RunAsPassword $SBRunAsPassword `
        -CertAutoGenerationKey $SBCertAutoGenerationKey;

A bunch of stuff started happening in PowerShell …

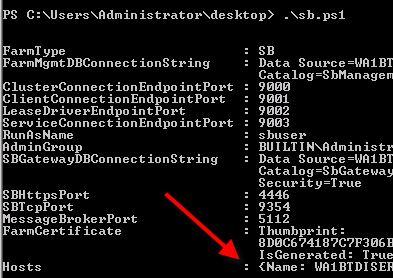

… and then I got the acknowledgement that everything had completed, and I now had one host registered on the server.

I also noticed that the Windows Service (Windows Fabric Host Service), which was disabled before, was now in a Started state. Next, I needed a new namespace for my Service Bus host. The New-SBNamespace command generates the namespace that provides segmentation between applications. The documentation said that “ManageUser” wasn’t required, but my script wouldn’t work without it, so I added the user that I created just for this demo.

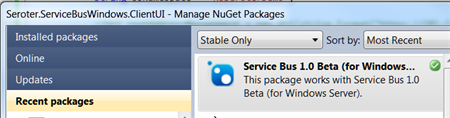

    New-SBNamespace -Name 'NsSeroterDemo' -ManageUser 'sbuser';

To confirm that everything was working, I ran the Get-SbMessageContainer command and saw an active database server returned. At this point, I was ready to try to build an application. I opened Visual Studio and went to NuGet to add the package for the Service Bus. The name of the SDK package mentioned in the docs seems wrong; I found the entry under Service Bus 1.0 Beta.

In my first chunk of code, I created a new queue if one didn’t exist.

    //define variables
    string servername = "WA1BTDISEROSB01";
    int httpPort = 4446;
    int tcpPort = 9354;
    string sbNamespace = "NsSeroterDemo";

    //create SB uris
    Uri rootAddressManagement = ServiceBusEnvironment.CreatePathBasedServiceUri("sb", sbNamespace, string.Format("{0}:{1}", servername, httpPort));
    Uri rootAddressRuntime = ServiceBusEnvironment.CreatePathBasedServiceUri("sb", sbNamespace, string.Format("{0}:{1}", servername, tcpPort));

    //create NS manager
    NamespaceManagerSettings nmSettings = new NamespaceManagerSettings();
    nmSettings.TokenProvider = TokenProvider.CreateWindowsTokenProvider(new List<Uri>() { rootAddressManagement });
    NamespaceManager namespaceManager = new NamespaceManager(rootAddressManagement, nmSettings);

    //create factory
    MessagingFactorySettings mfSettings = new MessagingFactorySettings();
    mfSettings.TokenProvider = TokenProvider.CreateWindowsTokenProvider(new List<Uri>() { rootAddressManagement });
    MessagingFactory factory = MessagingFactory.Create(rootAddressRuntime, mfSettings);

    //check to see if queue already exists
    if (!namespaceManager.QueueExists("OrderQueue"))
    {
        MessageBox.Show("queue is NOT there ... creating queue");
        //create the queue
        namespaceManager.CreateQueue("OrderQueue");
    }
    else
    {
        MessageBox.Show("queue already there!");
    }

I ran this as my “sbuser” account, directly on the Windows Server that had the Service Bus installed (my local laptop wasn’t part of the same domain as the server, so credentials would have been messy), and successfully created a new queue. I confirmed this by looking at the relevant SQL Server database tables.

Next I added code that sends a message to the queue.

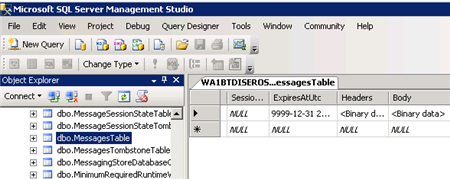

    //write message to queue
    MessageSender msgSender = factory.CreateMessageSender("OrderQueue");
    BrokeredMessage msg = new BrokeredMessage("This is a new order");
    msgSender.Send(msg);
    MessageBox.Show("Message sent!");

Executing this code results in a message getting added to the corresponding database table.

Sweet. Finally, I wrote the code that pulls (and deletes) a message from the queue.

    //receive message from queue
    MessageReceiver msgReceiver = factory.CreateMessageReceiver("OrderQueue");
    string order = string.Empty;
    BrokeredMessage rcvMsg = msgReceiver.Receive();
    if (rcvMsg != null)
    {
        order = rcvMsg.GetBody<string>();
        //call complete to remove from queue
        rcvMsg.Complete();
    }
    MessageBox.Show("Order received - " + order);

When this block ran, the application showed me the contents of the message, and upon looking at the MessagesTable again, I saw that it was empty (because the message had been processed).
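The receive-then-Complete sequence above follows what the Service Bus calls peek-lock: receiving a message only locks it, and it leaves the queue once the receiver calls Complete. The in-memory sketch below illustrates those semantics under simplifying assumptions. `PeekLockQueue` and its members are hypothetical names rather than Service Bus APIs, there is no lock timeout, and abandoned messages simply go to the back of the queue.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory stand-in for a queue with peek-lock semantics.
public sealed class PeekLockQueue
{
    private readonly Queue<string> _available = new Queue<string>();
    private readonly Dictionary<Guid, string> _locked = new Dictionary<Guid, string>();

    // Messages still stored by the queue, whether available or locked by a receiver.
    public int Count
    {
        get { return _available.Count + _locked.Count; }
    }

    public void Send(string body)
    {
        _available.Enqueue(body);
    }

    // Locks the next message and hands back a token; the message is NOT deleted yet.
    public bool TryReceive(out Guid lockToken, out string body)
    {
        if (_available.Count == 0)
        {
            lockToken = Guid.Empty;
            body = string.Empty;
            return false;
        }
        body = _available.Dequeue();
        lockToken = Guid.NewGuid();
        _locked[lockToken] = body;
        return true;
    }

    // Removes the locked message for good (the step that empties the table).
    public bool Complete(Guid lockToken)
    {
        return _locked.Remove(lockToken);
    }

    // Releases the lock so the message can be received again.
    public bool Abandon(Guid lockToken)
    {
        string body;
        if (!_locked.TryGetValue(lockToken, out body))
        {
            return false;
        }
        _locked.Remove(lockToken);
        _available.Enqueue(body);
        return true;
    }
}
```

In this model, a receiver that crashes before calling `Complete` leaves the message in the store, which is why the message table only emptied after the order was processed and completed.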

So that’s it. From installation to development in a few easy steps. Having the option to run the Service Bus on any Windows machine will introduce some great scenarios for cloud providers and organizations that want to manage their own message broker.

Abhishek Lal described Service Bus [for Windows Server] Symmetry in a 7/16/2012 post (missed when published):

Whether your application runs in the cloud or on premises, it often needs to integrate with other applications or other instances of the application. Windows Azure Service Bus provides messaging technologies including Relay and Brokered messaging to achieve this. You also have the flexibility of using the Azure Service Bus (multi-tenant PAAS) and/or Service Bus 1.0 (for Windows Server). This post takes a look at both these hosting options from the application developer perspective.

The key principle in providing these offerings is to enable applications to be developed, hosted and managed consistently between cloud service and on-premise hosted environments. Most features in Service Bus are available in both environments, and only those that are clearly not applicable to a certain hosting environment are not symmetric. Applications can be written against the common set of features and then moved between these environments with configuration-only changes.

Overview

The choice between Azure Service Bus and Service Bus on-premise can be driven by several factors. Understanding the differences between these offerings will help guide the right choice and produce the best results. Azure Service Bus is a multi-tenant cloud service, which means that the service is shared by multiple users. Consuming this service requires no administration of the hosting environment, just provisioning through your subscriptions. With Service Bus on-premise, you install the same service bits on your own machines and thus manage tenancy and the hosting environment yourself.

Figure 1: Windows Azure Service Bus (PAAS) and Service Bus On-premise

Development

To use any of the Service Bus features, Windows applications can use Windows Communication Foundation (WCF). For queues and topics, Windows applications can also use a Service Bus-defined Messaging API. Queues and topics can be accessed via HTTP as well, and to make them easier to use from non-Windows applications, Microsoft provides SDKs for Java, Node.js, and other languages.

All of these options will be symmetric between Azure Service Bus and Service Bus 1.0, but given the Beta nature of the release, this symmetry is not yet available. The key considerations are called out below:

Similarities

- The same APIs and SDKs can be used to target Azure Service Bus and Service Bus on-premise

- Configuration-only changes can retarget an application to a different environment

- The same application can target both environments
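As one way to picture a configuration-only switch, the sketch below derives the messaging endpoint entirely from settings, so the calling code stays the same in both environments. Everything here is an assumption made for illustration: the class name, the setting keys (`ServiceNamespace`, `OnPremisesHost`, `TcpPort`), and the address shapes. The on-premise form (host and port, with the namespace as the path) mirrors the path-based URIs in Richard Seroter's sample earlier in this post; the cloud form assumes the usual `<namespace>.servicebus.windows.net` host.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical helper: picks the Service Bus address from configuration alone.
public static class ServiceBusEndpoints
{
    public static Uri FromSettings(IDictionary<string, string> settings)
    {
        string serviceNamespace = settings["ServiceNamespace"];

        string host;
        if (settings.TryGetValue("OnPremisesHost", out host) && !string.IsNullOrEmpty(host))
        {
            // On-premise (assumed shape): sb://<host>:<port>/<namespace>
            int port = int.Parse(settings["TcpPort"], CultureInfo.InvariantCulture);
            return new UriBuilder("sb", host, port, "/" + serviceNamespace).Uri;
        }

        // Cloud (assumed shape): sb://<namespace>.servicebus.windows.net/
        return new UriBuilder("sb", serviceNamespace + ".servicebus.windows.net").Uri;
    }
}
```

Supplying `OnPremisesHost` and `TcpPort` targets a self-hosted farm, and omitting them targets the cloud service. Credentials would still differ between the two, as the Differences list notes.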

Differences

- Identity and authentication methods vary, which affects application configuration

- Latency, throughput and other environmental differences can affect application performance since these are directly tied to the physical hardware that the service is hosted in

- Quotas vary between environments (details here)

It’s important to understand that there is only one instance of Azure Service Bus that is available as a PAAS service, but several on-premise environments may exist, either through third-party hosters or self-managed IT departments. Since the service is continually evolving with new features and improvements, this is a significant factor in deciding which features to consume based on the environment targeted. Below is a conceptual timeline of how the features will be released (note this does NOT track to any calendar dates):

Figure 2: Client SDK release and compatibility timeline

Application Considerations

The key considerations from an application perspective can be driven by business or engineering needs. The key similarities and differences from this perspective are listed below:

Similarities

- Namespaces are the unit for identity and management of your artifacts

- Queues/Topics are contained within a Namespace

- Claims based permissions can be managed on a per-entity basis

- Size constraints are applied on Queues/Topics

Differences

- Relay messaging is currently unavailable on Service Bus on-premise

- Service Registry is currently unavailable on Service Bus on-premise

- The token-based identity provider for the Azure service is ACS; for on-premise, it is AD and self-signed tokens

- SQL Server is the storage mechanism that is provisioned and managed by you for on-premises

- Latency and throughput of messages vary between the environments

- The maximum allowable values for message size and entity size vary

Do give the Service Bus 1.0 Beta a try. Following are some additional resources:

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

•• See the Windows Azure and Office 365 article by Scott Guthrie (@scottgu) in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

• Kristian Nese (@KristianNese) continued his series with Windows Azure Services for Windows Server - Part 2 on 7/26/2012:

In Part 1, we introduced Windows Azure Services for Windows Server.

In Part 2, we will take a closer look at the experience of this solution, which is already running in Windows Azure, and at the changes that were announced in early June. The aim is to help you better understand Windows Azure in general and use it in your strategy, and to explain the long-term goal of a common experience in cloud computing, whether it's on-premise or public.

Windows Azure was PaaS-only until recently.

I have blogged several times about Windows Azure and that it's PaaS and not IaaS, even with the VM Role in mind.

A bit of history:

In the very beginning, in 2007, Windows Azure supported only ASP.NET for the front end and .NET for the back end. It was ideal for running Microsoft-based code in the cloud and taking advantage of Microsoft's scalable datacenters. The only thing the developer had to focus on was writing code.

Based on feedback from customers, Microsoft had to open up a bit to support various workloads. People wanted to move to cloud computing but didn’t have the time or couldn’t spare the effort needed to make the transition. And of course, cost was a huge question as well.