Windows Azure and Cloud Computing Posts for 5/15/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Access Control, Identity and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database, Federations and Reporting

Clint Edmonson (@clinted) answered Why can’t I connect to my SQL Azure database? in a 5/15/2012 post to the US DPE Azure Connection blog:

We’ve been running our Azure Hands On Experience labs throughout the US Central Region for the last month or so, and the SQL Azure exercises require the user to connect to a newly created SQL Azure database from their development workstation.

Inevitably, at least one machine is configured with one or more settings that prevent a connection from succeeding, either from SQL Server Management Studio or Visual Studio’s Data Connections tree in Server Explorer (my preferred method btw).

Here are the troubleshooting tips we’ve been using in the labs:

1. Double check your software versions.

If you’re using SQL Server Management Studio, it needs to be SQL Server Management Studio 2008 R2 (the free Express or full edition). If you go to the Help | About menu, the dialog will say R2 in the product logo.

If you want to connect directly from inside Visual Studio 2010, make sure you have Visual Studio 2010 Service Pack 1 (SP1) installed as well. If you go to the Help | About menu item in Visual Studio 2010, the popup dialog will say SP1 immediately after the product version number. Also, make sure you have the latest Windows Azure SDK installed. It will set up the hooks for Azure database connectivity from within Visual Studio 2010.

2. Double check your SQL Server Native Client Configuration

If you’re getting an error that mentions named pipes, it means the SQL client networking protocol settings on your development machine are set to connect to servers using the named pipes protocol by default. This won’t work with SQL Azure because named pipes is a network protocol optimized for LAN traffic. All connections to SQL Azure use TCP/IP, so you need TCP/IP enabled.

To do this, run SQL Server Configuration Manager: go to your Start menu and select All Programs | SQL Server 2008 R2 | Configuration Tools | SQL Server Configuration Manager.

You need to make sure the TCP/IP protocol is enabled under the SQL Native Client 10.0 Configuration tree item. (On 64-bit machines you might have both 32-bit and 64-bit tree branches; be sure to enable TCP/IP in both.) It’s generally a good idea to give TCP/IP a higher priority than named pipes, as TCP/IP is the most common network protocol for SQL servers these days. This MSDN article has more details about the configuration options.

3. Check your client side firewall rules.

SQL Servers communicate over TCP/IP through port 1433. The Windows Firewall software that shipped with XP SP2, Vista and Windows 7 is pretty good about asking you when a new port is about to be used and letting you choose whether you want it opened. If you're using another client-side firewall solution, be sure to enable port 1433 for outbound connections. If your firewall software is configured on an application-by-application basis, set up a rule for SQL Server Management Studio or Visual Studio as appropriate.

4. Check the SQL Azure server firewall rules

When you created your SQL Azure database, you were asked to set up firewall rules to allow outside connections to the server. When you add a rule, the dialog box lets you set a range of IP addresses. It also shows your current IP address. In most cases your current IP address is chosen from a bank of addresses on the network you’re connected to.

To check these settings, log into your Windows Azure account, navigate to the databases area, and choose the database you’re trying to connect to from the tree on the left side of the screen. You should see the dropdown option to view and edit the Firewall Rules for your database server. Add or edit a rule to enable access for the development machine you’re trying to connect with.

At a minimum, you should add your current IP address as it is shown at the bottom. The hands-on labs recommend adding a large enough address range so that if you have to reboot and end up acquiring a slightly different address, you’ll still be able to connect without having to add another rule. You can see that I did this here.

Note: The address shown for Your current IP address is the public address that the server sees your internet traffic coming from. In the big chain of firewalls between you and your SQL Azure database, this is the address as it will be recognized from the network you currently reside in. It may or may not match the actual IP address your individual machine says it’s using. That’s OK. What it’s seeing is the most public internet facing firewall that you’re going through to get to the database. That’s all the server needs to know to allow you in.
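To make the address-range idea in step 4 concrete, here is a minimal JavaScript sketch of how an inclusive firewall range can be evaluated. It is not the SQL Azure portal's actual logic; the function names `ipv4ToInt` and `isInFirewallRange` are hypothetical, and the addresses are documentation-only examples.

```javascript
// Convert a dotted-quad IPv4 address to an unsigned 32-bit integer.
function ipv4ToInt(address) {
  const parts = address.split(".");
  if (parts.length !== 4) {
    throw new Error("Not an IPv4 address: " + address);
  }
  return parts.reduce(function (acc, part) {
    const octet = Number(part);
    if (!/^\d+$/.test(part) || octet > 255) {
      throw new Error("Invalid octet in address: " + address);
    }
    return acc * 256 + octet;
  }, 0);
}

// Return true when `address` lies inside the inclusive range [start, end].
function isInFirewallRange(address, start, end) {
  const value = ipv4ToInt(address);
  return value >= ipv4ToInt(start) && value <= ipv4ToInt(end);
}

// Hypothetical rule covering a whole /24 so a re-leased address still matches.
console.log(isInFirewallRange("203.0.113.57", "203.0.113.0", "203.0.113.255")); // true
console.log(isInFirewallRange("198.51.100.4", "203.0.113.0", "203.0.113.255")); // false
```

A wider range survives reboots and address changes, at the cost of admitting every other machine that shares that block of public addresses.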

5. Check your corporate firewall rules

If you still can’t connect, all of the above items check out, and you’re currently connected to a corporate network, chances are your corporate IT folks have another firewall in place to keep the baddies out and to keep you from accidentally letting them in.

If you’ve got an alternative method to connect to the internet such as a wireless hotspot or a guest network, try connecting to it and see if your attempt to reach your SQL Azure server succeeds. If so, then you know there’s a corporate firewall blocking you.

You’ll have to check with your network admins to see if they allow outbound TCP/IP connections on port 1433. Chances are you’ll have to do some paperwork to get that set up.

These are the troubleshooting steps we’ve found fruitful during labs. If you’re still stuck beyond this point, get a colleague to try connecting to your server from their machine. If they succeed, then you know there’s a configuration difference. It’s just a matter of finding the difference.
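A quick way to tell a firewall problem (steps 3 and 5) apart from a client configuration problem is to test whether an outbound TCP connection to port 1433 can be opened at all. The following is a rough Node.js sketch, not part of the original lab material; `canReachTcp` is a hypothetical helper and the server name in the usage comment is a placeholder.

```javascript
const net = require("net");

// Try to open a TCP connection; resolve true on success, false on error or timeout.
function canReachTcp(host, port, timeoutMs) {
  return new Promise(function (resolve) {
    const socket = net.connect({ host: host, port: port });
    let settled = false;
    function finish(result) {
      if (settled) { return; }
      settled = true;
      socket.destroy(); // release the socket whatever the outcome
      resolve(result);
    }
    socket.setTimeout(timeoutMs);
    socket.once("connect", function () { finish(true); });
    socket.once("timeout", function () { finish(false); });
    socket.once("error", function () { finish(false); });
  });
}

// Placeholder server name; substitute the name of your own SQL Azure server.
// canReachTcp("yourserver.database.windows.net", 1433, 5000).then(function (ok) {
//   console.log(ok ? "Port 1433 is reachable" : "Port 1433 is blocked or unreachable");
// });
```

If the probe fails on the corporate network but succeeds from a hotspot or guest network, a firewall between you and the internet is the likely culprit. A successful probe says nothing about the SQL Azure server firewall rules in step 4, which are applied after the connection is made.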

<Return to section navigation list>

MarketPlace DataMarket, Social Analytics, Big Data and OData

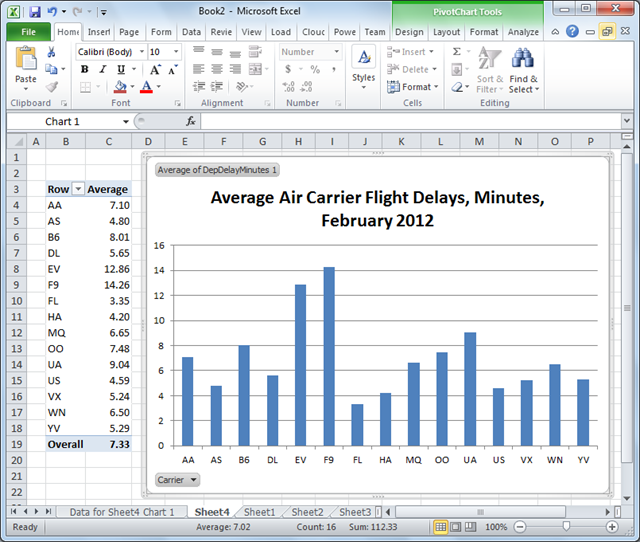

My (@rogerjenn) updated Accessing US Air Carrier Flight Delay DataSets on Windows Azure Marketplace DataMarket and “DataHub” added an Exporting Data to Excel PowerPivot Tables and Charts section on 5/16/2012:

Exporting Data to Excel PowerPivot Tables and Charts

My two-year-old Enabling and Using the OData Protocol with SQL Azure of 3/26/2011 explained “how to enable the OData protocol for specific SQL Azure instances and databases, query OData sources, and display formatted Atom 1.0 data from the tables in Internet Explorer 8 and Excel 2010 PowerPivot tables.” The post also provided a “comparison of PowerPivot for Excel and Tableau (see the end of the “Working with OData Feeds in PowerPivot for Excel 2010” section.)”

My later Using the Microsoft Codename “Social Analytics” API with Excel PowerPivot and Visual Studio 2010 of 11/2/2011 explained how to open an empty PowerPivot worksheet and connect to a dataset provided by the Windows Azure Marketplace DataMarket.

This example uses the Explorer’s Export to Excel 2010’s PowerPivot feature to create a worksheet and chart of average departure delays by air carrier for a particular month. This procedure assumes that you have a MarketPlace account and a free subscription to the US Air Carrier Flight Delays dataset.

1. Download and install the x86 or x64 version of Excel PowerPivot from the download page to match the bitness of your Office 2010 installation.

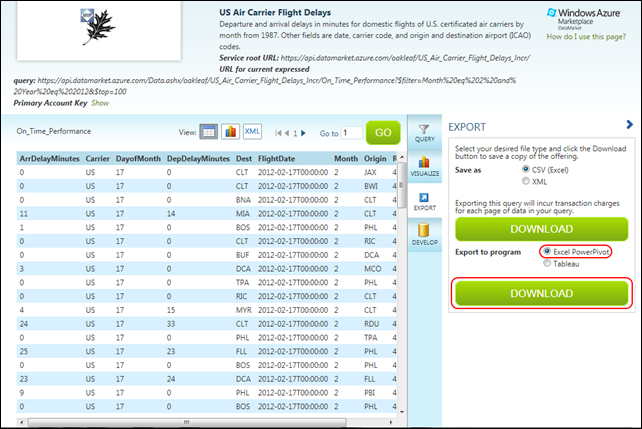

2. Open the US Air Carrier Flight Delays dataset, sign into the DataMarket with an account that has a subscription to the dataset, click the Explore This Dataset link, specify 2 as the Month and 2012 as the Year for optional parameters, and click the Export button to open the Export pane:

Note: Specifying a single month and year limits the spreadsheet to about 500,000 rows.

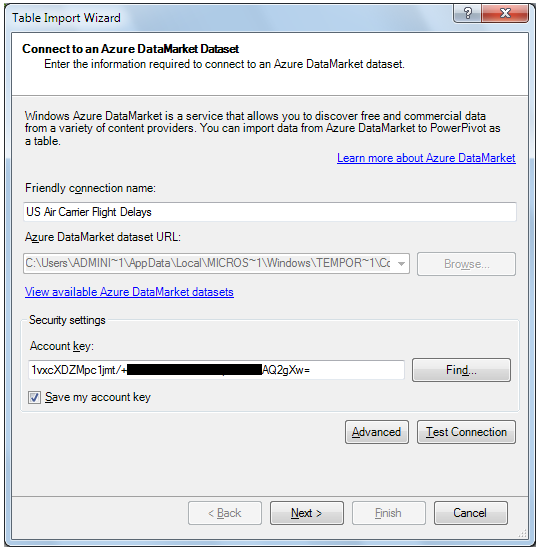

3. Accept the Excel PowerPivot option, click the lower Download button, and click Open when asked if you want to open or save the ServiceQuery.atomsvc file from datamarket.azure.com to open Excel’s Table Import Wizard dialog. Replace the default Friendly Connection Name with US Air Carrier Flight Delays for this example, copy your Account Key from Notepad and paste it into the Account Key text box, and mark the Save My Account Key check box:

Note: If you don’t have the Account Key copy in Notepad, click the Find button to open the Account Keys page, select your Account Key, and then copy and paste it to the text box.

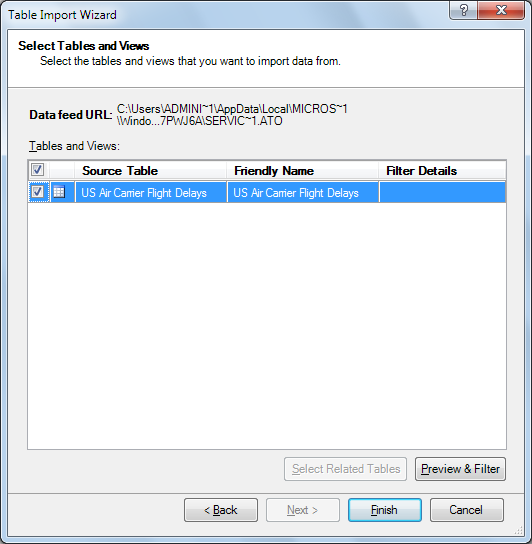

4. Click Next to open the Wizard’s Select Tables and Views dialog:

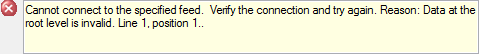

Note: If you receive the following message:

the account key you entered probably is for a non-administrator user. Log in with an administrative account, and repeat the process in the preceding note.

5. Click the Preview & Filter button to display the Preview Selected Table dialog. Clear the DayofMonth, Month, RowId and Year check boxes to omit the columns from the PowerPivot worksheet:

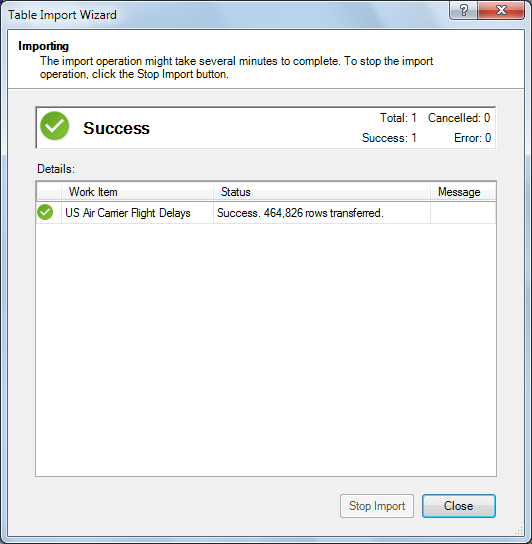

6. Click OK to close the dialog and click Finish to begin downloading data. The Status column displays the number of rows downloaded:

7. After all rows download, click Close to dismiss the Wizard and view the data in a worksheet:

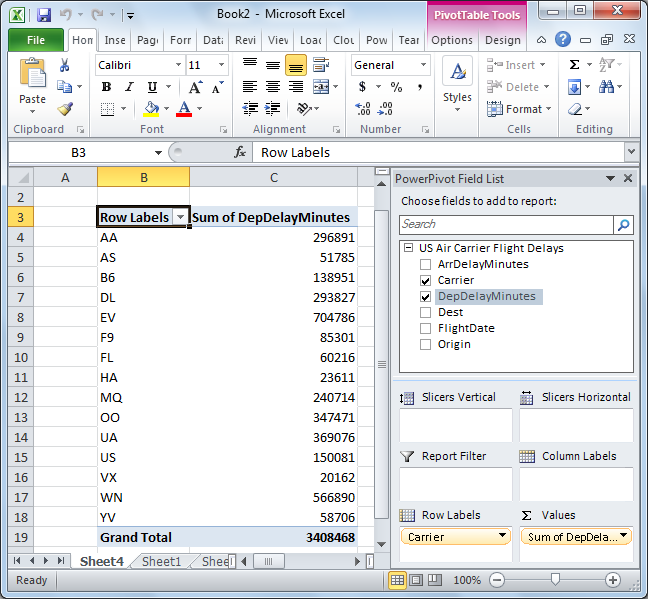

8. Open the PivotTable gallery and select Pivot table to open a new sheet with a PowerPivot Field List pane. Mark the Carrier and DepDelayMinutes check boxes to add Row Labels for Carriers and default Sum of DepDelayMinutes values:

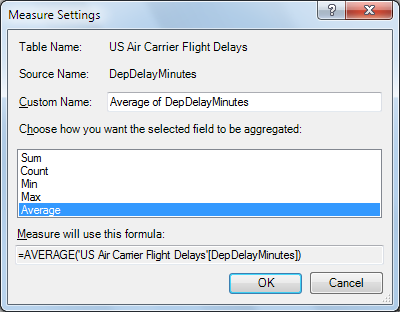

9. Click the arrow in the Values text box’s Sum of DepDelay… item, select Edit Measure to open the Measure Settings dialog and select Average as the aggregation function:

10. Click OK to close the dialog and display the average values:

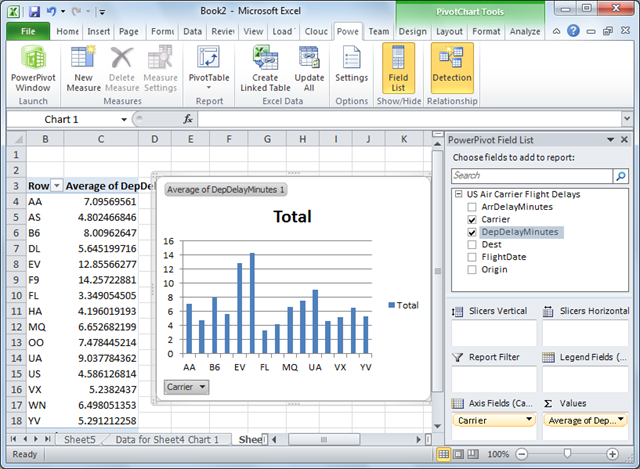

11. Click the PivotTable button again, select Pivot Chart and specify this worksheet:

12. Click the Field List to hide the pane, change column C’s heading to Average, change the cell format of Column C to Number, expand the chart by dragging the corners, edit the title as shown below, and select and delete the Total legend:
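The aggregation that steps 8 through 10 perform in the pivot table (group by Carrier, then average DepDelayMinutes) can also be sketched in a few lines of JavaScript. This is only an illustration of the calculation, not anything PowerPivot exposes; the function name and the sample rows are made up.

```javascript
// Group rows by carrier and average the departure delay, as the pivot table does.
function averageDelayByCarrier(rows) {
  const totals = {};
  rows.forEach(function (row) {
    const entry = totals[row.Carrier] || (totals[row.Carrier] = { sum: 0, count: 0 });
    entry.sum += row.DepDelayMinutes;
    entry.count += 1;
  });
  const averages = {};
  Object.keys(totals).forEach(function (carrier) {
    averages[carrier] = totals[carrier].sum / totals[carrier].count;
  });
  return averages;
}

// Made-up sample rows; the carrier codes and delays are illustrative only.
const sampleRows = [
  { Carrier: "AA", DepDelayMinutes: 10 },
  { Carrier: "AA", DepDelayMinutes: 20 },
  { Carrier: "DL", DepDelayMinutes: 0 },
  { Carrier: "DL", DepDelayMinutes: 6 }
];
console.log(averageDelayByCarrier(sampleRows)); // { AA: 15, DL: 3 }
```

Switching the measure from Sum to Average in step 9 matters for the same reason it does here: a sum mostly reflects how many flights a carrier operates, while the average is comparable across carriers.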

Miguel Parejo wrote ISV Guest Post Series: Softlibrary and Kern4Cloud on Windows Azure on 5/15/2012:

Editor’s Note: Today’s post, written by Miguel Parejo, CTO at Softlibrary, describes how the company uses Windows Azure and the Windows Azure Marketplace to run and sell its multi-tenant information management service.

Softlibrary is a company started in 1988 in Barcelona (Spain). Since then, it has always been involved in information management, and providing cutting-edge custom solutions to our customers. For that purpose, the company adopted Microsoft platforms and architectures from its very beginning.

Kern4Cloud is a multi-tenant service focused on information management, whether or not the information is corporate in nature. It can handle the whole information lifecycle, providing a set of tools for publication, categorization and classification, lexical-semantic and thesaurus systems, version control, multilingual content, live translation and workflow processes.

We chose Windows Azure because it resides in certified data centers where information and services are kept in a reliable and secure way. Windows Azure resources are capable of being stretched in order to provide high-performance solutions, as well.

Every single portion of existing information within the system is saved in XML format, so we can also look at Kern4cloud as a black-box that transforms heterogeneous sources into standard and internationalized ones.

Let’s see how we can accomplish a typical flow with Kern4Cloud. Your company likely has a Privacy Policy statement, and it will probably change over time. Once you have imported the first version, you can create new versions, duplicate existing versions, and even translate versions on the fly using the main translation engines available as part of the solution. The system can also convert your files to pure XML so you can later edit them with its own editor, called X.Edit, a WYSIWYM editor. All this can be accomplished with the web component called K4C.Workplace. Your company is likely structured in a way that some departments must give their consent before publishing. Given this, you can first create a workflow to force those, and only those, departments involved in the publishing process to read and revise the statement, review it for conformance with the law, correct translations and finally give their consent, so the document can be published and is ready to be consumed.

The Challenge

When we first looked at Windows Azure, we realized that the architecture and design stages should now include some cost-efficient strategies. There are some billing drivers you must consider when migrating your solutions to Windows Azure or creating them from scratch. Fortunately, Microsoft has provided some extra features and capabilities to make this process much easier. To mention a couple:

- Dynamic Management Views: Use them on SQL Azure to determine how big your databases are and how you can increase and decrease their size to stabilize costs.

- Storage Metrics: Track your storage to see transactions and capacity metrics.

There are other tools, but you can also take a look at some cost-efficient strategies out there. So that was the main challenge: design, migrate, adapt and write code in a way developers had never done before. Every single stakeholder in the project must now take one new parameter into consideration: cost. We don’t mean Windows Azure is expensive, just the other way around.

Once we had redesigned the core components we faced another challenge. How could we authenticate users in a multi-tenant service? Windows Azure comes with Access Control Service (ACS). ACS lets us deal with identities in a transparent way and focus on the authorization process.

The Architecture

Now that we know what Kern4Cloud does, we’ll show which components do it, and later we’ll map them to Windows Azure components.

Here’s a list of the main components:

- K4C.Workplace: The core of the UI. Users can version, edit, publish, delete, batch-operate, sort, search and filter data easily within a single window. Everything is organized in a grid to increase visibility and, hence, usability. Refer to the first picture to get an idea.

- K4C.Admin: The place where administrators can manage all the properties, X.Edit styles and mapping, group of users, user permissions, workflows, and so on.

- Repositories: There are three repositories. One for binary documents (Office, images, videos, etc.), one for the XML files (the ones that contain extracted information and meta-data) and finally all the information handled by K4C.Admin and indexed data used for fast-searching, which is stored in two databases.

- Workflows: This component deals with all the workflow processes defined by users.

This is an eagle-eye view of the architecture. Let’s see how it’s mapped with Windows Azure components.

Before getting into specifics there’s one thing that must be explained. Kern4Cloud modeling service is offered for two main audiences.

- Individual users: They have limited disk quota space, some features disabled and share storage resources.

- Business users: When a company subscribes to this model, it is automatically provisioned with a set of private resources. All users inside the company access the common repository through K4C.Workplace. However, K4C.Admin can help administrators isolate information within the organization.

Below, there are four figures showing the most important Windows Azure components and services in our architecture. There are some interactions between them which we have omitted for readability.

- Figure 1: Shows the Web Roles K4C.Workplace and K4C.Admin. Both are deployed on the same hosted service. They are the entry point of the system and the only ones that have some UI. They retrieve up-to-date information from SQL Azure because our indexing engine and K4C.Workplace component assure it will be there as the user interacts and modifies data. Since Full-Text indexing support is not currently available in SQL Azure, our indexing engine has a little bit of extra work. Recently, we’ve started looking into Hadoop because we believe it could be a good alternative.

- Figure 2: At the moment, all these roles are deployed on the same hosted service (but different from the ones in Figure 1).

- Workflows and Index: These are Worker Roles. The first one pops messages from the Workflows Queues and processes them. The indexing worker role is a service that indexes and keeps all the information in a coherent state. It also detects document changes and pushes messages into the Workflows Queues. Both are multithreaded in order to minimize bottlenecks. However, the resources of a single instance are limited, so scaling is needed. At the moment, this component is scaled manually, but we are preparing a new version that includes the Windows Azure Autoscaling Application Block (WASABi), a component of the Enterprise Library 5.0 Integration Pack for Windows Azure. We plan to autoscale this and other K4C components based on CPU and memory usage and network load.

- K4C.FileProperties: This is a web service that processes binary files to convert them into XML. Following the Privacy Policy sample, imagine they consist of Word files in your environment with some styles used for headings, footers, etc., which can be mapped with K4C.Admin into XML tags. Once you have saved a new version, if mapping is properly set, the indexing role will send the file to this service and the result will be an XML which can be used for further editing. That is the way you can show your information in many platforms and devices.

- K4C.Backoffice: This is the middle tier between a website and the K4C system. If you want to show your Privacy Policy statement on your website, you request it from this service. The service is shared with other customers, but it relies on Access Control Service to ensure data isolation, which is why you first need to authenticate to ACS before making any request.

Each role in our system has its own scaling parameters. Some should scale based on CPU usage only, others on network usage, and so on. We deploy a role into a specific hosted service if its scaling parameters are similar to the ones previously deployed (though this is not the only rule we follow). K4C.Backoffice is intended to be consumed by third-party users in the future, so we presume its scaling parameters will differ quite a lot. Hence, we are planning to deploy a new version of this component to its own hosted service.

- Figure 3: The SQL Azure server holds data for every single customer. Individual users share two databases, while businesses have their own pair. All sensitive information is properly encrypted in our data layer.

- Figure 4: We have two storage accounts: one for customers and the other for deployment, diagnostics and backup. XML data is stored in Tables using a partition key for each customer identity, so data will be served from different servers as explained here. We’ve overridden the TableServiceContext class and intercepted the WritingEntity and ReadingEntity events, so we encrypt and compress XML data before writing to tables and reverse the process after reading from tables.
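The write and read transformation described for Figure 4 can be illustrated with a short round-trip sketch. The authors' implementation is .NET code hooked into TableServiceContext events and is not shown in the post, so everything below is an assumption made for illustration: the Node.js `zlib` and `crypto` modules, AES-256-CBC, the compress-then-encrypt order, and the hypothetical names `packXml` and `unpackXml`.

```javascript
const nodeCrypto = require("crypto");
const zlib = require("zlib");

// Compress first, then encrypt: encrypted bytes look random and would not compress.
function packXml(xml, key) {
  const compressed = zlib.gzipSync(Buffer.from(xml, "utf8"));
  const iv = nodeCrypto.randomBytes(16); // fresh IV for every entity written
  const cipher = nodeCrypto.createCipheriv("aes-256-cbc", key, iv);
  const encrypted = Buffer.concat([cipher.update(compressed), cipher.final()]);
  return Buffer.concat([iv, encrypted]); // keep the IV in front of the ciphertext
}

// Reverse the steps on read: split off the IV, decrypt, then decompress.
function unpackXml(packed, key) {
  const iv = packed.subarray(0, 16);
  const decipher = nodeCrypto.createDecipheriv("aes-256-cbc", key, iv);
  const compressed = Buffer.concat([decipher.update(packed.subarray(16)), decipher.final()]);
  return zlib.gunzipSync(compressed).toString("utf8");
}

// Throwaway random key for the demo; a real service would load a managed key.
const demoKey = nodeCrypto.randomBytes(32);
const packed = packXml("<policy><title>Privacy Policy</title></policy>", demoKey);
console.log(unpackXml(packed, demoKey));
```

Doing the work at the storage boundary, as the WritingEntity and ReadingEntity hooks do, keeps the rest of the application unaware that the stored bytes are compressed and encrypted.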

Windows Azure Marketplace Integration

Finally, we decided to empower Kern4Cloud by applying to publish it on the Windows Azure Marketplace. We found it to be a perfect destination for a cloud-based solution because it’s a platform where our customers can securely subscribe to our services, and it makes billing a worry of the past. When customers want to subscribe to our service, they only need to visit the Windows Azure Marketplace and search for Kern4Cloud. After they choose the most suitable offering, the Windows Azure Marketplace will ask them to log in with a Windows Live ID account and provide some billing information (such as a credit card number). In the Windows Azure Marketplace, subscriptions are billed monthly, so the first month will be charged immediately. Customers will trust the billing process because it’s provided by Microsoft, and ISVs only have to follow some rules and provide their offering prices to the Windows Azure Marketplace. The whole process is fast, secure and reliable for customers and providers.

Furthermore, integrating any solution with the Marketplace is quite easy. Basically, you should follow the steps shown in the relevant sample in the Windows Azure Training Kit (WATK). Microsoft has done a great job collecting good samples and best practices for building Windows Azure-based solutions. You can find the Windows Azure Marketplace integration project separately, but I recommend downloading the whole kit.

Let’s see how this works with a sample:

- A customer finds your solution in the Windows Azure Marketplace and wants to subscribe. Once the billing and subscription information has been provided, the Marketplace redirects the customer to the AzureMarketplaceOAuthHandler.ashx handler on your website, telling it that a new customer has subscribed.

- Your website must confirm the request is truly coming from the Windows Azure Marketplace. This task is handled by a project called AzureMarketplace.OAuthUtility which you’ll find in the WATK. You can either attach the project or reference the DLL. It contains the handler mentioned above, so you should also be adding the following line to your web.config:

<handlers>
  <add name="AzureMarketplaceOAuthHandler" verb="*"
       path="AzureMarketplaceOAuthHandler.ashx"
       type="Microsoft.AzureMarketplace.OAuthUtility.AuthorizationResponseHandler, Microsoft.AzureMarketplace.OAuthUtility"/>
</handlers>

This project relies on Microsoft.IdentityModel and Microsoft.IdentityModel.Protocols.OAuth libraries, as well.

- When the origin of the request is confirmed to come from the Windows Azure Marketplace, your solution is told a customer is subscribing and, hence you can ask for additional information if needed. To do this you must add five classes to your website. One of them is used to read the information that purely defines your website as a Windows Azure Marketplace client. This information should be stored in your web.config like this:

<azureMarketplaceConfiguration
    appSpecificAzureMarketplaceOAuthClientId="YOUR_CLIENT_ID"
    appSpecificAzureMarketplaceOAuthClientSecret="CLIENT_SECRET_KEY"
    appSpecificPostConsentRedirectUrl="http://127.0.0.1:81/AzureMarketplaceOAuthHandler.ashx"
    appSpecificWellKnownPostConsentUseUri="http://127.0.0.1:81/Subscription/New"/>

This information must be the same as the one defined in the Marketplace (whether it is the playground or not).

Of course, a section element must be added, as well:

<section name="azureMarketplaceConfiguration"
    type="YOURNAMESPACE.AzureMarketplaceConfiguration, YOURASSEMBLY"
    requirePermission="false"/>

Note that the testing URLs should be replaced in a production environment.

The other important class is SubscriptionUtils.cs. This will be the final destination of the Marketplace requests. Here you will create the CreateSubscription and Unsubscribe methods in order to process all the requests. You only need to add this line to your Global.asax (Application_Start method):

AzureMarketplaceProvider.ConfigureOAuth(new SubscriptionUtils());

And you’re done. Your website is now capable of accepting subscription requests only from the Marketplace and processing them according to your needs.

In a Nutshell

The migration from our on-premises system has not been an easy task, but it has been a thrilling one. Windows Azure provides all the services we needed to accomplish our mission. We only had to weigh a few considerations beforehand. One of them concerns the ongoing discussion on the web about whether the Cloud means the death of IT. Our experience is that IT departments won’t disappear; they’ll just have to get used to these platforms. Furthermore, we believe the debate will end soon with the introduction of hybrid clouds to the mainstream.

We’d like to thank Microsoft for the opportunity of being the first Spanish company to write for this blog series. If you would like more information regarding some of the aspects shown here, feel free to contact us.

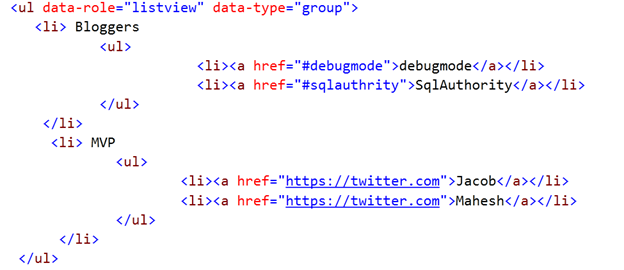

Dhananjay Kumar (@debug_mode) described Kendo UI ListView Control and OData in Windows Phone Application in a 5/15/2012 post:

In this post we will see how to work with the Kendo UI Mobile ListView control and OData. Before you go ahead with this post, I recommend that you read Creating First Windows Phone Application using Kendo UI mobile and PhoneGap or Cordova.

Using ListView

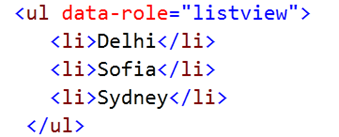

We can use a ListView control as follows. You need to explicitly set data-role to listview on the ul HTML element.

Resultant listview would be rendered as following in Windows Phone emulator

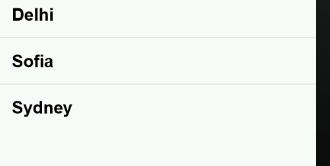

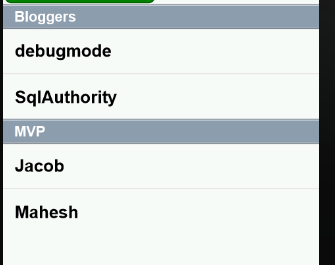

We can create Grouped ListView as following. We need to specify data-type as group.

Resultant listview would be rendered as following in Windows Phone emulator

If we want to make ListView items links, we can do that as follows.

Resultant listview would be rendered as following in Windows Phone emulator

Working with OData

We are going to fetch movie details from the OData feed of Netflix. The Netflix OData feed is available at

http://odata.netflix.com/Catalog/Titles

First we need to create a datasource from the OData feed. A datasource from an OData feed can be created as follows.

While creating [the] datasource, we specify the URL of the OData feed, the type, and the page size to be fetched from the Netflix server. After the datasource has been created, we need to set the template and datasource of the ListView as follows.

$("#lst").kendoMobileListView({
    template: "<strong>${data.Name}<br/><a href= ${data.Url}></a></strong><br/><img src=${data.BoxArt.MediumUrl} alt=a />",
    dataSource: data
});

In the above code snippet we are setting the datasource and template. The template can contain HTML elements. Any variable data can be fetched as $datasourcename.fieldname
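For readers wondering what a page size means at the protocol level: OData expresses paging with the standard `$top` and `$skip` query options. The sketch below builds such a URL by hand. It assumes, rather than shows, that the Kendo data source issues equivalent options on your behalf, and `buildODataPageUrl` is a hypothetical helper, not a Kendo UI function.

```javascript
// Build the URL for one page of an OData feed using the $top and $skip options.
function buildODataPageUrl(feedUrl, page, pageSize) {
  if (page < 1 || pageSize < 1) {
    throw new RangeError("page and pageSize must be at least 1");
  }
  const skip = (page - 1) * pageSize; // rows to skip before this page starts
  return feedUrl + "?$top=" + pageSize + "&$skip=" + skip;
}

console.log(buildODataPageUrl("http://odata.netflix.com/Catalog/Titles", 1, 10));
// http://odata.netflix.com/Catalog/Titles?$top=10&$skip=0
console.log(buildODataPageUrl("http://odata.netflix.com/Catalog/Titles", 3, 10));
// http://odata.netflix.com/Catalog/Titles?$top=10&$skip=20
```

Keeping the page size small matters on a phone, since each request returns only the rows the list can actually show.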

Complete code is as following. We are having two ListViews on the view to display. First ListView is fetching data from OData feed and second ListView is having hard coded data.

<!DOCTYPE html>
<html>
<head>
    <meta name="viewport" content="width=device-width, height=device-height, initial-scale=1.0, maximum-scale=1.0, user-scalable=no;" />
    <meta http-equiv="Content-type" content="text/html; charset=utf-8"/>
    <title>Cordova WP7</title>
    <!-- <link rel="stylesheet" href="master.css" type="text/css" media="screen" title="no title" charset="utf-8"/>-->
    <link rel="stylesheet" href="styles/kendo.mobile.all.min.css" type="text/css"/>
    <script type="text/javascript" charset="utf-8" src="cordova-1.7.0.js"></script>
    <script type="text/javascript" src="js/jquery.min.js"></script>
    <script type="text/javascript" src="js/kendo.mobile.min.js"></script>
    <script type="text/javascript">
        $(document).ready(function () {
            var data = new kendo.data.DataSource({
                type: "odata", // specifies data protocol
                pageSize: 10,  // limits result set
                transport: {
                    read: "http://odata.netflix.com/Catalog/Titles"
                }
            });
            $("#lst").kendoMobileListView({
                template: "<strong>${data.Name}<br/><a href= ${data.Url}></a></strong><br/><img src=${data.BoxArt.MediumUrl} alt=a />",
                dataSource: data
            });
        });
    </script>
</head>
<body>
    <div id="firstview" data-role="view" data-transition="slide">
        <div data-role="header">First View Header</div>
        Hello World First View <br />
        <ul id="lst" data-role="listview">
        </ul>
        <ul data-role="listview" data-type="group">
            <li>
                Bloggers
                <ul>
                    <li><a href="#debugmode">debugmode</a></li>
                    <li><a href="#sqlauthrity">SqlAuthority</a></li>
                </ul>
            </li>
            <li>
                MVP
                <ul>
                    <li><a href="https://twitter.com">Jacob</a></li>
                    <li><a href="https://twitter.com">Mahesh</a></li>
                </ul>
            </li>
        </ul>
        <div data-role="footer">First View Footer</div>
    </div>
    <script type="text/javascript">
        var app = new kendo.mobile.Application();
    </script>
</body>
</html>

In the Windows Phone emulator we should get output like the following

In this way we can work with Kendo UI ListView control. I hope this post is useful.

David Linthicum (@DavidLinthicum) asserted “CIOs will do well, traditional vendors will fare poorly, and users will find a mixed bag” in a deck for his 3 winners, 3 losers in the move to big data article of 5/15/2012 for InfoWorld’s Cloud Computing blog:

The move to big data is afoot. Recently, Yahoo and Google both tossed their very big hats into the ring, and the cloud computing leaders are already offering access to big data services. It's becoming the killer application for cloud computing, and I believe it will drive a tremendous amount of growth in 2012 and 2013.

However, with any shift in technology, there are those who win and those who lose. Here are three of each for your consideration.

Winners in the move to big data

1. CIOs. This group can finally get its enterprise data under control on the cheap and make sense of all the information it's been trying to manage. Although many CIOs have been the target of corporate budget cuts, they finally can put a big mark in the win column.

2. Users. Ever dial into a call center that has no idea who you are or what you mean to the company? Big data lets companies understand their customers at a level once unheard of, including demographics, social circles, and dealings with other businesses. Customers should benefit.

3. Cloud providers. What do you do with a public IaaS cloud? Big data is a good start, with value that's easy to define.

Losers in the move to big data

1. Big database vendors. They'll suffer as the market turns to the big data names, which are more open (Hadoop-y, I call it). Proprietary database software will lose some of its attraction, and the movement to big data and the cloud services that deliver database systems for big data will cut right into the proprietary vendors' bottom lines.

2. Data warehouse/BI specialists. They did not see this coming. They've been busy working with traditional analytics technology to manage data sets for business analysis and decision support, including million-dollar hardware and software systems. The movement to big data and cloud computing commoditizes many of their concepts -- and makes these older approaches appear wasteful.

3. Users. The use of big data lets huge amounts of personal information be culled, combined, and analyzed. Thus, everything from who you dated in high school to your buying patterns in college to the number of miles you put on your car each year could be much easier to obtain. Much of this will be through analysis of patterns of massive amounts of available data that you had no idea would provide this kind of visibility into your personal information. Bye-bye, privacy.

<Return to section navigation list>

Windows Azure Service Bus, Access Control, Identity and Workflow

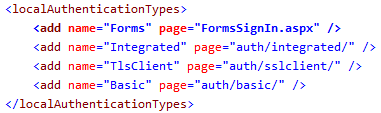

Haishi Bai (@HaishiBai2010) recommended Configure ADFS to show a login page instead of a dialog in a 5/15/2012 post:

Configure ADFS to show a login page instead of a dialog

When you enable ADFS for a website, by default your users will get a login dialog as shown below.

You can configure ADFS to bring up a login page instead. To do this, you need to modify web.config file under c:\inetpub\adfs\ls folder (assuming you


After saving the file, you’ll see a login page instead when you try to login:

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Avkash Chauhan (@avkashchauhan) described Windows Azure Management using Burp (Java based GUI tool) and REST API in a 5/16/2012 post:

Burp is a great tool for using a REST API directly from a GUI. I have written this blog [post] to explain how to configure Burp to manage Windows Azure subscriptions and services using the REST API.

You can download the tool below:

http://portswigger.net/burp/proxy.html

After you start the tool, the first step is to set up the PFX certificate that you have deployed to the Windows Azure Management Portal.

Open [the] “Options” tab and select your PFX certificate as below:

Now open the “repeater” tab and input the following info:

Host > management.core.windows.net

Check > use SSL

In the request window please enter the REST API and parameters as below:

GET /<Your_Subscription_ID>/services/hostedservices HTTP/1.1

Content-Type: text/xml

x-ms-version: 2010-04-01

Host: management.core.windows.net (at the end of this line, press Enter twice)

Once you have entered the REST API and parameters, the request window shows a new “Headers” tab. When you are ready, select “go” to submit the request:

You will see the response as below:
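For readers who would rather script the call than use a GUI, the same List Hosted Services request can be sketched in Python with only the standard library. This is a minimal sketch, not part of the original post: the function names are hypothetical, and it assumes the management certificate has been converted from PFX to a single PEM file containing both the certificate and its private key, because Python's `ssl` module does not read PFX files directly.

```python
import http.client
import ssl

MANAGEMENT_HOST = "management.core.windows.net"
API_VERSION = "2010-04-01"  # same x-ms-version value used in the Burp request


def build_list_hosted_services_request(subscription_id):
    """Return (method, path, headers) for the List Hosted Services call.

    Hypothetical helper; it only assembles the pieces shown in the Burp request.
    """
    path = f"/{subscription_id}/services/hostedservices"
    headers = {
        "Content-Type": "text/xml",
        "x-ms-version": API_VERSION,
    }
    return "GET", path, headers


def list_hosted_services(subscription_id, pem_cert_path):
    """Send the request over SSL with a client certificate.

    Assumption: pem_cert_path points to a PEM file holding the management
    certificate and its private key (converted from the PFX beforehand).
    """
    context = ssl.create_default_context()
    context.load_cert_chain(pem_cert_path)
    connection = http.client.HTTPSConnection(MANAGEMENT_HOST, context=context)
    try:
        method, path, headers = build_list_hosted_services_request(subscription_id)
        # http.client adds the Host header and the blank line that ends the headers.
        connection.request(method, path, headers=headers)
        response = connection.getresponse()
        return response.status, response.read().decode("utf-8")
    finally:
        connection.close()
```

Calling `list_hosted_services("<your subscription ID>", "management.pem")` would return the HTTP status code and the XML response body; the file name here is only a placeholder.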

Opstera (@Opstera) reported Opstera Eases Transition to the Cloud With New Dashboard for Monitoring Windows Azure in a 5/16/2012 press release (via Marketwire):

Today, Opstera -- a cloud-based health management and capacity optimization provider -- announced a new online dashboard called CloudGraphs, designed to monitor quality of service (QoS) for cloud platforms such as Windows Azure. The dashboard offers Windows Azure customers additional visibility and new metrics related to Microsoft Corp.'s cloud platform in real time, providing a new level of control and assurance. Over time, CloudGraphs will expand to provide data on the availability and performance of additional cloud services. The new dashboard is an important component of Opstera's vision of providing customers with an end-to-end view of their entire cloud operations which includes health management of the cloud platform, the application itself, and the related third-party services.

"As customers make the transition from the datacenter to the cloud, they are concerned about losing control over the operations of their critical business systems," said Opstera CEO Paddy Srinivasan. "CloudGraphs reveals new performance metrics for Windows Azure services, providing customers with vital insights into the overall health of their cloud applications."

Opstera's CloudGraphs compiles ongoing data on the performance of Windows Azure services by conducting workload tests against the service on a continual basis, then compares the time required to complete each test. Using a series of algorithms, CloudGraphs calculates latency data for the most popular Windows Azure services: Cloud Services, SQL Azure, and Storage. The dashboard synthesizes the data and displays the information in buttons reflecting available, degraded, or interrupted Windows Azure service. Customers can click the buttons to review historical data for a particular test and learn more information.
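The press release does not disclose CloudGraphs' algorithms, so the following Python sketch is only a guess at the general shape of such a check: time repeated workload tests, compare them with a baseline, and map the result to one of the three states named above. The function name, the use of a median, and the `degraded_factor` threshold are all assumptions for illustration, not Opstera's method.

```python
from statistics import median


def classify_service(latencies_ms, baseline_ms, degraded_factor=2.0):
    """Map recent test timings to 'available', 'degraded' or 'interrupted'.

    latencies_ms: completion times in milliseconds; None marks a failed test.
    baseline_ms: the latency considered normal for this test (assumed known).
    degraded_factor: hypothetical multiplier above which the service is
        reported as degraded.
    """
    completed = [value for value in latencies_ms if value is not None]
    if not completed:
        # Every test in the window failed to complete.
        return "interrupted"
    if median(completed) > degraded_factor * baseline_ms:
        return "degraded"
    return "available"
```

A dashboard built this way would run the classification once per service (for example Cloud Services, SQL Azure, and Storage) and keep the raw timings so that users can drill into the history behind each status button.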

"Windows Azure provides on-demand compute, storage, networking, and content delivery, and also automates system management tasks," said Brian Goldfarb, Director of Windows Azure Product Marketing at Microsoft. "Opstera's CloudGraphs gives our mutual customers a deeper view into their mission critical cloud operations. We are excited about future possibilities of this service and the prospect of input from the Windows Azure community."

The dashboard will be submitted to the vibrant Windows Azure community of developers and programmers. These Windows Azure experts can offer valuable feedback to enhance customers' experiences and improve performance data. Community members will tweak the dashboard's existing algorithms -- or suggest new ones -- and provide recommendations for the types and numbers of latency tests in an effort to continually upgrade the tool.

CloudGraphs complements Opstera's existing suite of products and tools designed to provide customers with deeper insights into every aspect of their cloud operations. Based on customer demand for better quality of service in the cloud, CloudGraphs goes beyond application health management by allowing customers to proactively monitor the quality of service of the underlying Windows Azure service. This is a critical part of Opstera's commitment to improve overall cloud operations management for customers.

Opstera's CloudGraphs is a cloud service and is available free of charge. For more information, visit www.CloudGraphs.com or www.opstera.com.

About Opstera

Opstera is the only cloud-based operations management provider for Windows Azure and offers the industry's most comprehensive view of health management and capacity optimization. Opstera provides customers with end-to-end visibility into application performance in the cloud by monitoring the underlying Windows Azure services, the application itself, and the dependent third-party cloud services. By providing a comprehensive set of tools and dashboards, Opstera monitors more than 100 million Windows Azure metrics per month and has helped more than 100 customers gain deeper insights into their cloud operations. For more information, go to www.opstera.com, visit Facebook, or follow Opstera on Twitter at @opstera.

My (@rogerjenn) updated Links to My Cloud Computing Articles at Red Gate Software’s ACloudyPlace Blog listed Links to My Cloud Computing Articles at Red Gate Software’s ACloudyPlace Blog on 5/15/2012:

I’m a regular contributor of articles about cloud computing/big data development and strategy to Red Gate Software’s (@redgate) ACloudyPlace (@ACloudyPlace) blog.

• Updated 5/15/2012 for the latest article.

The following table lists the topics I’ve covered to date:

Red Gate Software offers the following “cloud-ready” tools:

The firm acquired Cerebrata Software Private Ltd in October 2011. The press release for the acquisition is here.

Full disclosure: I have gratis licenses for most Cerebrata products, including Cloud Storage Studio and Diagnostics Manager. I use these tools regularly.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

The Entity Framework Team announced EF5 Release Candidate Available on NuGet on 5/15/2012:

A couple of months ago we released EF5 Beta 2. Since releasing Beta 2 we have made a number of changes to the code base, so we decided to publish a Release Candidate before we make the RTM available.

This release is licensed for use in production applications. Because it is a pre-release version of EF5 there are some limitations, see the license for more details.

What Changed Since Beta 2?

This Release Candidate includes the following changes:

- Added a CommandTimeout property to DbMigrationsConfiguration to allow you to override the timeout for applying migrations to the database.

- We updated Code First to add tables to an existing database if the target database doesn’t contain any tables from the model. Previously, Code First would assume that the database contained the correct schema if it was pointed at an existing database that was not created using Code First. Now it checks to see if the database contains any of the tables from the model. If it does, Code First will continue to try to use the existing schema. If not, Code First will add the tables for the model to the database. This is useful in scenarios where a web hoster gives you a pre-created database to use, or when adding a Code First model to a database created by the ASP.NET Membership Provider, etc.
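The existing-database rule in the second item can be paraphrased as a small decision function. The Python sketch below is illustrative only: it is not Entity Framework code, the function name and return values are hypothetical, and exact (case-sensitive) table-name matching is an assumption.

```python
def plan_code_first_schema_action(database_tables, model_tables):
    """Paraphrase of the EF5 RC rule for pointing Code First at an existing database.

    Hypothetical helper, not part of Entity Framework.
    """
    existing = set(database_tables)
    # If the database already holds any table from the model, keep using its schema.
    if any(table in existing for table in model_tables):
        return "use_existing_schema"
    # Otherwise (for example, an empty hoster-provided database, or one that only
    # contains ASP.NET Membership tables), add the model's tables.
    return "add_model_tables"
```

For a database that contains only `aspnet_Users` and `aspnet_Roles`, a model with `Blogs` and `Posts` tables falls into the second branch, which is the membership-database scenario the team mentions.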

This release also includes fixes for the following bugs found in Beta 2:

- Exception in partial trust applications ‘Request for ConfigurationPermission failed while attempting to access configuration section 'entityFramework'.’

- Migrations: Using a login that has a default schema other than ‘dbo’ for the user causes runtime failures

- Migrations: DateTime format issue on non-en cultures

- Migrations: Migrate.exe does not set error code after a failure

- Migrations: Error renaming entity in many:many relationship

- Migrations: Checking for Seed override fails in partial trust

- Migrations: Better error message when startup project doesn't reference assembly with migrations

- Migrations: ModuleToProcess deprecated in PowerShell 3 causes warning when installing EF NuGet package

What’s New in EF5?

EF 5 includes bug fixes to the 4.3.1 release and a number of new features. Most of the new features are only available in applications targeting .NET 4.5, see the Compatibility section for more details.

- Enum support allows you to have enum properties in your entity classes. This new feature is available for Model, Database and Code First.

- Table-Valued functions in your database can now be used with Database First.

- Spatial data types can now be exposed in your model using the DbGeography and DbGeometry types. Spatial data is supported in Model, Database and Code First.

- The Performance enhancements that we recently blogged about are included in EF 5.

- Visual Studio 11 includes LocalDb database server rather than SQLEXPRESS. During installation, the EntityFramework NuGet package checks which database server is available. The NuGet package will then update the configuration file by setting the default database server that Code First uses when creating a connection by convention. If SQLEXPRESS is running, it will be used. If SQLEXPRESS is not available then LocalDb will be registered as the default instead. No changes are made to the configuration file if it already contains a setting for the default connection factory.
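The install-time choice of default database server described in the last item reduces to a three-way decision. This Python sketch only restates that logic; the function name and its boolean inputs are hypothetical and do not correspond to anything in the NuGet package's actual install script.

```python
def choose_default_connection_factory(config_has_factory, sql_express_running):
    """Return which default server would be registered, or None for no change.

    Hypothetical restatement of the behavior described in the announcement.
    """
    if config_has_factory:
        # An existing default connection factory setting is never overwritten.
        return None
    if sql_express_running:
        return "SQLEXPRESS"
    # SQLEXPRESS is not available, so LocalDb becomes the default.
    return "LocalDb"
```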

The following new features are also available in the Entity Model Designer in Visual Studio 11 Beta:

- Multiple-diagrams per model allows you to have several diagrams that visualize subsections of your overall model.

- Shapes on the design surface can now have coloring applied.

- Batch import of stored procedures allows multiple stored procedures to be added to the model during model creation.

Getting Started

You can get EF 5 Release Candidate by installing the latest pre-release version of the EntityFramework NuGet package.

PM> Install-Package EntityFramework -Pre

These existing walkthroughs provide a good introduction to using the Code First, Model First & Database First workflows available in Entity Framework:

We have created walkthroughs for the new features in EF 5:

Compatibility

This version of the NuGet package is fully compatible with Visual Studio 2010 and Visual Studio 11 Beta and can be used for applications targeting .NET 4.0 and 4.5.

Some features are only available when writing an application that targets .NET 4.5. This includes enum support, spatial data types, table-valued functions and the performance improvements. If you are targeting .NET 4.0 you still get all the bug fixes and other minor improvements.

Support

We are seeing a lot of great Entity Framework questions (and answers) from the community on Stack Overflow. As a result, our team is going to continue spending more time reading and answering questions posted on Stack Overflow.

We would encourage you to post questions on Stack Overflow using the entity-framework tag. We will also continue to monitor the Entity Framework forum.

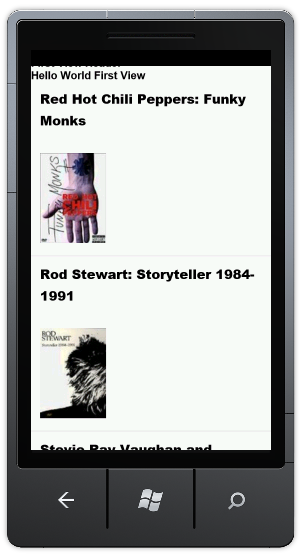

Beth Massi (@bethmassi) reported "What’s New with LightSwitch in Visual Studio 11" Recording Available on 5/14/2012:

On Friday last week I delivered a webcast on the new LightSwitch features in Visual Studio 11 beta. I think it went pretty well considering it was the first time I had done the session end-to-end :-). You can now view the on-demand webcast here:

Download: What’s New with LightSwitch in Visual Studio 11

(Click the “register” button, log in, and then select “download”.)

In the session we built an application called “Media Mate” that connects to the Netflix OData source to keep track of favorite movies and music. I showed off new features around all our OData work like how to consume external OData services. I also demonstrated how LightSwitch creates its own OData services adhering to your business rules and security settings so that other clients on different platforms can access your middle-tier easily and securely. I showed off some of the new UI enhancements and business types as well as some of the new deployment enhancements. I attached the presentation slides to the bottom of this post.

One demo hiccup happened where I messed up the search ability over the Netflix data. Doh! What I ended up doing is unchecking the IsSearchable property on the Title entity in the data designer which subsequently disabled the searching on the screen. So it was user error, not a beta bug ;-). At any rate, I think folks got the point. By the way, there are a lot of things you can do to improve search query performance in LightSwitch and I’ll follow up with a post about that soon.

I also demonstrated the updated Contoso Construction sample that you can download here:

Contoso Construction - LightSwitch Advanced Sample (Visual Studio 11 Beta)

For more information on the new features I demonstrated please see:

- LightSwitch in Visual Studio 11 Beta Resources

- Enhance Your LightSwitch Applications with OData

- Creating and Consuming LightSwitch OData Services

- LightSwitch Companion Client Examples using OData

- LightSwitch Architecture: OData

- LightSwitch Cosmopolitan Shell and Theme for Visual Studio 11

- User Defined Relationships within Attached Database Data Sources

- Filtering Data using Entity Set Filters

- New Business Types: Percent & Web Address

- How to Add Images and Text to your LightSwitch Applications

- How to Use the New LightSwitch Group Box

- How to Format Values in LightSwitch

- Active Directory Group Support

- LightSwitch IIS Deployment Enhancements in Visual Studio 11

Also don’t forget to visit the LightSwitch Developer Center, your one-stop-shop for learning all about LightSwitch.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

No significant articles today.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

DevX.com’s TechNet Spotlight answered Why Get a Microsoft Private Cloud? on 5/15/2012:

A Microsoft private cloud puts your applications first. It offers you deep application insight, a comprehensive cross platform approach, best-in-class performance, and the power to run, migrate, or extend your applications out to the public cloud whenever you need.

All About the App

Applications are the lifeblood of your business. The ability to deploy new applications faster and keep them up and running more reliably is the central mission of IT as a competitive differentiator. To gain a real edge, you need to go beyond just managing infrastructure. You need to manage your applications in a new way.

Get Started Now! Download Microsoft Private Cloud Software today.

The Microsoft Private Cloud lets you deliver applications as a service. You can deploy both new and legacy applications on a self-service basis, and manage them across private cloud and public cloud environments. And with a new way to see what’s happening inside the performance of your applications, you can remediate issues faster—before they become show-stoppers. The Microsoft Private Cloud is all about the app. That means better SLA’s, better customer satisfaction, and a new level of agility across the board.

Cross Platform from the Metal Up

No datacenter is an island. Odds are, you run and manage an IT environment today that is deeply heterogeneous, with a wide range of OS, hypervisor, and development tools in the mix. You want to gain the advantages of private cloud computing, but not if it means walking away from your existing IT investments or adding new layers of complexity.

The Microsoft Private Cloud lets you keep what you’ve got and make the move now to a new kind of agility. That’s because it’s architected from the raw metal up to enable process automation and configuration across platforms and environments. You can manage multiple hypervisors, including VMware, Citrix, and Microsoft offerings. You can run and monitor all major operating systems. And you can develop new applications using multiple toolsets. Because the Microsoft Private Cloud provides comprehensive management of heterogeneous IT environments, you can put your business’s needs ahead of the needs of any particular technology or vendor.

Foundation for the Future

Cloud computing offers the promise of agility, economics, and focus that can unlock new innovation and transform the role of IT in driving business success. The game is no longer just about virtualization and server consolidation. A private cloud delivers fundamentally new capabilities that represent a generational paradigm shift in computing. The bet you make today will have long-term implications for the future of your business.

Only Microsoft delivers a complete private cloud solution that provides a true cloud computing platform. For more than 15 years, we have operated some of the world’s biggest and most advanced datacenters and driven the evolution of major Internet services such as Windows Live, Hotmail, and Bing. We’ve taken all that we’ve learned from this unparalleled experience and put it into the DNA of our private cloud solutions. Go beyond virtualization—and unnecessary per-VM licensing—and proceed with confidence in building a secure and manageable private cloud that delivers best-in-class performance for Microsoft workloads (including Exchange, SQL Server and SharePoint), deep management integration, and compelling economics.

Cloud on Your Terms

The move to cloud computing involves more than just building a private cloud. The undeniable benefits of public cloud computing – on-demand scalability, flexibility, and economics, to name a few—also promise significant competitive advantages. The challenge is to leverage your existing investments, infrastructure, and skill sets to build the right mix of private and public cloud solutions for your business—one that will work for you today and in the future.

With Microsoft, you have the freedom to choose. Our solutions are built to give you the power to construct and manage clouds across multiple datacenters, infrastructures, and service providers—on terms that you control. Because Microsoft solutions share a common set of management, identity, virtualization, and development technologies, you can distribute IT across physical, virtual, and cloud computing models. That means you can keep a handle on compliance, security, and costs. And you can let your business needs drive your IT strategy, instead of having IT limit your options.

Microsoft is the sponsor of TechNet Spotlight.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.


<Return to section navigation list>

Cloud Computing Events

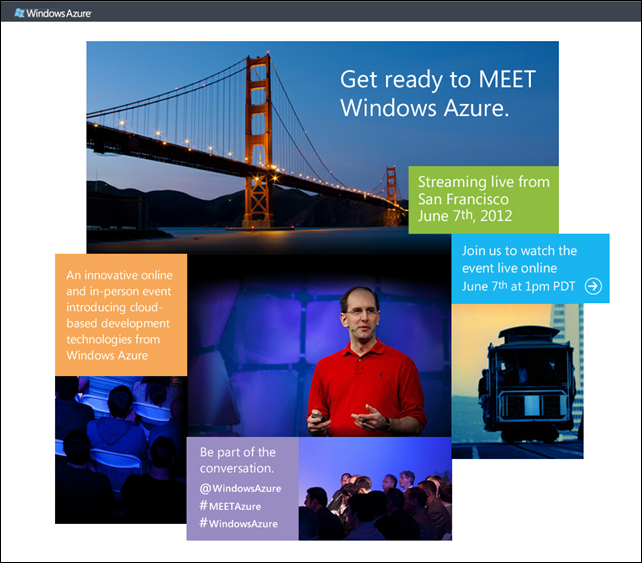

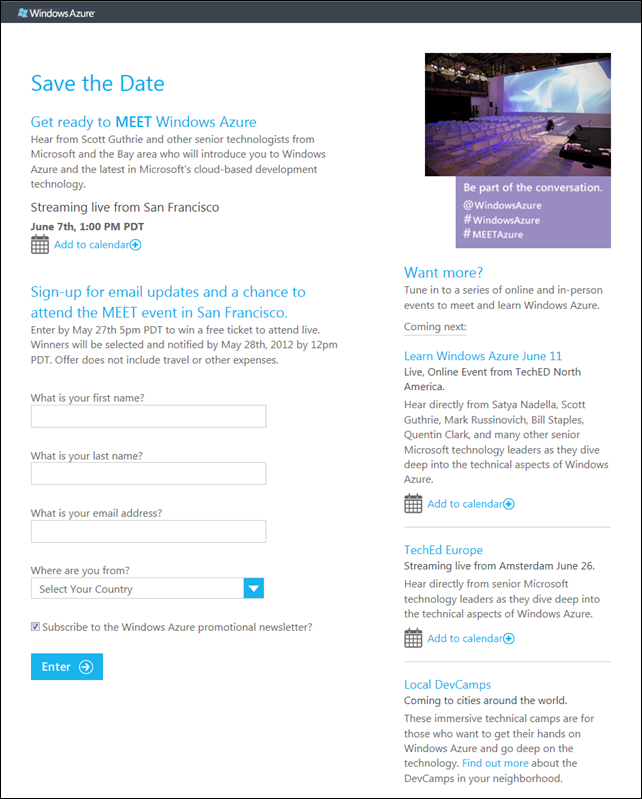

My (@rogerjenn) “Meet Windows Azure” Event Scheduled for 6/7/2012 in San Francisco post of 5/15/2012 appears as follows:

The Windows Azure Team announced on 5/15/2012 “An innovative online and in-person event introducing cloud-based development technologies from Windows Azure” on June 7, 2012 at 1 PM PDT. Click here to open the landing billboard:

Register here to attend online and for a chance for a ticket to attend in person:

Hope to See You There …

Rick Garibay (@rickggaribay) announced PCSUG.org June Meeting Featuring Glenn Block on Node.js on 5/16/2012:

You asked for it, and here it is.

As a user group that is exclusively focused on distributed computing, the appeal of Node.js to a bunch of messaging guys is obvious. Node.js is new, fascinating and seductive.

After much excitement and buzz around Node.js in general and on Windows Azure in particular, PCSUG.org is thrilled to kick off the summer with Glenn Block, Senior Program Manager on the Node.js SDK for Windows Azure team.

Joining us from Redmond, Glenn will be sharing the latest on what the Node.js SDK for Windows Azure has to offer, whether you are new to Node.js development or a seasoned veteran.

In addition, our own Developer Evangelist, J. Michael Palermo will help kick things off with a discussion on what the back-end developer needs to know about HTML5 and Windows 8.

Below are the agenda and logistics. We hope you'll join us for a great opportunity to learn about what Node.js on Windows Azure has to offer as well as network and connect with other members of the Phoenix developer community.

6:00 to 8:00 pm: "Unlock your Inner Node.js in the Cloud with Windows Azure" with Glenn Block, Senior Program Manager, Microsoft Corporation

If I told you that you can build node.js applications in Windows Azure would you believe me? Come to this session and I’ll show you how. You’ll see how to take those existing node apps and easily deploy them to Windows Azure from any platform. You’ll see how you can make your node apps more robust by leveraging Azure services like storage and service bus, all of which are available in our new “azure” npm module. You’ll also see how to take advantage of cool tools like socket.io for WebSockets, node-inspector for debugging and Cloud9 for an awesome online development experience.

About Glenn Block

Glenn is a PM at Microsoft working on support for node.js in Windows and Azure. Glenn has a breadth of experience both inside and outside Microsoft developing software solutions for ISVs and the enterprise. Glenn has been a passionate supporter of open source and has been active in involving folks from the community in the development of software at Microsoft. This has included shipping products under open source licenses, as well as assisting other teams looking to do so. Glenn is also a lover of community and a frequent speaker at local and international events and user groups.

5:30 to 6:00 pm: “What the back-end developer needs to know about HTML5 and Windows 8” with J. Michael Palermo, Senior Developer Evangelist, Microsoft Corporation

Understanding how client applications use and access data is essential for structuring and providing data through services. And just as back-end solutions evolve, so does the client. In this session, you will see how a Windows 8 Metro Style application written in JavaScript and HTML5 is developed to access data via REST APIs and web sockets.

About J. Michael Palermo:

J. “Michael” Palermo IV is a Developer Evangelist with Microsoft. In his years prior to joining Microsoft, Michael served as a Microsoft Regional Director and MVP. Michael has authored several technical books and has published online courses with Pluralsight on HTML5 technologies. Michael continues to share his passion for software development by speaking at developer events around the world.

Registration is required. Please visit pcsug.org for more information and to register for this event: http://pcsug.org/Home/Events

K. Scott Morrison (@KScottMorrison) announced APIs, Cloud and Identity Tour 2012: Three Cities, Two Talks, Two Panels and a Catalyst on 5/15/2012:

On May 15-16 2012, I will be at the Privacy Identity Innovation (pii2012) conference held at the Bell Harbour International Conference Center in Seattle. I will be participating on a panel moderated by Eve Maler from Forrester, titled Privacy, Zero Trust and the API Economy. It will take place at 2:55pm on Tuesday, May 15th:

The Facebook Connect model is real, it’s powerful, and now it’s everywhere. Large volumes of accurate information about individuals can now flow easily through user-authorized API calls. Zero Trust requires initial perfect distrust between disparate networked systems, but are we encouraging users to add back too much trust, too readily? What are the ways this new model can be used for “good” and “evil”, and how can we mitigate the risks?

On Thursday May 17 at 9am Pacific Time, I will be delivering a webinar on API identity technologies, once again with Eve Maler from Forrester. We are going to talk about the idea of zero trust with APIs, an important stance to adopt as we approach what Eve often calls the coming identity singularity–that is, the time when identity technologies and standards will finally line up with real and immediate need in the industry. Here is the abstract for this webinar:

Identity, Access & Privacy in the New Hybrid Enterprise

Making sense of OAuth, OpenID Connect and UMA

In the new hybrid enterprise, organizations need to manage business functions that flow across their domain boundaries in all directions: partners accessing internal applications; employees using mobile devices; internal developers mashing up Cloud services; internal business owners working with third-party app developers. Integration increasingly happens via APIs and native apps, not browsers. Zero Trust is the new starting point for security and access control and it demands Internet scale and technical simplicity – requirements the go-to Web services solutions of the past decade, like SAML and WS-Trust, struggle to solve. This webinar from Layer 7 Technologies, featuring special guest Eve Maler of Forrester Research, Inc., will:

- Discuss emerging trends for access control inside the enterprise

- Provide a blueprint for understanding adoption considerations

You Will Learn

- Why access control is evolving to support mobile, Cloud and API-based interactions

- How the new standards (OAuth, OpenID Connect and UMA) compare to technologies like SAML

- How to implement OAuth and OpenID Connect, based on case study examples

- Futures around UMA and enterprise-scale API access

You can sign up for this talk at the Layer 7 Technologies web site.

Next week I’m off to Dublin to participate in the TMForum Management World 2012. I wrote earlier about the defense catalyst Layer 7 is participating in that explores the problem of how to manage clouds in the face of developing physical threats. If you are at the show, you must drop by the Forumville section on the show floor and have a look. The project results are very encouraging.

I’m also doing both a presentation and participating on a panel. The presentation title is API Management: What Defense and Service Providers Need to Know. Here is the abstract:

APIs promise to revolutionize the integration of mobile devices, on-premise computing and the cloud. They are the secret sauce that allows developers to bring any systems together quickly and efficiently. Within a few years, every service provider will need a dedicated API group responsible for management, promotion, and even monetization of this important new channel to market. And in the defense arena, where agile integration is an absolute necessity, APIs cannot be overlooked.

In this talk, you will learn:

- Why APIs are revolutionizing Internet communications

  - And making it more secure

- Why this is an important opportunity for you

- How you can successfully manage an API program

- Why developer outreach matters

- What tools and technologies you must put in place

This talk takes place at the Dublin Conference Centre on Wed May 23 at 11:30am GMT.

Finally, I’m also on a panel organized by my friend Nava Levy from Cvidya. This panel is titled Cloud adoption – resolving the trust vs. uptake paradox: Understanding and addressing customers’ security and data portability concerns to drive uptake.

Here is the panel abstract:

As cloud services continue to grow 5 times faster than traditional IT, it seems that concerns regarding security and data portability are also on the rise. In this session we will explain the roots of this paradox and the opportunities that arise by resolving these trust issues. By examining the different approaches other cloud providers utilize to address these issues, we will see how service providers, by properly understanding and addressing these concerns, can use trust concerns as a competitive advantage against many cloud providers who don’t have carrier-grade trust as one of their core competencies. We will see that by addressing fraud, security, data portability and governance risks head-on, not only will the uptake of cloud services rise to include mainstream customers and conservative verticals, but the type of data and processes that migrate to the cloud will also become more critical to the customers.

The panel is on Thursday, May 24 at 9:50am GMT.

A bit short notice on pii2012, no?

Paul Miller (@PaulMiller) reported CloudCamp reaches Leeds on 14 June on 5/16/2012:

The global CloudCamp movement continues to grow, with events over the next few weeks in Denmark, Germany, Nigeria, Ghana, Kenya, and across the United States. And now, I’m very pleased to announce that the English city of Leeds is joining the party.

CloudCamp events have been taking place in the UK for years, and the London gatherings have picked up real momentum. Outside London, we’ve seen a few events in Warrington, Newcastle, and Edinburgh. We believe that the time is now right for something more regular; a place in which the cloud-building, cloud-using, cloud-interested and cloud-exploring can come together for talk, beer, pizza and more… without having to jump on a train to the deep south.

CloudCamps are interesting events, with a real emphasis on informality. I’ve attended several around the world, and am always impressed by the energy in the room, and by the welcome extended to newcomers. As the main CloudCamp site describes,

“CloudCamp is an unconference where early adopters of Cloud Computing technologies exchange ideas. With the rapid change occurring in the industry, we need a place we can meet to share our experiences, challenges and solutions. At CloudCamp, you are encouraged you to share your thoughts in several open discussions, as we strive for the advancement of Cloud Computing. End users, IT professionals and vendors are all encouraged to participate.”

Jeremy Jarvis at Brightbox and Karyn Fleeting and Joel Turner at Tinderbox Media have been driving this event forward, and they’ve invited me on board to help out. I also get to be MC on the night.

We’ve got speakers and sponsors committed, with more of both to come. If you think you should be one of those doing the speaking or the sponsoring, do let us know.

So, if you like talking Cloud, if your boss has ordered you to learn Cloud, or if you’re just keen to understand a little more about what this Cloud thing can do for you, stick the evening of 14 June in your diary, sign up (for free) on Eventbrite, and come along to the Hilton DoubleTree in Leeds for an evening of fun, learning, beer, and more.

See you there!

Image of the County Arcade in Leeds by Flickr user Francisco Perez

Tim Huckaby (@TimHuckaby) and Michael Collier (@MichaelCollier) produced Bytes by MSDN May 15: Michael Collier on 5/15/2012:

Join Tim Huckaby, Founder of InterKnowlogy and Actus Interactive Software, and Michael Collier, National Architect for Neudesic, as they discuss Windows Azure and Windows Phone. Michael raves about helping customers with their cloud projects and shares his insights into the marriage of cloud and mobility in a cost-effective way, as well as his favorite feature, Web Roles.

Another great bytes interview! Get Free Cloud Access: Window Azure MSDN Benefits | 90 Day Azure Trial


<Return to section navigation list>

Other Cloud Computing Platforms and Services

Alex Williams (@alexwilliams) reported SAP Announces Free HANA Developer Images on Amazon Web Services in a 5/16/2012 post to the SiliconANGLE blog:

Over the past week, SAP and Amazon Web Services announced a number of alliances. Earlier this week, SAP said it would sell Afaria, its mobile management software, on the AWS Marketplace. Last Friday, SAP and AWS announced that SAP customers can now deploy their SAP solutions on Amazon EC2 instances in production and non-production environments, on both Linux and Windows.

Now comes the news that developers may now set up a HANA database on Amazon Web Services at no cost. This is huge. HANA is the in-memory database that SAP is positioning to compete directly with Oracle and SAP.

It’s a major coup for the SAP Mentor community, and people like Vijay Vijayasankar, John Applebee, Jon Reed and Dennis Howlett, who have been pushing SAP for the past two years to take this kind of step.

It’s noteworthy, too, as SAP is now without question the most developer-friendly enterprise technology company in the market. [Neither] IBM nor Oracle nor HP can match right now the level of commitment that SAP is making to developers.

Developers who sign up are able to run their own pre-configured HANA instance to create their data analysis application. The signup page includes detailed instructions for the setup.

SAP is making three different sizes available for developers. As noted on the SAP community network site, you can use the AWS pricing calculator, which is pre-configured for 4 hours of daily usage on the smallest available size.

Derrick Harris (@derrickharris) reported Calvin: A fast, cheap database that isn’t a database at all in a 5/16/2012 post to GigaOm’s Structure blog:

A team of Yale researchers think they have developed the cure for Oracle and IBM dominance in the world of database performance, and it isn’t even technically a database. In a blog post Wednesday morning written by team members Daniel Abadi and Alexander Thomson (and in a related research paper), the two researchers detail Calvin, a “transaction scheduling and replication coordination service” that they think can level the playing field between high-cost distributed relational databases and less-expensive, but limited, NoSQL and NewSQL databases.

The researchers aren’t dismissing either NoSQL or NewSQL, but rather attempting to address the type of use case on which the popular TPC-C database performance benchmark is based. That benchmark, which simulates an online retail application, requires ACID compliance (which NoSQL options can’t meet) and the ability to update records across database shards in the same transaction (something the authors claim NewSQL databases can’t do).

Why not just stick with Oracle Database and IBM DB2? Cost, especially at scale. As Abadi and Thomson point out in the blog, an Oracle system capable of handling 500,000 transactions per second costs $30 million in hardware and software expenditures.

So, what is Calvin? In a nutshell, it’s software that sits above a scale-out storage system and turns it into a transaction-processing system by capturing, scheduling and executing transactions. Here’s how Abadi and Thomson describe it in the blog post, although the paper goes into much more detail.

Calvin requires all transactions to be executed fully server-side and sacrifices the freedom to non-deterministically abort or reorder transactions on-the-fly during execution. In return, Calvin gets scalability, ACID-compliance, and extremely low-overhead multi-shard transactions over a shared-nothing architecture. In other words, Calvin is designed to handle high-volume OLTP throughput on sharded databases on cheap, commodity hardware stored locally or in the cloud. … Calvin allows user transaction code to access the data layer freely, using any data access language or interface supported by the underlying storage engine (so long as Calvin can observe which records user transactions access).
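The trade-off described in that quote (fix a global transaction order up front, then execute it deterministically everywhere) can be illustrated with a minimal Python sketch. This is not Calvin's code or API: the `Transaction`, `deposit`, `transfer` and `replay` names, the shard labels and the account data are all hypothetical, and a single in-memory dictionary stands in for storage that would really be spread across separate shards.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Key = Tuple[str, str]      # (shard name, record key); both are hypothetical labels
Values = Dict[Key, int]


@dataclass
class Transaction:
    name: str
    keys: List[Key]                     # records declared up front so they can be observed
    logic: Callable[[Values], Values]   # deterministic function of the values read


def deposit(key: Key, amount: int) -> Transaction:
    return Transaction(f"deposit {amount}", [key],
                       lambda v: {key: v[key] + amount})


def transfer(src: Key, dst: Key, amount: int) -> Transaction:
    def logic(v: Values) -> Values:
        if v[src] < amount:
            return {}                   # deterministic no-op: depends only on the data read
        return {src: v[src] - amount, dst: v[dst] + amount}
    return Transaction(f"transfer {amount}", [src, dst], logic)


def replay(log: List[Transaction]) -> Values:
    """Apply an agreed-upon log strictly in order, with no reordering or random aborts."""
    store: Values = {}
    for txn in log:
        reads = {k: store.get(k, 0) for k in txn.keys}
        store.update(txn.logic(reads))
    return store


ALICE: Key = ("shard-1", "alice")
BOB: Key = ("shard-2", "bob")

# The "sequencer" in this sketch is just this list: one global order fixed before execution.
log = [deposit(ALICE, 100), transfer(ALICE, BOB, 30),
       transfer(BOB, ALICE, 50), transfer(ALICE, BOB, 70)]

replica_a = replay(log)
replica_b = replay(log)
```

Because every replica replays the same log with the same deterministic logic, `replica_a` and `replica_b` end up identical without coordinating during execution, even though the transfers touch records on two different (simulated) shards.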

Calvin, the researchers claim, can match Oracle’s 500,000 transaction-per-second performance running on commodity servers on Amazon EC2. The cost of the resources to run their benchmark was only $300. (Although, obviously, that doesn’t account for the cost of running the system continuously for years, potentially. Commodity physical hardware might be a better bet in the long term.)

Ultimately, the researchers conclude, for transactions that can execute entirely on the server side, Calvin could be the foundation for an end to the current OLTP regime. The world certainly is hungry for something that can do what Oracle and IBM can do, but that costs what NoSQL databases cost (i.e., nothing, often). And Abadi has some distributed database street cred (the HadoopDB project he led is the foundation of Hadapt’s Hadoop-and-data-warehouse hybrid), so, especially if it’s open sourced, one can’t dismiss Calvin out of hand.

Feature image courtesy of Shutterstock user Semisatch.


Jeff Barr (@jeffbarr) announced Domain Verification for the Amazon Simple Email Service on 5/15/2012:

The Amazon Simple Email Service (SES) makes it easy and cost-effective for you to send bulk or transactional email messages.

As I described in my introductory post (Introducing the Amazon Simple Email Service), you must verify the email address (or addresses) that you plan to use to send messages. The initial verification process must be repeated for each email address.

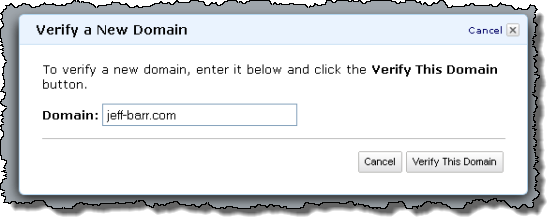

Today we are introducing a new SES feature. You can now verify an entire domain, and then send email from any address in that domain. In addition to saving you time and effort, this new feature now allows you to use Amazon SES in situations where you don't accept email at the From address, or when you don't know the From address ahead of time.

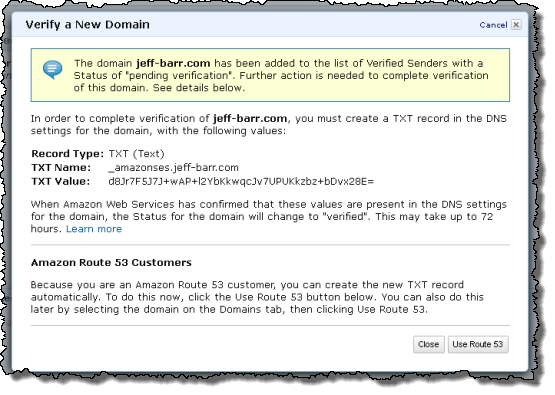

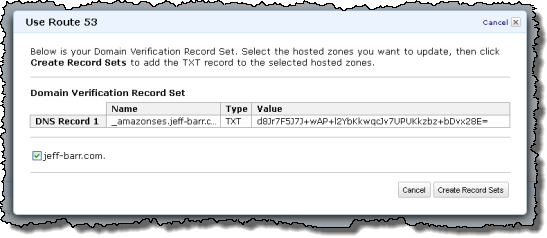

You verify a domain by creating a TXT record in the domain's DNS record using information that we provide you as part of the domain verification process. Most (not all) DNS providers allow you to create TXT records.
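As a rough illustration of what that TXT record looks like, the following Python sketch formats a zone-file style line and checks a list of TXT values for the token. The helper names, the 1800-second TTL and the sample token are hypothetical, and the `_amazonses` record-name prefix is an assumption not stated in the post; the record name and value shown in the AWS Management Console are the authoritative ones.

```python
def ses_txt_record(domain: str, token: str, ttl: int = 1800) -> str:
    """Return a zone-file style TXT line for a domain verification token.

    The "_amazonses." prefix is an assumption; copy the exact record name
    and value from the console when creating the real record.
    """
    name = "_amazonses." + domain.strip().rstrip(".").lower() + "."
    return f'{name} {ttl} IN TXT "{token}"'


def token_published(txt_values: list, token: str) -> bool:
    """Check whether TXT values already fetched from DNS include the token."""
    return any(value.strip('"') == token for value in txt_values)


# Hypothetical domain and token, for illustration only.
print(ses_txt_record("example.com", "abc123"))
```

The second helper only inspects values you have already looked up with whatever DNS tool you prefer; it performs no network access itself.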

If you are using Amazon Route 53 to provide DNS service for your domain, the process is very straightforward; you can verify the domain using the AWS Management Console. Here's a tour...

The first step is to visit the SES tab of the console and add your domain to the Domains tab in the Verified Senders section:

If you are not using Route 53, the next step is to update your domain's DNS settings using the TXT record information displayed in the console:

If you are using Route 53, push the Use Route 53 button and select the domains and subdomains that you want to verify:

Either way (Route 53 or your own DNS provider), Amazon SES will verify your domain within 72 hours. Once the domain has been verified, you'll receive an email and the domain will be marked as "verified" in the console.

You can now send email from any address in the domain!

If you would like to learn more about domain verification, please sign up for the June 12th webinar: Using Domain Verification with Amazon Simple Email Service. The webinar is free but space is limited!

We have also changed the limit on the number of verified addresses and domains allowed per AWS account from 100 to 1000.

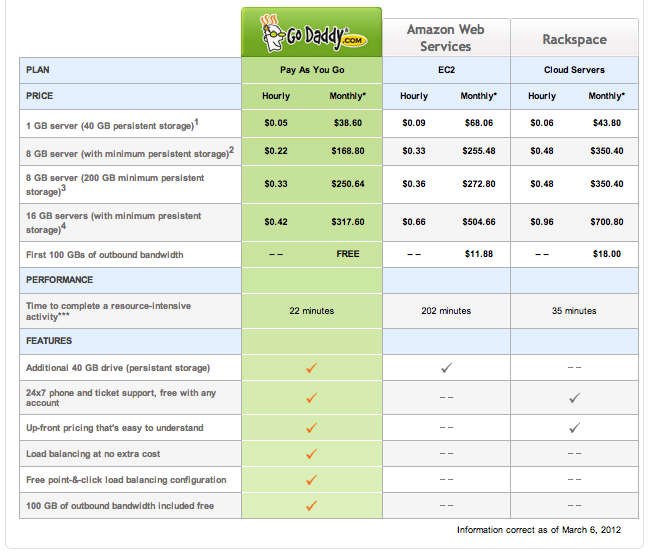

Martin Tantow (@mtantow) reported GoDaddy Introduces Cloud Servers, Competes with AWS and Rackspace in a 5/14/2012 post to the CloudTimes blog:

GoDaddy recently announced its newest offering, Cloud Servers, and now competes for the first time with companies like Amazon Web Services and Rackspace. (See an exclusive interview with GoDaddy CEO Warren Adelman below.)

Apart from a very competitive price point, GoDaddy’s Cloud Servers offering is designed to give its customers a fast plug-and-play installation. It combines convenient control panels, a strong infrastructure and frequently used features such as firewalls and load balancers.

As the Internet expands and websites become more resource-intensive, many businesses are moving from traditional hosting to more advanced, scalable servers with flexible network options. Go Daddy Cloud Servers are designed for companies looking to take complete control of their Web hosting environment.

In an exclusive interview with CloudTimes (see below), Warren Adelman, CEO of GoDaddy, said that “Cloud Servers are a natural progression – offering shared hosting with ‘cloudy’ attributes like elasticity, offering virtual servers and dedicated servers. Our new product is the next piece in the hosting landscape – offering Infrastructure-as-a-Service with Cloud Servers that include load balancing, network configuration and easy spinning up and down of environments.”

In an initial test of the service, we have been quite impressed with its intuitive UI and quick setup.

Dashboard Screenshot

Machine Configuration

CEO Series Interview

Watch the exclusive interview with GoDaddy CEO Warren Adelman about the company’s new cloud computing offering. Warren was interviewed by Martin Tantow, Founder of CloudTimes, as part of the CloudTimes CEO Series.

<Return to section navigation list>
