Windows Azure and Cloud Computing Posts for 5/10/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Access Control, Identity and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

Gaurav Mantri (@gmantri) continued his series with Comparing Windows Azure Blob Storage and Amazon Simple Storage Service (S3)–Part II on 5/11/2012:

In part I of this blog post, we started comparing Windows Azure Blob Storage and Amazon Simple Storage Service (S3). We covered basic concepts and compared pricing and features of Blob Container and Buckets. You can read that blog post here: http://gauravmantri.com/2012/05/09/comparing-windows-azure-blob-storage-and-amazon-simple-storage-service-s3part-i/

In this post, we’re going to compare Blobs and Objects from a features point of view.

As in the previous post, we’re going to refer to Windows Azure Blob Storage as WABS and to Amazon Simple Storage Service as AS3 in the rest of this blog post for the sake of brevity.

Concepts

Before we talk about these two services in greater detail, I think it is important to get some concepts clear about blobs and objects. This section is taken verbatim from the previous post.

Blobs and Objects: Simply put, blobs (in WABS) and objects (in AS3) are the files in your cloud file system. They go into blob containers and buckets respectively.

A few comments about blobs and objects:

- There is no limit on the number of blobs and objects you can store. While AS3 does not tell you the maximum storage capacity allocated for you, the total number of blobs in WABS is restricted by the size of your storage account (100 TB currently).

- The maximum size of an object you can store in AS3 is 5 TB, whereas the maximum size of a blob in WABS is 1 TB.

- In WABS, there are two kinds of blobs: Block Blobs and Page Blobs. Block Blobs are suitable for streaming payloads (e.g., images, videos, documents) and can be up to 200 GB in size. Page Blobs are suitable for random read/write payloads and can be up to 1 TB in size. A common use case for a page blob is a VHD mounted as a drive in a Windows Azure role. In AS3, there is no such distinction. To learn more about block blobs and page blobs, click here.

- Both systems are quite feature rich as far as operations on blobs and objects are concerned. You can copy, upload, download and perform other operations on them.

- While both systems allow you to protect your content from unauthorized access, the ACL mechanism is much more granular in AS3, where you can set a custom ACL on each object in a bucket. In WABS, it is at the blob container level. …
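The size limits above translate into a simple decision when you pick a storage target. The following is a minimal sketch, assuming the May 2012 limits quoted in the list (they are historical, not current service limits) and treating GB/TB as binary units; the function names are hypothetical and not part of any Azure or AWS SDK.

```python
# Limits as quoted in the comparison above (May 2012); treat them as
# historical values, not current service limits. GB/TB are binary units here.
GB = 1024 ** 3
TB = 1024 ** 4

BLOCK_BLOB_MAX = 200 * GB  # WABS block blob
PAGE_BLOB_MAX = 1 * TB     # WABS page blob
S3_OBJECT_MAX = 5 * TB     # AS3 object


def choose_wabs_blob_type(size_bytes: int, random_access: bool) -> str:
    """Hypothetical helper: page blobs for random read/write payloads
    (such as a VHD mounted as a drive), block blobs for streaming payloads."""
    kind = "page" if random_access else "block"
    limit = PAGE_BLOB_MAX if random_access else BLOCK_BLOB_MAX
    if size_bytes > limit:
        raise ValueError(f"{size_bytes} bytes exceeds the {kind} blob limit")
    return kind


def fits_in_as3(size_bytes: int) -> bool:
    """AS3 has no block/page distinction, only a per-object size limit."""
    return 0 <= size_bytes <= S3_OBJECT_MAX


print(choose_wabs_blob_type(5 * GB, random_access=False))   # block
print(choose_wabs_blob_type(100 * GB, random_access=True))  # page
print(fits_in_as3(2 * TB))                                  # True
```

A 300 GB video, for example, would fit in a single AS3 object but exceed the quoted WABS block blob limit, so the helper raises instead of silently picking a blob type.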

Gaurav continues with a detailed description of the differences between WABS and AS3. Read more.

Full disclosure: I have received and use gratis copies of Cerebrata’s Windows Azure tools. Free licenses for these tools are offered by Red Gate Software to all Windows Azure MVPs and Insiders.

Alejandro Jezierski (@alexjota) described Hadoop on Azure and importing data from the Windows Azure Marketplace in a 5/10/2012 post:

In a previous post I briefly introduced Hadoop and gave an overview of its components. In this post I’ll put my money where my mouth is and actually do stuff with Hadoop on Azure. Again, one step at a time.

So I went to the Marketplace, found some free demographic data from the UN to mess around with, and imported it into my Hadoop on Azure cluster via the portal. Before you blindly go about importing data, you can first sample the goods. In the Windows Azure Marketplace you can subscribe to published data and build a query that interests you. The most important thing here is to take note of your primary account key and the query URL.

Take note of the query and passkey. Click on Show.

Sample the goods, build your query.

Once we have the query we want, we go to the Hadoop on Azure portal, select Manage Cluster and then select DataMarket. Here we enter the user name (from your email), the passkey obtained earlier, the query URL, and the name of the Hive table through which we’ll access the data after the import is done. Note: I replaced the percent-encoded spaces and quotation marks in the query URL to avoid Bad Request errors. The encoded characters were there because I had copied and pasted the query straight out of the Marketplace. It took a couple of tries until I figured it out, oh well. Run the query by selecting Import Data.
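If you hit the same Bad Request errors, decoding the copied URL before pasting it is one way to replace the encoded characters. This is a minimal sketch using Python's standard `urllib.parse.unquote`; the URL is a hypothetical placeholder (not a real DataMarket endpoint), and whether the import dialog expects exactly this decoded form is an assumption based only on the author's experience above.

```python
from urllib.parse import unquote

# Hypothetical query URL: host, path and filter are placeholders. It contains
# percent-encoded spaces (%20) and single quotes (%27), as a query copied
# out of a browser often does.
copied = ("https://api.example.com/UN/Demographics/Population"
          "?$filter=Country%20eq%20%27Argentina%27&$top=100")

decoded = unquote(copied)  # replaces %20 with ' ' and %27 with "'"
print(decoded)
# https://api.example.com/UN/Demographics/Population?$filter=Country eq 'Argentina'&$top=100
```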

Now we can go to the Hive interactive console and take a look at the results. We can type the show tables command for a list of the tables, and make sure ours is there.

Keep in mind that although this looks like a table, has columns like a table, and can be queried as if the data were stored in a table, it isn’t one. When we create tables in HiveQL, partition them and load data, HDFS files are actually created and stored (and replicated and distributed across the nodes).

Now we can go on and type our HiveQL query. Remember what’s happening under the hood. This query is creating and executing a MapReduce job. I’m also a newbie in the MapReduce world, so I trust Gert Drapers when he says a simple join in HiveQL is equivalent to writing a much more complex bunch of code, and that Facebook’s jobs are mostly written in HiveQL. That means something, doesn’t it?
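To see why a one-line HiveQL join saves so much effort, here is a minimal single-process sketch of a reduce-side join written directly in map/shuffle/reduce style. It assumes two hypothetical tables, `countries(code, name)` and `population(code, year, total)`, with made-up values (not UN figures); in HiveQL the same result would be roughly `SELECT c.name, p.year, p.total FROM countries c JOIN population p ON (c.code = p.code)`. This illustrates the general technique, not the job Hive actually generates.

```python
from collections import defaultdict

# Made-up sample rows standing in for two Hive tables; not real UN figures.
countries = [("AR", "Argentina"), ("UY", "Uruguay")]
population = [("AR", 2010, 100), ("UY", 2010, 20), ("AR", 2011, 101)]


def map_phase():
    """Emit (join key, tagged record) so the reducer knows each record's source."""
    for code, name in countries:
        yield code, ("country", name)
    for code, year, total in population:
        yield code, ("population", (year, total))


def shuffle(pairs):
    """Group values by key; Hadoop does this between the map and reduce phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """For each key, pair every country record with every population record."""
    for code in sorted(groups):
        names = [v for tag, v in groups[code] if tag == "country"]
        rows = [v for tag, v in groups[code] if tag == "population"]
        for name in names:
            for year, total in rows:
                yield name, year, total


joined = list(reduce_phase(shuffle(map_phase())))
print(joined)
# [('Argentina', 2010, 100), ('Argentina', 2011, 101), ('Uruguay', 2010, 20)]
```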

So we’ve executed our simple HiveQL query and seen the results. We can always go back to the job history for that query, too (you can’t miss that big orange button in the main page).

Job History.

So, we’ve imported data from the Marketplace and run a simple HiveQL query. In a future post we can go through the samples that are included when you set up your cluster and mess around with the JavaScript console. Refer to the previous post for additional links and resources.

Alejandro Jezierski (@alexjota) posted Big Data, Hadoop on Azure and the elephant in the room on 5/8/2012:

Seriously. There’s an elephant in the room, so I’ve no choice but to talk about it. I’m new to Big Data and newer to Hadoop on Azure, so this post (and future ones as well) will serve as an introduction to the underlying concepts of big data and my experience on using Hadoop on Azure, one step at a time.

Big Data

So we generate massive amounts of data. Massive. Structured and unstructured, from devices, sensors, feeds, tweets, blogs; everything we do in our daily lives generates data at some point. What do we do with it besides store it? Ignore it? Throw it away? We could. But data is there for a reason. We can extract valuable information from it: we can discover new business insights and interesting patterns, and, most important, we could save lives… so yes, it’s a big deal. Ok, so we’ll leave the processing to multimillion-dollar companies; they can afford it, right? One misconception is that we need massive, state-of-the-art infrastructure to be able to handle big data. We can set up nodes on affordable, commodity hardware and achieve the same results. Nice. But I still need to maintain all these boxes, and they WILL fail eventually…

Hadoop is a scalable, highly fault-tolerant, open source MapReduce solution. It runs on commodity hardware, so there is an economic advantage to it.

The main components of Hadoop are illustrated in the following diagram.

HDFS: Hadoop Distributed File System. It’s the storage mechanism used by Hadoop applications. Among other things, it stores replicas of data blocks on the nodes of your cluster to aid availability, reliability, performance, etc.

Map Reduce: A programming framework that allows you to create mappers and reducers. The framework constructs a set of jobs, hands them over to the nodes for processing, and keeps track of them. The map operation lets you take the processing to where the data is stored in the distributed file system. The reduce operation summarizes the results from the mappers.

Hive: it provides a few things, such as the ability to give data a structure through the use of tables. It also defines a SQL-oriented language (QL, or HiveQL); in other words, MapReduce for mortals. The magic behind this is that a Hive query is translated into a MapReduce job whose results are presented back to the user. The need for Hive arose because expressing even a relatively simple query as plain MapReduce jobs resulted in a cumbersome coding experience.

Sqoop: A bridge between Hadoop and the relational world. Because we also live in a relational world, right? We can import data from SQL Server, let Sqoop store the data in HDFS, and make the data available to our MapReduce jobs as well.
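The mapper/reducer split described for Map Reduce can be illustrated with the classic word count. This is a minimal single-process sketch in plain Python; on a real Hadoop cluster the framework would run many mapper instances next to the HDFS blocks and perform the shuffle across nodes, which this sketch only imitates with a dictionary.

```python
from collections import defaultdict


def mapper(line):
    """Map: emit (word, 1) for every word in one input record."""
    for word in line.lower().split():
        yield word, 1


def reducer(word, counts):
    """Reduce: summarize all values emitted for one key."""
    return word, sum(counts)


def run_job(lines):
    """Run map, shuffle and reduce in a single process."""
    grouped = defaultdict(list)
    for line in lines:                   # each line plays the part of an input split
        for key, value in mapper(line):
            grouped[key].append(value)   # shuffle: group intermediate pairs by key
    return dict(reducer(k, v) for k, v in grouped.items())


print(run_job(["The elephant in the room", "the elephant"]))
# {'the': 3, 'elephant': 2, 'in': 1, 'room': 1}
```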

Hadoop on Azure is Microsoft’s Hadoop distribution that runs on Windows, plus a hosting environment on Windows Azure. I recently got invited to use the CTP version of Hadoop on Azure (I did ask for an invitation a few weeks ago) and started to get familiar with its features. The huge benefit to this is that I don’t need to maintain all those nodes, I have my own cluster now, and I’m ready to handle massive amounts of data. Tada!

In future posts I’ll be showing how to execute a simple MapReduce job, or how to get data from the Windows Azure Marketplace and query the data using Hive. The following links lead you to useful resources if you are getting started with Hadoop on Azure.

Big Data, Big deal, video by Gert Drapers

Introduction to Hadoop on Azure, video by Wenming Ye

Hadoop on Azure portal, get invited!


SQL Azure Database, Federations and Reporting

Nathan Totten (@ntotten) and Nick Harris (@cloudnick) produced CloudCover Episode 80 - Getting Started with SQL Azure Data Sync Preview on 5/11/2012:

Join Nate and Nick each week as they cover Windows Azure. You can follow and interact with the show at @CloudCoverShow.

In this episode, we are joined by Cory Fowler and Scott Klein — Windows Azure Technical Evangelists — who demonstrate how to get started with the SQL Azure Data Sync Preview.

In the News:

- The Rock Paper Azure Challenge is Back For a Spring Fling!

- Real World Windows Azure: Interview with Adrian Gonzalez, Technology Manager for the San Diego County Public Safety Group

- Updates to Windows Azure Marketplace Offer More Flexibility and Opportunity

In the Tip of the Week, we discuss the Cloud Ninja Metering Block - an extensible and reusable software component designed to assist software developers with the metering of tenant resource usage in a multi-tenant solution on the Windows Azure platform.

Read the SQL Azure Data Sync Preview FAQ here.


Marketplace DataMarket, Social Analytics, Big Data and OData

My (@rogerjenn) Creating An Incremental SQL Azure Data Source for OakLeaf’s U.S. Air Carrier Flight Delays Dataset post of 5/8/2012 (updated 5/11/2012) begins:

• Updated 5/11/2012 with a correction regarding free databases for Windows Azure Marketplace DataMarket datasets.

- Background

- Creating the SQL Azure On_Time_Performance Table

- Creating an On-Premises SQL Server Clone Table

- Importing *.csv Data with the BULK INSERT Command

- Uploading Data to the SQL Azure Table with SQLAzureMW

- Calculating the Size of the SQL Azure Database and Checking for Upload Errors

- Conclusion

Background

My initial U.S. Air Carrier Flight Delays, Monthly dataset for the Windows Azure Marketplace DataMarket, which has been disabled, was intended to incorporate individual tables for each month of the years 1987 through 2012 (and later.) I planned to compare performance of datasets and Windows Azure blob storage as persistent data sources for Apache Hive tables created with the new Apache Hadoop on Windows Azure feature.

I used Microsoft Codename “Data Transfer” to create the first two of these SQL Azure tables, On_Time_Performance_2012_1 and On_Time_Performance_2012_2, from corresponding Excel On_Time_Performance_2012_1.csv and On_Time_Performance_2012_2.csv files in early May 2012. For more information about these files and the original U.S. Air Carrier Flight Delays, Monthly dataset see my Two Months of U.S. Air Carrier Flight Delay Data Available on the Windows Azure Marketplace DataMarket post of 5/4/2012.

Subsequently, I discovered that the Windows Azure Marketplace Publishing Portal had problems uploading the large (~500,000 rows, ~15 MB) On_Time_Performance_YYYY_MM.csv files. I was advised by Microsoft’s Group Program Manager for the DataMarket that the *.csv upload feature would be disabled to “prevent confusion.” For more information about this issue, see my Microsoft Codename “Data Transfer” and “Data Hub” Previews Don’t Appear Ready for BigData post updated 5/5/2012.

A further complication was the suspicion that editing the current data source to include each additional table would require a review by a DataMarket proctor. An early edit of one character in a description field had caused my dataset to be offline for a couple of days.

A workaround for the preceding two problems is to create an on-premises clone of the SQL Azure table with a RowID identity column and recreate the SQL Azure table without the identity property on the RowID column. Doing this permits using a BULK INSERT instruction to import new rows from On_Time_Performance_YYYY_MM.csv files into the local SQL Server 2012 table and then using George Huey’s SQL Azure Migration Wizard (SQLAzureMW) v3.8.7 or later to append the new data to a single On_Time_Performance SQL Azure table. Managing primary key identity values of an on-premises SQL Server table is safer and easier than with SQL Azure.
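The core of this workaround is that only the local table generates RowID values, and each upload appends just the rows above the cloud table's current maximum RowID. The following is a minimal Python sketch of that bookkeeping, assuming in-memory lists in place of the real tables; the names `LocalTable` and `rows_to_append` are hypothetical, and the actual process uses BULK INSERT and SQLAzureMW rather than code like this.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Row = Tuple[int, str]  # (RowID, payload); payload stands in for a flight-delay record


@dataclass
class LocalTable:
    """Stand-in for the on-premises clone, whose RowID column has the IDENTITY property."""
    rows: List[Row] = field(default_factory=list)
    next_id: int = 1

    def bulk_insert(self, records: List[str]) -> None:
        # As with an IDENTITY column, the table (not the input file)
        # assigns consecutive RowID values.
        for record in records:
            self.rows.append((self.next_id, record))
            self.next_id += 1


def rows_to_append(local: LocalTable, cloud: List[Row]) -> List[Row]:
    """Rows the cloud table (RowID without IDENTITY) has not received yet."""
    high_water = max((row_id for row_id, _ in cloud), default=0)  # like SELECT MAX(RowID)
    return [row for row in local.rows if row[0] > high_water]


local, cloud = LocalTable(), []
local.bulk_insert(["2012-01 a", "2012-01 b"])   # first month's file
cloud.extend(rows_to_append(local, cloud))
local.bulk_insert(["2012-02 a"])                # next month's file
cloud.extend(rows_to_append(local, cloud))
print([row_id for row_id, _ in cloud])          # [1, 2, 3]
```

Because the cloud table never generates keys itself, re-running an upload with the same high-water mark cannot create duplicate or out-of-sequence RowID values.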

• The downside of this solution is that maintaining access to the 1-GB SQL Azure Web database will require paying at least US$9.99 per month plus outbound bandwidth charges after your free trial expires. Microsoft provides up to four free SQL Azure 1-GB databases when you specify a new database on the Codename “Data Hub” Publishing Portal’s Connect page.

This post describes the process and T-SQL instructions for creating and managing the on-premises SQL Server [Express] 2012 databases, as well as incrementally uploading new data to the SQL Azure database.

My new US Air Carrier Flight Delays dataset on the Windows Azure Marketplace DataMarket and Microsoft Codename “Data Hub” have been updated to the new data source. The “Monthly” suffix has been removed.

Vitek Karas described OData V3 demo services in a 5/11/2012 post to the OData.org blog:

In April we shipped OData version 3 along with the 5.0 release of WCF Data Services. To make it easier for you to discover the new features, and for us to show you some of them, we’re now making available OData demo services that use the OData V3 protocol.

The new services are hosted side by side with the old demo services, which we didn’t change. The new demo services currently use the WCF Data Services 5.0.1-rc release, and we will update them to a newer version once it becomes available.

There are 3 demo services:

The read-only Demo service, which has an updated model to use some V3 features as described below, is hosted here:

http://services.odata.org/V3/OData/OData.svc/

The read-write Demo service, which is the exact same model as the read-only Demo service including new V3 features, is hosted here:

http://services.odata.org/V3/(S(readwrite))/OData/OData.svc/

The read-only Northwind service, which is the exact same model as the existing Northwind service, is hosted here:

http://services.odata.org/V3/Northwind/Northwind.svc/

Here are the V3 features we’ve enabled so far.

Actions

The Product type has a Discount action on it which takes a single parameter discountPercentage of type Edm.Int32. The action takes the Price of the product it’s applied to and decreases it by the percentage specified by the parameter. For example:

GET http://services.odata.org/V3/(S(plcxuejnllfvrrecpvqbehxz))/OData/OData.svc/Products(1)

Will return a product with Price: 3.5

POST http://services.odata.org/V3/(S(plcxuejnllfvrrecpvqbehxz))/OData/OData.svc/Products(1)/Discount HTTP/1.1
Content-Type: application/json;odata=verbose

{ "discountPercentage": 25 }

The response should be 204 No Content.

And now, again:

GET http://services.odata.org/V3/(S(plcxuejnllfvrrecpvqbehxz))/OData/OData.svc/Products(1)

Returns a product with Price: 2.625
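The arithmetic behind the two GET results is 3.5 × (1 − 25/100) = 2.625. This minimal Python sketch mirrors that calculation locally and builds (without sending) the POST that invokes the action, using only the standard `json` and `urllib.request` modules; `apply_discount` and `discount_request` are hypothetical helpers, and whether the session URL from the example still responds is not assumed.

```python
import json
from urllib.request import Request

# Session-scoped read-write URL taken from the example above.
PRODUCT = ("http://services.odata.org/V3/(S(plcxuejnllfvrrecpvqbehxz))"
           "/OData/OData.svc/Products(1)")


def apply_discount(price: float, discount_percentage: int) -> float:
    """Local model of the Discount action as described: lower Price by a percentage."""
    return price * (1 - discount_percentage / 100)


def discount_request(product_url: str, discount_percentage: int) -> Request:
    """Build (but do not send) the POST that invokes the action."""
    body = json.dumps({"discountPercentage": discount_percentage}).encode("utf-8")
    return Request(product_url + "/Discount", data=body, method="POST",
                   headers={"Content-Type": "application/json;odata=verbose"})


req = discount_request(PRODUCT, 25)
print(req.get_method(), req.full_url)
print(apply_discount(3.5, 25))  # 2.625
```

Passing `req` to `urllib.request.urlopen` would send it; a 204 No Content response would match the behavior described above.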

Spatial

The Supplier type has a property Location which is of type Edm.GeographyPoint. You can see it here:

http://services.odata.org/V3/OData/OData.svc/Suppliers(0)

Any and All

The service now supports any and all operators. For example:

http://services.odata.org/V3/OData/OData.svc/Categories?$filter=Products/any(p: p/Rating ge 4)
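When such a filter is built in code, the spaces inside the lambda expression need percent-encoding. This is a minimal sketch using Python's standard `urllib.parse.quote`; the helper name is hypothetical, and keeping `/`, `(`, `)` and `:` unencoded is a readability choice, not a requirement stated by the service. Swapping `any` for `all` would express the "every product" variant.

```python
from urllib.parse import quote

BASE = "http://services.odata.org/V3/OData/OData.svc"


def categories_with_product_rating_at_least(min_rating: int) -> str:
    """Build the any() query from the example for an arbitrary rating threshold."""
    # p is a lambda variable ranging over each Category's Products collection.
    expression = f"Products/any(p: p/Rating ge {min_rating})"
    # Percent-encode the spaces; keep '/', '(', ')' and ':' readable.
    return f"{BASE}/Categories?$filter={quote(expression, safe='/():')}"


print(categories_with_product_rating_at_least(4))
# http://services.odata.org/V3/OData/OData.svc/Categories?$filter=Products/any(p:%20p/Rating%20ge%204)
```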

Inheritance support

You can now address properties on derived types. The demo service doesn’t have a sample property like that yet, but you can try the new URL syntax with type cast anyway:

http://services.odata.org/V3/OData/OData.svc/Products/ODataDemo.Product

Patch support

You can send PATCH requests instead of MERGE. The behavior is identical otherwise.
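Because PATCH and MERGE behave identically here, a client can treat the verb as a parameter. This is a minimal sketch with Python's standard `urllib.request.Request`, which accepts arbitrary method names; `build_update` is a hypothetical helper, the headers are copied from the Prefer example in this post, and the request is built but not sent.

```python
import json
from urllib.request import Request

PRODUCT = ("http://services.odata.org/V3/(S(plcxuejnllfvrrecpvqbehxz))"
           "/OData/OData.svc/Products(1)")


def build_update(url: str, changes: dict, verb: str = "PATCH") -> Request:
    """Build (but do not send) a partial update; verb may be 'PATCH' or 'MERGE'."""
    if verb not in ("PATCH", "MERGE"):
        raise ValueError("partial updates use PATCH or MERGE")
    return Request(
        url,
        data=json.dumps(changes).encode("utf-8"),
        method=verb,
        headers={
            "Accept": "application/json;odata=verbose",
            "Content-Type": "application/json;odata=verbose",
            "DataServiceVersion": "3.0",
        },
    )


req = build_update(PRODUCT, {"Price": "3.5"})
print(req.get_method(), req.data)  # PATCH b'{"Price": "3.5"}'
```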

Prefer header support

You can specify a Prefer header in create or update requests and ask the server to either omit or include the payload. For example:

PATCH http://services.odata.org/V3/(S(plcxuejnllfvrrecpvqbehxz))/OData/OData.svc/Products(1) HTTP/1.1
Accept: application/json;odata=verbose
Content-Type: application/json;odata=verbose
Prefer: return-content
DataServiceVersion: 3.0;

{ "Price": "3.5" }

Responds with (trimmed for readability):

HTTP/1.1 200 OK
Content-Type: application/json;odata=verbose;charset=utf-8
Preference-Applied: return-content
DataServiceVersion: 3.0;

{"d":{
  "__metadata":{…},
  …
  "ID":1,
  "Name":"Milk",
  "Description":"Low fat milk",
  "ReleaseDate":"1995-10-01T00:00:00",
  "DiscontinuedDate":null,
  "Rating":3,
  "Price":"3.5"
}}

Association links

Each navigation link can now also specify an association link, which is the URL used to manipulate the association. For example, this is part of the Atom payload for ~/Products(1):

<link
  rel="http://schemas.microsoft.com/ado/2007/08/dataservices/related/Category"
  type="application/atom+xml;type=entry"
  title="Category"
  href="Products(1)/Category" />
<link
  rel="http://schemas.microsoft.com/ado/2007/08/dataservices/relatedlinks/Category"
  type="application/xml"
  title="Category"
  href="Products(1)/$links/Category" />

We are working on adding more V3 features to the Demo service and we’ll be updating the service as we have them available.
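A client can tell the two kinds of links in the Association links example apart by their `rel` prefix: `.../related/` for the navigation link and `.../relatedlinks/` for the association link. This is a minimal sketch with Python's standard `xml.etree.ElementTree`; wrapping the two links in a bare Atom `<entry>` is an assumption made to get well-formed input (a real entry carries many more elements), and `links_by_property` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

ATOM = "{http://www.w3.org/2005/Atom}"
RELATED = "http://schemas.microsoft.com/ado/2007/08/dataservices/related/"
RELATED_LINKS = "http://schemas.microsoft.com/ado/2007/08/dataservices/relatedlinks/"

# Assumed minimal wrapper around the two links from the example payload.
ENTRY = """<entry xmlns="http://www.w3.org/2005/Atom">
  <link rel="http://schemas.microsoft.com/ado/2007/08/dataservices/related/Category"
        type="application/atom+xml;type=entry" title="Category" href="Products(1)/Category" />
  <link rel="http://schemas.microsoft.com/ado/2007/08/dataservices/relatedlinks/Category"
        type="application/xml" title="Category" href="Products(1)/$links/Category" />
</entry>"""


def links_by_property(entry_xml: str) -> dict:
    """Map each navigation property to its navigation and association hrefs."""
    result = {}
    for link in ET.fromstring(entry_xml).iter(f"{ATOM}link"):
        rel = link.get("rel", "")
        if rel.startswith(RELATED_LINKS):
            kind, name = "association", rel[len(RELATED_LINKS):]
        elif rel.startswith(RELATED):
            kind, name = "navigation", rel[len(RELATED):]
        else:
            continue  # e.g. self/edit links in a full entry
        result.setdefault(name, {})[kind] = link.get("href")
    return result


print(links_by_property(ENTRY))
```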

The Datanami Staff (@datanami) posted Chevron Drills Down Big Data Assets on 5/10/2012:

The world isn’t running out of oil and natural gas, it’s running out of easy supplies of it. For energy companies, including Chevron, this means drilling ever-deeper and in more remote locations for deposits of precious fossil fuels—all tasks that require advanced big data tools and technologies.

According to Paul Siegele, president of Energy Technology at Chevron, the data involved in oil and gas exploration is staggering. However, without the technologies his team provides, which include distributed sensors, high-speed communications, massive data-mining operations and remote drilling operations data management, the tasks of finding and exploiting new natural resources would be nearly impossible.

According to Technology Review’s Jessica Leber, programs that invoke the “digital oilfield” approach to the new era of oil and gas will play a huge role in the future of energy companies. Leber says, “The ones that are most successful at operating remotely and using data wisely will claim big rewards. Chevron cites industrywide estimates suggesting 8 percent higher production rates and 6 percent higher overall recovery from a ‘fully optimized’ digital oil field.”

As Leber notes, despite advancing renewable technologies, the International Energy Agency projects that global oil demand will still be growing by 2035 as more people use cars. And, as extraction becomes more difficult, almost $20 trillion in investments will be needed to satisfy these future needs.

Chevron is currently deploying up to eight global "mission control" centers as part of its digital program. Each is focused on a particular goal, such as using real-time data to make collaborative decisions in drilling operations, or managing wells and imaging reservoirs for higher production yields. The purpose is to improve performance at more than 40 of its biggest energy developments. The company estimates that these centers will help it save $1 billion a year.

Chevron's internal IT traffic alone exceeds 1.5 terabytes a day. Leber says that to keep up with this and new digital oil field projects, software tools are being developed at big oil contracting companies, such as Halliburton and Schlumberger, and big IT providers including Microsoft and IBM. (Emphasis added.)



Windows Azure Service Bus, Access Control, Identity and Workflow

No significant articles today.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Joe Brinkman (@jbrinkman) posted Getting Started with Windows Azure and DotNetNuke on 5/12/2012:

Every week it seems more and more people are asking me how they can run DotNetNuke on Windows Azure. Last year David Rodriguez released the DotNetNuke Azure Accelerator which aims to simplify the process of installing DotNetNuke on Windows Azure. It was a great alternative to manually deploying DotNetNuke but it required the user to know how to use the Windows Azure Management Portal for setting up their Azure account. The original version of the accelerator also included the DotNetNuke installation package within the download. This meant that the accelerator was closely tied to the DotNetNuke version and had to be updated with every DotNetNuke release.

A few weeks ago, David uploaded a 6.2 beta version of the accelerator. This release resolves a number of outstanding issues with the accelerator and really takes it to a whole new level. With the 6.2 release users won’t have to mess with the Windows Azure Management Portal. All of the tasks for configuring and deploying the Azure resources are completely automated. The Accelerator presents a wizard which walks you through all of the steps for provisioning your compute, storage and database accounts on Azure. It even makes it possible to configure your account for RDP access and Windows Azure Connect access. These updates will make installing DotNetNuke on Windows Azure much simpler.

In addition to the simplified installation, the 6.2 accelerator also removes the tight coupling between the accelerator and DotNetNuke releases. The accelerator now queries DotNetNuke.com to find out the current version of DotNetNuke and has Windows Azure automatically download and install this version from CodePlex. As a result, the accelerator has shrunk from a 66 MB download to a 4 MB package. This also speeds up the deployment process, since you don’t have to re-upload DotNetNuke to Windows Azure over your own network but can instead take advantage of Microsoft’s bandwidth between the CodePlex and Windows Azure data centers. While not available in the Beta release, we are working to have all the infrastructure pieces in place so that you will even be able to choose which version of DotNetNuke to install from within the accelerator. As new versions of DotNetNuke are released, the accelerator will automatically update its list, giving you access to the latest versions of DotNetNuke without any changes to the accelerator.

I am really excited about all of these changes and have been going back and forth with David on even more ways to enhance the accelerator to make it even easier and more powerful. I have created a short video which walks through the installation process and as you can see it really is very easy to get started with Windows Azure and DotNetNuke.

David Gristwood posted Real World Windows Azure: LinkFresh Cloud QA – An application that helps businesses deliver safe, fresh, high quality food on 5/11/2012:

One of the best things about my work with Windows Azure is when a system actually goes live, so our team is going to be doing more blog posts about people building on the Windows Azure platform, so you can see what sorts of applications they built, why they chose the cloud and Windows Azure, and what they learnt in the process.

The blogs will all be on our partner blog under the “Real World Windows Azure” tag, and I will post some of the projects on my blog, such as this first one:

LINKFresh Cloud QA by Anglia Business Solutions.

Fresh food producers are subject to stringent food safety and quality standards, so managing and monitoring this requires a flexible and powerful mobile solution. LINKFresh Cloud Quality Assurance, part of the Anglia Business Solutions' LINKFresh Cloud platform, delivers a mobile device application which offers a complete Business Management solution for the food supply chain industry. The application runs on Windows Azure and captures produce information, including photo capture, signatures and geo location and stores it safely and securely in the cloud for processing.

Richard Jones, Anglia’s Director of Technology, shared their reasoning for building this application on the cloud and the benefits that it brings to their customers: “Reaching a large volume of mobile users stretching across the globe always presents challenges for support, infrastructure and rollout. Using cloud technology simply made sense for us; we didn’t want the expense of building a backend server environment for each territory we wish to deploy in. The cloud means that we can switch on and off IT infrastructure as and when we require. This makes a great customer experience as the end user is always presented with a fast and useable App that works reliably all the time. Being able to provide an unparalleled level of service to mobile users makes our solution scale and keeps customers enthusiastic and loyal to our product offering.”

He also commented on how the company made some of its key technical decisions: “Windows Azure takes the pain out of deploying large scale systems. The ability to use familiar development tools such as Visual Studio to manage and deploy a cloud solution is truly remarkable. Microsoft's ability to ramp up the number of instances and servers we deploy on makes reaching a large geography of users very straight-forward; we are deploying to a large mobile workforce and Azure fits perfectly with our requirement.”

David Gristwood, Windows Azure architect with Microsoft, noted: “This is a really innovative use of Windows Azure. The peak loads that their system has to deal with during busy periods are a perfect match for Windows Azure’s elasticity, which can scale up to cope with high throughput and traffic, then scale back down in quiet periods to reduce the running costs, which makes for a really compelling story. Wrapping up their programming logic in Windows Azure web roles reduces the amount of programming effort required to manage this elasticity. Mobile scenarios are very common with Windows Azure, because they enable a wide range of devices to connect to Windows Azure through REST-based web service calls, to upload data and get the very latest information whilst on the move, or in this case, working in the middle of a field.”

Fast Facts

- The LINKFresh Cloud Quality Assurance runs on Windows Azure and uses SQL Azure and Windows Azure storage. The mobile client runs on Windows Phone, Android and iOS

- To cope with peak time demands, the system has been designed to handle hundreds of GB per day from thousands of workers in fields, farms and warehouses

- The single code base used for this solution has simplified the development and deployment, and Windows Azure gives Anglia resilience, high availability and international reach without the up-front capital expenditure.

- Anglia Business Solutions was founded in 1981 and is a gold certified partner based in Cambridge, UK, and was Dynamics partner of the year 2010

For more information

- Read more about LINKFresh Quality Assurance

- Get help exploring Windows Azure from Microsoft UK

- Learn more about Windows Azure by watching the Six Weeks of Windows Azure sessions

- Check out the Windows Azure Marketplace

- Connect with us on our ISV Dev LinkedIn group

Wely Lau (@wely_live) continued his series with An Introduction to Windows Azure (Part 2) on 5/10/2012:

This is the second article of a two-part introduction to Windows Azure. In Part 1, I discussed the Windows Azure data centers and examined the core services that Windows Azure offers. In this article, I will explore additional services available as part of Windows Azure which enable customers to build richer, more powerful applications.

Additional Services

1. Building Block Services

‘Building block services’ were previously branded ‘Windows Azure AppFabric’. The main objective of building block services is to enable developers to build connected applications. The three services under this category are:

(i) Caching Service

Generally, accessing RAM is much faster than accessing disk, including storage and databases. For that reason, Microsoft have developed an in-memory and distributed caching service to deliver low latency, high-performance access, namely Windows Server AppFabric Caching. However, there are some activities, such as installing and managing, and some hardware requirements like investing in clustered servers, which have to be handled by the end-user.

Windows Azure Caching Service is a self-managed, yet distributed, in-memory caching service built on top of the Windows Server AppFabric Caching Service. Developers will no longer have to install and manage the Caching Service / Clusters. All they need to do is to create a namespace, specify the region, and define the Cache Size. Everything will get provisioned automatically in just a few minutes.

Creating new Windows Azure Caching Service

Additionally, Azure Caching Service comes along with a .NET client library and session providers for ASP.NET, which allow the developer to quickly use them in the application.
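Applications typically use a cache like this in the cache-aside pattern: read from the cache first, and on a miss load from storage or the database and populate the cache with an expiry. The following is a minimal in-process Python sketch of that pattern only; it is not the Windows Azure Caching Service or its .NET client API (which is distributed and has its own classes), and `CacheAside` and `load_from_db` are hypothetical names.

```python
import time
from typing import Any, Callable, Dict, Tuple


class CacheAside:
    """In-process cache-aside helper with a per-entry time-to-live (TTL)."""

    def __init__(self, load: Callable[[str], Any], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self._load = load            # slow path, e.g. a storage or database read
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]          # cache hit: served from memory
        value = self._load(key)      # miss or expired: go to the backing store
        self._entries[key] = (now + self._ttl, value)
        return value


# Usage with a fake clock so the expiry is easy to see.
fake_now = [0.0]
loads = []


def load_from_db(key: str) -> str:
    loads.append(key)
    return key.upper()


cache = CacheAside(load_from_db, ttl_seconds=30, clock=lambda: fake_now[0])
cache.get("product:1")
cache.get("product:1")   # second call is a hit
fake_now[0] = 31.0
cache.get("product:1")   # entry expired, reloaded
print(loads)             # ['product:1', 'product:1']
```

The TTL bounds how stale a cached value can get; a shared, distributed cache adds the benefit that every role instance sees the same entries, which a per-process dictionary cannot provide.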

(ii) Access Control Service

Third Party Authentication

With the trend for federated identity / authentication becoming increasingly popular, many applications have relied on authentication from third party identity providers (IdPs) such as Live ID, Yahoo ID, Google ID, and Facebook.

One of the challenges developers face when dealing with different IdPs is that they use different standard protocols (OAuth, WS-Trust, WS-Federation) and web tokens (SAML 1.1, SAML 2.0, SWT).

Multiple ID Authentication

Access Control Service (ACS) allows application users to authenticate using multiple IdPs. Instead of dealing with different IdPs individually, developers just need to deal with ACS and let it take care of the rest.

AppFabric Access Control Services

(iii) Service Bus

Windows Azure’s Service Bus allows secure messaging and connectivity across multiple network hierarchies. It enables hybrid model scenarios, such as connecting cloud applications with on-premise systems. The Service Bus allows applications running on Windows Azure to call back to on-premise applications located behind firewalls and NATs.

Service Bus Diagram

Migrating on-premise Windows Communication Foundation (WCF) services to the Service Bus is relatively straightforward, because the Service Bus uses a similar, WCF-based programming model.

2. Data Services

Data Services consists of SQL Azure Reporting and SQL Azure Data Sync, both of which are currently available as Community Technology Previews (CTPs).

(i) SQL Azure Reporting

SQL Azure Reporting aims to provide developers with a service similar to SQL Server Reporting Services (SSRS), with the advantages of running in the cloud. Developers can still use familiar tools such as SQL Server Business Intelligence Development Studio, and migrating on-premise reports is relatively easy because SQL Azure Reporting is built on the SSRS architecture.

(ii) SQL Azure Data Sync

SQL Azure Data Sync is a cloud-based data synchronization service built on top of the Microsoft Sync Framework. It synchronizes data between cloud databases, or between a cloud database and an on-premise database.

SQL Azure Data Sync

(from Windows Azure Bootcamp)

3. Networking

Three networking services are available today:

(i) Windows Azure CDN

The Content Delivery Network (CDN) caches static content such as video, images, JavaScript, and CSS at the node closest to each user, which reduces latency and improves the user experience. There are currently 24 nodes available globally.

Windows Azure CDN Locations

(ii) Windows Azure Traffic Manager

Traffic Manager is designed to enable high performance and high availability of web applications, by providing load-balancing across multiple hosted services in the six available data centers. In its current CTP guise, developers can select one of the following rules:

- Performance – detects the location of the user traffic and routes it to the best online hosted service based on network performance.

- Failover – based on an ordered list of hosted services, traffic is routed to the online service highest on the list.

- Round Robin – equally distributes traffic to all hosted services.
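The three routing rules can be illustrated with a small simulation. This is a simplified sketch, not how Traffic Manager is implemented (the real service works through DNS and its own health monitoring); the `HostedService` type and its `latency_ms` field are hypothetical stand-ins for the network-performance measurements the Performance rule relies on.

```python
import itertools
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HostedService:
    name: str
    online: bool
    latency_ms: float  # hypothetical latency measured from the user's location


def route_performance(services: List[HostedService]) -> Optional[HostedService]:
    """Performance: the online service with the lowest measured latency."""
    online = [s for s in services if s.online]
    return min(online, key=lambda s: s.latency_ms, default=None)


def route_failover(ordered: List[HostedService]) -> Optional[HostedService]:
    """Failover: the first online service in a priority-ordered list."""
    return next((s for s in ordered if s.online), None)


class RoundRobin:
    """Round Robin: rotate across whichever services are currently online."""

    def __init__(self, services: List[HostedService]) -> None:
        self.services = services
        self._counter = itertools.count()

    def route(self) -> Optional[HostedService]:
        online = [s for s in self.services if s.online]
        if not online:
            return None
        return online[next(self._counter) % len(online)]
```

All three rules skip services that are offline; they differ only in how they pick among the remaining ones (lowest latency, highest priority, or simple rotation).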

(iii) Windows Azure Connect

Windows Azure Connect supports secure network connectivity between on-premise resources and the cloud by establishing a virtual network environment between them. With Windows Azure Connect, cloud applications appear to reside on the same network environment as on-premise applications.

Windows Azure Connect

(from the Windows Azure Platform Training Kit)

Windows Azure Connect enables scenarios such as:

- Using an on-premise SMTP Server from a cloud application.

- Migrating enterprise apps that depend on an on-premise SQL Server to Windows Azure.

- Domain-joining cloud applications running in Windows Azure to an on-premise Active Directory.

4. Windows Azure Marketplace

Windows Azure Marketplace is a centralized online market where developers are able to easily sell their applications or datasets.

(i) Marketplace for Data

Windows Azure Marketplace for Data is an information marketplace that allows ISVs to offer datasets, either free or paid, that can be consumed on any platform and are available to the global market. For example, the “Average House Prices, Borough” dataset provides annual and quarterly house prices based on Land Registry data in the UK; developers can subscribe to it and use it in their applications.

(ii) Marketplace for Applications

Windows Azure Marketplace for Applications enables developers to publish and sell their applications. Many, if not all, of these applications are SaaS applications built on Windows Azure. Applications submitted to the Marketplace must meet a set of criteria.

Conclusion

To conclude, we have examined the huge investment that Microsoft is making, and will continue to make, in Windows Azure, the core of its cloud strategy. Three fundamental services (Compute, Storage, and Database) satisfy the basic needs of developing cloud applications. Additionally, with the other Windows Azure services (Building Blocks Services, Data Services, Networking, and Marketplace), developers will find it increasingly easy to build rich and powerful applications. The foundations of this cloud offering are robust, and we should continue to look out for new features on this platform.

References

This article was written using the following resources as references:

- Windows Azure Platform Training Kit

- https://www.windowsazure.com/en-us/home/tour/overview/

- http://azurebootcamp.com/

This post was also published at A Cloud Place blog.

Himanshu Singh (@himanshuks) posted Real World Windows Azure: Interview with IDV Solutions Vice President Scott Caulk on 5/10/2012:

As part of the Real World Windows Azure series, I connected with Scott Caulk, vice president of Product Management at IDV Solutions, to learn more about how the company uses Windows Azure. Read IDV Solutions’ success story here. Read on to find out what he had to say.

Himanshu Kumar Singh: Tell me about IDV Solutions.

Scott Caulk: We provide large organizations with business intelligence, security, and risk visualization solutions. Our flagship product, Visual Fusion, is a business intelligence software solution that helps organizations unite content from virtually any data source and then deliver it to end users in a visual, interactive context for better business insights. Visual Fusion and our other products have helped us establish a strong presence among major organizations in government and private industry sectors, including the U.S. Department of Homeland Security, the U.S. Department of Transportation, Pfizer, Pacific Hydro, BP, and the Thomson Reuters Foundation.

HKS: What led you to develop Fetch! on Windows Azure?

SC: In 2010, one of our customers asked us for an application that would make it easy for their mobile employees to access the organization’s large data collection. The prototype we created worked really well, and the customer liked it. The application was first deployed entirely on the customer’s servers and was accessed by end users through mobile email; however, our development team decided that a more interactive experience was necessary. To create a more interactive version that maintained device compatibility, we built it as a rich web application.

We discovered that running the solution inside an enterprise infrastructure while also exposing it to the Internet created risks. We had the foundation of a good idea that could be marketed to other customers as well, but realized that IT departments would worry about data security and about opening ports in their firewalls so mobile devices could use the Internet to access internal data.

So we began looking for a cloud platform that could provide data access to mobile users while minimizing the exposure risk for corporate data. We chose Windows Azure as the cloud platform on which to develop the app. Called Fetch!, it’s a hybrid solution that uses cloud capabilities to link mobile users with on-premises enterprise data. As a platform-agnostic mobile app, Fetch! supports the broadest range of common mobile operating systems, including Windows Phone, Android, and iOS, as well as any device capable of sending and receiving email.

HKS: What capabilities does Fetch! deliver?

SC: Fetch! allows mobile corporate employees to access a wide range of information such as data grids and text, charts and graphs, documents and images, scorecards, and maps. It supports full access to systems such as IDV Solutions Visual Fusion; Microsoft SharePoint and related PerformancePoint services; Microsoft SQL Server databases; Oracle databases; Salesforce.com; and custom line-of-business systems and web services.

HKS: What factors led you to choose Windows Azure?

SC: We’re a member of the Microsoft Partner Network, and have expertise with Windows development tools such as the .NET Framework, the Microsoft Visual Studio development system, and ASP.NET. This [experience] gave Windows Azure an advantage in our evaluation process. The tight integration of Windows Azure with our existing development environment made our development efforts go more smoothly.

Windows Azure also provided a key feature that was invaluable during the development cycle: the Windows Azure Service Bus. The Service Bus provides a hosted, secure, and widely available infrastructure for secure messaging and communications relay capabilities. It offers connectivity options for service endpoints that in other cloud solutions would be difficult or impossible to reach. The Service Bus relay service also eliminates the need to set up a new connection for each communications instance, resulting in faster and more reliable connections for mobile users.

With Windows Azure, we could jump into the project using our .NET expertise, quickly ramp up, and then deploy a solid app, whether for a smartphone or tablet device. Time to deployment was short, and any modifications we make to Fetch! will be very fast. If we had gone with a non-Microsoft cloud platform, development would probably have taken weeks, if not months, longer.

HKS: How does Fetch! work?

SC: When accessing data through Fetch!, mobile employees use an email address and password to log on, and a web application provides the means for requesting data. After the user enters a command to query data, the command is processed in Windows Azure and then sent via the Windows Azure Service Bus to a service running within the corporation’s on-premises IT infrastructure. The on-premises service uses “connectors” that are part of the Fetch! solution to link to a variety of data sources. The relevant data is collected and then returned using a web service, which formats it and presents it to the user. The speed of the process depends on the particular IT infrastructure, but it typically takes just a few seconds.

Other platform components used in the solution include Windows Azure Storage, which provides scalable and easily accessible data storage services, and Windows Azure Compute, which lets us run application code in the cloud. Each Windows Azure Compute instance runs in a virtual machine that is isolated from other Windows Azure customers, and the platform handles activities such as network load balancing and failover for continuous availability. Additionally, Fetch! can connect to data hosted on SQL Azure.

HKS: What are some of the advantages of using Windows Azure for Fetch!?

SC: By using Windows Azure as an integral part of Fetch! we were able to use features that ease enterprise customers’ concerns about the security of data accessed from mobile devices. And with Windows Azure Service Bus, our customers can have the Fetch! service running inside their infrastructure without the need to poke holes in their firewall to get data in and out. This is especially important for customers in security fields, where the safety of data is critical. With Service Bus, our customers’ mobile users can connect to rich enterprise information without exposing the network to any additional security concerns.

HKS: What are some of the benefits of using Windows Azure for your business?

SC: When developing Fetch!, we weren’t sure if we would have just a few customers or many, including large customers that might add thousands of users at a time to the solution. Windows Azure gives us the scalability to very quickly add large volumes of users as the product is adopted across more and more of our customer base.

Furthermore, due to their large size, the enterprise customers in our target markets can cause sharp spikes in traffic, sometimes overnight when they add entire groups or departments. This means that Fetch! needs to run on a cloud platform that can deliver enormous scalability at a moment’s notice. That’s one of the big benefits of Windows Azure. We have customers with tens of thousands of users, and being able to click a button and go from two load-balanced web servers to a dozen or more in a matter of minutes is a very powerful feature for us, and for our customers.

We also benefit from end-to-end development tools that provide a seamless environment for innovations and upgrades. Windows Azure was key in helping our company build and deliver a mobile data-access app that meets the security and scalability needs of our large customers.

Leandro Boffi (@leandroboffi) described Building Elastic and Resilient Cloud Applications in a 5/2/2012 post (missed when published):

Over the last few months I’ve been collaborating as an advisor with the Microsoft Patterns and Practices team on a very interesting project: an integration pack for Windows Azure and Enterprise Library.

One of the outcomes of that work is a book called “Building Elastic and Resilient Cloud Applications”. The book provides background information on autoscaling and transient fault handling, which makes it useful even if you don’t plan to use the Application Blocks.

The P&P team sent me a copy of the book as a gift and mentioned my name in the list of advisors. I am very proud of, and thankful for, having participated in this project.

You can find the details on MSDN clicking here.


Visual Studio LightSwitch and Entity Framework 4.1+

No significant articles today.



Windows Azure Infrastructure and DevOps

My (@rogerjenn) Uptime Report for my Live OakLeaf Systems Azure Table Services Sample Project: April 2012 of 5/10/2012 begins:

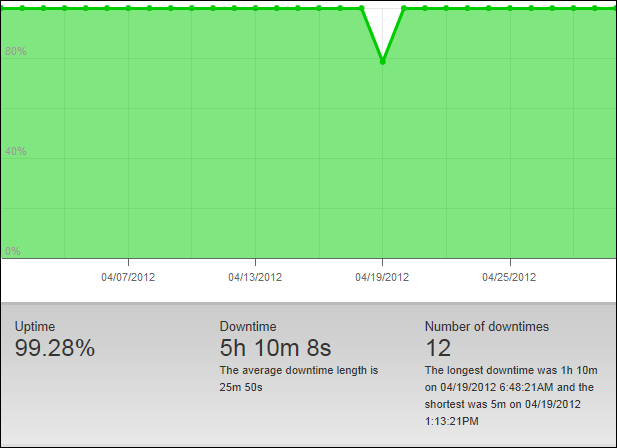

My live OakLeaf Systems Azure Table Services Sample Project demo runs two small Windows Azure Web role instances from Microsoft’s South Central US (San Antonio, TX) data center. I didn’t receive (or misplaced) the usual Pingdom Monthly Report for April, so here’s the detailed uptime report from Pingdom.com for April 2012:

This is the first case of monthly uptime below the 99.95% minimum Windows Azure Compute Service Level Agreement (SLA) guarantee since upgrading the sample app to two instances. My Flurry of Outages on 4/19/2012 for my Windows Azure Tables Demo in the South Central US Data Center post updated 4/30/2012 provides details about the outage on 4/19/2012 and its root cause analysis.
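For context, a 99.95% monthly SLA leaves very little room for downtime. Assuming a simple wall-clock calculation over April's 30 days (43,200 minutes), the allowance is 0.05% × 43,200 ≈ 21.6 minutes. The actual Windows Azure Compute SLA has its own measurement rules (it applies to Internet-facing roles deployed with two or more instances), so treat these helper functions as a back-of-the-envelope sketch; the function names are hypothetical.

```python
MINUTES_PER_DAY = 24 * 60


def allowed_downtime_minutes(sla_percent: float, days: int) -> float:
    """Downtime permitted over `days` days under a simple wall-clock SLA."""
    total_minutes = days * MINUTES_PER_DAY
    return total_minutes * (100.0 - sla_percent) / 100.0


def uptime_percent(downtime_minutes: float, days: int) -> float:
    """Observed uptime percentage for a period with the given downtime."""
    total_minutes = days * MINUTES_PER_DAY
    return 100.0 * (total_minutes - downtime_minutes) / total_minutes


def meets_sla(downtime_minutes: float, days: int, sla_percent: float = 99.95) -> bool:
    """True if the observed uptime is at or above the SLA percentage."""
    return uptime_percent(downtime_minutes, days) >= sla_percent
```

For example, `allowed_downtime_minutes(99.95, 30)` returns about 21.6, so roughly 20 minutes of downtime in April would still meet the SLA, while half an hour would not.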

And continues with detailed Pingdom response-time data for the month of April 2012.

Joel Forman offered Ramping Up on the Windows Azure Platform: 200 Level training documents in a 5/11/2012 post to the Slalom Consulting blog:

I was recently asked to put together some material for consultants with the goal of getting to a “200 Level” of knowledge on the Windows Azure Platform and its breadth of capabilities. I thought this would be an opportune time to revamp a previous “getting started” post of mine with some updated content. Below is a 10-hour self-paced training plan, designed to bring someone up to that 200 level…

Read: Understanding the Different Platform Components (~1 hour)

Take a few minutes to read a brief overview of some of the different features of the Windows Azure Platform. Think of these as building blocks. They can be used individually or together to solve problems and build applications.

Slalom Consultant Joel Forman specializes in cloud computing and the Windows Azure Platform.

Core Features:

In addition, take a look at these emerging features to see some of the additional possibilities with the platform:

Watch: Explore Sessions from the Learn Windows Azure Event (~3 hours)

Microsoft put on an all-day virtual training event late last year on Windows Azure via Channel9. Explore some of the sessions from notable Microsoft folks such as Scott Guthrie, Dave Campbell, and Mark Russinovich.

- Getting Started with Windows Azure

- Cloud Data & Storage

- Windows Azure Demos and Common Questions

- Developing Windows Azure Applications with Visual Studio

- Building Scalable Applications

Explore: Scenarios, Case Studies, and Platform Interoperability (~1 hour)

Explore some additional cloud topics that pique your interest…

Scenarios: Get a feel for what scenarios are being highlighted for cloud. Are you working in these areas today in your current or previous projects?

Case Studies: Get a feel for some of the different case studies that exist today. Browse the case study gallery and pick 2-3 to read.

Interoperability: The Developer Center is a great place to start to get a feel for the different languages and technologies that work with Windows Azure. Take a few minutes to browse around and check out the content for .NET, Java, Node.js, and more. Note all of the tutorials, how-to guides, and documentation of common tasks for the different languages. These can definitely come in handy down the line.

Develop: Download the Windows Azure Platform Training Kit and Start Developing (~5 hours)

The Windows Azure Platform Training Kit continues to be one of the best resources for hands-on training. It contains great hands-on labs that are perfect for building your first applications on Windows Azure. Choose from labs around compute, storage, SQL Azure, Access Control Service, and more.

Recommended Introductory Hands-On Labs:

- Introduction to Windows Azure

- Introduction to SQL Azure

- Introduction to Service Bus

- Introduction to the AppFabric Access Control Service 2.0

After spending some time in these areas, you should have a good base level of understanding of the entire platform and its capabilities.

The Windows Azure Operations Team reported the following [Windows Azure Compute] [North Central US] [Yellow] Windows Azure Outage in North Central US sub-region on 5/11/2012:

- May 11 2012 4:25AM We are experiencing an issue with all Windows Azure services in the North Central US sub-region. We are actively investigating this issue and working to resolve it as soon as possible. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

- May 11 2012 5:25AM We have traced down the root cause of this outage to a faulty networking device and we are working on mitigating the impact. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

- May 11 2012 6:25AM We have isolated the faulty networking device at 11:04 PM PST and have observed network traffic improvement in the North Central US sub-region. Full restoration of the network traffic is still being validated. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this caused our customers.

- May 11 2012 7:25AM We are still observing some network traffic disruption in the North Central US sub-region, down to less than 3% packet loss across a limited set of clusters in this sub-region, and continue to validate the mitigation in place. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

- May 11 2012 8:25AM We are still observing intermittent and limited network traffic disruption in the North Central US sub-region, but the potential customer impact is now very low. We have engaged in the repair steps on the networking device causing the traffic disruption. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

- May 11 2012 9:25AM The repair steps are still underway on the networking device that caused the traffic disruption. Network traffic has been steady at 100% with no packet loss since the previous notification. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

- May 11 2012 9:30AM The repair steps have been executed and successfully validated. Network traffic has been fully restored in the North Central US sub-region. We apologize for any inconvenience this caused our customers.

Slow news day.

Himanshu Singh (@himanshuks) posted Windows Azure Community News Roundup (Edition #18) to the Windows Azure Team blog on 5/11/2012:

Welcome to the latest edition of our weekly roundup of the latest community-driven news, content and conversations about cloud computing and Windows Azure. Here are the highlights from this week.

Articles and Blog Posts

- Installing 3rd-Party Software on Windows Azure – What Are the Options? by @wely-live (posted May 9)

- Sending Text Messages from Your Windows Azure Service by @MichaelCollier (posted May 9)

- Interview Series: Four Questions with Dean Robertson by @rseroter (posted May 9)

- Add Authentication Options to Your Windows Azure Website by Zoiner Tejada (posted May 8)

- BlobShare: Corporate Filesharing with Security and Control by @buckwoody (posted May 8)

- (Video) Privacy Central Protecting Your Data Using Ruby on Windows Azure by @brucedkyle (posted May 8)

Upcoming Events and User Group Meetings

North America

- May 14: Central Ohio Computer User Group – Columbus, OH

- May 17: Windows Azure Hands-on and Node.js – Reston, VA

- May 17: Windows Azure Kick Start – Waukesha, WI

- May 30: Cloud Camp – Reston, VA

- May 30: Windows Azure Meetup - Vancouver, Canada

- June 11-14: Microsoft TechEd North America 2012 – Orlando, FL

Europe

- May 15-16: CloudConf 2012 – Moscow, Russia

- May 16: Big Data using Hadoop on Windows Azure – Antwerp, Belgium

- May 23-24: DevCon 12 – Moscow, Russia

- June 26-29: Microsoft TechEd Europe – Amsterdam, Netherlands

Other

- May 21-25: Windows Azure UK Bootcamps – Online

- May 22: Architectural Patterns for the Cloud - Online

- May 22-24: Integration Master Class with Richard Seroter – Australia (3 cities)

Recent Windows Azure Forums Discussion Threads

- How do I publish web application to azure using "Publish -> "Web Deploy"? (getting error: Web Deployment task failed) – 591 views, 8 replies

- Access Page in VM Image from IE, Not Page from VM Role – 590 views, 6 replies

- Initial Data Sync Failing – 724 views, 9 replies

Send us articles that you’d like us to highlight, or content of your own that you’d like to share. And let us know about any local events, groups or activities that you think we should tell the rest of the Windows Azure community about. You can use the comments section below, or talk to us on Twitter @WindowsAzure.

Valery Mizonov updated his Cloud Application Framework & Extensions (CloudFx) v1.2.0.16 Nuget package on 5/10/2012:

The Cloud Application Framework & Extensions (CloudFx) is a Swiss Army knife for Windows Azure developers which offers a set of production quality components and building blocks intended to jump-start the implementation of feature-rich, reliable and extensible Windows Azure-based solutions and services.

To install Cloud Application Framework & Extensions (CloudFx), run the following command in the Package Manager Console:

PM> Install-Package Microsoft.Experience.CloudFx

Valery also added documentation for CloudFx Samples:

Introduction

The following sample code accompanies the Cloud Application Framework & Extensions (CloudFx) library and demonstrates some basic "How-To" style samples as well as advanced samples showing several CloudFx features altogether.

The list of samples is growing, so please check back later if you are interested in any specific scenarios not currently covered. Please also consider using the "Questions & Answers" section to submit your questions, feedback or general comments.

What is CloudFx?

The Cloud Application Framework & Extensions (CloudFx) library is comprised of production-quality components and building blocks created with a single goal in mind: to enable Windows Azure developers to build high-end cloud-based solutions without having to understand as many of the relatively complex mechanical tasks each platform service API involves.

CloudFx implements a collection of patterns derived from real customer solutions to provide professional features at reduced complexity, which results in faster development of robust and performant Windows Azure applications.

For example, when working with Windows Azure Queues, CloudFx provides a pair of simple Put and Get (send and receive) actions that understand the application’s specific business objects, reliably and efficiently invoke the underlying storage client API, handle errors, process messages that don’t fit on a queue, and so on.
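Handling messages that don't fit on a queue is commonly done by spilling oversized payloads to blob storage and enqueueing a small pointer instead. The sketch below illustrates that general pattern only; it is not CloudFx's implementation or API. `OverflowQueue`, its in-memory `deque` and `dict` stand-ins for a storage queue and blob container, and the 64 KB default limit are all assumptions (check the current Windows Azure Queue documentation for the real message-size limit).

```python
import json
import uuid
from collections import deque
from typing import Any, Deque, Dict, Optional

# Assumed per-message size limit; verify against current Windows Azure Queue docs.
MAX_QUEUE_MESSAGE_BYTES = 64 * 1024


class OverflowQueue:
    """Hypothetical typed Put/Get over a size-limited queue.

    Objects whose serialized message would be too large are written to a
    blob-like store, and only a small pointer message is enqueued.
    """

    def __init__(self, max_bytes: int = MAX_QUEUE_MESSAGE_BYTES) -> None:
        self.max_bytes = max_bytes
        self.queue: Deque[bytes] = deque()   # stand-in for a storage queue
        self.blobs: Dict[str, bytes] = {}    # stand-in for a blob container

    def put(self, obj: Any) -> None:
        """Serialize an object and enqueue it inline or via an overflow blob."""
        payload = json.dumps(obj)
        message = json.dumps({"inline": payload}).encode("utf-8")
        if len(message) > self.max_bytes:
            # Too big for the queue: park the payload in "blob storage" instead.
            blob_name = str(uuid.uuid4())
            self.blobs[blob_name] = payload.encode("utf-8")
            message = json.dumps({"blob": blob_name}).encode("utf-8")
        self.queue.append(message)

    def get(self) -> Optional[Any]:
        """Dequeue and deserialize the next object; None if the queue is empty."""
        if not self.queue:
            return None
        envelope = json.loads(self.queue.popleft())
        if "blob" in envelope:
            # Fetch and clean up the overflow blob referenced by the message.
            payload = self.blobs.pop(envelope["blob"]).decode("utf-8")
        else:
            payload = envelope["inline"]
        return json.loads(payload)
```

This sketch deletes the overflow blob as soon as the message is read; a production version would more likely delete it only after the message has been processed successfully, so a failed worker does not lose data.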

For those exploring Windows Azure Service Bus, CloudFx offers straightforward Publish and Subscribe operations that make the most efficient use of the asynchronous messaging APIs and implement all the relevant best practices, so that developers don’t have to focus on low-level plumbing and can concentrate on the business domain.
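Publish/subscribe semantics, where every subscription receives its own copy of each matching message, can be sketched in memory as follows. This is a hypothetical illustration of the messaging pattern, not the Service Bus or CloudFx API; the `Topic` class and its `subscribe`, `publish`, and `receive` methods are invented names, and subscription filters are modeled as plain Python predicates.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict, Optional

Message = Dict[str, Any]


class Topic:
    """In-memory stand-in for a publish/subscribe topic."""

    def __init__(self) -> None:
        self._filters: Dict[str, Callable[[Message], bool]] = {}
        self._queues: Dict[str, Deque[Message]] = {}

    def subscribe(self, name: str,
                  message_filter: Callable[[Message], bool] = lambda m: True) -> None:
        """Create a named subscription that only keeps messages passing the filter."""
        self._filters[name] = message_filter
        self._queues[name] = deque()

    def publish(self, message: Message) -> None:
        """Deliver a shallow copy of the message to every matching subscription."""
        for name, matches in self._filters.items():
            if matches(message):
                self._queues[name].append(dict(message))

    def receive(self, name: str) -> Optional[Message]:
        """Pop the next message for a subscription; None if none are waiting."""
        queue = self._queues[name]
        return queue.popleft() if queue else None
```

Because each subscription has its own queue, a slow consumer on one subscription does not prevent others from receiving their copies.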

An example of how the CloudFx framework was used in the implementation of a real-world, complex cloud-based solution can be found here.

David Linthicum (@DavidLinthicum) asserted “A recent survey from Cisco finally tells us what we suspected all along” in a deck for his This just in: Cloud computing is hard and takes a long time post of 5/10/2012 for InfoWorld’s Cloud Computing blog:

Cisco Systems has surveyed more than 1,300 IT professionals to determine the top priorities and challenges they face when migrating applications and information to the cloud. Guess what? It's harder, and it takes longer than many thought.

Duh.

Of course, these surveys have a tendency to be self-serving, so it's no surprise that this one concludes that your networks need upgrading before you can move to the cloud. After all, the survey was sponsored by a networking company.

But putting aside the obvious self-promotion, the broader conclusions confirm what many of us have suspected for some time and what anyone considering a cloud migration must understand: It's not easy. Cloud computing is a challenge that takes longer than most organizations have budgeted.

For example, only 5 percent of IT decision makers surveyed have been able to migrate at least half of their total applications to the cloud. I'm not sure it's even that much in the world at large, given what I've seen in my travels. However, the survey states that by 2013, that number is expected to significantly rise, as more than one in five will migrate at least half of their total applications to the cloud.

The survey also captures the difficulty through humor, with conclusions such as, "More than one-quarter said they could train for a marathon or grow a mullet in a shorter period of time than it would take to migrate their company's applications to the cloud" or "nearly one-quarter of IT decision makers said that, over the next six months, they are more likely to see a UFO, a unicorn, or a ghost before they see their company's cloud migration starting and finishing."

The core reason for the difficulty is, of course, the fact that moving to the cloud is a platform-migration problem -- in this case from traditional systems to private and public clouds. IT pros already know migrations are always problematic, especially if you're considering business-critical systems. But why businesses haven't equated cloud migration to every other migration is a mystery, perhaps the result of "silver bullet" sales claims by vendors.

What makes the migration to the cloud even more difficult is the lack of information about the process. Many new cloud users are lost in a sea of hype-driven desire to move to cloud computing, without many proven best practices and metrics.

I suspect we're seeing the start of the pain, but I believe things will improve as we learn how to work through the problems of migrating to the cloud. I've already started growing my mullet.


Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Gavin Clarke (@gavin_clarke) quoted the “HP cloud chief: The hard work and zen of Windows open source” in an introduction to his Lessons for HP box jockeys on the Amazon warpath article of 5/12/2012 for The Register:

You might think the hard work for Hewlett-Packard is done, after it came from behind to build its own Amazon-style cloud so quickly. But the difficult part – taking on Amazon and winning with open source – lies ahead.

The world's biggest PC maker lifted the lid yesterday on the biggest change to its business in recent history.

The HP Cloud Compute free beta ended on Thursday with a rash of 40 partners announcing that they are all now available on or in support of HP's cloud – Rightscale, ActiveState, CloudBees, Dome9, EnterpriseDB and others. The period of construction and half-priced sign-ups is finished. Now it's down to business.

Forget former chief executive Carly Fiorina's folly of buying Compaq in 2002 for $25bn: that just added more computers to the existing line-up. And Mark Hurd's $13.9bn EDS buy in 2008? Services based on servers and, yup, PCs.

HP Cloud Compute is a leap from terra firma into a blue-sky world. HP is hoping to sell something frighteningly intangible for a bunch of box jockeys who feel rather more comfortable with product in, product out, margin and mark-up. Now they are selling compute cycles, storage capacity and bandwidth: stuff you can't see, touch or mark up.

A tweet from April's OpenStack design conference in San Francisco, California, hinted at the scale of what HP is selling: 2,000 nodes, "multi-petabytes" of Swift storage capacity and "pricing to match AWS". HP won't comment on these numbers.

Stacking up

HP is doing all this using a piece of software it can't claim to own and that, in itself, doesn't confer any kind of competitive advantage. HP Cloud Compute uses the open-source cloud architecture called OpenStack – in which it is a part player along with nearly 200 other companies also fighting for a place in a gold rush era in the history of computing. Dell is putting out OpenStack reference implementations, NTT is building furiously with OpenStack and AT&T has joined up.

Worse – yes, there is worse to come for Palo Alto's box monkeys – the PC maker, along with all those other OpenStackers, is going up against Amazon's EC2, the critical mass monster of cloud.

Amazon's cloud earned the bookseller an estimated $1.08bn in the first nine months of last year – up 70.4 per cent compared to 2010 – while the amount of data Amazon holds hit 762 billion objects, more than doubling last year.

It will get bigger as Amazon becomes an infrastructure for other clouds such as Heroku and makes it easier for enterprise customers to upload their data and embrace .NET to pull in Microsoft shops.

HP's road to cloud has been laid down pretty quickly, if a little late. It only joined the OpenStack project relatively late – in July last year. That was after 90 others, including Rackspace, a co-founder which had been there 12 months before. Amazon's EC2 floated in 2006 while Microsoft opened Azure in early 2010.

HP has also delivered in spite – yes, in spite – of a partnership with its long-time PC and server operating system buddy Microsoft. The computer maker is more than just one of Redmond's biggest partners on PCs, servers and services; it was supposed to be on the inside of the cloud tent too – as an early adopter of Microsoft's cloud, Windows Azure.

HP, Dell and Fujitsu were named by Microsoft in 2010 as planning appliances running the Windows Azure code that would slot in to their own data centres as well as those of their customers. The three firms – along with eBay – were also supposed to launch services running Windows Azure appliances in their data centres. (Emphasis added.)

Yet two years later, HP has started a cloud service, and it doesn't run Windows Azure, it uses OpenStack instead. Bear in mind that HP only joined OpenStack one year after the supposed Microsoft Windows Azure deal.

HP is now delivering OpenStack compute, storage and networking running on HP servers, storage and network equipment. Also, the operating system running HP's cloud is Linux - versions of Ubuntu, Fedora and CentOS can be spun up as virtual instances.

What happened? Why did the cloud business side of HP go for open source when the PC side dumped the open-source client-side experiment of previous CEOs Hurd and Apotheker – which began with the purchase of Palm for $1.2bn – and went for Windows?

Slow Windows

Zorawar Biri Singh, the HP executive in charge of HP's cloud services, who joined from IBM in early 2010 to float the service, told The Reg this week that open source and OpenStack, licensed under the Apache licence, allowed HP to get up and running faster.

He said: "In the year I've been here, my emphasis has been to get our core infrastructure up ... For HP it was about getting the infrastructure to run the stuff on and have a compelling platform."

Singh reckons HP remains committed to Windows Azure, but the language sounds clipped, as it seems Windows Azure will be a guest on OpenStack – a platform as a service (PaaS) – instead of providing the underlying infrastructure as a service (IaaS). Also, its Windows Server will likely become just another guest alongside the Linuxes.

Redmond not out

"We are still working very closely with Microsoft," Singh assures The Reg. "Windows Azure is very much on our roadmap. We will talk about it shortly – we had our hands full. Microsoft is a great partner and we will leverage that."

Singh reckons Windows Azure was PaaS from the get-go, but that's not how Microsoft spun it in 2010, when the idea was for Windows Azure to run on those appliances. "PaaS might have been premature."

Let's assume that as OpenStack is under an open-source licence, HP was automatically granted the freedom to customise and tune the OpenStack code – which it couldn't have done if it had worked with the proprietary and Microsoft-controlled Windows Azure. Fine, but the real problem is there's a dearth of skilled engineers out there who know how to actually program OpenStack code.

Those who do know much about it are busy setting up their own start-ups – companies like Piston Cloud. Piston Cloud's chief technology officer Josh McKenty was chief architect for NASA on the Nebula component that comprised one half of 2010's original OpenStack announcement with Rackspace.

HP has been hiring in an attempt to close the gap: in September 2011 Singh brought on board MySQL architecture director and Drizzle lead architect Brian Aker as an HP fellow, with special responsibility for platform-as-a-service and development-as-a-service engineering.

MySQL was sold by Oracle to enterprises and OEMs but runs at web scale inside Facebook and Twitter. Aker, in turn, has recruited engineers in MySQL, Drizzle, Scala, Postgres, Ruby on Rails, Python and OpenStack. HP went on to announce MySQL-as-a-service on its cloud.

A lot of the hard work has taken place in the basic OpenStack Nova compute and Swift storage code, Singh says, especially tuning Nova to manage compute frameworks and to transfer information. "Not too many people have the experience or will of conviction to dive in - there's a ton of hard work. It's really difficult, but folks are committed to it," he said.

Singh says HP is still learning how to work with OpenStack but is bringing to bear its experience in large data centres. "We understand how to serve large web 2.0 customers doing scale. We've taken that hardware IP software stack and converted it to things in open source like OpenStack," he said.

No billion-dollar data centres

Singh declined to comment on the numbers of customers, nodes or petabytes mentioned in the tweet but reckoned HP is building on a "global scale". "This has lots to do with how do you deal with scale and how do you scale to hundreds, thousands and hundreds of thousands of nodes across geographies and giving it the robustness and service quality that customers expect over time. We are in the early stages of figuring that out," he said.

HP won't build out its own data centres to achieve that, unlike Microsoft or Amazon, he said. Rather the company will use its existing facilities and exploit relationships with service providers who will install HP servers and reference architectures running OpenStack.

Singh is particularly excited by the HP EcoPOD, a take on the old Sun Microsystems trailer-computing idea that you drop off and remove by truck, according to demand.

"You won't see HP invest billions of dollars in data centres next to a dam because those are built for specific workloads, like search clusters. You will see us populate existing data centres [and] provide eco pods to rapidly stand up to meet local demand," Singh said.


Travis Wright (@radtravis) reported Open Beta for Private Cloud MOF Guide - Now Available for Download! on 5/10/2012:

Managing and Operating a Microsoft Private Cloud—How to Apply the Microsoft Operations Framework (MOF)

The Microsoft Operations Framework team is working on a new guide: Managing and Operating a Microsoft Private Cloud—How to Apply the Microsoft Operations Framework.

Get the beta here.

This guide leads you through the process of managing and operating a Microsoft private cloud using the service management processes of the Microsoft Operations Framework (MOF). The guide applies MOF’s IT service management principles to the private cloud's conceptual architecture and technology stack. It describes how to maximize the potential of MOF’s people, process, and technical capabilities to manage and operate a Microsoft private cloud.

Follow this guidance for a private cloud that is better aligned to meet your business needs. Employ MOF’s service management functions (SMFs) to help align IT and business goals, which can enable you to perform private cloud activities effectively and cost-efficiently.

This guide focuses on the SMFs in the Operate Phase and the Manage Layer of MOF to give IT pros and managers what they need to know about managing and operating a private cloud. Management reviews—internal controls that ensure goals are met to achieve business value—are also included.

Tell us what you think! Download and review the beta guide, then send your feedback to mofpm@microsoft.com by June 11, 2012. We would especially appreciate feedback in the following areas:

· Usefulness – Is the technical depth of this guide sufficient for the topics covered? Will this guide be useful to you on a day-to-day basis? What portions of the guide are the most useful to your organization?

· Usability – Is the structure or flow of this guide effective? Is the information presented in a clear and logical manner? Can you easily find key content?

· Impact – Do you anticipate that this guide will save you time and accelerate deployment of Microsoft products in your organization? Has this guide had a positive influence on your opinion of the Microsoft technologies it addresses?

Benefits for participation:

· You get an early look at the guide.

· You will be listed on the acknowledgments page for providing usable feedback.

We look forward to hearing from you! Your input helps to make each guide as helpful and useful as possible. Thanks in advance for taking the time to review Managing and Operating a Microsoft Private Cloud—How to Apply the Microsoft Operations Framework (MOF).

Subscribe to the MOF beta program and we will notify you when new beta guides become available for your review and feedback. These are open beta downloads. If you are not already a member of the MOF Beta Program and would like to join, follow these steps:

1. Go here to join the MOF beta program:

https://connect.microsoft.com/site14/InvitationUse.aspx?ProgramID=1880&InvitationID=MOFN-M6H9-PV3X

If the link does not work for you, copy and paste it into the web browser address bar.

2. Sign in using a valid Windows Live® ID.

3. Enter your registration information.

4. Continue to the MOF program beta page, scroll down to Microsoft Operations Framework, and click the link to join the MOF beta program.

Please send your comments and feedback to mofpm@microsoft.com.

The Microsoft Server and Cloud Platform Team announced System Center Cloud Services Process Pack RTM Now Available on 5/10/2012:

The Microsoft Solution Accelerators team is pleased to announce that the System Center Cloud Services Process Pack is now available for download.

The System Center Cloud Services Process Pack is Microsoft’s Infrastructure as a Service solution built on the System Center platform. With the System Center Cloud Services Process Pack, enterprises can realize the benefits of Infrastructure as a Service while simultaneously leveraging their existing investments in the Service Manager, Orchestrator, Virtual Machine Manager, and Operations Manager platforms.

Read more about this new process pack on the System Center Service Manager Team Blog.

<Return to section navigation list>

Cloud Security and Governance

Maureen O’Gara asserted “Intel expects more than 3B connected users & 15B connected devices to drive more than 1,500 exabytes of cloud traffic by 2015” in a deck for her Intel & McAfee on Mission to End Cloud Nail-Biting post of 5/11/2012 to the Cloud Security Journal blog:

Security concerns are the biggest thing holding back cloud adoption, but Intel says it'll take it and its pricey $7.68 billion McAfee acquisition at least another five years to bring cloud security up to the best-in-class traditional enterprise security available now.

Not very reassuring, is it?

Intel also says, "A private cloud added to your IT infrastructure is like adding another door to your house - it's another entry point for bad guys to get in."

Oh, great, just what we need.

Despite those sobering thoughts Intel is still expecting more than three billion connected users - that's a B, dear, as in billion - and 15 billion connected devices to be driving more than 1,500 exabytes of cloud traffic by 2015, and IDC figures that about 20% of all digital data - roughly, say, 1,400 exabytes - a mammoth load - will be stored or processed in the cloud.

That's the pickle - and since the daring and adventurous can never be kept at home - Intel and McAfee the other day sketched out what they could do now using existing technology to prevent companies from running off the cliff and their sensitive data from falling into unsavory hands.

They also said a happy word - but not much more than that - about what still needs to be done.

The object of the game is to entice enterprises still hesitant to take the plunge.

The twosome has got a two-pronged approach pairing software with hardware widgetry while they embark on a "multi-year mission" to reassure IT departments that:

- Data, applications and infrastructure will be secure.

- Corporate compliance requirements will be automatically met.

- Corporate security policies will be automatically applied throughout the workload lifecycle.

- And easy-to-implement solutions will provide 24/7 reporting.

Heady promises under the circumstances.

Intel commissioned a study that found that 61% of IT professionals are concerned about the loss of visibility in private clouds; 55% are concerned about lack of data protection in public clouds; and 57% won't put data that requires specific compliance into cloud data centers.

It figures it has to overcome these immediate reservations because it has to sell the cloud to make money.

Okay, so Intel and McAfee say they've got a fashionably holistic approach to cloud security to "establish" confidence in using private, public and hybrid clouds.

That basically means:

- Securing cloud data centers. Cloud infrastructure is typically virtualized and shared across multiple lines of business or even multiple organizations, which reduces control and visibility into infrastructure security, a situation that's amplified in off-premise public cloud infrastructure managed by third parties. Using McAfee's existing ePolicy Orchestrator (ePO), which sets security policies across physical, virtual and cloud environments, with Intel's hardware-based Trusted Execution Technology (TXT), "trustworthy" Xeon E5 servers can be identified. Intel and McAfee didn't say exactly how, but their developments are expected to strengthen data protection, security enforcement and auditability across cloud infrastructures.

- Securing network connections. Multiple passwords are a hazard, and e-mail and web traffic flowing between remote offices and mobile devices used by employees can be a significant source of data leakage. McAfee's Cloud Security Platform can improve security. It's supposed to evolve to protect cloud infrastructure via better integrity assessments, provide asset control and protection, and enable broader auditing and network security capabilities.