Creating An Incremental SQL Azure Data Source for OakLeaf’s U.S. Air Carrier Flight Delays Dataset

• Updated 5/11/2012 with a correction regarding free databases for Windows Azure Marketplace DataMarket datasets.

- Background

- Creating the SQL Azure On_Time_Performance Table

- Creating an On-Premises SQL Server Clone Table

- Importing *.csv Data with the BULK IMPORT Command

- Uploading Data to the SQL Azure Table with SQLAzureMW

- Calculating the Size of the SQL Azure Database and Checking for Upload Errors

- Conclusion

Background

![]() My initial U.S. Air Carrier Flight Delays, Monthly dataset for the Windows Azure Marketplace DataMarket was intended to incorporate individual tables for each month of the years 1987 through 2012 (and later.) I planned to compare performance of datasets and Windows Azure blob storage a persistent data sources for Apache Hive tables created with the new Apache Hadoop on Windows Azure feature.

My initial U.S. Air Carrier Flight Delays, Monthly dataset for the Windows Azure Marketplace DataMarket was intended to incorporate individual tables for each month of the years 1987 through 2012 (and later.) I planned to compare performance of datasets and Windows Azure blob storage a persistent data sources for Apache Hive tables created with the new Apache Hadoop on Windows Azure feature.

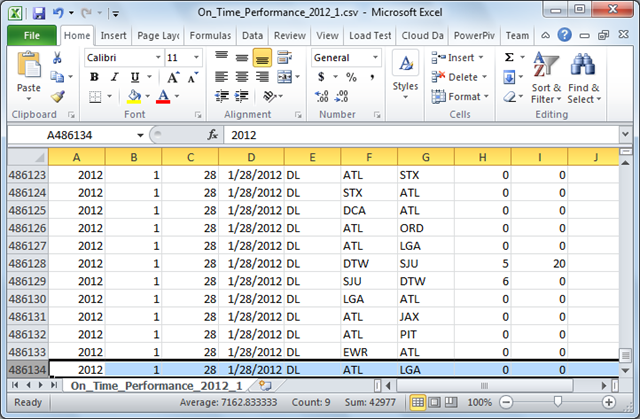

I used Microsoft Codename “Data Transfer” to create the first two of these SQL Azure tables, On_Time_Performance_2012_1 and On_Time_Performance_2012_2, from corresponding Excel On_Time_Performance_2012_1.csv and On_Time_Performance_2012_2.csv files in early May 2012. For more information about these files and the original U.S. Air Carrier Flight Delays, Monthly dataset see my Two Months of U.S. Air Carrier Flight Delay Data Available on the Windows Azure Marketplace DataMarket post of 5/4/2012.

Subsequently, I discovered that the Windows Azure Marketplace Publishing Portal had problems uploading the large (~500,000 rows, ~15 MB) On_Time_Performance_YYYY_MM.csv files. I was advised by Microsoft’s Group Program Manager for the DataMarket that the *.csv upload feature would be disabled to “prevent confusion.” For more information about this issue, see my Microsoft Codename “Data Transfer” and “Data Hub” Previews Don’t Appear Ready for BigData post updated 5/5/2012.

A further complication was the suspicion that editing the current data source to include each additional table would require a review by a DataMarket proctor. An early edit of one character in a description field had caused my dataset to be offline for a couple of days.

A workaround for the preceding two problems is to create an on-premises clone of the SQL Azure table with a RowID identity column and recreate the SQL Azure table without the identity property on the RowID column. Doing this permits using a BULK INSERT instruction to import new rows from On_Time_Peformance_YYYY_MM.csv files to the local SQL Server 2012 table and then use George Huey’s SQL Azure Migration Wizard (SQLMW) v3.8.7 or later to append new data to a single On_Time_Performance SQL Azure table. Managing primary key identity values of an on-premises SQL Server table is safer and easier than with SQL Azure.

• The downside of this solution is that maintaining access to the 1-GB SQL Azure Web database will require paying at least US$9.99 per month plus outbound bandwidth charges after your free trial expires. Microsoft provides up to four free SQL Azure 1-GB databases when you specify a new database on the Codename “Data Hub” Publishing Portal’s Connect page.

My new US Air Carrier Flight Delays dataset on the Windows Azure Marketplace DataMarket and Microsoft Codename “Data Hub” have been updated to the new data source. The “Monthly” suffix has been removed.

This post describes the process and T-SQL instructions for creating and managing the on-premises SQL Server [Express] 2012 databases, as well as incrementally uploading new data to the SQL Azure database.

Creating a SQL Azure On_Time_Performance Table

If you don’t have an SQL Azure subscription, sign up for the Windows Azure Three-Month Free Trial, which includes a 1-GB SQL Azure Web database, and use the Windows Azure Management Portal to create an SQL Azure server instance and the SQL Azure Management Portal to add a database (named On_Time_Performance for this example.)

Tip: Convert your subscription to Pay-Per-Use before the free trial expires, if you want to maintain access to the instance’s database(s).

Following is the T-SQL script generated by SQL Server Management Studio [Express] 2012 for the single On_Time_Performance table with the Include Indexes option set to true and NOT NULL specified for all fields:

/****** Object: Table [dbo].[On_Time_Performance] ******/ SET ANSI_NULLS ON GO SET QUOTED_IDENTIFIER ON GO CREATE TABLE [dbo].[On_Time_Performance]( [RowId] [bigint] NOT NULL, [Year] [int] NOT NULL, [Month] [int] NOT NULL, [DayofMonth] [int] NOT NULL, [FlightDate] [datetime] NOT NULL, [Carrier] [nvarchar](256) NOT NULL, [Origin] [nvarchar](256) NOT NULL, [Dest] [nvarchar](256) NOT NULL, [DepDelayMinutes] [int] NOT NULL, [ArrDelayMinutes] [int] NOT NULL, PRIMARY KEY CLUSTERED ( [RowId] ASC )WITH (STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF) ) GO /****** Object: Index [IX_ArrDelayMinutes] ******/ CREATE NONCLUSTERED INDEX [IX_ArrDelayMinutes] ON [dbo].[On_Time_Performance] ( [ArrDelayMinutes] ASC )WITH (STATISTICS_NORECOMPUTE = OFF, DROP_EXISTING = OFF, ONLINE = OFF) GO SET ANSI_PADDING ON GO /****** Object: Index [IX_Carrier] ******/ CREATE NONCLUSTERED INDEX [IX_Carrier] ON [dbo].[On_Time_Performance] ( [Carrier] ASC )WITH (STATISTICS_NORECOMPUTE = OFF, DROP_EXISTING = OFF, ONLINE = OFF) GO /****** Object: Index [IX_DepDelayMinutes] ******/ CREATE NONCLUSTERED INDEX [IX_DepDelayMinutes] ON [dbo].[On_Time_Performance] ( [DepDelayMinutes] ASC )WITH (STATISTICS_NORECOMPUTE = OFF, DROP_EXISTING = OFF, ONLINE = OFF) GO SET ANSI_PADDING ON GO /****** Object: Index [IX_Dest] ******/ CREATE NONCLUSTERED INDEX [IX_Dest] ON [dbo].[On_Time_Performance] ( [Dest] ASC )WITH (STATISTICS_NORECOMPUTE = OFF, DROP_EXISTING = OFF, ONLINE = OFF) GO /****** Object: Index [IX_FlightDate] ******/ CREATE NONCLUSTERED INDEX [IX_FlightDate] ON [dbo].[On_Time_Performance] ( [FlightDate] ASC )WITH (STATISTICS_NORECOMPUTE = OFF, DROP_EXISTING = OFF, ONLINE = OFF) GO /****** Object: Index [IX_Month] ******/ CREATE NONCLUSTERED INDEX [IX_Month] ON [dbo].[On_Time_Performance] ( [Month] ASC )WITH (STATISTICS_NORECOMPUTE = OFF, DROP_EXISTING = OFF, ONLINE = OFF) GO /****** Object: Index [IX_Origin] ******/ CREATE NONCLUSTERED INDEX [IX_Origin] ON [dbo].[On_Time_Performance] ( [Origin] ASC )WITH (STATISTICS_NORECOMPUTE = OFF, DROP_EXISTING = OFF, ONLINE = OFF) GO /****** Object: Index [IX_Year] ******/ CREATE NONCLUSTERED INDEX [IX_Year] ON [dbo].[On_Time_Performance] ( [Year] ASC )WITH (STATISTICS_NORECOMPUTE = OFF, DROP_EXISTING = OFF, ONLINE = OFF) GO

Note: SQL Azure requires all tables to have a clustered primary key; the RowKey primary key column is the same (except for the lack of an identity property) as that for the schema created if you specify a New Table when appending rows from *.csv files with Codename “Data Transfer” or “Data Hub”, as well as the DataMarket’s current import from *.csv file feature. Indexes are required on each field of the dataset that you specify as queryable. Indexed Year and Month fields simplifies URL querying of OData formatted datasets by those values.

Note: Lack of a primary key identity column makes this table incompatible with future use of the Marketplace Publishing Portal’s upload from *.csv feature, if its issues are corrected and it remains or is later made available for use. The bcp upload command includes the -E argument which overrides the destination table’s self-generated identity value with that from the source table. I plan to test this scenario when time is available and will update this post with my findings.

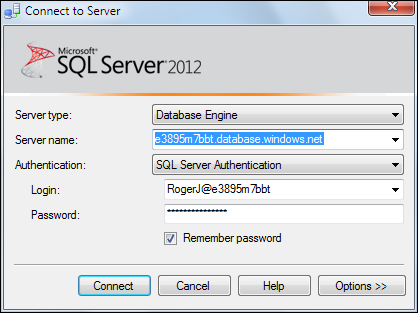

Open SQL Azure Management Studio [Express] 2012, log in to the SQL Azure server (e3895m7bbt.database.windows.net for this example), provide your administrative UserID (with @ and the server ID appended) and Password:

Connect to open the connection, click New Query to add an empty query editor window, copy and paste the preceding T-SQL DDL instruction to the window, and click Execute to create the new table and its indexes:

Creating an On-Premises SQL Server Clone Table

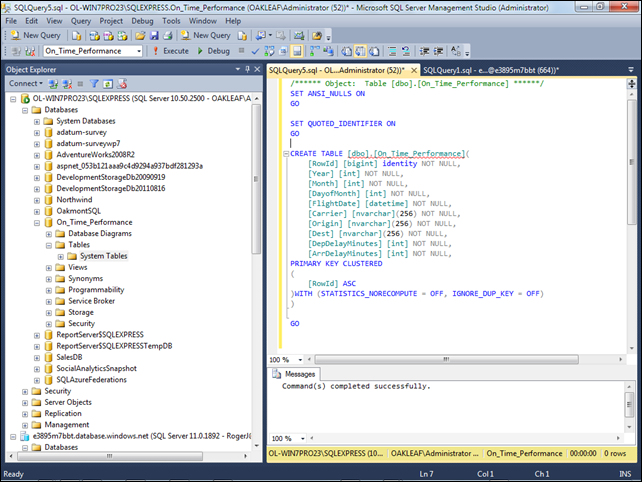

The local clone table needs a RowID primary key with the identity property but doesn’t require indexes. Connect to your local instance of SQL Server [Express] 2012, add a new database (On_Time_Performance for this example), open a new query window and paste the following code to it:

/****** Object: Table [dbo].[On_Time_Performance] ******/ SET ANSI_NULLS ON GO SET QUOTED_IDENTIFIER ON GO CREATE TABLE [dbo].[On_Time_Performance]( [RowId] [bigint] identity NOT NULL, [Year] [int] NOT NULL, [Month] [int] NOT NULL, [DayofMonth] [int] NOT NULL, [FlightDate] [datetime] NOT NULL, [Carrier] [nvarchar](256) NOT NULL, [Origin] [nvarchar](256) NOT NULL, [Dest] [nvarchar](256) NOT NULL, [DepDelayMinutes] [int] NOT NULL, [ArrDelayMinutes] [int] NOT NULL, PRIMARY KEY CLUSTERED ( [RowId] ASC )WITH (STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF) ) GO Execute the query and expand the Tables node to verify it’s schema:

Importing *.csv Data with the BULK IMPORT Command

SQL Server’s bulk copy process (bcp) utility is the most common method of migrating data from *.csv files and well as between SQL Server tables on WANs. However, bcp’s command-line syntax is somewhat arcane and today’s SQL Server DBAs and developers generally prefer T-SQL DDL and DML statements executed from GUIs.

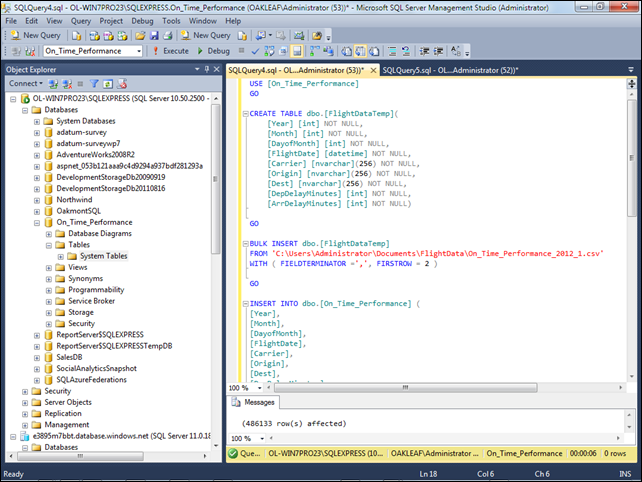

Following is a T-SQL DML statement that uses a BULK IMPORT instruction to import data from local *.csv files to a temporary FlightDataTemp table and then insert that data into the On_Time_Performance table described in the preceding section:

USE [On_Time_Performance] GO CREATE TABLE dbo.[FlightDataTemp]( [Year] [int] NOT NULL, [Month] [int] NOT NULL, [DayofMonth] [int] NOT NULL, [FlightDate] [datetime] NOT NULL, [Carrier] [nvarchar](256) NOT NULL, [Origin] [nvarchar](256) NOT NULL, [Dest] [nvarchar](256) NOT NULL, [DepDelayMinutes] [int] NOT NULL, [ArrDelayMinutes] [int] NOT NULL) GO BULK INSERT dbo.[FlightDataTemp] FROM 'C:\Users\Administrator\Documents\FlightData\On_Time_Performance_2012_1.csv' WITH ( FIELDTERMINATOR =',', FIRSTROW = 2 ) GO INSERT INTO dbo.[On_Time_Performance] ( [Year], [Month], [DayofMonth], [FlightDate], [Carrier], [Origin], [Dest], [DepDelayMinutes], [ArrDelayMinutes]) SELECT [Year], [Month], [DayofMonth], [FlightDate], [Carrier], [Origin], [Dest], [DepDelayMinutes], [ArrDelayMinutes] FROM dbo.[FlightDataTemp] GO DROP TABLE [FlightDataTemp] GO

Note: The FIRSTROW = 2 argument skips the first row, which contains column names.

Using an intermediate temporary table eliminates the need for a bcp format file to prevent mapping the RowID identity column to a field in the *.csv file. It also enables inserting rows from several *.csv files and uploading the collective rows in a single SQLAzureMW operation.

Select the local SQL Server instance in Object Explorer, create a new query editor window, copy and pastd the preceding T-SQL statements to it, edit the file path and name to suit the location and name of your *.csv file, and click Execute to run the query:

Note: Adding about 500,000 rows locally took about 10 seconds on my test machine.

Verify the rows added from Excel’s row count; the rows affected count should be one less than the number of worksheet rows:

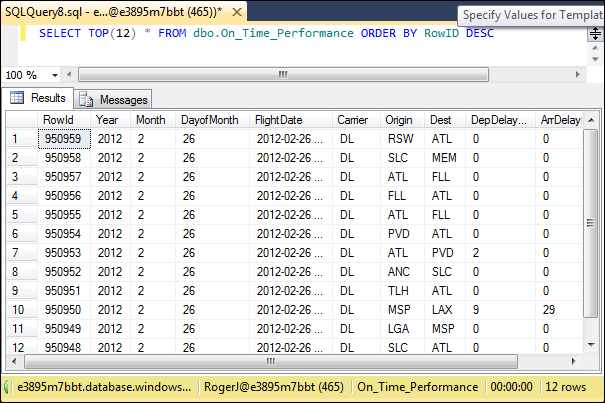

Execute a SELECT TOP(12) * FROM dbo.On_Time_Performance ORDER BY RowId DESC query and compare the resultset with the the last 12 rows of the Excel worksheet:

Add the rows from one or more additional *.csv files. Adding rows from On_Time_Performance_2012_2.csv brings the total row count to slightly less than one million rows.

Uploading Data to the SQL Azure Table with SQLAzureMW

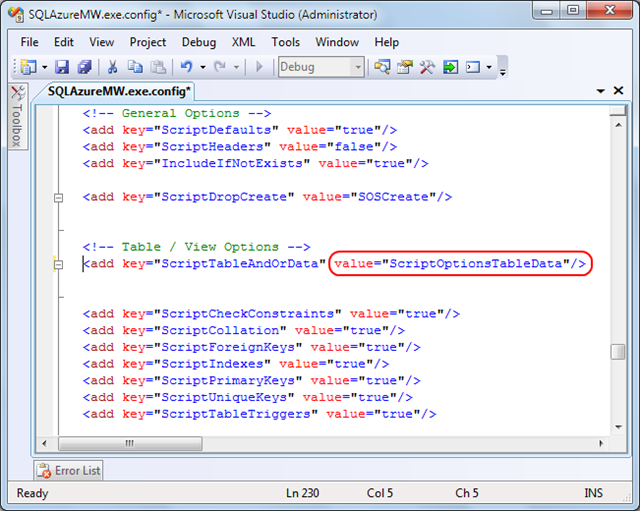

Download, install the latest SQL Azure Migration Wizard version (v3.8.7 of 4/24/2012 when this post was written), open SQLAzureMW.exe.config in Notepad or Visual Studio, search for ScriptTableAndOrData, and change the value ScriptOptionsTableSchemaData to ScriptOptionsTableData:

Making the preceding change reduces the probability of unintentionally deleting the database during an upload.

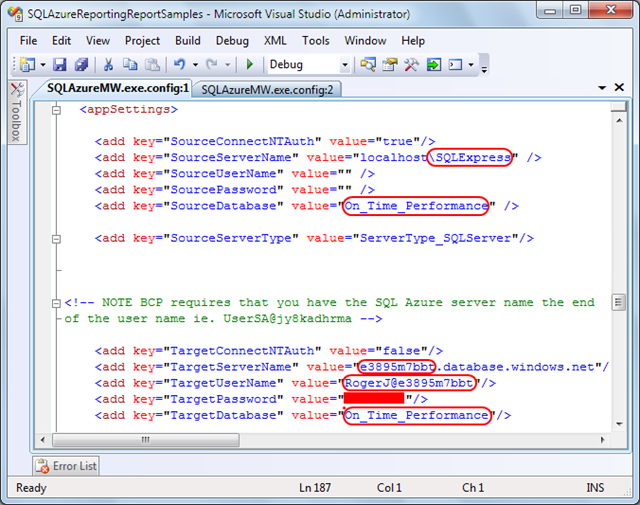

Optionally, specify the default source database and table, and destination database, table and credentials at the start of the appSettings group:

Tip: Don’t specify the TargetPassword if others have access to your computer. It is stored in plain text.

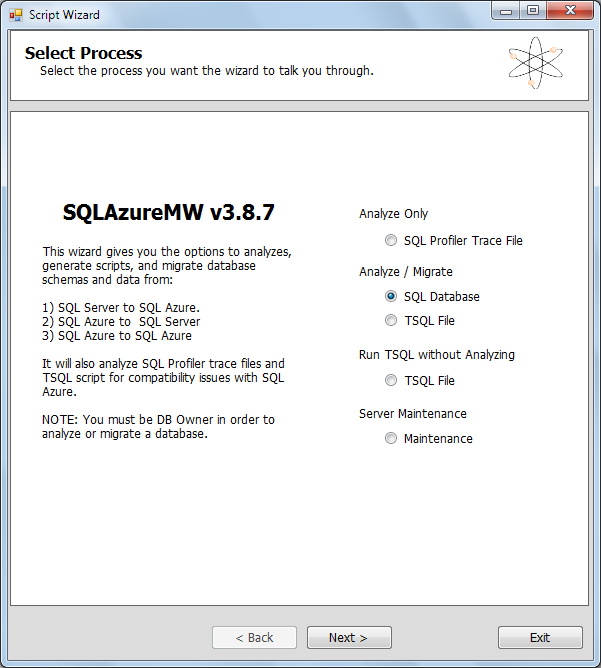

Save your changes, run SQLAzureMW.exe from the C:\Program Files (x86)\SQLAzureMW folder and select the SQL Database option:

Note: For a more detailed SQLAzureMW tutorial, see my Using the SQL Azure Migration Wizard v3.3.3 with the AdventureWorksLT2008R2 Sample Database post of 7/18/2010. Very little has changed since that version that affects SQLAzureMW’s operating methodology.

Click Next to open the Connect To Server dialog, select the on-premises instance in the Server Name list, accept the default Master DB option:

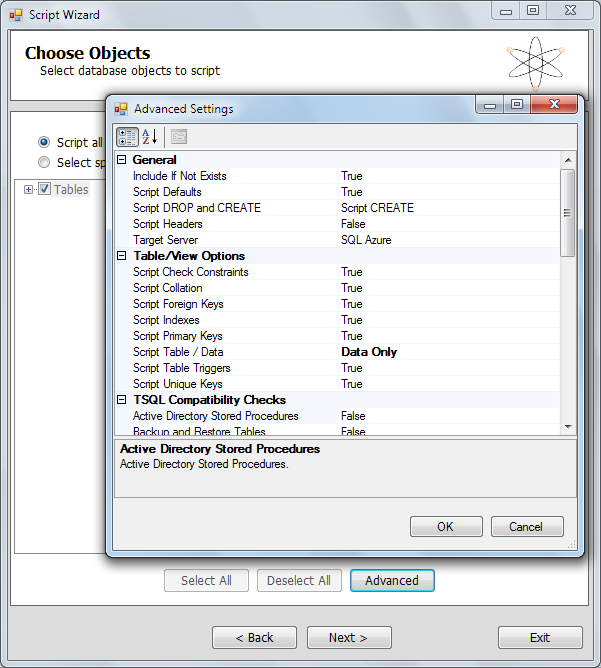

Click Connect, select the On_Time_Performance database in the list and click Next to open the Choose Objects page. Accept the default Script All Database Objects option and click the Advanced button to confirm the Data Only choice you specified in the Config file:

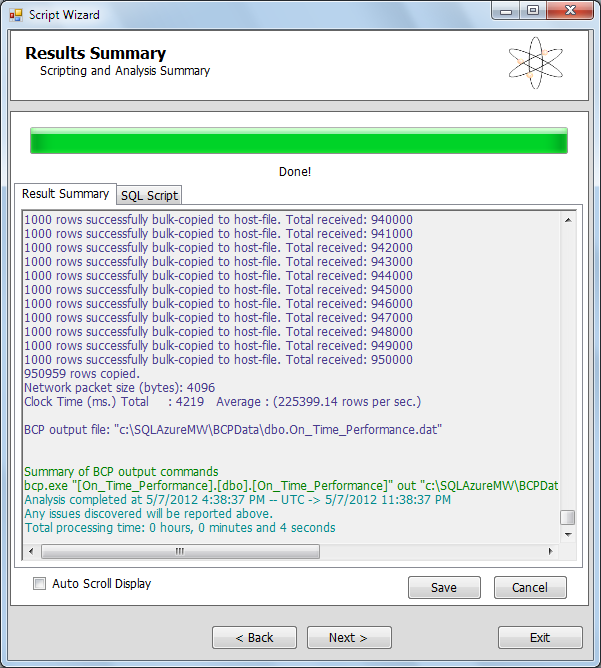

Click OK and Next to view the Script Wizard Summary, and click Next and Yes when asked if you’re Ready to Generate SQL Script to copy data from the table to a local *.csv file:

Note: Writing the 950,959 rows of the table took about 4.2 seconds.

The full bcp.exe command is:

bcp.exe "[On_Time_Performance].[dbo].[On_Time_Performance]" out "c:\SQLAzureMW\BCPData\dbo.On_Time_Performance.dat" -E -n -T -S OL-WIN7PRO23\SQLEXPRESS

For more information about the bcp commands and arguments, see MSDN Library’s bcp Utility topic.

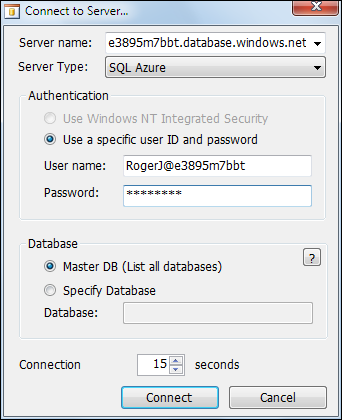

Click Next to open the Connect to Server dialog for the SQL Azure database, type the server name, your User Name with an @servername suffix and Password, accept the default Master DB option:

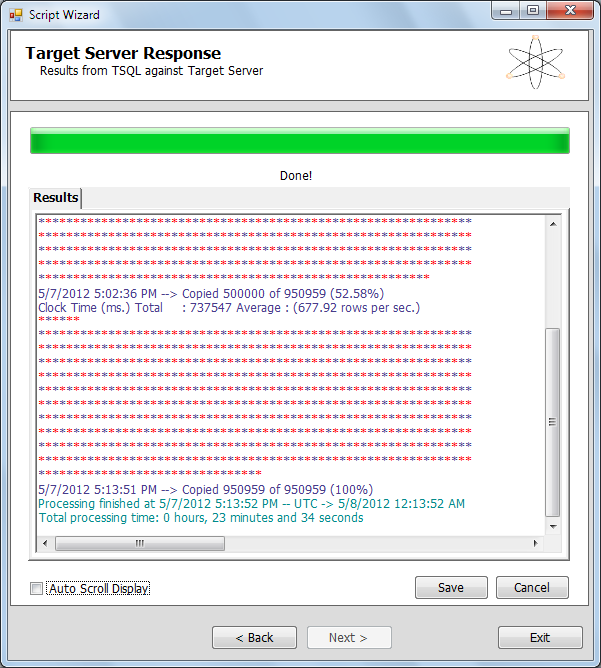

Click Connect to open the Set Up Target Server Connection page, select On_Time_Performance, click Next, and click Yes when asked if you want to Execute Script Against Destination Server to start the data upload process. Click the On_Time Performance tab to display upload progress:

The full bcp command for uploading the data to the SQL Azure database in 10,000-row batches is:

bcp.exe "On_Time_Performance.dbo.On_Time_Performance" in "c:\SQLAzureMW\BCPData\dbo.On_Time_Performance.dat" -E -n -b 10000 -a 16384 -q -S e3895m7bbt.database.windows.net -U "RogerJ@e3895m7bbt" -P xxxxxx

Here’s the final upload report:

Click Save to save a copy of the text in the preceding capture as a *.rtf file.

Here’s the last 12 rows of the uploaded data:

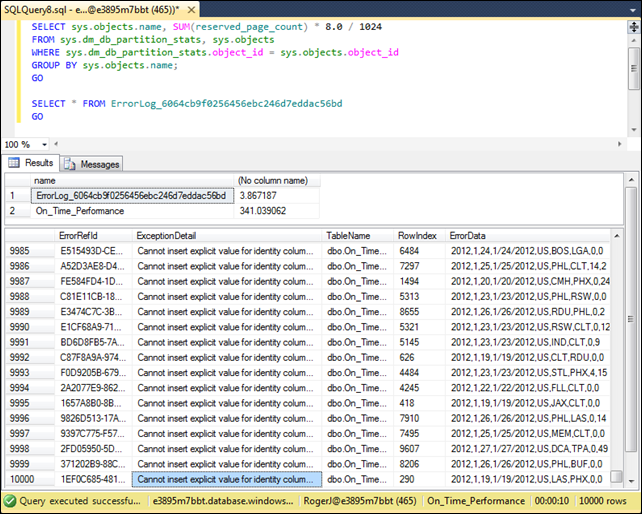

Calculating the Size of the SQL Azure Database and Checking for Upload Errors

The following T-SQL query reports the total size of the database in MB:

SELECT SUM(reserved_page_count)*8.0/1024 FROM sys.dm_db_partition_stats; GO

The size reported is 344.9 MB, which indicates that a 1 GB database will hold only six months of data without increasing its size. 345 MB / 0.950959 million rows = 363 MB / million rows.

The ALTER DATABASE syntax for changing database size is:

ALTER DATABASE database_name MODIFY (<edition_options> [, ..n]) <edition_options> ::= { (MAXSIZE = {1 | 5 | 10 | 20 | 30 … 150} GB) | (EDITION = {'web' | 'business'}) } [;]

Your only choice for Web database is upsizing to 5 GB, which costs US$25.98 per month, according to the basic pricing calculator on the Windows Azure Publishing page:

If you need a size larger than 5 GB, you must upgrade to the business version, which starts at 10 GB, increases in 10 GB increments to 50 GB, and increases to 150 GB in 50 GB increments.

After adding 1,476,325 more rows for a total of 2,427,284, the total database size was 863.8 MB = 356 MB / million rows, which is slightly less than 365 for the first million rows.

This query reports the size of each object in the database

SELECT sys.objects.name, SUM(reserved_page_count) * 8.0 / 1024 FROM sys.dm_db_partition_stats, sys.objects WHERE sys.dm_db_partition_stats.object_id = sys.objects.object_id GROUP BY sys.objects.name; GO

An Error_Log_GUID table in the master database contains a list of errors, if any, that occur during the upload process. The following 10,000 errors occurred during a previous attempt to upload 100,000 rows of data with the Windows Azure Marketplace Publishing Portal. It is one of the incidents that led to this workaround:

Note: The preceding T-SQL SELECT query samples are from the MSDN Library’s Monitoring SQL Azure Using Dynamic Management Views topic.

Conclusion

The workaround described in this post appears to be a reliable replacement for problematic Windows Azure Marketplace DataMarket uploads of large files with the *.csv source file feature, as well as with Microsoft Codename “Data Transfer” and “Data Hub.”

The drawback, of course, is that you must pay US$9.99, $25.98 or more per month, depending on your database size and usage of your data from the DataMarket.

0 comments:

Post a Comment