Windows Azure and Cloud Computing Posts for 2/16/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure Access Control, Service Bus, and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

Denny Lee (@dennylee) described Hadoop JavaScript – Microsoft’s VB shift for Big Data in a 2/17/2012 post:

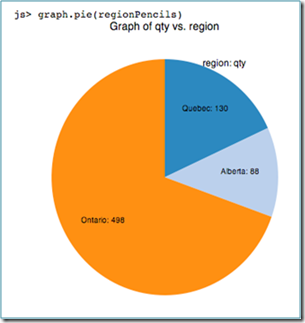

One of the cool things about the Hadoop on Azure CTP is its Interactive JavaScript Console – it allows users to query and visualize data on top of HDFS using a JavaScript framework. For example, the pie chart shown here was generated within a browser by the Interactive JavaScript Console using the graph.pie function.

Why is this important and cool at the same time? As amazing projects like Node.js show, many people now see JavaScript as a first-class programming and application language in its own right (see The rise of Node.js: JavaScript graduates to the server).

In the realm of Big Data, Hadoop on Azure is showcasing the ability to use JavaScript to create MapReduce jobs as well as to interact with Pig and Hive from a browser. This opens up a new path for the many JavaScript developers to jump aboard the world of Big Data. Hence my opinion:

Hadoop JavaScript – Microsoft’s VB shift for Big Data

Just as Microsoft brought VB developers into the Enterprise through COM and later .NET (so VB forms creators could become Enterprise application developers back in the 90s), Hadoop JavaScript is a way to help bring JavaScript developers into the world of Hadoop using the powerful skill set they already have.

And yes, the JavaScript layer is something Microsoft intends to give back to the Apache community. Check out the JIRA issue HADOOP-8079: Proposal for enhancements to Hadoop for Windows Server and Windows Azure development and runtime environments.

To learn more about Hadoop + JavaScript, check out the links below as well as the Introduction to the Hadoop on Azure Interactive JavaScript Console video.

- Fluent Queries on the Interactive JavaScript Console

- Interactive JavaScript console on MDH

- Data Visualization on the Interactive JavaScript Console

- HDFS Operations on the Interactive JavaScript Console

- Interactive JavaScript Console Session Management

Also, if you’re going to the 2012 Strata Conference in Santa Clara, check out Asad Khan’s session: Hadoop + JavaScript: what we learned.

Liam Cavanagh (@liamca) continued his “What I learned” series with What I Learned Building a Startup on Microsoft Cloud Services: Part 5 – Migrating to Windows Azure Queues on 2/16/2012:

I am the founder of a startup called Cotega and also a Microsoft employee within the SQL Azure group where I work as a Program Manager. This is a series of posts where I talk about my experience building a startup outside of Microsoft. I do my best to take my Microsoft hat off and tell both the good parts and the bad parts I experienced using Azure.

In my previous post I talked about how I built the Cotega queuing system on SQL Azure. I was pleased at how well it worked. Unfortunately, it had one fundamental problem. The whole point of the Cotega service is to tell users if there are issues with their SQL Azure databases, such as connectivity issues. If a problem with SQL Azure was blocking users from connecting to their databases, then this problem would very likely also affect the Cotega queuing system, since it is also based on SQL Azure. If I am not able to connect to SQL Azure, then I also cannot retrieve users’ jobs, which means I cannot tell users there are problems.

Giving up on SQL Azure Queues

This was a big enough problem that I decided to investigate Windows Azure queues. They turned out to be very easy to implement and, as it turned out, simplified a lot of things I had implemented manually in the SQL Azure queuing system. For example, orphaned jobs, which occurred when a Worker Role failed or crashed, were handled automatically by the queues.
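The automatic handling of orphaned jobs comes from the queue's visibility timeout: dequeuing a message only hides it, and it becomes visible again unless the worker deletes it. The toy model below sketches that rule, together with the initial visibility delay and time to live used when adding a message. `ToyQueue` is a hypothetical in-memory class, not the StorageClient API, and time is passed in explicitly so the behavior is deterministic.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory model of Windows Azure queue visibility rules.
// Not the StorageClient API: "now" is an explicit offset so the demo is deterministic.
public sealed class ToyQueue
{
    private sealed class Entry
    {
        public string Body = "";
        public TimeSpan VisibleAt;   // earliest time the message can be dequeued
        public TimeSpan ExpiresAt;   // time to live: the message is dropped after this
    }

    private readonly List<Entry> entries = new List<Entry>();

    // Enqueue a message that stays hidden until now + initialDelay
    // and is kept until now + timeToLive.
    public void Add(string body, TimeSpan now, TimeSpan timeToLive, TimeSpan initialDelay)
    {
        entries.Add(new Entry
        {
            Body = body,
            VisibleAt = now + initialDelay,
            ExpiresAt = now + timeToLive
        });
    }

    // Dequeue the first visible, unexpired message and hide it for visibilityTimeout.
    // Returns null when nothing is visible. A message that is never deleted
    // (for example because the worker crashed) becomes visible again later.
    public string Get(TimeSpan now, TimeSpan visibilityTimeout)
    {
        entries.RemoveAll(e => e.ExpiresAt <= now);
        Entry next = entries.FirstOrDefault(e => e.VisibleAt <= now);
        if (next == null) return null;
        next.VisibleAt = now + visibilityTimeout;
        return next.Body;
    }

    // A worker that finished the job removes the message for good.
    // (Real queues delete by message ID and pop receipt; matching on body keeps this short.)
    public void Delete(string body)
    {
        entries.RemoveAll(e => e.Body == body);
    }
}
```

For example, a job added with a 5-minute delay is invisible at minute 0, is returned at minute 5, and, if the worker dies without calling `Delete`, is handed out again once its visibility timeout expires.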

Hiding Jobs in Windows Azure Queues

The only thing that took a little work to figure out was how to hide a job for a specific period of time. For example, if I did not want to run a job for another 5 minutes, I would need to add the job to the queue but make it invisible for 5 minutes. As it turned out, there is an overload of the CloudQueue.AddMessage method that can set this value. For example, this code sets the message to be invisible for 5 minutes and sets its time to live to 10 hours:

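```csharp
using System;
using Microsoft.WindowsAzure.StorageClient;

// QueueClient (a CloudQueueClient), queueName and message (a CloudQueueMessage)
// are assumed to be defined elsewhere
CloudQueue queue = QueueClient.GetQueueReference(queueName);

// time to live: the message is removed if it is still in the queue after 10 hours
TimeSpan runTime = new TimeSpan(10, 0, 0);

// initial visibility delay: the message stays hidden for the first 5 minutes
TimeSpan frequency = new TimeSpan(0, 5, 0);

queue.AddMessage(message, runTime, frequency);
```

Windows Azure Queue Pricing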

Windows Azure Queues also turned out to be a lot cheaper than I expected. For example, let’s say I have a single thread that checks the queue for a new job every 2 seconds. Even though this is 1,314,000 storage transactions / month (30 transactions / minute * 60 min * 24 hours * [365/12] days), it still only costs $1.31 / month (1.314 million transactions / 10,000 * $0.01). Even if I have multiple worker roles with multiple threads, this is extremely low pricing.
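The estimate above is easy to re-run for other polling intervals. A small sketch, assuming the same $0.01 per 10,000 transactions rate quoted in the post and one storage transaction per poll (each check of the queue counts, whether or not it finds a job); `QueuePollingCost` is a hypothetical helper name.

```csharp
using System;

// Back-of-the-envelope queue polling cost, following the arithmetic in the post:
// one storage transaction per poll, 365/12 days per month, $0.01 per 10,000 transactions.
public static class QueuePollingCost
{
    public static decimal TransactionsPerMonth(decimal pollIntervalSeconds)
    {
        if (pollIntervalSeconds <= 0m)
            throw new ArgumentOutOfRangeException(nameof(pollIntervalSeconds));

        decimal perMinute = 60m / pollIntervalSeconds;   // 30 per minute when polling every 2 s
        // per minute -> per hour -> per day -> per average month (365 / 12 days);
        // dividing by 12 last keeps the result exact for the post's numbers
        return perMinute * 60m * 24m * 365m / 12m;
    }

    public static decimal MonthlyCost(decimal transactions, decimal pricePer10000 = 0.01m)
    {
        return transactions / 10000m * pricePer10000;
    }
}
```

`QueuePollingCost.MonthlyCost(QueuePollingCost.TransactionsPerMonth(2m))` reproduces the post's figures: 1,314,000 transactions and $1.314 (about $1.31) per month for one thread. Multiply by the number of polling threads across all worker role instances for a multi-threaded deployment.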

In the end I was really happy with Windows Azure queues, and I wish I had made more of a time investment in learning this technology from the start.

<Return to section navigation list>

SQL Azure Database, Federations and Reporting

Cihan Biyikoglu (@cihangirb) posted Implementing MERGE command using SQL Azure Migration Wizard by @gihuey on 2/20/2012:

Well, I am sure you noticed that federations support the SPLIT AT and DROP AT commands, but not MERGE AT yet. What would this MERGE command do? It would do the symmetric opposite of SPLIT. SPLIT introduces a new split point into the federation, so MERGE would glue the members back together. Here is what that would look like in real life: imagine a member layout of member #1 covering the range 0 to 50 and member #2 covering 50 to 100. MERGE would bring these 2 members back into a single member with a command like this (note: by the time we are done with it, the syntax could be different):

ALTER FEDERATION … MERGE AT (key=50)

This operation would move the data back into a single member covering all federated data in 0..100. MERGE AT would also allow transactions to continue on the 2 source members, 0..50 and 50..100, while the system rearranges the data back into a single member, so it would not require any downtime.
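To make the SPLIT/MERGE symmetry concrete, here is a small sketch of the range bookkeeping only. It assumes each member covers a half-open key range [low, high), which matches the 0..50 / 50..100 example; the hypothetical `MemberRange` and `FederationMath` types move no data and are not a SQL Azure API.

```csharp
using System;

// Hypothetical model of a federation member's key range: Low is inclusive, High exclusive.
public readonly struct MemberRange
{
    public MemberRange(int low, int high)
    {
        if (low >= high) throw new ArgumentException("Low must be less than High.");
        Low = low;
        High = high;
    }

    public int Low { get; }
    public int High { get; }

    public override string ToString() => $"[{Low}, {High})";
}

public static class FederationMath
{
    // SPLIT AT (key = k): [Low, High) becomes [Low, k) and [k, High).
    public static (MemberRange Left, MemberRange Right) SplitAt(MemberRange member, int key)
    {
        if (key <= member.Low || key >= member.High)
            throw new ArgumentOutOfRangeException(nameof(key), $"{key} is not inside {member}.");
        return (new MemberRange(member.Low, key), new MemberRange(key, member.High));
    }

    // MERGE AT (key = k): the inverse of SPLIT. The two members must meet exactly at k.
    public static MemberRange MergeAt(MemberRange left, MemberRange right, int key)
    {
        if (left.High != key || right.Low != key)
            throw new InvalidOperationException($"{left} and {right} do not meet at {key}.");
        return new MemberRange(left.Low, right.High);
    }
}
```

Merging `[0, 50)` and `[50, 100)` at 50 yields `[0, 100)`, and splitting that result at 50 gives back the original two ranges; merging members that do not share the boundary key is rejected.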

Some ask why we did not implement MERGE. We could have waited until MERGE was implemented to light up federations, but many customers told us that they wouldn’t need MERGE right away and that they badly needed SPLIT AT. Given the original pricing model in SQL Azure, MERGE also didn’t make much sense. Not so anymore: the new pricing model, explained here, gives you benefits when you combine members into larger sizes.

So what can you do today, until we get MERGE done? Big thanks to George Huey (@gihuey) for a very timely article that shows how to implement MERGE yourself with the SQL Azure Migration Wizard, so you can take advantage of the new pricing model and pay less. There are a few limitations: it isn’t a single high-level command like ALTER FEDERATION, and it requires downtime for your app, so it is not an online operation. But it can be done! Take a look at the section titled “scaling down” [of the “Scaling Out with SQL Azure Federation[s]” article linked below] for details.

Cihan Biyikoglu (@cihangirb) described The New Pricing Model for SQL Azure Explained! in a 2/16/2012 post:

The new pricing model is an amazing improvement over the original model. In 60 seconds here are the 2 major improvements you will notice:

#1 - New 100MB Option: Smaller databases have been a big ask. If your database tier is not multi-tenant, this may be the way to go, given that you can have many tenants. The story is told better with this picture, which only covers database capacities up to 10GB. Look at the bottom left corner: that is the 0.1GB database.

#2 - Granular Increments: The new model introduces 1GB increments, as opposed to the original jump points: 1-5-10-20-30-40-50-150…GB. The picture looks so different that I think it is easiest to begin the story with the only point that didn’t change: 1GB database capacity. That is still $9.99, but everything else simply improved for customers. Obviously the engineering team is in tears, just like in that VW commercial. To help you appreciate the price difference, here is the full picture showing the savings all the way up to 150GB databases. We have savings of over 78% for some of the segments.
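The effect of 1GB increments is easiest to see as a tiered formula. The sketch below is an illustration only: apart from the $9.99 price for 1GB, the per-tier rates are assumptions based on Microsoft's February 2012 pricing announcement as we recall it, and rounding partial gigabytes up to the next whole GB is also an assumption. Check the Pricing Details page listed below before relying on any figure. `SqlAzurePrice2012` is a hypothetical name.

```csharp
using System;

// Sketch of a tiered monthly price for one SQL Azure database (sizes in GB, prices in USD).
// ASSUMPTION: every rate except the $9.99 1GB price is our recollection of the Feb 2012 list
// prices; verify against the official pricing details before using these numbers.
public static class SqlAzurePrice2012
{
    public static decimal MonthlyPrice(decimal sizeGb)
    {
        if (sizeGb <= 0m || sizeGb > 150m)
            throw new ArgumentOutOfRangeException(nameof(sizeGb));

        if (sizeGb <= 0.1m) return 4.995m;                       // new 100MB option (assumed rate)

        // ASSUMPTION: larger databases are billed in whole-GB increments, rounded up.
        decimal gb = Math.Ceiling(sizeGb);
        if (gb <= 1m) return 9.99m;                               // unchanged 1GB price
        if (gb <= 10m) return 9.99m + (gb - 1m) * 3.996m;         // each GB above 1 (assumed)
        if (gb <= 50m) return 45.954m + (gb - 10m) * 1.998m;      // each GB above 10 (assumed)
        return 125.874m + (gb - 50m) * 0.999m;                    // each GB above 50 (assumed)
    }
}
```

Under these assumed rates each tier starts exactly where the previous one ends (10GB is $45.954, 50GB is $125.874), and a 150GB database comes to $225.774 per month.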

One important point to remember: for this price you are not getting only database storage! Beyond storage, the simplified price measurement on database-GBs in SQL Azure also includes the rich T-SQL language with stored procedures for high-performance query processing and transactional processing, the built-in high availability, and the worry-free physical administration you get with SQL Azure.

For more information, here are a few useful links:

- Price Calculator: http://www.windowsazure.com/en-us/pricing/calculator/

- Pricing Details: http://www.windowsazure.com/en-us/pricing/details/

- Offers: http://www.windowsazure.com/en-us/pricing/purchase-options/

- Pay by Invoice option: http://www.windowsazure.com/en-us/pricing/invoicing/

Mark Scurrell (@mscurell) alerted users with Info on Latest Data Sync Update on 2/15/2012:

We updated the service again at the end of January. Details about that update, a summary of changes in previous updates, as well as pointers to introductory videos can be found on this post to the Windows Azure blog:

http://blogs.msdn.com/b/windowsazure/archive/2012/01/26/announcing-sql-azure-data-sync-preview-refresh.aspx.

<Return to section navigation list>

Marketplace DataMarket, Social Analytics, Big Data and OData

Neep posted an OData for ComicRack provider on 2/19/2012:

I'm hoping other developers will find this stuff useful. I think I've seen people mention wanting to get their catalog information as a web page to be used on any kind of device. I want to keep the application itself at the center because it's way better at organizing than anything I want to write. For example, I want to have an iPad application (or anything, really) that points to a URL on my own machine, where ComicRack is running, to look at comics.

What I've done is make a simple OData provider for ComicRack that is hosted as a plugin inside the ComicRack application and uses its API as a data source. I did this just using WCF Data Services from Microsoft.

- What OData is: www.odata.org/

- WCF Data Services: msdn.microsoft.com/en-us/library/cc668792.aspx

- WCF Data Services blog: blogs.msdn.com/b/astoriateam/

The attached plugin zip (I haven't bothered making it a really nice plugin yet) should be able to be installed into ComicRack. A blank button should appear, and a little dialog to start or stop the service will be presented. You can specify a port to bind to on localhost. !!You currently have to run ComicRack with admin privileges because .NET requires them to bind to a port!! Once it starts, you should be able to browse to it (e.g. localhost:8080/Comics). It's always /Comics.

In IE, you'll see an Atom feed. Chrome will render it as XML like this:

```xml
<?xml version="1.0" encoding="iso-8859-1"?>
<feed xml:base="http://localhost:8080/" xmlns="http://www.w3.org/2005/Atom" xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices" xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata" xmlns:georss="http://www.georss.org/georss" xmlns:gml="http://www.opengis.net/gml">
  <id>http://localhost:8080/Comics</id>
  <title type="text">Comics</title>
  <updated>2012-02-19T17:21:42Z</updated>
  <link rel="self" title="Comics" href="Comics" />
  <entry>
    <id>http://localhost:8080/Comics('2aed8ceb-0a41-43da-bfdf-1ab16ef36032')</id>
    <category term="ComicRackODataService.Comic" scheme="http://schemas.microsoft.com/ado/2007/08/dataservices/scheme" />
    <link rel="edit" title="Comic" href="Comics('2aed8ceb-0a41-43da-bfdf-1ab16ef36032')" />
    <title type="text">The Black Ring, Part Three; A Look at Things to Come in... Superboy</title>
    <summary type="text">Action Comics</summary>
    <updated>2012-02-19T17:21:42Z</updated>
    <author>
      <name />
    </author>
    <link rel="edit-media" title="Comic" href="Comics('2aed8ceb-0a41-43da-bfdf-1ab16ef36032')/$value" />
    <content type="application/zip" src="Comics('2aed8ceb-0a41-43da-bfdf-1ab16ef36032')/$value" />
    <m:properties>
      <d:Id>2aed8ceb-0a41-43da-bfdf-1ab16ef36032</d:Id>
      <d:Description>Action Comics</d:Description>
    </m:properties>
  </entry>
  <entry>
    <id>http://localhost:8080/Comics('7795a343-76a6-4185-add1-9b8b704135cc')</id>
    <category term="ComicRackODataService.Comic" scheme="http://schemas.microsoft.com/ado/2007/08/dataservices/scheme" />
    <link rel="edit" title="Comic" href="Comics('7795a343-76a6-4185-add1-9b8b704135cc')" />
    <title type="text">The Black Ring, Part Four; Jimmy Olsen's Big Week, Day One</title>
    <summary type="text">Action Comics</summary>
    <updated>2012-02-19T17:21:42Z</updated>
    <author>
      <name />
    </author>
```

A lot of stuff in there. This is intended to be consumed by anything that understands OData. To test it for a single comic, you can go to one of the URLs (e.g. http://localhost:8080/Comics('7795a343-76a6-4185-add1-9b8b704135cc')).
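Any OData-aware client can consume this feed, but even without an OData library the Atom payload is plain XML. A minimal sketch using LINQ to XML, assuming the entry layout shown in the feed: because each comic is exposed as a media link entry, `m:properties` sits directly under `entry` rather than inside `content`. `ComicFeedReader` and `ComicEntry` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// One row per Atom <entry>; names are illustrative.
public sealed class ComicEntry
{
    public string Id { get; set; } = "";
    public string Title { get; set; } = "";
    public string Description { get; set; } = "";
    public string MediaSrc { get; set; } = "";   // relative URL of the /$value stream
}

public static class ComicFeedReader
{
    private static readonly XNamespace Atom = "http://www.w3.org/2005/Atom";
    private static readonly XNamespace M = "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata";
    private static readonly XNamespace D = "http://schemas.microsoft.com/ado/2007/08/dataservices";

    public static List<ComicEntry> Read(string atomXml)
    {
        XDocument doc = XDocument.Parse(atomXml);
        return doc.Root.Elements(Atom + "entry")
            .Select(e => new ComicEntry
            {
                Id = (string)e.Element(Atom + "id") ?? "",
                Title = (string)e.Element(Atom + "title") ?? "",
                // media link entries carry m:properties outside <content>
                Description = (string)e.Element(M + "properties")?.Element(D + "Description") ?? "",
                MediaSrc = (string)e.Element(Atom + "content")?.Attribute("src") ?? ""
            })
            .ToList();
    }
}
```

Missing elements map to empty strings rather than exceptions, which keeps the reader tolerant of entries that omit optional properties.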

To stream the actual comic file, you can go to:

http://localhost:8080/Comics('7795a343-76a6-4185-add1-9b8b704135cc')/$value

This always gives you a zip file in Chrome because I made the content type application/zip, but it's really whatever format you wish.
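The URL shapes the provider exposes follow standard OData addressing: the entity set at /Comics, a single entity addressed by its key, and the media resource at /$value. A small sketch of building them; `ComicRackUrls` is a hypothetical helper, and `FilterByDescription` is an extra illustration of OData `$filter` syntax against the `Description` property seen in the feed, not something the post tried against the plugin.

```csharp
using System;

// Hypothetical helper that builds the ComicRack OData URLs described in the post.
public static class ComicRackUrls
{
    private static string Root(int port) => "http://localhost:" + port + "/Comics";

    // Entity set: http://localhost:{port}/Comics
    public static Uri EntitySet(int port) => new Uri(Root(port));

    // Single entity by key, e.g. /Comics('7795a343-76a6-4185-add1-9b8b704135cc')
    public static Uri Entity(int port, Guid id) =>
        new Uri(Root(port) + "('" + id.ToString("D") + "')");

    // Media resource (the comic file itself): the entity URL plus /$value
    public static Uri MediaResource(int port, Guid id) =>
        new Uri(Root(port) + "('" + id.ToString("D") + "')/$value");

    // OData string literals are single-quoted, with embedded quotes doubled.
    public static Uri FilterByDescription(int port, string description)
    {
        string literal = "'" + description.Replace("'", "''") + "'";
        return new Uri(Root(port) + "?$filter=" + Uri.EscapeDataString("Description eq " + literal));
    }
}
```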

All of this is intended to be used by an app. My first plan is to convert my Silverlight app (comictool.codeplex.com/) so that it can read the ComicRack data, just to prove it all out. Then maybe a mobile device.

Download from here: hathcock.co.uk/ComicRackODataService.zip

Let me know what you guys think.

David Linthicum (@DavidLinthicum) asserted “Although big data and cloud computing are linked, there are technical reasons you shouldn't combine them by default” in a deck for his Big data and the cloud: A far from perfect fit article of 2/17/2012 for InfoWorld’s Cloud Computing blog:

Big data seems to be all the rage these days. It's big, it's new, it's Hadoop-related, and it's typically in the public cloud. New startups and cloud offerings show up weekly, promising they'll finally get your data issues under control. They all promote the same idea: the migration to huge petabyte databases with almost "unlimited scalability" through the elasticity of the public cloud.

The reality is different than the hype lets on. As organizations try to consolidate their enterprise data into large databases that exist in the public cloud, they may be overlooking a few technical realities.

First, big data means big integration challenges. Thus, the ability to get the data from the enterprise to the public cloud may be problematic. Although you can certainly ship up a couple hundred thousand data records each day over the open Internet, in many cases we're talking millions of data records that must be transformed, translated, and synced from existing enterprise systems.

You bump your head on the bandwidth limitations quickly. Indeed, many enterprises are actually shipping USB drives via Federal Express to their public cloud providers to pump their big data system with current data.
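The bandwidth point is simple arithmetic: transfer time is data size divided by effective throughput. A minimal sketch with hypothetical inputs (the article gives no specific data sizes or link speeds); `TransferEstimate` is an illustrative name, and `efficiency` is an assumed fudge factor for protocol overhead and contention.

```csharp
using System;

// Rough time to push a data set over a network link.
// Inputs are hypothetical; efficiency = 1.0 means the full nominal line rate.
public static class TransferEstimate
{
    public static TimeSpan UploadTime(double terabytes, double megabitsPerSecond, double efficiency = 1.0)
    {
        if (terabytes < 0 || megabitsPerSecond <= 0 || efficiency <= 0 || efficiency > 1)
            throw new ArgumentOutOfRangeException();

        double bits = terabytes * 1e12 * 8;                           // decimal terabytes to bits
        double bitsPerSecond = megabitsPerSecond * 1e6 * efficiency;  // effective throughput
        return TimeSpan.FromSeconds(bits / bitsPerSecond);
    }
}
```

At a nominal 100 Mbps with no overhead, `UploadTime(1, 100)` is 80,000 seconds, a little over 22 hours for a single terabyte, before any transformation or sync work; at half efficiency it doubles.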

Second, although security is indeed what you make of it in the cloud, it's typically cheaper to deal with data-level security in on-premise systems or in private clouds. In many cases, their security models and technology are less expensive. For example, you may have to encrypt data at rest in the public cloud -- but not in your old data center. Compliance is typically easier and cheaper when keeping data locally as well.

By the way, before you send in the hate mail, I'm not saying that big data is never a fit for public clouds, but you need to consider all the issues with the technologies. As with other architectural problems, you have to judge them on a case-by-case basis.

<Return to section navigation list>

Windows Azure Access Control, Service Bus and Workflow

Vittorio Bertocci (@vibronet) announced WIF Runtime Now Available on WebPI on 2/20/2012:

You asked for it, and there it is. The Windows Identity Foundation runtime is now available on WebPI for your deployment pleasure, just search for “identity” and it will pop out.

Thanks to Marc Goodner for making this happen!

Steve Peschka continued his series with The Azure Custom Claim Provider for SharePoint Project Part 3 on 2/20/2012:

In Part 1 of this series, I briefly outlined the goals for this project, which at a high level is to use Windows Azure table storage as a data store for a SharePoint custom claims provider. The claims provider is going to use the CASI Kit to retrieve the data it needs from Windows Azure in order to provide people picker (i.e. address book) and type-in control name resolution functionality.

In Part 2, I walked through all of the components that run in the cloud – the data classes that are used to work with Azure table storage and queues, a worker role to read items out of queues and populate table storage, and a WCF front end that lets a client application create new items in the queue as well as do all the standard SharePoint people picker stuff – provide a list of supported claim types, search for claim values and resolve claims.

In this, the final part in this series, we’ll walk through the different components used on the SharePoint side. It includes a custom component built using the CASI Kit to add items to the queue as well as to make our calls to Azure table storage. It also includes our custom claims provider, which will use the CASI Kit component to connect SharePoint with those Azure functions.

To begin with, let’s take a quick look at the custom CASI Kit component. I’m not going to spend a whole lot of time here because the CASI Kit is covered extensively on this blog. This particular component is described in Part 3 of the CASI Kit series. Briefly though, what I’ve done is create a new Windows class library project. I’ve added references to the CASI Kit base class assembly and the other required .NET assemblies (which I describe in Part 3). I’ve added a Service Reference in my project to the WCF endpoint I created in Part 2 of this project. Finally, I added a new class to the project, had it inherit the CASI Kit base class, and added the code to override the ExecuteRequest method. As you have hopefully seen in the CASI Kit series, here’s what my code looks like to override ExecuteRequest:

```csharp
using System;
using System.Diagnostics;
using Microsoft.SharePoint;

public class DataSource : AzureConnect.WcfConfig
{
    public override bool ExecuteRequest()
    {
        try
        {
            //create the proxy instance with bindings and endpoint the base class
            //configuration control has created for this
            AzureClaims.AzureClaimsClient cust =
                new AzureClaims.AzureClaimsClient(this.FedBinding,
                this.WcfEndpointAddress);

            //configure the channel so we can call it with
            //FederatedClientCredentials.
            SPChannelFactoryOperations.ConfigureCredentials<AzureClaims.IAzureClaims>
                (cust.ChannelFactory,
                Microsoft.SharePoint.SPServiceAuthenticationMode.Claims);

            //create a channel to the WCF endpoint using the
            //token and claims of the current user
            AzureClaims.IAzureClaims claimsWCF =
                SPChannelFactoryOperations.CreateChannelActingAsLoggedOnUser
                <AzureClaims.IAzureClaims>(cust.ChannelFactory,
                this.WcfEndpointAddress,
                new Uri(this.WcfEndpointAddress.Uri.AbsoluteUri));

            //set the client property for the base class
            this.WcfClientProxy = claimsWCF;
        }
        catch (Exception ex)
        {
            Debug.WriteLine(ex.Message);
        }

        //now that the configuration is complete, call the method
        return base.ExecuteRequest();
    }
}
```

“AzureClaims” is the name of the Service Reference I created, and it uses the IAzureClaims interface that I defined in my WCF project in Azure. As explained previously in the CASI Kit series, this is basically boilerplate code; I’ve just plugged in the name of my interface and class that is exposed in the WCF application. The other thing I’ve done, as is also explained in the CASI Kit series, is to create an ASPX page called AzureClaimProvider.aspx. I just copied and pasted in the code I describe in Part 3 of the CASI Kit series and substituted the name of my class and the endpoint it can be reached at. The control tag in the ASPX page for my custom CASI Kit component looks like this:

```xml
<AzWcf:DataSource runat="server" id="wcf" WcfUrl="https://spsazure.vbtoys.com/AzureClaims.svc" OutputType="Page" MethodName="GetClaimTypes" AccessDeniedMessage="" />
```

The main things to note here are that I created a CNAME record for “spsazure.vbtoys.com” that points to my Azure application at cloudapp.net (this is also described in Part 3 of the CASI Kit). I’ve set the default MethodName that the page is going to invoke to be GetClaimTypes, which is a method that takes no parameters and returns a list of claim types that my Azure claims provider supports. This makes it a good test to validate the connectivity between my Azure application and SharePoint. I can simply go to http://anySharePointsite/_layouts/AzureClaimProvider.aspx and if everything is configured correctly I will see some data in the page. Once I’ve deployed my project by adding the assembly to the Global Assembly Cache and deploying the page to SharePoint’s _layouts directory that’s exactly what I did – I hit the page in one of my sites and verified that it returned data, so I knew my connection between SharePoint and Azure was working.

Now that I have the plumbing in place, I finally get to the “fun” part of the project, which is to do two things:

- 1. Create “some component” that will send information about new users to my Azure queue

- 2. Create a custom claims provider that will use my custom CASI Kit component to provide claim types, name resolution and search for claims.

This is actually a good point to stop and step back a little. In this particular case I just wanted to roll something out as quickly as possible, so I created a new web application and enabled anonymous access. As I’m sure you all know, just enabling it at the web app level does NOT enable it at the site collection level. So for this scenario, I also enabled it at the root site collection only, and I granted access to everything in that site. All other site collections, which contain information for members only, do NOT have anonymous access enabled, so users have to be granted rights to join.

The next thing to think about is how to manage the identities that are going to use the site. Obviously, that’s not something I want to do. I could have come up with a number of different methods to sync accounts into Azure or something goofy like that, but as I explained in Part 1 of this series, there are a whole bunch of providers that do that already, so I’m going to let them keep doing what they do. What I mean by that is that I took advantage of another Microsoft cloud service called ACS, or Access Control Service. In short, ACS acts like an identity provider to SharePoint. So I just created a trust between my SharePoint farm and the instance of ACS that I created for this POC. In ACS I added SharePoint as a relying party, so ACS knows where to send users once they’ve authenticated. Inside of ACS, I also configured it to let users sign in using their Gmail, Yahoo, or Facebook accounts. Once they’ve signed in, ACS gets back a single claim that I’ll use (the email address) and sends it back to SharePoint.

Okay, so that’s all of the background on the plumbing – Azure is providing table storage and queues to work with the data, ACS is providing authentication services, and CASI Kit is providing the plumbing to the data.

So with all that plumbing described, how are we going to use it? Well, I still wanted the process of becoming a member to be pretty painless, so I wrote a web part to add users to my Azure queue. It checks to see if the request is authenticated (i.e. the user has clicked the Sign In link that you get in an anonymous site, signed into one of the providers I mentioned above, and ACS has sent me back their claim information). If the request is not authenticated, the web part doesn’t do anything. However, if the request is authenticated, it renders a button that, when clicked, takes the user’s email claim and adds it to the Azure queue. This is the part about which I said we should step back a moment and think. For a POC that’s all fine and good; it works. However, you can think of other ways in which you could process this request. For example, maybe you write the information to a SharePoint list. You could write a custom timer job (which the CASI Kit works with very nicely) and periodically process new requests out of that list. You could use the SPWorkItem to queue the requests up to process later. You could store it in a list and add a custom workflow that goes through some approval process and, once the request has been approved, uses a custom workflow action to invoke the CASI Kit to push the details up to the Azure queue. In short, there’s a LOT of power, flexibility and customization possible here; it’s all up to your imagination. At some point I may in fact write another version of this that writes to a custom list, processes it asynchronously, adds the data to the Azure queue and then automatically adds the account to the Visitors group in one of the sub sites, so the user would be signed up and ready to go right away. But that’s for another post if I do that.

So, all that being said: as I described above, I’m just letting the user click a button if they’ve signed in, and then I use my custom CASI Kit component to call out to the WCF endpoint and add the information to the Azure queue. Here’s the code for the web part, which is pretty simple, courtesy of the CASI Kit:

```csharp
using System;
using System.Diagnostics;
using System.Linq;
using System.Web.UI.WebControls;
using System.Web.UI.WebControls.WebParts;
using Microsoft.IdentityModel.Claims;

public class AddToAzureWP : WebPart
{
    //button whose click event we need to track so that we can
    //add the user to Azure
    Button addBtn = null;
    Label statusLbl = null;

    protected override void CreateChildControls()
    {
        if (this.Page.Request.IsAuthenticated)
        {
            addBtn = new Button();
            addBtn.Text = "Request Membership";
            addBtn.Click += new EventHandler(addBtn_Click);
            this.Controls.Add(addBtn);

            statusLbl = new Label();
            this.Controls.Add(statusLbl);
        }
    }

    void addBtn_Click(object sender, EventArgs e)
    {
        try
        {
            //look for the claims identity
            IClaimsPrincipal cp = Page.User as IClaimsPrincipal;

            if (cp != null)
            {
                //get the claims identity so we can enum claims
                IClaimsIdentity ci = (IClaimsIdentity)cp.Identity;

                //look for the email claim
                //see if there are claims present before running through this
                if (ci.Claims.Count > 0)
                {
                    //look for the email address claim
                    var eClaim = from Claim c in ci.Claims
                                 where c.ClaimType == "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress"
                                 select c;

                    Claim ret = eClaim.FirstOrDefault<Claim>();

                    if (ret != null)
                    {
                        //create the string we're going to send to the Azure queue: claim, value, and display name
                        //note that I'm using "#" as delimiters because there is only one parameter, and CASI Kit
                        //uses ; as a delimiter so a different value is needed. If ; were used CASI would try and
                        //make it three parameters, when in reality it's only one
                        string qValue = ret.ClaimType + "#" + ret.Value + "#" + "Email";

                        //create the connection to Azure and upload
                        //create an instance of the control
                        AzureClaimProvider.DataSource cfgCtrl = new AzureClaimProvider.DataSource();

                        //set the properties to retrieve data; must configure cache properties since we're using it programmatically
                        //cache is not actually used in this case though
                        cfgCtrl.WcfUrl = AzureCCP.SVC_URI;
                        cfgCtrl.OutputType = AzureConnect.WcfConfig.DataOutputType.None;
                        cfgCtrl.MethodName = "AddClaimsToQueue";
                        cfgCtrl.MethodParams = qValue;
                        cfgCtrl.ServerCacheTime = 10;
                        cfgCtrl.ServerCacheName = ret.Value;
                        cfgCtrl.SharePointClaimsSiteUrl = this.Page.Request.Url.ToString();

                        //execute the method
                        bool success = cfgCtrl.ExecuteRequest();

                        if (success)
                        {
                            //if it worked tell the user
                            statusLbl.Text = "<p>Your information was successfully added. You can now contact any of " +
                                "the other Partner Members or our Support staff to get access rights to Partner " +
                                "content. Please note that it takes up to 15 minutes for your request to be " +
                                "processed.</p>";
                        }
                        else
                        {
                            statusLbl.Text = "<p>There was a problem adding your info to Azure; please try again later or " +
                                "contact Support if the problem persists.</p>";
                        }
                    }
                }
            }
        }
        catch (Exception ex)
        {
            statusLbl.Text = "There was a problem adding your info to Azure; please try again later or " +
                "contact Support if the problem persists.";
            Debug.WriteLine(ex.Message);
        }
    }
}
```

So a brief rundown of the code looks like this: I first make sure the request is authenticated; if it is, I add the button to the page along with an event handler for the button’s click event. In the button’s click event handler I get an IClaimsPrincipal reference to the current user, and then look at the user’s claims collection. I run a LINQ query against the claims collection to look for the email claim, which is the identity claim for my SPTrustedIdentityTokenIssuer. If I find the email claim, I create a concatenated string with the claim type, claim value and friendly name for the claim. Again, this isn’t strictly required in this scenario, but since I wanted this to be usable in a more generic scenario I coded it up this way. That concatenated string is the value for the method I have on the WCF service that adds data to the Azure queue. I then create an instance of my custom CASI Kit component and configure it to call the WCF method that adds data to the queue, then I call the ExecuteRequest method to actually fire off the data.
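The email-claim lookup and the '#'-delimited payload can be tried outside SharePoint. In this sketch `SimpleClaim` is a hypothetical stand-in for the WIF `Claim` type, `QueuePayload` is an illustrative helper, and splitting on ';' is our assumed model of how CASI Kit separates `MethodParams`, based on the comment in the web part code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the WIF Claim type; only ClaimType and Value are modeled.
public sealed class SimpleClaim
{
    public SimpleClaim(string claimType, string value)
    {
        ClaimType = claimType;
        Value = value;
    }

    public string ClaimType { get; }
    public string Value { get; }
}

public static class QueuePayload
{
    public const string EmailClaimType =
        "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress";

    // Same idea as the web part's LINQ query: the first email claim, or null if there is none.
    public static SimpleClaim FindEmail(IEnumerable<SimpleClaim> claims) =>
        claims.FirstOrDefault(c => c.ClaimType == EmailClaimType);

    // claimType#value#displayName. '#' is used because ';' would make the single
    // parameter look like three to a caller that splits on ';'.
    // Caveat: a claim value that itself contains '#' would break Parse.
    public static string Build(SimpleClaim claim, string displayName) =>
        claim.ClaimType + "#" + claim.Value + "#" + displayName;

    // What the receiving side could do to recover the three parts.
    public static string[] Parse(string payload) => payload.Split('#');
}
```

Built this way, the payload stays a single parameter when split on ';' and still splits back into claim type, value and display name on the receiving side.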

If I get a response indicating I was successful adding data to the queue, then I let the user know; otherwise I let him know there was a problem and he may need to check again later. In a real scenario, of course, I would have even more error logging so I could track down exactly what happened and why. Even as is, though, the CASI Kit writes any error information to the ULS logs in an SPMonitoredScope, so everything it does for the request will have a unique correlation ID with which we can view all activity associated with the request. So I’m actually in a pretty good state right now.

Okay – we’ve walked through all the plumbing pieces, and I’ve shown how data gets added to the Azure queue and from there pulled out by a worker process and added into table storage. That’s really the ultimate goal because now we can walk through the custom claims provider. It’s going to use the CASI Kit to call out and query the Azure table storage I’m using. Let’s look at the most interesting aspects of the custom claims provider.

First let’s look at a couple of class level attributes:

```csharp
//the WCF endpoint that we'll use to connect for address book functions
//test url:  https://az1.vbtoys.com/AzureClaimsWCF/AzureClaims.svc
//production url:  https://spsazure.vbtoys.com/AzureClaims.svc
public static string SVC_URI = "https://spsazure.vbtoys.com/AzureClaims.svc";

//the identity claimtype value
private const string IDENTITY_CLAIM =
    "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress";

//the collection of claim types we support; it won't change over the course of
//the STS (w3wp.exe) life time so we'll cache it once we get it
AzureClaimProvider.AzureClaims.ClaimTypeCollection AzureClaimTypes =
    new AzureClaimProvider.AzureClaims.ClaimTypeCollection();
```

First, I use a static field to refer to the WCF endpoint to which the CASI Kit should connect. You’ll note that the comments list both my test endpoint and my production endpoint. When you’re using the CASI Kit programmatically, as we will in our custom claims provider, you always have to tell it which WCF endpoint it should talk to.

Next, as I’ve described previously, I’m using the email claim as my identity claim. Since I refer to it a number of times throughout my provider, I’ve just plugged it into a constant at the class level.

Finally, I have a collection of AzureClaimTypes. I explained in Part 2 of this series why I’m using a collection, and I’m just storing it here at the class level so that I don’t have to go and re-fetch that information each time my FillHierarchy method is invoked. Calls out to Azure aren’t cheap, so I minimize them where I can.
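Here is a minimal Python sketch of the caching idea outside SharePoint. It is not the author's C# code. `ClaimTypeCache` and its `fetch` callable are hypothetical stand-ins for the class-level `AzureClaimTypes` field and the CASI Kit call to `GetClaimTypes`. The lock is an added assumption: the original field isn't synchronized, and several requests can reach the same w3wp.exe process at once.

```python
import threading
from typing import Callable, List, Optional


class ClaimTypeCache:
    """Fetches the supported claim types once and reuses them afterwards."""

    def __init__(self, fetch: Callable[[], Optional[List[str]]]) -> None:
        self._fetch = fetch                  # stand-in for the WCF GetClaimTypes call
        self._claim_types: List[str] = []
        self._lock = threading.Lock()

    def get(self) -> List[str]:
        # Only call out when nothing is cached yet, mirroring the
        # "null or Count() == 0" check in the post's FillHierarchy code.
        if not self._claim_types:
            with self._lock:
                if not self._claim_types:    # re-check after taking the lock
                    result = self._fetch()
                    if result:               # failed/empty calls are not cached
                        self._claim_types = list(result)
        return self._claim_types
```

As in the original code, an empty or failed result is not cached, so the next call simply tries again.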

Here’s the next chunk of code:

```csharp
internal static string ProviderDisplayName
{
    get { return "AzureCustomClaimsProvider"; }
}

internal static string ProviderInternalName
{
    get { return "AzureCustomClaimsProvider"; }
}

//*******************************************************************
//USE THIS PROPERTY NOW WHEN CREATING THE CLAIM FOR THE PICKERENTITY
internal static string SPTrustedIdentityTokenIssuerName
{
    get { return "SPS ACS"; }
}

public override string Name
{
    get { return ProviderInternalName; }
}
```

I wanted to point this code out because my provider issues identity claims, so it MUST be the default provider for the SPTrustedIdentityTokenIssuer. Explaining how to do that is outside the scope of this post, but I’ve covered it elsewhere in my blog. The main thing to remember is that there must be a strong relationship between the name you use for your provider and the name used for the SPTrustedIdentityTokenIssuer. The value I used for ProviderInternalName is the name I must plug into the ClaimProviderName property of the SPTrustedIdentityTokenIssuer. I also need to use the name of the SPTrustedIdentityTokenIssuer when I create identity claims for users. So I’ve created an SPTrustedIdentityTokenIssuer called “SPS ACS” and put that name in my SPTrustedIdentityTokenIssuerName property. That’s why these values are hard-coded here.

Since I’m not doing any claims augmentation in this provider, I have not written any code to override FillClaimTypes, FillClaimValueTypes or FillEntityTypes. The next chunk of code I have is FillHierarchy, which is where I tell SharePoint what claim types I support. Here’s the code for that:

```csharp
protected override void FillHierarchy(Uri context, string[] entityTypes,
    string hierarchyNodeID, int numberOfLevels, SPProviderHierarchyTree hierarchy)
{
    try
    {
        if ((AzureClaimTypes.ClaimTypes == null) || (AzureClaimTypes.ClaimTypes.Count() == 0))
        {
            //create an instance of the control
            AzureClaimProvider.DataSource cfgCtrl = new AzureClaimProvider.DataSource();

            //set the properties to retrieve data; must configure cache properties since we're using it programmatically
            //cache is not actually used in this case though
            cfgCtrl.WcfUrl = SVC_URI;
            cfgCtrl.OutputType = AzureConnect.WcfConfig.DataOutputType.None;
            cfgCtrl.MethodName = "GetClaimTypes";
            cfgCtrl.ServerCacheTime = 10;
            cfgCtrl.ServerCacheName = "GetClaimTypes";
            cfgCtrl.SharePointClaimsSiteUrl = context.AbsoluteUri;

            //execute the method
            bool success = cfgCtrl.ExecuteRequest();

            if (success)
            {
                //if it worked, get the list of claim types out
                AzureClaimTypes = (AzureClaimProvider.AzureClaims.ClaimTypeCollection)cfgCtrl.QueryResultsObject;
            }
        }

        //make sure picker is asking for the type of entity we return; site collection admin won't for example
        if (!EntityTypesContain(entityTypes, SPClaimEntityTypes.User))
            return;

        //at this point we have whatever claim types we're going to have, so add them to the hierarchy
        //check to see if the hierarchyNodeID is null; it will be when the control
        //is first loaded but if a user clicks on one of the nodes it will return
        //the key of the node that was clicked on. This lets you build out a
        //hierarchy as a user clicks on something, rather than all at once
        //(ClaimTypes can still be null here if the WCF call failed)
        if ((string.IsNullOrEmpty(hierarchyNodeID)) &&
            (AzureClaimTypes.ClaimTypes != null) &&
            (AzureClaimTypes.ClaimTypes.Count() > 0))
        {
            //enumerate through each claim type
            foreach (AzureClaimProvider.AzureClaims.ClaimType clm in AzureClaimTypes.ClaimTypes)
            {
                //when it first loads add all our nodes
                hierarchy.AddChild(new Microsoft.SharePoint.WebControls.SPProviderHierarchyNode(
                    ProviderInternalName, clm.FriendlyName, clm.ClaimTypeName, true));
            }
        }
    }
    catch (Exception ex)
    {
        Debug.WriteLine("Error filling hierarchy: " + ex.Message);
    }
}
```

So here I’m checking whether I’ve already retrieved the list of claim types I support. If I haven’t, I create an instance of my custom CASI Kit control and call out to my WCF service to retrieve them by invoking the GetClaimTypes method on my WCF class. If I get data back, I plug it into the class-level variable I described earlier, AzureClaimTypes, and then I add each claim type to the hierarchy of claim types I support.

The next methods we’ll look at are the FillResolve methods. There are two FillResolve signatures because they do two different things. In one scenario we have a specific claim with a value and type, and SharePoint just wants to verify that it is valid. In the second, a user has just typed some value into the SharePoint type-in control, so it’s effectively the same thing as doing a search for claims. Because of that, I’ll look at them separately.

In the case where I have a specific claim and SharePoint wants to verify the values, I call a custom method I wrote called GetResolveResults. I pass it the Uri where the request is being made, as well as the claim type and claim value SharePoint is seeking to validate. GetResolveResults looks like this:

```csharp
//Note that claimType is being passed in here for future extensibility; in the
//current case though, we're only using identity claims
private AzureClaimProvider.AzureClaims.UniqueClaimValue GetResolveResults(string siteUrl,
    string searchPattern, string claimType)
{
    AzureClaimProvider.AzureClaims.UniqueClaimValue result = null;

    try
    {
        //create an instance of the control
        AzureClaimProvider.DataSource cfgCtrl = new AzureClaimProvider.DataSource();

        //set the properties to retrieve data; must configure cache properties since we're using it programmatically
        //cache is not actually used in this case though
        cfgCtrl.WcfUrl = SVC_URI;
        cfgCtrl.OutputType = AzureConnect.WcfConfig.DataOutputType.None;
        cfgCtrl.MethodName = "ResolveClaim";
        cfgCtrl.ServerCacheTime = 10;
        cfgCtrl.ServerCacheName = claimType + ";" + searchPattern;
        cfgCtrl.MethodParams = IDENTITY_CLAIM + ";" + searchPattern;
        cfgCtrl.SharePointClaimsSiteUrl = siteUrl;

        //execute the method
        bool success = cfgCtrl.ExecuteRequest();

        //if the query encountered no errors then capture the result
        if (success)
            result = (AzureClaimProvider.AzureClaims.UniqueClaimValue)cfgCtrl.QueryResultsObject;
    }
    catch (Exception ex)
    {
        Debug.WriteLine(ex.Message);
    }

    return result;
}
```

So here I’m creating an instance of the custom CASI Kit control and then calling the ResolveClaim method on my WCF service. That method takes two parameters, so I pass them in as semicolon-delimited values (because that’s how the CASI Kit distinguishes between different parameter values). I then just execute the request; if it finds a match it returns a single UniqueClaimValue, otherwise the return value is null. Back in my FillResolve method, this is what my code looks like:

```csharp
protected override void FillResolve(Uri context, string[] entityTypes,
    SPClaim resolveInput, List<PickerEntity> resolved)
{
    //make sure picker is asking for the type of entity we return; site collection admin won't for example
    if (!EntityTypesContain(entityTypes, SPClaimEntityTypes.User))
        return;

    try
    {
        //look for matching claims
        AzureClaimProvider.AzureClaims.UniqueClaimValue result =
            GetResolveResults(context.AbsoluteUri, resolveInput.Value,
            resolveInput.ClaimType);

        //if we found a match then add it to the resolved list
        if (result != null)
        {
            PickerEntity pe = GetPickerEntity(result.ClaimValue, result.ClaimType,
                SPClaimEntityTypes.User, result.DisplayName);
            resolved.Add(pe);
        }
    }
    catch (Exception ex)
    {
        Debug.WriteLine(ex.Message);
    }
}
```

So I’m first checking to make sure that the request is for a User claim, since that’s the only type of claim my provider returns. If the request is not for a User claim, I drop out. Next I call my method to resolve the claim, and if I get back a non-null result, I process it. To process it I call another custom method I wrote called GetPickerEntity. I pass in the claim type and value to create an identity claim, and then I add the PickerEntity it returns to the List of PickerEntity instances passed into my method. I’m not going to go into the GetPickerEntity method because this post is already incredibly long and I’ve covered how to do so in other posts on my blog.
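GetResolveResults packs its two WCF arguments into one string because the CASI Kit separates method parameters with semicolons. The hypothetical Python helpers below only illustrate that convention and are not part of the CASI Kit. The check that rejects values containing a semicolon is an assumption worth considering. Without it, a user-typed search pattern such as `a;b` would be split into two parameters.

```python
from typing import List

DELIMITER = ";"


def pack_params(*values: str) -> str:
    """Join method parameters into one semicolon-delimited string."""
    for value in values:
        if DELIMITER in value:
            # Assumption: reject rather than silently split a value in two
            raise ValueError(f"parameter contains {DELIMITER!r}: {value!r}")
    return DELIMITER.join(values)


def unpack_params(packed: str) -> List[str]:
    """Split a delimited string back into its individual parameters."""
    return packed.split(DELIMITER)


# The same shape as the ResolveClaim and SearchClaims calls in the post
identity_claim = "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress"
resolve_params = pack_params(identity_claim, "sp")
search_params = pack_params(identity_claim, "sp", "200")
```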

Now let’s talk about the other FillResolve method. As I explained earlier, it acts just like a search, so I’m going to cover the FillResolve and FillSearch methods together here. Both of them call a custom method I wrote called SearchClaims, which looks like this:

```csharp
private AzureClaimProvider.AzureClaims.UniqueClaimValueCollection SearchClaims(string claimType,
    string searchPattern, string siteUrl)
{
    AzureClaimProvider.AzureClaims.UniqueClaimValueCollection results =
        new AzureClaimProvider.AzureClaims.UniqueClaimValueCollection();

    try
    {
        //create an instance of the control
        AzureClaimProvider.DataSource cfgCtrl = new AzureClaimProvider.DataSource();

        //set the properties to retrieve data; must configure cache properties since we're using it programmatically
        //cache is not actually used in this case though
        cfgCtrl.WcfUrl = SVC_URI;
        cfgCtrl.OutputType = AzureConnect.WcfConfig.DataOutputType.None;
        cfgCtrl.MethodName = "SearchClaims";
        cfgCtrl.ServerCacheTime = 10;
        cfgCtrl.ServerCacheName = claimType + ";" + searchPattern;
        cfgCtrl.MethodParams = claimType + ";" + searchPattern + ";200";
        cfgCtrl.SharePointClaimsSiteUrl = siteUrl;

        //execute the method
        bool success = cfgCtrl.ExecuteRequest();

        if (success)
        {
            //if it worked, get the array of results
            results = (AzureClaimProvider.AzureClaims.UniqueClaimValueCollection)cfgCtrl.QueryResultsObject;
        }
    }
    catch (Exception ex)
    {
        Debug.WriteLine("Error searching claims: " + ex.Message);
    }

    return results;
}
```

In this method, as you’ve seen elsewhere in this post, I’m just creating an instance of my custom CASI Kit control. I’m calling the SearchClaims method on my WCF service and passing in the claim type I want to search in, the claim value I want to find in that claim type, and the maximum number of records to return. You may recall from Part 2 of this series that SearchClaims just does a BeginsWith on the search pattern that’s passed in, so with lots of users there could easily be more than 200 results. However, 200 is the maximum number of matches that the people picker will show, so that’s all I ask for. If you really think users are going to scroll through more than 200 results looking for a match, I’m here to tell you that ain’t likely.
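The BeginsWith search with a 200-result cap can be illustrated with a sorted list standing in for the data store. This Python sketch uses `bisect` to jump to the first candidate. It stops at the first non-matching value or at the cap, whichever comes first. It is not how the Part 2 WCF service queries Azure table storage, which isn't shown here. `search_claims` is a hypothetical name.

```python
import bisect
from typing import List

PICKER_MAX_RESULTS = 200  # the people picker limit mentioned in the post


def search_claims(sorted_values: List[str], prefix: str,
                  max_count: int = PICKER_MAX_RESULTS) -> List[str]:
    """Return up to max_count values that begin with prefix, in sorted order."""
    # Values sharing a prefix are contiguous in sorted order, starting here
    i = bisect.bisect_left(sorted_values, prefix)
    results: List[str] = []
    while (i < len(sorted_values) and len(results) < max_count
           and sorted_values[i].startswith(prefix)):
        results.append(sorted_values[i])
        i += 1
    return results
```

Because every match sits in one contiguous run, the loop never scans past the last match, no matter how many users are stored.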

So now that we have our collection of UniqueClaimValues back, let’s look at how we use it in our two override methods in the custom claims provider. First, here’s what the FillResolve method looks like:

```csharp
protected override void FillResolve(Uri context, string[] entityTypes,
    string resolveInput, List<PickerEntity> resolved)
{
    //this version of resolve is just like a search, so we'll treat it like that
    //make sure picker is asking for the type of entity we return; site collection admin won't for example
    if (!EntityTypesContain(entityTypes, SPClaimEntityTypes.User))
        return;

    try
    {
        //do the search for matches
        AzureClaimProvider.AzureClaims.UniqueClaimValueCollection results =
            SearchClaims(IDENTITY_CLAIM, resolveInput, context.AbsoluteUri);

        //go through each match and add a picker entity for it
        foreach (AzureClaimProvider.AzureClaims.UniqueClaimValue cv in results.UniqueClaimValues)
        {
            PickerEntity pe = GetPickerEntity(cv.ClaimValue, cv.ClaimType,
                SPClaimEntityTypes.User, cv.DisplayName);
            resolved.Add(pe);
        }
    }
    catch (Exception ex)
    {
        Debug.WriteLine(ex.Message);
    }
}
```

It just calls the SearchClaims method, and for each result it gets back (if any), it creates a new PickerEntity and adds it to the List passed into the override. All of them will then show up in the type-in control in SharePoint. The FillSearch method uses it like this:

```csharp
protected override void FillSearch(Uri context, string[] entityTypes,
    string searchPattern, string hierarchyNodeID, int maxCount,
    SPProviderHierarchyTree searchTree)
{
    //make sure picker is asking for the type of entity we return; site collection admin won't for example
    if (!EntityTypesContain(entityTypes, SPClaimEntityTypes.User))
        return;

    try
    {
        //do the search for matches
        AzureClaimProvider.AzureClaims.UniqueClaimValueCollection results =
            SearchClaims(IDENTITY_CLAIM, searchPattern, context.AbsoluteUri);

        //if there was more than zero results, add them to the picker
        if (results.UniqueClaimValues.Count() > 0)
        {
            foreach (AzureClaimProvider.AzureClaims.UniqueClaimValue cv in results.UniqueClaimValues)
            {
                //node where we'll stick our matches
                Microsoft.SharePoint.WebControls.SPProviderHierarchyNode matchNode = null;

                //get a picker entity to add to the dialog
                PickerEntity pe = GetPickerEntity(cv.ClaimValue, cv.ClaimType,
                    SPClaimEntityTypes.User, cv.DisplayName);

                //add the node where it should be displayed too
                if (!searchTree.HasChild(cv.ClaimType))
                {
                    //create the node so we can show our match in there too
                    matchNode = new SPProviderHierarchyNode(ProviderInternalName,
                        cv.DisplayName, cv.ClaimType, true);

                    //add it to the tree
                    searchTree.AddChild(matchNode);
                }
                else
                    //get the existing node for this claim type
                    matchNode = searchTree.Children.Where(theNode =>
                        theNode.HierarchyNodeID == cv.ClaimType).First();

                //add the match to our node
                matchNode.AddEntity(pe);
            }
        }
    }
    catch (Exception ex)
    {
        Debug.WriteLine(ex.Message);
    }
}
```

In FillSearch I’m calling my SearchClaims method again. For each UniqueClaimValue I get back (if any), I check whether I’ve already added a hierarchy node for its claim type to the results. Again, in this case I’ll only ever return one claim type (email), but I wrote this to be extensible so you could use more claim types later. So I add the hierarchy node if it doesn’t exist, or find it if it does. I take the PickerEntity that I created from the UniqueClaimValue and add it to that hierarchy node. And that’s pretty much all there is to it.
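The grouping logic in FillSearch reduces to "create the node for a claim type on first use, then reuse it". Here is a Python sketch of just that step. `ClaimValue` and `HierarchyNode` are hypothetical stand-ins for UniqueClaimValue and SPProviderHierarchyNode. A dictionary keyed by claim type replaces the `HasChild` check plus the LINQ `Where(...).First()` lookup, which makes each lookup a constant-time operation.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ClaimValue:
    claim_type: str
    claim_value: str
    display_name: str


@dataclass
class HierarchyNode:
    node_id: str                                    # keyed by claim type
    entities: List[str] = field(default_factory=list)


def build_search_tree(results: List[ClaimValue]) -> Dict[str, HierarchyNode]:
    """Group search matches under one node per claim type."""
    tree: Dict[str, HierarchyNode] = {}
    for cv in results:
        node = tree.get(cv.claim_type)
        if node is None:
            # First match for this claim type: create and register its node
            node = HierarchyNode(cv.claim_type)
            tree[cv.claim_type] = node
        # Add the match (here just the claim value) to its node
        node.entities.append(cv.claim_value)
    return tree
```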

I’m not going to cover the FillSchema method or any of the four Boolean property overrides that every custom claims provider must have, because there’s nothing special in them for this scenario and I’ve covered the basics in other posts on this blog. I’m also not going to cover the feature receiver that’s used to register this custom claims provider because – again – there’s nothing special for this project and I’ve covered it elsewhere. After you compile it, you just need to make sure that the assemblies for the custom claims provider and the custom CASI Kit component are registered in the Global Assembly Cache on each server in the farm. You also need to configure the SPTrustedIdentityTokenIssuer to use your custom claims provider as the default provider (also explained elsewhere in this blog).

That’s the basic scenario end to end. When you are in the SharePoint site and you try to add a new user (really, an email claim), the custom claims provider is invoked first to get a list of supported claim types. It is invoked again as you type a value into the type-in control or search for a value using the people picker. In each case the custom claims provider uses the custom CASI Kit control to make an authenticated call out to Windows Azure to talk to our WCF service, which uses our custom data classes to retrieve data from Azure table storage. It returns the results, and we unwrap them and present them to the user. With that, you have a complete turnkey SharePoint and Azure “extranet in a box” solution that you can use as is or modify to suit your purposes. The source code for the custom CASI Kit component, the web part that registers the user in an Azure queue, and the custom claims provider is all attached to this posting. I hope you enjoy it, find it useful, and can start to visualize how you can tie these separate services together to solve your own problems. Here are some screenshots of the final solution:

Root site as anonymous user:

Here’s what it looks like after you’ve authenticated; notice that the web part now displays the Request Membership button:

Here’s an example of the people picker in action, after searching for claim values that start with “sp”:

SharePoint.zip

Scott Densmore (@scottdensmore) recommended Your own lightweight & robust STS in Windows Azure in a 2/18/2012 post:

The guys at thinktecture have come up with a cool way for you to have your own STS in your application that is simple and lightweight. These guys reviewed our Claims Identity Guide, and I can say they know identity.

Go download yourself a copy and get your identity on!


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Steve Fox (@redmondhockey) promotes other blogs about SharePoint Online & CRM Online using BCS & Windows Azure in a 2/20/2012 post:

If you’ve been following my recent blog-posts, I’ve been talking more about how to integrate SharePoint Online and Windows Azure—as a key part of the customization story for SharePoint Online. It’s good to see some other folks beginning to post on this as well. For example, recently Nick Swan blogged on how to integrate SharePoint Online & CRM Online using Business Connectivity Services (BCS) and Windows Azure.

You can check it out here: http://www.lightningtools.com/blog/archive/2012/02/20/crm-2011-online-and-sharepoint-2010-integration-through-business-connectivity.aspx.

The model he uses is interesting; he is employing a similar pattern to this post where you use a WCF service that is deployed to Windows Azure to act as the intermediary. The service that Nick uses, though, is a little more beefy: he leverages the CRM SDK to have the service manage the authentication of the call into CRM Online. This is interesting in a couple of ways. The first is that the service does the ‘look-up.’ In fact, in his service you see he’s hard-coded the credentials but this could serve as a look-up point for CRM Online users. The second is that his post doesn’t use an Application ID; fewer moving parts to manage the connection to SharePoint Online.

Anyway, thought I would post because it’s interesting to see more patterns emerging for what is definitely a scenario we’re seeing a lot of interest in; that is, the connection of CRM Online with SharePoint Online to build custom reporting mechanisms in SharePoint Online.

Bruce Kyle posted ISV Case Study: Smartphone Users Share Privately With Groups on Glassboard over Windows Azure to the US ISV Evangelism blog on 2/20/2012:

Glassboard is a mobile service for sharing privately with groups. With Glassboard, you create 'boards' which are groups of people around a common interest where you can share messages, comments, photos, videos, and even your location (when appropriate).

When the Glassboard team was developing Glassboard, they looked for a platform that offered scale, worry-free operation, replicated data, and privacy for their apps on Windows Phone, iPhone, Android, and Office 365. The Glassboard team chose the Windows Azure platform for these capabilities.

This article provides an overview of the architecture of the Glassboard backend in Azure.

Architecture Overview

The architecture of Glassboard includes native smartphone and desktop applications, a Web Service to handle requests, a data store to hold the messages and videos, and a notification service to alert users of incoming messages.

Glassboard Architecture

Client Architecture

Each mobile platform has a native application. The Windows Phone 7 app is in the Windows Phone Marketplace, the Android & iPhone apps are available in their respective stores, and the Silverlight desktop client is available through the Office 365 Marketplace. Each of these clients makes calls directly to the custom Glassboard backend built in Azure.

Services Architecture

Each of the phone applications talks to Web Services hosted in Windows Azure. The connections are all made over SSL, and they use digest authentication. Authorization is provided by a custom-built service of user names and passwords. Once the user is authenticated through the Web Service, a token is returned to the device, and the device attaches it to subsequent service requests.
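The case study doesn't describe Glassboard's token format, so the following is only a sketch built on assumptions. After the user name and password check, the service issues an HMAC-signed token that holds the user name and an expiry time. The service then verifies that token on each later request. `SECRET`, `issue_token` and `verify_token` are hypothetical names. A production service would load the key from secure configuration rather than hard-code it.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"replace-with-a-server-side-key"   # assumption: known only to the service


def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(user: str, ttl_seconds: int = 3600, now: Optional[float] = None) -> str:
    """Create a token after the user name/password check succeeds."""
    now = time.time() if now is None else now
    payload = f"{user}|{int(now) + ttl_seconds}"
    return base64.urlsafe_b64encode(f"{payload}|{_sign(payload)}".encode()).decode()


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the user name for a genuine, unexpired token, otherwise None."""
    now = time.time() if now is None else now
    try:
        decoded = base64.urlsafe_b64decode(token.encode()).decode()
        user, expires_text, sig = decoded.rsplit("|", 2)
        expires = int(expires_text)
    except ValueError:   # bad base64, bad UTF-8, wrong shape or bad number
        return None
    expected = _sign(f"{user}|{expires_text}")
    if not hmac.compare_digest(sig.encode(), expected.encode()):
        return None      # tampered with, or signed with a different key
    if expires < now:
        return None      # expired: the device must log in again
    return user
```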

WCF Services Web Role

The Web Service is written using REST on Windows Communication Foundation (WCF), which is part of .NET 4. The WCF services receive and validate the user login, and they receive the messages, pictures, and videos. The Web Service is hosted in a Windows Azure Web Role. You can think of a Web Role as a Windows Server virtual machine that includes Internet Information Services (IIS).

Data Store

Incoming messages from the apps are stored in Windows Azure Table Storage as entities.

The message is encrypted before the data is written to the table store. The Glassboard team used Azure Table Encryption by Attribute, which is available on CodePlex. A single attribute transparently encrypts and decrypts data when saving it to or reading it from Azure Table storage. The technique ensures that "data at rest" is encrypted. This means no external party can read your content: neither the authorized infrastructure personnel at your company, nor the Glassboard team, nor anyone at Microsoft will be able to decrypt this data. Incoming pictures and videos are stored in Azure Blob Storage in the same way.

Encoding Videos Using Queues and Worker Role

Videos need to be encoded so they can be seen on each of the other devices. The Web Role receives the incoming media file and stores it in Windows Azure Blob Storage. Then it places an item in Windows Azure Queue Storage to alert the Worker Role that encoding is needed. The Worker Role queries the queue, which acts as a "to do" list of tasks that can take several minutes.

Think of a Worker Role as a service that runs in a never-ending loop. When the Worker Role cycles, it checks for an item in the queue. The item in the queue points to the location in blob storage that needs to be encoded. The Worker Role picks up the item, marks the item in the queue as being in progress, and begins its work. If for some reason the encoding fails, the item in the queue is set back to restart the encoding. Or when the encoding is completed, the Worker Role removes the item from the queue.

As the Worker Role completes encoding each video, it places the media into Windows Azure Blob Storage that can then be sent in chunks to the users.
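The dequeue, work, delete cycle described above can be sketched with an in-memory queue. In Windows Azure Queue storage, "marking the item as in progress" is handled by the visibility timeout. A dequeued message is hidden for a set period. If the worker never deletes it (for example, because encoding failed), it becomes visible again and is retried. The Python classes below are hypothetical and only model that behavior. They are not the Azure storage client API or Glassboard's code.

```python
import itertools
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class QueueMessage:
    msg_id: int
    blob_path: str            # location of the video blob that needs encoding
    visible_at: float = 0.0   # hidden from readers until this time


class InMemoryQueue:
    def __init__(self) -> None:
        self._messages: List[QueueMessage] = []
        self._ids = itertools.count()

    def put(self, blob_path: str) -> None:
        self._messages.append(QueueMessage(next(self._ids), blob_path))

    def get(self, now: float, visibility_timeout: float) -> Optional[QueueMessage]:
        """Return the first visible message and hide it for visibility_timeout."""
        for msg in self._messages:
            if msg.visible_at <= now:
                msg.visible_at = now + visibility_timeout   # "in progress"
                return msg
        return None

    def delete(self, msg: QueueMessage) -> None:
        self._messages.remove(msg)

    def __len__(self) -> int:
        return len(self._messages)


def process_once(queue: InMemoryQueue, encode: Callable[[str], None],
                 now: float, visibility_timeout: float = 300.0) -> bool:
    """One cycle of the worker loop; returns True if a message was picked up."""
    msg = queue.get(now, visibility_timeout)
    if msg is None:
        return False
    try:
        encode(msg.blob_path)
    except Exception:
        # Don't delete: the message reappears after the timeout and is retried
        return True
    queue.delete(msg)   # encoding finished, so remove the work item
    return True
```

A Worker Role would call something like `process_once` inside its never-ending loop and sleep briefly whenever it returns `False`. The 300-second default is an assumption. It should exceed the longest expected encoding time. Otherwise, a slow but healthy job will be picked up a second time.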

Notifications

Notifications are also handled by the Worker Role. When the Worker Role detects that a new notification needs to be sent, it sends the notification through the smartphone provider's notification service. On Windows Phone, the notification is sent using the Push Notification Services.

For Windows Phone devices, the Glassboard app running on the phone initially requests a push notification URI from the Push client service. Through a negotiation with the Push service, it receives a URI that identifies the device. The client software sends that URI to the Glassboard Web Service.

Glassboard maintains a list of these URIs in Windows Azure Table Storage. When it comes time to notify each device, the service walks through the user network, gets the URI for each member's device, and sends a push notification to the Microsoft Push Notification Service, which in turn routes the push notification to the application running on a Windows Phone device.

For Windows Phone, the notification is either delivered as raw data to the phone application, used to visually update the application's Tile, or displayed as a toast notification. After a push notification is sent, the Microsoft Push Notification Service sends a response code to your web service. The code indicates that the notification has been received and will be delivered to the device at the next possible opportunity. However, the Microsoft Push Notification Service does not provide end-to-end confirmation that your push notification was delivered from your web service to the device.

Other devices have a similar service.
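The fan-out step can be sketched as follows: look up each member's stored URI, then post one notification per device. In this sketch, the dict stands in for the Windows Azure table of URIs, and `send` stands in for the HTTP call to the push service. Storing one URI per user and skipping the message's author are simplifying assumptions, not details from the case study. All names are hypothetical.

```python
from typing import Callable, Dict, Iterable


def register_device(uri_table: Dict[str, str], user_id: str, push_uri: str) -> None:
    """Store (or replace) the push URI a user's phone reported to the Web Service."""
    uri_table[user_id] = push_uri


def notify_board(uri_table: Dict[str, str], members: Iterable[str], sender: str,
                 payload: str, send: Callable[[str, str], int]) -> Dict[str, int]:
    """Send payload to each board member's device; return the status code per member."""
    statuses: Dict[str, int] = {}
    for member in members:
        # Skip the author (assumption) and members with no registered device
        if member == sender or member not in uri_table:
            continue
        # The status only means the push service accepted the notification;
        # it is not an end-to-end delivery confirmation.
        statuses[member] = send(uri_table[member], payload)
    return statuses
```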

Additional Clients

Because the primary interface into the Glassboard service is a Web Service, Glassboard messages can be integrated into other applications. For example, you can access Glassboard messages from within NewsGator Social Sites. NewsGator integrates Glassboard into Social Sites so users can see micro-blogging messages and links from within an enterprise.

Windows Azure Advantages

With this architecture, Windows Azure provides the advantages of storage replication, scale, and service guarantees.

Storage

Windows Azure Blobs, Tables and Queues stored on Windows Azure are replicated three times in the same data center for resiliency against hardware failure. No matter which storage service you use, your data will be replicated across different fault domains to increase availability and be fault-tolerant.

Windows Azure Blobs and Tables are also geo-replicated between two data centers hundreds of miles apart from each other on the same continent, to provide additional data durability in the case of a major disaster, at no additional cost.

Scale

The loosely coupled architecture provides the ability to scale. When many users connect, Glassboard can increase the number of Web Roles to process the incoming messages. When the number of videos and notifications outpaces the Worker Role's ability to keep up, additional Worker Roles can be enabled.

Because the architecture is loosely coupled, compute cycles can be added as needed.

Conversely, during late evenings when messages and videos are fewer, the system can scale back to a small number of compute instances ready to receive the next message.

Using Windows Azure Queue allows decoupling of different parts of a cloud application, enabling cloud applications to be easily built with different technologies and easily scale with traffic needs.
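The case study doesn't say how Glassboard decides when to add or remove instances. One common approach is to size the Worker Role pool from the queue backlog. The Python sketch below shows such a rule. The 50-items-per-worker ratio and the 2 to 20 instance range are illustrative assumptions, and `target_worker_count` is a hypothetical name.

```python
import math


def target_worker_count(queue_length: int, items_per_worker: int = 50,
                        min_workers: int = 2, max_workers: int = 20) -> int:
    """Number of Worker Role instances suggested by the current queue backlog."""
    if items_per_worker <= 0:
        raise ValueError("items_per_worker must be positive")
    wanted = math.ceil(queue_length / items_per_worker)
    # Never drop below the floor (quiet overnight) or exceed the cost ceiling
    return max(min_workers, min(max_workers, wanted))
```

For example, a backlog of 120 videos yields 3 workers, while an empty overnight queue falls back to the floor of 2.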

Privacy, Security

Security on Windows Azure is a shared responsibility. Glassboard provides a high degree of security for individual messages because it follows application security best practices. Traffic between phones and the Web Service is encrypted using HTTPS, and confidential data is encrypted before it is stored.

Glassboard users can provide location information and photos without sharing those details with others; even the Glassboard staff has no access.

Windows Azure operates in the Microsoft Global Foundation Services (GFS) infrastructure, which is ISO 27001-certified. ISO 27001 is recognized worldwide as one of the premier international information security management standards. Windows Azure is in the process of evaluating further industry certifications. In addition to the internationally recognized ISO 27001 standard, Microsoft Corporation is a signatory to Safe Harbor and is committed to fulfilling all of its obligations under the Safe Harbor Framework.

Get Glassboard

For iPhone, Android, and Windows Phone 7.

Additional Resources

- Glassboard

- ISV Video: Social Media Goes Mobile with Glassboard on Azure

- Azure Table Encryption via Attribute

- REST on WCF

- Windows Azure Toolkit for Windows Phone

- Developing and Deploying Windows Azure Apps in Visual Studio 2010

- Video Series: Everything You Need to Know About Azure as a Developer

- About Web Roles and Worker Roles

- About Security on Azure

Himanshu Singh posted Windows Azure Community News Roundup (Edition #7) on 2/20/2012:

Welcome to the latest edition of our weekly roundup of the latest community-driven news, content and conversations about cloud computing and Windows Azure. Here are the highlights from last week.

- Developing a Hello World Java Application and Deploying it in Windows Azure – Part 1 by JananiK, DotNetSlackers.com (posted Feb. 17.)

- Tutorial – Going Live with Your First Application on Windows Azure – Part 1, Cloud.Story.in (posted Feb. 17.)

- Combining Multiple Windows Azure Worker Roles into a Windows Azure Web Role blog post by Dina Berry (posted Feb. 16.)

- New Course: Building Windows Phone Applications with Windows Azure by Jason Salmond, the pluralsight blog (posted Feb. 15.)

- Think Big and Microsoft BizSpark Offer Startups $60,000 in Cloud Services on Windows Azure by Allison Way, Think Big blog (posted Feb. 15.)

- Deploying a Windows Azure & SQL Azure Solution with Visual Studio Team Build blog post by Michael Collier (posted Feb. 14.)

- (Windows) Azure Revolution blog post by Juha Harkonen (posted Feb. 14.)

Upcoming Events, User Group Meetings

[See the Cloud Computing Events section below]

Recent Windows Azure Forums Discussion Threads

- Creating a secure session key – 243 views, 6 replies

- Reg Data-tier Applications Node in SQL Management Studio R2 – 205 views, 8 replies

- Blob URL address case sensitive – 332 views, 4 replies

- Store an array as an element in (Windows) Azure Table – 539 views, 5 replies

- Websockets vis Node.js/socket.io – 701 views, 7 replies

Please feel free to send us any articles you come across that you think we should highlight, or content of your own that you’d like to share. And let us know about any local events, groups or activities that you think we should tell the rest of the Windows Azure community about. You can use the comments section below, or talk to us on Twitter @WindowsAzure.

Ryan Dunn (@dunnry) described Choosing What To Monitor In Windows Azure in a 2/19/2012 post:

One of the first questions I often get when onboarding a customer is "What should I be monitoring?". There is no definitive list, but there are certainly some things that tend to be more useful. I recommend the following Performance Counters in Windows Azure for all role types at bare minimum:

- \Processor(_Total)\% Processor Time

- \Memory\Available Bytes

- \Memory\Committed Bytes

- \.NET CLR Memory(_Global_)\% Time in GC

You would be surprised how much these 4 counters tell someone without any other input. When you monitor them over weeks, trends show up very clearly and tell you what 'normal' range your application should be in. If you start to see any of these counters spike (or drop, in the case of Available Bytes), that should indicate to you that something is going on that you should care about.
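Once you have a few weeks of samples, a 'normal' range can be expressed numerically. This Python sketch flags a sample that lies more than k standard deviations from the mean of its history, in either direction. That way it catches a drop in Available Bytes as well as a CPU spike. The 3-sigma default is an assumption, and `is_anomalous` is a hypothetical helper, not an AzureOps feature.

```python
import statistics
from typing import Sequence


def is_anomalous(history: Sequence[float], sample: float, k: float = 3.0) -> bool:
    """True if sample is more than k standard deviations from the history mean."""
    if len(history) < 2:
        return False                  # not enough data for a baseline yet
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return sample != mean         # flat history: any change stands out
    return abs(sample - mean) > k * stdev
```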

Web Roles

For ASP.NET applications, there are some additional counters that tend to be pretty useful:

- \ASP.NET Applications(__Total__)\Requests Total

- \ASP.NET Applications(__Total__)\Requests/Sec

- \ASP.NET Applications(__Total__)\Requests Not Authorized

- \ASP.NET Applications(__Total__)\Requests Timed Out

- \ASP.NET Applications(__Total__)\Requests Not Found

- \ASP.NET Applications(__Total__)\Request Error Events Raised

- \Network Interface(*)\Bytes Sent/sec

If you are using something other than the latest version of .NET, you might need to choose the version specific instances of these counters. By default, these are going to only work for .NET 4 ASP.NET apps. If you are using .NET 2 CLR apps (including .NET 3.5), you will want to choose the version specific counters.

The last counter in this list is somewhat special, as it includes a wildcard instance (*). This is important to choose in Windows Azure because the name of the actual network adapter instance can (and tends to) change over time and across deployments. Sometimes it is "Local Area Connection* 12", sometimes it is "Microsoft Virtual Machine Bus Network Adapter". The latter tends to be the one you see with data most often, but just to be sure, I would include them all. Note that this is not an exhaustive list: if you have custom counters or additional system counters that are meaningful, by all means include them. In AzureOps, we can set these remotely on your instances using the property page for your deployment.
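To see why the wildcard matters, the sketch below matches a wildcard counter path against the two adapter instance names mentioned above. It uses Python's `fnmatch.fnmatchcase` only as a rough stand-in for wildcard expansion. The actual Windows Azure Diagnostics matching rules may differ. The list of available paths and `expand_counter` are made up for illustration.

```python
from fnmatch import fnmatchcase
from typing import List

COUNTER = r"\Network Interface(*)\Bytes Sent/sec"


def expand_counter(pattern: str, available: List[str]) -> List[str]:
    """Return the concrete counter paths that a (possibly wildcard) path covers."""
    return [path for path in available if fnmatchcase(path, pattern)]


available_paths = [
    r"\Network Interface(Local Area Connection* 12)\Bytes Sent/sec",
    r"\Network Interface(Microsoft Virtual Machine Bus Network Adapter)\Bytes Sent/sec",
    r"\Memory\Available Bytes",
]
```

The wildcard path picks up both adapter names. A path hard-coded to one adapter name silently collects nothing after a redeployment renames the adapter.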

Choosing a Sample Rate

You should not need to sample any counter more often than every 30 seconds. Period. In fact, in 99% of all cases, I would actually recommend 120 seconds (that is the default we recommend in AzureOps). This might seem like you are losing too much data or that you are going to miss something. However, experience has shown that this sample rate is more than sufficient to monitor the system over days, weeks, and months with enough resolution to know what is happening in your application. Sampling every 30 seconds instead of every 120 seconds produces 4 times as much data. At 1- and 5-second sample rates, you are talking about 120x and 24x the amount of data. That is per instance, by the way. If you have more than 1 instance, multiply that by the number of instances. It quickly approaches absurd quantities of data. That data costs you money in storage and transactions, adds no value when you parse it, and is a lot more painful to keep. Resist the urge to use 1, 5, or even 10 seconds: try 120 seconds to start and tune down if you really need to.
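The arithmetic above is easy to check. These Python helpers compute the data multiplier relative to a 120-second baseline and the number of samples collected per day. The function names are hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def samples_per_day(interval_seconds: int, counters: int = 1, instances: int = 1) -> int:
    """Number of counter samples collected per day across all instances."""
    return (SECONDS_PER_DAY // interval_seconds) * counters * instances


def data_multiplier(interval_seconds: int, baseline_seconds: int = 120) -> float:
    """How many times more data an interval produces than the baseline interval."""
    return baseline_seconds / interval_seconds
```

With the four baseline counters on two instances, a 120-second interval produces 5,760 samples per day, while a 1-second interval produces 691,200.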

Tracing

The other thing I recommend to our customers is to use tracing in their applications. Even if you only use the built-in Trace.TraceInformation (and similar methods), you are ahead of the game. There is an excellent MSDN article on how to set up more advanced tracing with TraceSources that I recommend as well.

I recommend tracing for several reasons. First, it will definitely help you when your app is running in the cloud and you want insight into the issues you see. If you have logged exceptions or critical code paths to Trace, you now have potential insight into your system. Additionally, you can use tracing as a kind of metric to be mined later. For instance, you can log how long a particular request or operation takes. Later, you can pull those logs and analyze where the bottleneck was in your running application. Within AzureOps, we can parse trace messages in a variety of ways (including semantically). We use this functionality to alert ourselves when something strange is happening (more on this in a later post).
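As a rough illustration of using traces as a metric, the following Python sketch uses the standard logging module as a stand-in for .NET's Trace class (it is not the System.Diagnostics API). It writes each operation's duration in a simple key=value format, an assumed convention rather than anything AzureOps requires, and then averages the durations per operation from collected log lines to surface the slowest one.

```python
import logging
import re
import time
from collections import defaultdict
from contextlib import contextmanager

log = logging.getLogger("app")


@contextmanager
def timed(operation):
    """Log how long the wrapped block took, in a parseable key=value form."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        log.info("op=%s duration_ms=%.1f", operation, elapsed_ms)


LINE = re.compile(r"op=(?P<op>\S+) duration_ms=(?P<ms>[0-9.]+)")


def slowest(lines):
    """Average duration per operation from collected log lines, slowest first.
    Lines that do not match the key=value format are ignored."""
    totals = defaultdict(lambda: [0.0, 0])  # op -> [sum_ms, count]
    for line in lines:
        m = LINE.search(line)
        if m:
            entry = totals[m.group("op")]
            entry[0] += float(m.group("ms"))
            entry[1] += 1
    averages = [(op, total / count) for op, (total, count) in totals.items()]
    return sorted(averages, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    with timed("render_home"):  # hypothetical operation name
        time.sleep(0.05)
    sample = ["op=load duration_ms=10.0", "op=save duration_ms=30.0",
              "op=load duration_ms=20.0", "unrelated trace message"]
    print(slowest(sample))  # [('save', 30.0), ('load', 15.0)]
```

Keeping the duration in a fixed, machine-readable shape is the design choice that matters here: it lets you mine the same trace stream later without having to guess at free-form message text.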

The biggest obstacle I see with new customers is remembering to turn on transfer for their trace messages. Luckily, within AzureOps this is again easy to do: simply set a Filter Level and a Transfer Interval (I recommend 5 minutes).

The right Filter Level depends on how you use filtering in your own traces. I have seen folks who trace rarely, so a lower (more verbose) filter level is fine for them. However, I have also seen customers trace upwards of 500 traces/sec. As a point of reference, at that level of tracing you are talking about 2GB of data on the wire each minute if you transfer at that verbosity. Heavy tracers, beware! I usually recommend Verbose for light tracers and Warning for heavy tracers, such as those that instrument every method. You can always change this setting later, so don't worry too much about it right now.
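To get a feel for how much the Filter Level matters, here is a back-of-the-envelope Python sketch. The per-level mix of traces and the average trace size are hypothetical inputs you would replace with your own numbers, and nothing here calls the Windows Azure diagnostics API; the point is that the volume shipped per Transfer Interval is simply the traces per second at or above the filter, times the interval, times the average message size.

```python
# Severity order from least to most severe, mirroring the Verbose ... Critical
# levels used by .NET tracing. Treated here as an assumption for illustration.
SEVERITY = ["Verbose", "Information", "Warning", "Error", "Critical"]


def traces_per_transfer(rates_by_level, filter_level, interval_minutes=5):
    """Traces shipped per transfer when only messages at or above
    `filter_level` are transferred. `rates_by_level` maps level -> traces/sec."""
    threshold = SEVERITY.index(filter_level)
    per_second = sum(
        rate for level, rate in rates_by_level.items()
        if SEVERITY.index(level) >= threshold
    )
    return per_second * interval_minutes * 60


def bytes_per_transfer(rates_by_level, filter_level, avg_trace_bytes, interval_minutes=5):
    """Approximate payload per transfer, given an assumed average trace size."""
    return traces_per_transfer(rates_by_level, filter_level, interval_minutes) * avg_trace_bytes


# Hypothetical heavy tracer: 500 traces/sec, almost all of them Verbose.
heavy = {"Verbose": 480, "Information": 15, "Warning": 4, "Error": 1}

print(traces_per_transfer(heavy, "Verbose"))  # 150000
print(traces_per_transfer(heavy, "Warning"))  # 1500
```

With this made-up mix, moving the filter from Verbose to Warning cuts each 5-minute transfer by a factor of 100, which is why heavy tracers benefit most from a stricter level.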

Coming Up

In the next post, I will walk you through how to set up your diagnostics in Windows Azure and point out some common pitfalls that I see.

AzureOps is Opstera’s deployment monitoring, application log reading and alert/notification setup program for Windows Azure (see post below). Opstera is the new organization to which Cumulux transferred its ManageAxis project.

Ryan Dunn (@dunnry) posted Monitoring in Windows Azure on 2/19/2012, after several months of blog silence:

For the last year, since leaving Microsoft, I have been deeply involved in building a world-class SaaS monitoring service called AzureOps. During this time, it was inevitable that I would learn not only how best to monitor services running in Windows Azure, but also the common pitfalls among our beta users. It is one thing to be a Technical Evangelist, as I was, and occasionally use a service for a demo or two, and quite another to attempt to build a business on it.

Monitoring a running service in Windows Azure can actually be daunting if you have not worked with it before. In this coming series, I will attempt to share the knowledge we have gained building AzureOps and from our customers. The series will be grounded in these 5 areas:

- Choosing what to monitor in Windows Azure

- Setting up the Diagnostics Monitor in Windows Azure

- Getting the diagnostics data from Windows Azure

- Interpreting diagnostics data and making adjustments

- Maintaining your service in Windows Azure

Each one of these areas will be a post in the series and I will update this post to keep a link to the latest. I will use AzureOps as an example in some cases to highlight both what we learned as well as the approach we take now due to this experience.

If you are interested in monitoring your own services in Windows Azure, grab an invite and get started today!

Steve Plank (@plankytronixx) asserted Phone developers should use the cloud in a 2/17/2012 post:

When you think about the monetization (awful word!) of apps for the phone, you can narrow it down to one of three models:

- The app is free – it was fun building it, I don’t want to be rich.

- The app is paid for and entirely standalone. It doesn’t rely on any service that is firmly rooted in terra firma.

- The app is free, but you pay for the service that is delivered through the app.

There are a few others, which are basically combinations of the above. It’s the last one, and its variants, that probably scares a lot of phone developers off, because it has more to do with running a service, and the phone app is probably the smallest part of the whole thing. Running a service means service management: making sure it’s still running, capacity planning, scaling, and obvious bread-and-butter jobs like patching, service packs, security fixes and so on.

Although the responsibility of continuing to provide a service to customers of your app still rests with you in these scenarios, PaaS cloud operators truly take away almost all of the time-consuming, headache-inducing part of running a reliable, scalable, available service.

I’ve made a video to describe the benefits and the approach to how a phone developer can deliver services which run in massively scalable, multi-million-dollar cloud-computing-datacentres and test out their ideas for just a few pence, or in many cases, using free cloud service trial subscriptions – meaning the only risk they endure is the time they spend developing the service.

From the video description:

If your phone app needs a resilient, scalable, highly available back-end service, a PaaS cloud service is the logical platform. You don't need to worry about service management, operating system updates, service packs, security patches, server hardening and just simply keeping a service running. This video explains how and why the top-end IT landscape is leveled, meaning the smallest companies can now compete with the world's largest. It's now about who has the best business idea, not who has several $million to spend on a huge infrastructure.

Had to search YouTube for Plankytronixx to insert a link to the video, which didn’t play on 2/20/2012 at 11:00 AM PST.

Janakiram MSV continued his series with Going Live with Your First Application on Windows Azure – Part 2 with Visual Web Developer Express 2010 on 2/16/2012:

In part 1 of this series, we went through the process of signing up for Windows Azure. In this part, we will set up the development environment required to get started with your first Cloud application.

Firstly, you need to download and install Microsoft Visual Studio. For this, visit microsoft.com/visualstudio and click on Visual Web Developer Express from the Product link.

Click Install to download the dependencies and then complete the installation of Visual Web Developer 2010 Express.

Just follow the steps to complete the installation. After a while, Visual Web Developer will be available in the Start menu.

The next step is to download the Windows Azure SDK for .NET. Visit https://www.windowsazure.com/en-us/develop/net/ to download the SDK.

This will launch the Microsoft Web Platform Installer, and after a few clicks you should have the Windows Azure SDK installed and integrated with Visual Web Developer Express.

Launch Visual Web Developer from the Start menu.

Click File->New Project to open the New Project dialogue. From this dialogue, select Cloud and give the project a name.

The next dialogue shows various options. Choose ASP.NET Web Role and add it to the Solution.

Before we launch the application, we need to restart Visual Web Developer Express in elevated mode. To do this, right-click Microsoft Visual Web Developer 2010 Express and open its properties. Click the Compatibility tab and select the check box to run the program as an administrator.

Restart Visual Web Developer 2010 Express and open the same Cloud project that you saved last time. We don't need to make any changes to the default project; we can launch it as is. As you can see in Solution Explorer, the project contains a complete web application that can be used as a starting point.

Let’s launch this by selecting Debug -> Start debugging.

This will launch the Windows Azure Emulator, which includes both the Storage and the Compute emulators for testing applications locally.

After the first time initialization is done, you can see the Windows Azure Emulator in the system tray.

After a few seconds, you will see Internet Explorer come up showing the ASP.NET page that is part of our first Cloud application.

Congratulations! That was your first step towards the Cloud. In the next part, we will customize this application and deploy it on Windows Azure. Stay tuned!


I thought this was the second step towards the cloud.

Janakiram MSV started a series with Tutorial: Going Live with Your First Application on Windows Azure – Part I on 2/16/2012:

Windows Azure is the Platform as a Service offering from Microsoft. Though it started as the preferred Cloud platform for .NET developers, it has come a long way to become a true polyglot PaaS. Windows Azure supports running .NET, Java, PHP, Node.js and even Perl, Ruby, Python and other applications. Its tight integration with Visual Studio and Eclipse makes developers productive as they design, develop, test and deploy applications on Windows Azure.

In this series, we will see how to develop and deploy your first application on Windows Azure. Since the objective of this tutorial is to familiarize you with the life cycle of Cloud application development, the application itself is kept simple. We will focus on the key concepts and steps involved in going live with your first application.

For the big picture of Windows Azure, take a look at the detailed analysis covered on CloudStory.in.

The very first step is to visit WindowsAzure.com and complete the sign-up process. Windows Azure has a 90-day free trial that gives you enough resources to get started. If you exceed the limits set for the trial period, Microsoft will disable your account for that month, but it is reset at the beginning of the next month.

Step 4 – Enter your mobile number and click Send text message. Once you receive the verification code, enter it, click Verify Code and then click Next.

Step 5 – In the next step, enter your credit card details and billing address and click Next.

Step 6 – Finally, click Account Center to see your account details. This should tell you that you have 89 more days to evaluate Windows Azure.

Clicking on the manage link will take you to the Windows Azure Management Portal.

The Management Portal is the interface for managing your deployments, storage, network and databases.

Congratulations! You now have a valid Windows Azure account!

In the next part of this tutorial, we will set up the development environment to create the first Cloud application.


Where are steps 1, 2 and 3?


Visual Studio LightSwitch and Entity Framework 4.1+

Michael Washington (@ADefWebserver) described Integrating LightSwitch Into An ASPNET Application To Provide Single Sign On in a 2/20/2012 post to his Visual Studio LightSwitch Help Website:

The LightSwitchHelpWebsite.com is an ASP.NET Web Application that currently has four LightSwitch applications integrated into it (you can access them from the DEMOS tab). The integration means that a user has Single Sign-On between the ASP.NET Website and the LightSwitch applications. This integration has been covered in the article: Easy DotNetNuke LightSwitch Deployment.

However, it may be helpful to cover the steps for integration using a simple ASP.NET Web Application rather than a large complex application such as the DotNetNuke application that the LightSwitchHelpWebsite.com uses.

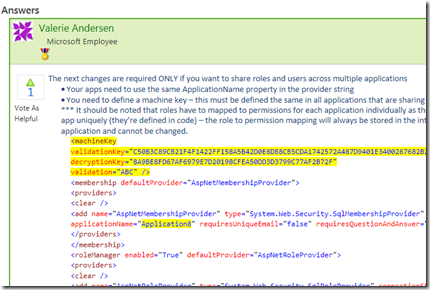

Everything covered here is from the directions in this post by LightSwitch team member Valerie Andersen:

Essentially we need to:

- In the ASP.NET Website Application:

- Set it to use the ASP.NET Membership Provider

- Create the membership provider tables and point the provider to them in the Web.config

- Set the application name in the Web.config

- Create a Machine Key and put it in the Web.config

- Set the Forms Name in the Web.config

- Set an additional Profile property in the Web.config

- In the LightSwitch application:

- Set our LightSwitch application to be a web application and to use Forms Authentication

- Create a second connection string pointing to the ASP.NET Website Application's membership database, and point the ASP.NET Membership Provider settings to it

- Set the application name in the Web.config

- Copy the Machine Key from the ASP.NET Website Application to the LightSwitch application’s Web.config