Windows Azure and Cloud Computing Posts for 12/30/2011+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

• Updated 12/31/2011 at 3:50 PM PST with new articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure Access Control, Service Bus, and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

• Avkash Chauhan (@avkashchauhan) continued his Hadoop on Azure series on New Year's Eve with Apache Hadoop on Windows Azure Part 7 – Writing your very own WordCount Hadoop Job in Java and deploying to Windows Azure Cluster:

In this article, I will show you how to write your own WordCount Hadoop job and then deploy it to a Windows Azure cluster for processing.

Let's create a Java source file named “AvkashWordCount.java”:

package org.myorg;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class AvkashWordCount {

    // Mapper: emits (word, 1) for every whitespace-separated token in a line.
    public static class Map extends Mapper<LongWritable, Text, Text, IntWritable> {
        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            StringTokenizer tokenizer = new StringTokenizer(value.toString());
            while (tokenizer.hasMoreTokens()) {
                word.set(tokenizer.nextToken());
                context.write(word, one);
            }
        }
    }

    // Reducer (also used as the combiner): sums the counts for each word.
    // The values parameter must be Iterable, not Iterator; otherwise this method
    // does not override Reducer.reduce() and the default pass-through reduce runs.
    public static class Reduce extends Reducer<Text, IntWritable, Text, IntWritable> {
        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable value : values) {
                sum += value.get();
            }
            context.write(key, new IntWritable(sum));
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        Job job = new Job(conf);
        job.setJarByClass(AvkashWordCount.class);
        job.setJobName("avkashwordcountjob");
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        job.setMapperClass(AvkashWordCount.Map.class);
        job.setCombinerClass(AvkashWordCount.Reduce.class);
        job.setReducerClass(AvkashWordCount.Reduce.class);
        // args[0] = input folder, args[1] = output folder (must not already exist)
        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
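To see what the job computes without a cluster, here is a hypothetical plain-Java simulation (the WordCountSimulation class is invented for illustration and does not use Hadoop at all). It tokenizes each line the same way the Map class does, groups the (word, 1) pairs by key the way the shuffle would, and sums each group the way Reduce does.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical, cluster-free simulation of the AvkashWordCount logic.
final class WordCountSimulation {

    /** Map phase: emit (word, 1) for each whitespace-separated token. */
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        StringTokenizer tokenizer = new StringTokenizer(line);
        while (tokenizer.hasMoreTokens()) {
            out.add(Map.entry(tokenizer.nextToken(), 1));
        }
        return out;
    }

    /** Shuffle (group by key, sorted as Hadoop sorts keys) followed by reduce (sum). */
    static Map<String, Integer> countWords(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (Map.Entry<String, Integer> pair : map(line)) {
                grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
            }
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : grouped.entrySet()) {
            int sum = 0;
            for (int v : group.getValue()) { // same loop as Reduce.reduce()
                sum += v;
            }
            result.put(group.getKey(), sum);
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(countWords(List.of("hello azure", "hello hadoop")));
    }
}
```

Running main prints {azure=1, hadoop=1, hello=2}: one output entry per distinct word, which is what a correctly overriding reducer produces.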

First, compile the Java code. You must have Hadoop 0.20 or later installed on your machine to compile this code:

C:\Azure\Java>C:\Apps\java\openjdk7\bin\javac -classpath c:\Apps\dist\hadoop-core-0.20.203.1-SNAPSHOT.jar -d . AvkashWordCount.java

Now let's create the JAR file:

C:\Azure\Java>C:\Apps\java\openjdk7\bin\jar -cvf AvkashWordCount.jar org

added manifest

adding: org/(in = 0) (out= 0)(stored 0%)

adding: org/myorg/(in = 0) (out= 0)(stored 0%)

adding: org/myorg/AvkashWordCount$Map.class(in = 1893) (out= 792)(deflated 58%)

adding: org/myorg/AvkashWordCount$Reduce.class(in = 1378) (out= 596)(deflated 56%)

adding: org/myorg/AvkashWordCount.class(in = 1399) (out= 754)(deflated 46%)

Once the JAR is created, deploy it to your Windows Azure Hadoop cluster.

On the page below, follow these steps:

- Step 1: Click Browse to select your AvkashWordCount.jar file.

- Step 2: Enter the job name as defined in the source code (avkashwordcountjob).

- Step 3: Add the first parameter, the main class name org.myorg.AvkashWordCount.

- Step 4: Add the name of the input folder from which the files to be counted will be read.

- Step 5: Add the name of the output folder where the results will be stored.

- Step 6: Start the job.

Note: Be sure to have some data in your input folder. (I am using /user/avkash/inputfolder, which contains a text file with lots of words to use as the word count input.)

Once the job is started, you will see results like these:

avkashwordcountjob


Job Info

Status: Completed Sucessfully

Type: jar

Start time: 12/31/2011 4:06:51 PM

End time: 12/31/2011 4:07:53 PM

Exit code: 0

Command

call hadoop.cmd jar AvkashWordCount.jar org.myorg.AvkashWordCount /user/avkash/inputfolder /user/avkash/outputfolder

Output (stdout)

Errors (stderr)

11/12/31 16:06:53 INFO input.FileInputFormat: Total input paths to process : 1

11/12/31 16:06:54 INFO mapred.JobClient: Running job: job_201112310614_0001

11/12/31 16:06:55 INFO mapred.JobClient: map 0% reduce 0%

11/12/31 16:07:20 INFO mapred.JobClient: map 100% reduce 0%

11/12/31 16:07:42 INFO mapred.JobClient: map 100% reduce 100%

11/12/31 16:07:53 INFO mapred.JobClient: Job complete: job_201112310614_0001

11/12/31 16:07:53 INFO mapred.JobClient: Counters: 25

11/12/31 16:07:53 INFO mapred.JobClient: Job Counters

11/12/31 16:07:53 INFO mapred.JobClient: Launched reduce tasks=1

11/12/31 16:07:53 INFO mapred.JobClient: SLOTS_MILLIS_MAPS=29029

11/12/31 16:07:53 INFO mapred.JobClient: Total time spent by all reduces waiting after reserving slots (ms)=0

11/12/31 16:07:53 INFO mapred.JobClient: Total time spent by all maps waiting after reserving slots (ms)=0

11/12/31 16:07:53 INFO mapred.JobClient: Launched map tasks=1

11/12/31 16:07:53 INFO mapred.JobClient: Data-local map tasks=1

11/12/31 16:07:53 INFO mapred.JobClient: SLOTS_MILLIS_REDUCES=18764

11/12/31 16:07:53 INFO mapred.JobClient: File Output Format Counters

11/12/31 16:07:53 INFO mapred.JobClient: Bytes Written=123

11/12/31 16:07:53 INFO mapred.JobClient: FileSystemCounters

11/12/31 16:07:53 INFO mapred.JobClient: FILE_BYTES_READ=709

11/12/31 16:07:53 INFO mapred.JobClient: HDFS_BYTES_READ=234

11/12/31 16:07:53 INFO mapred.JobClient: FILE_BYTES_WRITTEN=43709

11/12/31 16:07:53 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=123

11/12/31 16:07:53 INFO mapred.JobClient: File Input Format Counters

11/12/31 16:07:53 INFO mapred.JobClient: Bytes Read=108

11/12/31 16:07:53 INFO mapred.JobClient: Map-Reduce Framework

11/12/31 16:07:53 INFO mapred.JobClient: Reduce input groups=7

11/12/31 16:07:53 INFO mapred.JobClient: Map output materialized bytes=189

11/12/31 16:07:53 INFO mapred.JobClient: Combine output records=15

11/12/31 16:07:53 INFO mapred.JobClient: Map input records=15

11/12/31 16:07:53 INFO mapred.JobClient: Reduce shuffle bytes=0

11/12/31 16:07:53 INFO mapred.JobClient: Reduce output records=15

11/12/31 16:07:53 INFO mapred.JobClient: Spilled Records=30

11/12/31 16:07:53 INFO mapred.JobClient: Map output bytes=153

11/12/31 16:07:53 INFO mapred.JobClient: Combine input records=15

11/12/31 16:07:53 INFO mapred.JobClient: Map output records=15

11/12/31 16:07:53 INFO mapred.JobClient: SPLIT_RAW_BYTES=126

11/12/31 16:07:53 INFO mapred.JobClient: Reduce input records=15

Finally, you can open the output folder /user/avkash/outputfolder and read the word count results.
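The counters also work as a quick sanity check. In this run, Reduce input groups=7 but Reduce output records=15, and Combine output records equals Combine input records. That pattern is consistent with a reduce() method declared with an Iterator<IntWritable> parameter instead of Iterable<IntWritable>: such a method overloads Reducer.reduce() rather than overriding it, so Hadoop's default pass-through reduce runs and nothing is summed. The hypothetical CounterParser below extracts the counters from captured log lines and flags that case. Its line format is inferred from this log sample only, not from any documented Hadoop format.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper; the log format is inferred from the sample above.
final class CounterParser {
    // Matches the tail of lines such as
    // "11/12/31 16:07:53 INFO mapred.JobClient: Reduce input groups=7"
    private static final Pattern COUNTER =
            Pattern.compile("mapred\\.JobClient:\\s+(.+?)=(\\d+)\\s*$");

    /** Collects counter name to value; lines without "name=number" are skipped. */
    static Map<String, Long> parse(List<String> logLines) {
        Map<String, Long> counters = new LinkedHashMap<>();
        for (String line : logLines) {
            Matcher m = COUNTER.matcher(line);
            if (m.find()) {
                counters.put(m.group(1).trim(), Long.parseLong(m.group(2)));
            }
        }
        return counters;
    }

    /** In a word count that really sums, each input group yields exactly one output record. */
    static boolean reducerSummedGroups(Map<String, Long> counters) {
        Long groups = counters.get("Reduce input groups");
        Long outputs = counters.get("Reduce output records");
        return groups != null && groups.equals(outputs);
    }

    public static void main(String[] args) {
        Map<String, Long> counters = parse(List.of(
                "11/12/31 16:07:53 INFO mapred.JobClient: Reduce input groups=7",
                "11/12/31 16:07:53 INFO mapred.JobClient: Reduce output records=15"));
        System.out.println(counters + " summed=" + reducerSummedGroups(counters));
    }
}
```

For the two lines in main, this prints {Reduce input groups=7, Reduce output records=15} summed=false.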

Avkash Chauhan (@avkashchauhan) continued his Hadoop on Azure series with Apache Hadoop on Windows Azure Part 6 - Running 10GB Sort Hadoop Job with TeraSort Option and understanding MapReduce Job administration on 12/30/2011:

In this section we will run the same 10GB sort Hadoop job with the TeraSort option. With the TeraSort option, the parameters change as shown below:

With the above parameters, there are a couple of things to remember:

- You must have the /example/data/10GB-sort-input folder containing data (it is created when you first run the TeraGen option, as explained in Part 5)

- You also need to have

Once you start the job, you will see it read data from the /example/data/10GB-sort-input folder:

Now you can log in to your cluster with your Remote Desktop (RD) credentials and open the node's local IP address on port 50030 for Map/Reduce administration:

If you want to know how many nodes the cluster has, click “Nodes” in the Cluster Summary table to see each node's details and IP address:

On the Map/Reduce Administration page, click the running job to get further details about its progress:

To dig further into individual pending, running or completed tasks, click either the Map or the Reduce task counter above to see details:

Pending Tasks:

Completed Tasks:

If you select a completed task and open it for more info, you will see:

While your job is running, you can also watch the Map/Reduce process either in the Hadoop on Azure portal or directly in the node administration section inside the cluster:

Job Progress at Hadoop on Azure Portal:

11/12/30 19:41:45 INFO mapred.JobClient: map 57% reduce 4%

11/12/30 19:41:57 INFO mapred.JobClient: map 59% reduce 4%

11/12/30 19:42:06 INFO mapred.JobClient: map 60% reduce 4%

11/12/30 19:42:07 INFO mapred.JobClient: map 61% reduce 4%

11/12/30 19:42:15 INFO mapred.JobClient: map 62% reduce 4%

11/12/30 19:42:18 INFO mapred.JobClient: map 63% reduce 4%

11/12/30 19:42:21 INFO mapred.JobClient: map 64% reduce 4%

Job progress seen directly inside the cluster at http://10.186.42.44:50030/jobdetails.jsp?jobid=job_201112290558_0006&refresh=30:

The job is complete when both the map and reduce phases reach 100%:
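If you capture the stderr output shown in the portal, a hypothetical helper like ProgressParser can track both phases and detect completion. The "map N% reduce M%" format is inferred from the log lines in this post and is not a documented API.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper; the progress-line format is inferred from the sample logs.
final class ProgressParser {
    // Matches e.g. "... INFO mapred.JobClient: map 64% reduce 4%"
    private static final Pattern PROGRESS =
            Pattern.compile("map\\s+(\\d+)%\\s+reduce\\s+(\\d+)%");

    /** Returns {mapPercent, reducePercent}, or null if the line is not a progress line. */
    static int[] parse(String line) {
        Matcher m = PROGRESS.matcher(line);
        if (!m.find()) {
            return null;
        }
        return new int[] {Integer.parseInt(m.group(1)), Integer.parseInt(m.group(2))};
    }

    /** The job is done only when both phases report 100%. */
    static boolean bothPhasesComplete(String line) {
        int[] p = parse(line);
        return p != null && p[0] == 100 && p[1] == 100;
    }

    public static void main(String[] args) {
        String[] lines = {
            "11/12/30 19:42:21 INFO mapred.JobClient: map 64% reduce 4%",
            "11/12/30 20:48:21 INFO mapred.JobClient: map 100% reduce 100%"
        };
        for (String line : lines) {
            System.out.println(line + " -> complete=" + bothPhasesComplete(line));
        }
    }
}
```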

10GB Terasort Example

Job Info

Status: Completed Successfully

Type: jar

Start time: 12/30/2011 7:36:50 PM

End time: 12/30/2011 8:48:36 PM

Exit code: 0

Command

call hadoop.cmd jar hadoop-examples-0.20.203.1-SNAPSHOT.jar terasort "-Dmapred.map.tasks=50 -Dmapred.reduce.tasks=25" /example/data/10GB-sort-input /example/data/10GB-sort-out

Output (stdout)

Making 1 from 100000 records

Step size is 100000.0

Errors (stderr)

11/12/30 19:36:51 INFO terasort.TeraSort: starting

11/12/30 19:36:51 INFO mapred.FileInputFormat: Total input paths to process : 50

11/12/30 19:36:52 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

11/12/30 19:36:52 INFO compress.CodecPool: Got brand-new compressor

11/12/30 19:36:53 INFO mapred.FileInputFormat: Total input paths to process : 50

11/12/30 19:36:54 INFO mapred.JobClient: Running job: job_201112290558_0006

11/12/30 19:36:55 INFO mapred.JobClient: map 0% reduce 0%

11/12/30 19:37:24 INFO mapred.JobClient: map 2% reduce 0%

11/12/30 19:37:26 INFO mapred.JobClient: map 5% reduce 0%

11/12/30 19:37:44 INFO mapred.JobClient: map 7% reduce 0%

11/12/30 19:37:48 INFO mapred.JobClient: map 8% reduce 0%

11/12/30 19:37:50 INFO mapred.JobClient: map 9% reduce 0%

11/12/30 19:37:52 INFO mapred.JobClient: map 10% reduce 0%

……

11/12/30 20:47:24 INFO mapred.JobClient: map 100% reduce 98%

11/12/30 20:47:51 INFO mapred.JobClient: map 100% reduce 99%

11/12/30 20:48:21 INFO mapred.JobClient: map 100% reduce 100%

11/12/30 20:48:35 INFO mapred.JobClient: Job complete: job_201112290558_0006

11/12/30 20:48:35 INFO mapred.JobClient: Counters: 27

11/12/30 20:48:35 INFO mapred.JobClient: Job Counters

11/12/30 20:48:35 INFO mapred.JobClient: Launched reduce tasks=1

11/12/30 20:48:35 INFO mapred.JobClient: SLOTS_MILLIS_MAPS=3766703

11/12/30 20:48:35 INFO mapred.JobClient: Total time spent by all reduces waiting after reserving slots (ms)=0

11/12/30 20:48:35 INFO mapred.JobClient: Total time spent by all maps waiting after reserving slots (ms)=0

11/12/30 20:48:35 INFO mapred.JobClient: Rack-local map tasks=1

11/12/30 20:48:35 INFO mapred.JobClient: Launched map tasks=153

11/12/30 20:48:35 INFO mapred.JobClient: Data-local map tasks=152

11/12/30 20:48:35 INFO mapred.JobClient: SLOTS_MILLIS_REDUCES=4244002

11/12/30 20:48:35 INFO mapred.JobClient: File Input Format Counters

11/12/30 20:48:35 INFO mapred.JobClient: Bytes Read=10013107300

11/12/30 20:48:35 INFO mapred.JobClient: File Output Format Counters

11/12/30 20:48:35 INFO mapred.JobClient: Bytes Written=10000000000

11/12/30 20:48:35 INFO mapred.JobClient: FileSystemCounters

11/12/30 20:48:35 INFO mapred.JobClient: FILE_BYTES_READ=26766944216

11/12/30 20:48:35 INFO mapred.JobClient: HDFS_BYTES_READ=10013124850

11/12/30 20:48:35 INFO mapred.JobClient: FILE_BYTES_WRITTEN=36970291186

11/12/30 20:48:35 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=10000000000

11/12/30 20:48:35 INFO mapred.JobClient: Map-Reduce Framework

11/12/30 20:48:35 INFO mapred.JobClient: Map output materialized bytes=10200000900

11/12/30 20:48:35 INFO mapred.JobClient: Map input records=100000000

11/12/30 20:48:35 INFO mapred.JobClient: Reduce shuffle bytes=10132903050

11/12/30 20:48:35 INFO mapred.JobClient: Spilled Records=362419016

11/12/30 20:48:35 INFO mapred.JobClient: Map output bytes=10000000000

11/12/30 20:48:35 INFO mapred.JobClient: Map input bytes=10000000000

11/12/30 20:48:35 INFO mapred.JobClient: Combine input records=0

11/12/30 20:48:35 INFO mapred.JobClient: SPLIT_RAW_BYTES=17550

11/12/30 20:48:35 INFO mapred.JobClient: Reduce input records=100000000

11/12/30 20:48:35 INFO mapred.JobClient: Reduce input groups=100000000

11/12/30 20:48:35 INFO mapred.JobClient: Combine output records=0

11/12/30 20:48:35 INFO mapred.JobClient: Reduce output records=100000000

11/12/30 20:48:35 INFO mapred.JobClient: Map output records=100000000

11/12/30 20:48:35 INFO terasort.TeraSort: done

Avkash Chauhan (@avkashchauhan) continued his Hadoop on Azure series with Apache Hadoop on Windows Azure Part 5 - Running 10GB Sort Hadoop Job with Teragen, TeraSort and TeraValidate Options

This example consists of the three map/reduce applications that Owen O'Malley and Arun Murthy used to win the annual general-purpose (Daytona) terabyte sort benchmark at sortbenchmark.org. This sample is part of the prebuilt package in your Hadoop on Azure portal, so, just like any other prebuilt sample, you can deploy it to your cluster as shown below:

There are three steps to this example:

1. TeraGen is a map/reduce program to generate the data.

2. TeraSort samples the input data and uses map/reduce to sort the data into a total order.

3. TeraValidate is a map/reduce program that validates that the output is sorted.

The example deployment is pre-loaded with the first job, TeraGen.
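TeraSort's total order comes from range partitioning. Sampled keys provide split points, and each reducer receives one contiguous key range, so sorting within each reducer yields a globally sorted result. TeraValidate can then check that result by confirming that keys never decrease across the reducer outputs. The sketch below illustrates that idea in plain Java. It is not TeraSort's actual implementation, and TotalOrderSketch and its methods are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Random;

// Hypothetical illustration of sample-based range partitioning and a sortedness check.
final class TotalOrderSketch {

    /** Picks (reducers - 1) evenly spaced split points from a sample of keys. */
    static String[] splitPoints(List<String> sample, int reducers) {
        List<String> sorted = new ArrayList<>(sample);
        Collections.sort(sorted);
        String[] splits = new String[reducers - 1];
        for (int i = 1; i < reducers; i++) {
            splits[i - 1] = sorted.get(i * sorted.size() / reducers);
        }
        return splits;
    }

    /** Reducer i receives keys k with splits[i-1] <= k < splits[i]. */
    static int partition(String key, String[] splits) {
        int pos = Arrays.binarySearch(splits, key);
        return pos >= 0 ? pos + 1 : -(pos + 1);
    }

    /** Routes every key to its reducer; each reducer then sorts only its own keys. */
    static List<List<String>> sortByRange(List<String> keys, String[] splits) {
        List<List<String>> reducers = new ArrayList<>();
        for (int i = 0; i <= splits.length; i++) {
            reducers.add(new ArrayList<>());
        }
        for (String key : keys) {
            reducers.get(partition(key, splits)).add(key);
        }
        for (List<String> part : reducers) {
            Collections.sort(part);
        }
        return reducers;
    }

    /** TeraValidate-style check: reducer outputs, concatenated in order, never decrease. */
    static boolean isTotallyOrdered(List<List<String>> reducerOutputs) {
        String previous = null;
        for (List<String> part : reducerOutputs) {
            for (String key : part) {
                if (previous != null && previous.compareTo(key) > 0) {
                    return false;
                }
                previous = key;
            }
        }
        return true;
    }

    static String randomKey(Random rnd) {
        char[] chars = new char[10];
        for (int i = 0; i < chars.length; i++) {
            chars[i] = (char) ('a' + rnd.nextInt(26));
        }
        return new String(chars);
    }

    public static void main(String[] args) {
        Random rnd = new Random(42);
        List<String> keys = new ArrayList<>();
        for (int i = 0; i < 10_000; i++) {
            keys.add(randomKey(rnd));
        }
        String[] splits = splitPoints(keys.subList(0, 500), 4); // sample 500 keys, 4 reducers
        List<List<String>> outputs = sortByRange(keys, splits);
        System.out.println("split points: " + Arrays.toString(splits));
        System.out.println("totally ordered: " + isTotallyOrdered(outputs));
    }
}
```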

1. TeraGen (loaded by default in the sample)

> hadoop jar hadoop-examples-0.20.203.1-SNAPSHOT.jar teragen "-Dmapred.map.tasks=50" 100000000 /example/data/10GB-sort-input
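The row-count argument determines the data size. Each TeraGen row is 100 bytes (a 10-byte key plus a 90-byte value), which agrees with this job's counters of 100000000 records and Bytes Written=10000000000. So 100000000 rows produce 10,000,000,000 bytes, the decimal "10GB" in the job name. With 50 maps, each map generates 100000000 / 50 = 2000000 rows, which matches the "step of 2000000" message in the job's output. The hypothetical TeraGenSizing class below does that arithmetic so you can size other runs.

```java
// Hypothetical sizing helper for teragen's row-count argument.
final class TeraGenSizing {
    // Each TeraGen row is 100 bytes (10-byte key + 90-byte value).
    static final long ROW_BYTES = 100;

    /** Total bytes written for a given row count. */
    static long totalBytes(long rows) {
        return rows * ROW_BYTES;
    }

    /** Rows generated by each map task (the "step" in TeraGen's output). */
    static long rowsPerMap(long rows, int maps) {
        return rows / maps;
    }

    /** Row count needed to produce the given number of bytes. */
    static long rowsFor(long bytes) {
        return bytes / ROW_BYTES;
    }

    public static void main(String[] args) {
        long rows = 100_000_000L; // teragen's row-count argument
        int maps = 50;            // -Dmapred.map.tasks=50
        System.out.println(totalBytes(rows) + " bytes, step " + rowsPerMap(rows, maps));
    }
}
```

Running main prints 10000000000 bytes, step 2000000. For a 1TB run, rowsFor(1_000_000_000_000L) gives 10000000000 rows.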

Once the sample is deployed to the cluster, verify the parameters and then start the job:

Once started, the job first creates the input data in 50 different files on HDFS...

....which you can verify in HDFS management as below:

When the job completes, the results are displayed as below:

10GB Terasort Example


Job Info

Status: Completed Sucessfully

Type: jar

Start time: 12/30/2011 5:54:16 PM

End time: 12/30/2011 6:04:59 PM

Exit code: 0

Command

call hadoop.cmd jar hadoop-examples-0.20.203.1-SNAPSHOT.jar teragen "-Dmapred.map.tasks=50" 100000000 /example/data/10GB-sort-input

Output (stdout)

Generating 100000000 using 50 maps with step of 2000000

Errors (stderr)

11/12/30 17:54:20 INFO mapred.JobClient: map 0% reduce 0%

11/12/30 17:54:49 INFO mapred.JobClient: map 2% reduce 0%

11/12/30 17:54:52 INFO mapred.JobClient: map 4% reduce 0%

11/12/30 17:54:55 INFO mapred.JobClient: map 5% reduce 0%

11/12/30 17:55:01 INFO mapred.JobClient: map 6% reduce 0%

11/12/30 17:55:22 INFO mapred.JobClient: map 7% reduce 0%

11/12/30 17:55:28 INFO mapred.JobClient: map 8% reduce 0%

11/12/30 17:55:43 INFO mapred.JobClient: map 9% reduce 0%

11/12/30 17:55:46 INFO mapred.JobClient: map 12% reduce 0%

11/12/30 17:55:49 INFO mapred.JobClient: map 14% reduce 0%

11/12/30 17:56:10 INFO mapred.JobClient: map 15% reduce 0%

11/12/30 17:56:13 INFO mapred.JobClient: map 16% reduce 0%

11/12/30 17:56:28 INFO mapred.JobClient: map 18% reduce 0%

11/12/30 17:56:31 INFO mapred.JobClient: map 19% reduce 0%

11/12/30 17:56:34 INFO mapred.JobClient: map 20% reduce 0%

11/12/30 17:56:43 INFO mapred.JobClient: map 21% reduce 0%

11/12/30 17:56:49 INFO mapred.JobClient: map 22% reduce 0%

11/12/30 17:56:52 INFO mapred.JobClient: map 23% reduce 0%

11/12/30 17:56:58 INFO mapred.JobClient: map 24% reduce 0%

11/12/30 17:57:01 INFO mapred.JobClient: map 25% reduce 0%

11/12/30 17:57:04 INFO mapred.JobClient: map 26% reduce 0%

11/12/30 17:57:10 INFO mapred.JobClient: map 28% reduce 0%

11/12/30 17:57:19 INFO mapred.JobClient: map 29% reduce 0%

11/12/30 17:57:22 INFO mapred.JobClient: map 30% reduce 0%

11/12/30 17:57:28 INFO mapred.JobClient: map 31% reduce 0%

11/12/30 17:57:31 INFO mapred.JobClient: map 32% reduce 0%

11/12/30 17:58:04 INFO mapred.JobClient: map 33% reduce 0%

11/12/30 17:58:07 INFO mapred.JobClient: map 35% reduce 0%

11/12/30 17:58:10 INFO mapred.JobClient: map 36% reduce 0%

11/12/30 17:58:13 INFO mapred.JobClient: map 37% reduce 0%

11/12/30 17:58:19 INFO mapred.JobClient: map 38% reduce 0%

11/12/30 17:58:25 INFO mapred.JobClient: map 39% reduce 0%

11/12/30 17:58:34 INFO mapred.JobClient: map 40% reduce 0%

11/12/30 17:58:37 INFO mapred.JobClient: map 42% reduce 0%

11/12/30 17:58:44 INFO mapred.JobClient: map 43% reduce 0%

11/12/30 17:58:47 INFO mapred.JobClient: map 44% reduce 0%

11/12/30 17:58:52 INFO mapred.JobClient: map 45% reduce 0%

11/12/30 17:58:59 INFO mapred.JobClient: map 46% reduce 0%

11/12/30 17:59:23 INFO mapred.JobClient: map 48% reduce 0%

11/12/30 17:59:26 INFO mapred.JobClient: map 49% reduce 0%

11/12/30 17:59:32 INFO mapred.JobClient: map 50% reduce 0%

11/12/30 17:59:40 INFO mapred.JobClient: map 51% reduce 0%

11/12/30 17:59:44 INFO mapred.JobClient: map 52% reduce 0%

11/12/30 17:59:46 INFO mapred.JobClient: map 53% reduce 0%

11/12/30 17:59:47 INFO mapred.JobClient: map 54% reduce 0%

11/12/30 17:59:58 INFO mapred.JobClient: map 55% reduce 0%

11/12/30 18:00:11 INFO mapred.JobClient: map 56% reduce 0%

11/12/30 18:00:14 INFO mapred.JobClient: map 58% reduce 0%

11/12/30 18:00:16 INFO mapred.JobClient: map 59% reduce 0%

11/12/30 18:00:20 INFO mapred.JobClient: map 60% reduce 0%

11/12/30 18:00:23 INFO mapred.JobClient: map 61% reduce 0%

11/12/30 18:00:31 INFO mapred.JobClient: map 62% reduce 0%

11/12/30 18:00:50 INFO mapred.JobClient: map 63% reduce 0%

11/12/30 18:00:53 INFO mapred.JobClient: map 65% reduce 0%

11/12/30 18:00:59 INFO mapred.JobClient: map 66% reduce 0%

11/12/30 18:01:10 INFO mapred.JobClient: map 67% reduce 0%

11/12/30 18:01:13 INFO mapred.JobClient: map 68% reduce 0%

11/12/30 18:01:14 INFO mapred.JobClient: map 69% reduce 0%

11/12/30 18:01:17 INFO mapred.JobClient: map 70% reduce 0%

11/12/30 18:01:20 INFO mapred.JobClient: map 71% reduce 0%

11/12/30 18:01:23 INFO mapred.JobClient: map 72% reduce 0%

11/12/30 18:01:37 INFO mapred.JobClient: map 73% reduce 0%

11/12/30 18:01:38 INFO mapred.JobClient: map 74% reduce 0%

11/12/30 18:01:50 INFO mapred.JobClient: map 75% reduce 0%

11/12/30 18:02:07 INFO mapred.JobClient: map 76% reduce 0%

11/12/30 18:02:11 INFO mapred.JobClient: map 77% reduce 0%

11/12/30 18:02:14 INFO mapred.JobClient: map 78% reduce 0%

11/12/30 18:02:17 INFO mapred.JobClient: map 79% reduce 0%

11/12/30 18:02:20 INFO mapred.JobClient: map 80% reduce 0%

11/12/30 18:02:32 INFO mapred.JobClient: map 81% reduce 0%

11/12/30 18:02:44 INFO mapred.JobClient: map 82% reduce 0%

11/12/30 18:02:53 INFO mapred.JobClient: map 83% reduce 0%

11/12/30 18:02:59 INFO mapred.JobClient: map 84% reduce 0%

11/12/30 18:03:05 INFO mapred.JobClient: map 85% reduce 0%

11/12/30 18:03:08 INFO mapred.JobClient: map 87% reduce 0%

11/12/30 18:03:14 INFO mapred.JobClient: map 88% reduce 0%

11/12/30 18:03:20 INFO mapred.JobClient: map 89% reduce 0%

11/12/30 18:03:38 INFO mapred.JobClient: map 90% reduce 0%

11/12/30 18:03:41 INFO mapred.JobClient: map 92% reduce 0%

11/12/30 18:03:47 INFO mapred.JobClient: map 93% reduce 0%

11/12/30 18:03:50 INFO mapred.JobClient: map 94% reduce 0%

11/12/30 18:03:56 INFO mapred.JobClient: map 95% reduce 0%

11/12/30 18:04:05 INFO mapred.JobClient: map 96% reduce 0%

11/12/30 18:04:11 INFO mapred.JobClient: map 97% reduce 0%

11/12/30 18:04:14 INFO mapred.JobClient: map 98% reduce 0%

11/12/30 18:04:23 INFO mapred.JobClient: map 99% reduce 0%

11/12/30 18:04:47 INFO mapred.JobClient: map 100% reduce 0%

11/12/30 18:04:58 INFO mapred.JobClient: Job complete: job_201112290558_0005

11/12/30 18:04:58 INFO mapred.JobClient: Counters: 16

11/12/30 18:04:58 INFO mapred.JobClient: Job Counters

11/12/30 18:04:58 INFO mapred.JobClient: SLOTS_MILLIS_MAPS=4761149

11/12/30 18:04:58 INFO mapred.JobClient: Total time spent by all reduces waiting after reserving slots (ms)=0

11/12/30 18:04:58 INFO mapred.JobClient: Total time spent by all maps waiting after reserving slots (ms)=0

11/12/30 18:04:58 INFO mapred.JobClient: Launched map tasks=54

11/12/30 18:04:58 INFO mapred.JobClient: SLOTS_MILLIS_REDUCES=0

11/12/30 18:04:58 INFO mapred.JobClient: File Input Format Counters

11/12/30 18:04:58 INFO mapred.JobClient: Bytes Read=0

11/12/30 18:04:58 INFO mapred.JobClient: File Output Format Counters

11/12/30 18:04:58 INFO mapred.JobClient: Bytes Written=10000000000

11/12/30 18:04:58 INFO mapred.JobClient: FileSystemCounters

11/12/30 18:04:58 INFO mapred.JobClient: FILE_BYTES_READ=113880

11/12/30 18:04:58 INFO mapred.JobClient: HDFS_BYTES_READ=4288

11/12/30 18:04:58 INFO mapred.JobClient: FILE_BYTES_WRITTEN=1180870

11/12/30 18:04:58 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=10000000000

11/12/30 18:04:58 INFO mapred.JobClient: Map-Reduce Framework

11/12/30 18:04:58 INFO mapred.JobClient: Map input records=100000000

11/12/30 18:04:58 INFO mapred.JobClient: Spilled Records=0

11/12/30 18:04:58 INFO mapred.JobClient: Map input bytes=100000000

11/12/30 18:04:58 INFO mapred.JobClient: Map output records=100000000

11/12/30 18:04:58 INFO mapred.JobClient: SPLIT_RAW_BYTES=4288

Avkash Chauhan (@avkashchauhan) continued his Hadoop on Azure series with Apache Hadoop on Windows Azure Part 4- Remote Login to Hadoop node for MapReduce Job and HDFS administration on 12/29/2011:

When you run an Apache Hadoop job in Windows Azure, you can remote into the main node (a virtual machine) and perform all the regular tasks, such as:

- Hadoop Map/Reduce Job Administration

- HDFS management

- Regular Name Node Management tasks

To log in, select the “Remote Desktop” button in your cluster management interface:

Use the same username and password that you selected during cluster creation. Once you are logged in to your cluster's main node VM, the first thing to do is get the machine's IP address. Then open a web browser and browse to that IP address on the administration port for the task you want to run:

- For Map/Reduce job administration the port is 50030.

- For HDFS Management the port is 50070.

For Hadoop Map/Reduce job administration, use http://<LocalMachine_IP_Address>:50030/ as below:

For Hadoop Name Node details, use http://<LocalMachine_IP_Address>:50070/ as below:

Select the “Browse File System” link above to open the files in your HDFS:
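The administration URLs follow a simple pattern: the node's IP address plus port 50030 (Map/Reduce) or 50070 (Name Node/HDFS). A minimal sketch with a hypothetical AdminUrls class, intended to run inside the RDP session on the main node. The jobdetails.jsp form copies the job-progress URL shown in Part 6.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

// Hypothetical helper that builds the admin URLs described above.
final class AdminUrls {
    static final int JOB_TRACKER_PORT = 50030; // Map/Reduce job administration
    static final int NAME_NODE_PORT = 50070;   // HDFS / Name Node management

    static String jobTracker(String ip) {
        return "http://" + ip + ":" + JOB_TRACKER_PORT + "/";
    }

    static String nameNode(String ip) {
        return "http://" + ip + ":" + NAME_NODE_PORT + "/";
    }

    /** Auto-refreshing job details page, in the same form as the Part 6 example URL. */
    static String jobDetails(String ip, String jobId, int refreshSeconds) {
        return jobTracker(ip) + "jobdetails.jsp?jobid=" + jobId + "&refresh=" + refreshSeconds;
    }

    public static void main(String[] args) throws UnknownHostException {
        // Depending on the VM's configuration this may return a loopback address;
        // if so, read the IP address from ipconfig instead.
        String ip = InetAddress.getLocalHost().getHostAddress();
        System.out.println(jobTracker(ip));
        System.out.println(nameNode(ip));
    }
}
```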

<Return to section navigation list>

SQL Azure Database and Reporting

• Dhananjay Kumar (@debug_mode) posted SQL Azure to Developers: Some Basic Concepts on New Year's Eve:

Objective

In this post we will give an overview of SQL Azure along with a first look at the SQL Azure Management Portal. In this part, we will cover:

- What is a cloud database

- What is SQL Azure

- Create and manage a database in SQL Azure

- Firewall in SQL Azure

Video: SQL Azure new portal (http://videos.videopress.com/0IJUxh1g/untitled_dvd.mp4)

What is a Cloud Database?

For a developer, a good understanding of databases always helps in writing the different layers of an application effectively and efficiently. Databases in the cloud are the new buzz and a much-appreciated technology. Two words make up CLOUD DATABASE. We are very familiar with the term DATABASE, whereas CLOUD may be newer or ambiguous to us.

In very broad terms, the cloud can be described as the next generation of the Internet. In a normal scenario, you know which server your database resides on. You have fine-grained administrative control over the database server and a physical sense of it. Now imagine that you do not know where your database resides, and that you access it and perform all operations on it via the Internet. Scalability, manageability and all other administration tasks are performed by a third party, and you pay only for the amount of data stored in the database that the third party provides. You work on a pay-per-use model. In that case, you can say your database is in the cloud.

There are many cloud service providers, Microsoft and Amazon to name a few, and each vendor has its own cloud service model and pricing. Microsoft's cloud platform is known as Windows Azure; essentially, Windows Azure is a cloud operating system offered by Microsoft. Microsoft offers the following five cloud services:

- Windows Azure

- SQL Azure

- Office 365

- AppFabric and Caching services

- Marketplace

In this three-part article, we focus our discussion on SQL Azure and the essential aspects of it that a .NET developer needs to know. To put it precisely, we can say “SQL Server in the cloud is known as SQL Azure.” When you choose to create a relational database in a Microsoft data center, you can say your database is in the cloud, or in SQL Azure.

In this article, we will cover:

- What is SQL Azure?

- A first look at the SQL Azure Management Portal

- SQL Azure Database editions

- Firewall settings for SQL Azure

What is SQL Azure?

SQL Azure is a cloud-based service from Microsoft. It allows you to create your database in one of Microsoft's data centers; in very general terms, SQL Azure lets you create databases in the cloud. It provides highly available databases and is based on SQL Server. It has built-in fault tolerance, requires no physical administration, and supports T-SQL and SQL Server Management Studio (SSMS).

Advantages of using SQL Azure

- Built-in fault tolerance

- No physical administration required

- Very high availability

- Multitenant

- Pay-as-you-go pricing

- Support for T-SQL

- Highly scalable

That is enough theoretical discussion; now let us log in to the SQL Azure portal and create a database in the cloud.

Create and Manage Database in SQL Azure

To create Database in SQL Azure, You need to follow below steps

Step1

Login SQL Azure portal with your live credential

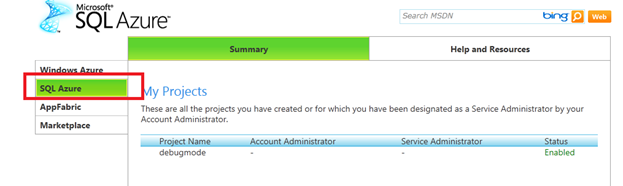

Step 2

Click on SQL Azure tab and select Project

Step3

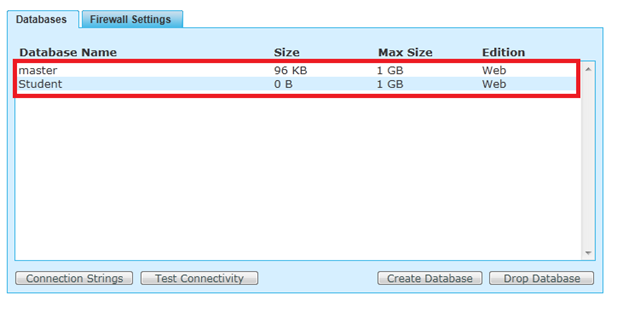

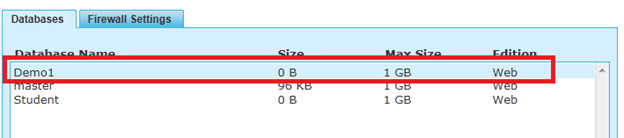

Click the project; in this case, the project name is debugmode. After you click the project, all the databases created in your SQL Azure account are listed.

This account already has two databases: master and student. The master database is the default database created by SQL Azure.

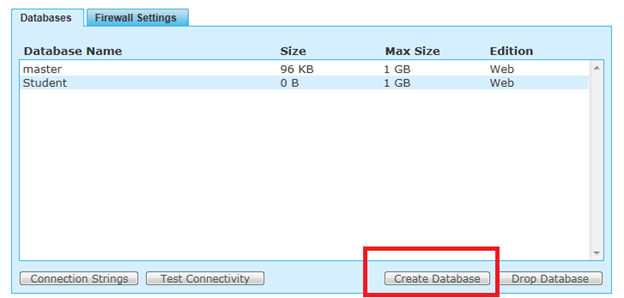

Step 4

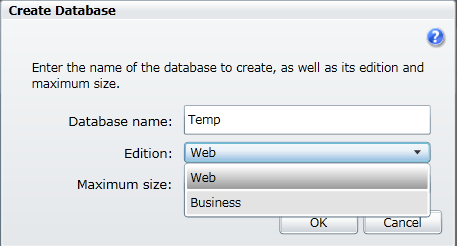

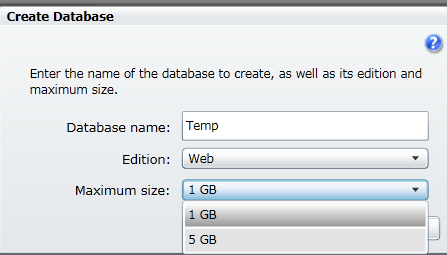

Click Create Database.

Step 5

Enter a name for the database, select Web or Business as the edition, and specify the maximum size of the database.

Step 6

Then click Create. On the Databases tab, you can see that the Demo1 database has been created.

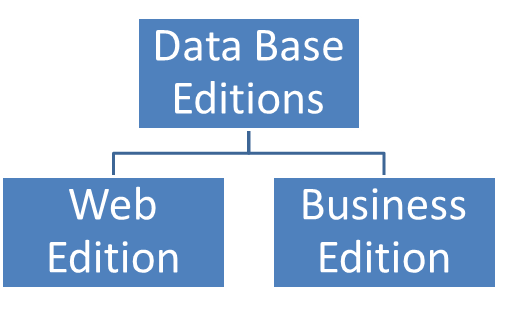

Different types of SQL Azure Database editions

As you may have noticed when creating the database, there are two database editions:

Web Edition Relational Database includes:

- Up to 5 GB of T-SQL based relational database

- Self-managed DB, auto high availability and fault tolerance

- Supports existing tools such as Visual Studio, SSMS, SSIS and BCP

- Best suited for Web applications and departmental custom apps

Business Edition DB includes:

- Up to 150 GB of T-SQL based relational database*

- Self-managed DB, auto high availability and fault tolerance

- Additional features in the future, such as auto-partition, CLR and fanouts

- Supports existing tools such as Visual Studio, SSMS, SSIS and BCP

- Best suited for SaaS ISV apps, custom Web applications and departmental apps

When creating a database in SQL Azure, you can choose either of the two editions:

If you choose Web Edition, the maximum size you can choose is 5 GB.

If you choose Business Edition, the maximum size you can choose is 150 GB.
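The edition rule above reduces to an upper bound per edition. A minimal sketch with a hypothetical SqlAzureEdition enum that models only the two maximum sizes stated here. The portal may restrict you to particular size steps within each edition, and this sketch does not model those steps or pricing.

```java
// Hypothetical model of the two editions' maximum sizes (in GB) as stated above.
enum SqlAzureEdition {
    WEB(5),        // Web Edition: up to 5 GB
    BUSINESS(150); // Business Edition: up to 150 GB

    final int maxSizeGb;

    SqlAzureEdition(int maxSizeGb) {
        this.maxSizeGb = maxSizeGb;
    }

    /** True if the requested size fits under this edition's maximum. */
    boolean allows(int sizeGb) {
        return sizeGb > 0 && sizeGb <= maxSizeGb;
    }

    /** Smallest edition whose maximum covers the requested size. */
    static SqlAzureEdition smallestFor(int sizeGb) {
        for (SqlAzureEdition edition : values()) { // declared smallest first
            if (edition.allows(sizeGb)) {
                return edition;
            }
        }
        throw new IllegalArgumentException("No edition supports " + sizeGb + " GB");
    }

    public static void main(String[] args) {
        System.out.println(smallestFor(3) + " " + smallestFor(40)); // WEB BUSINESS
    }
}
```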

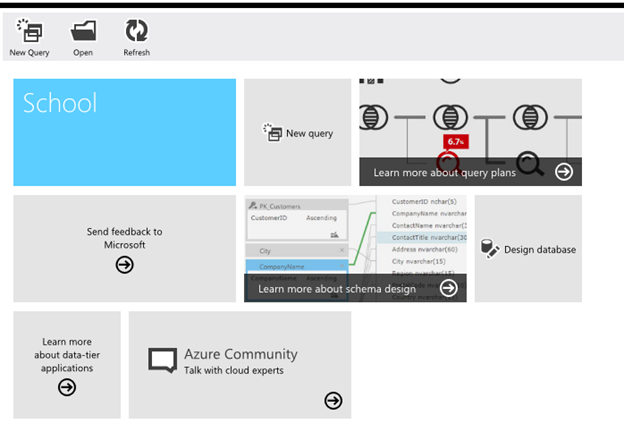

The SQL Azure portal has a very effective, interactive Silverlight-based UI through which many more operations can be performed. The new Database Manager lets us perform operations at the table and row level.

We can now perform many more operations through the Database option of the new Windows Azure portal:

- Create a database

- Create/delete a table

- Create/edit/delete rows of table.

- Create/edit stored procedure

- Create/edit views

- Create/execute queries, etc.

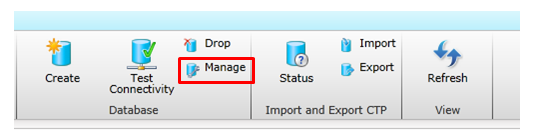

You can manage the database using Database Manager.

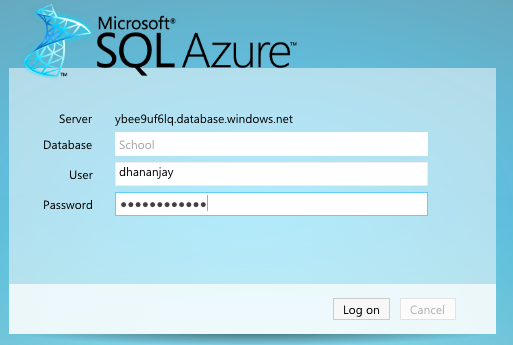

Accept the terms and conditions and click OK. A popup asks for the password to connect to the database; provide the password and click Connect.

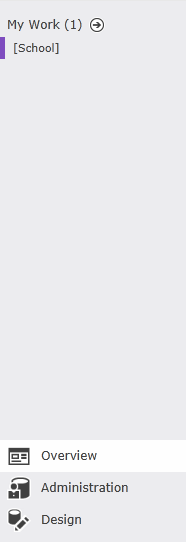

After logging on, you will see the Manage user interface:

The left-hand panel provides the following options:

For more on this, watch the video in this post.

In this way, you can perform almost all basic operations from the new SQL Azure Database Manager.

Firewall in SQL Azure

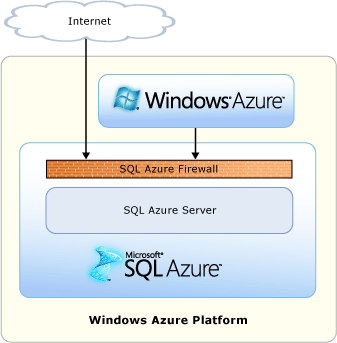

SQL Azure provides security via a firewall. By default, a database created on SQL Azure is blocked by the firewall for security reasons: any attempt at external access, or at access from any other Windows Azure application, is blocked.

Image taken from MSDN

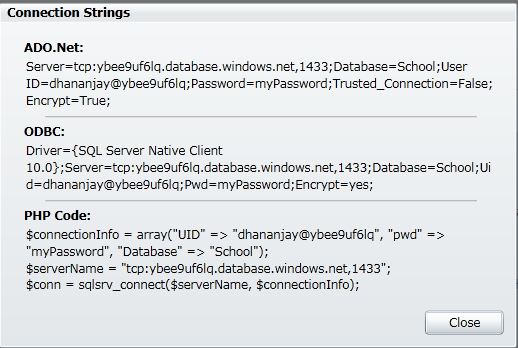

Connection Strings

You can also copy connection strings from the SQL Azure portal.
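If you connect from Java rather than .NET, the connection string takes a JDBC form. A minimal sketch, assuming the Microsoft JDBC Driver for SQL Server. The SqlAzureJdbc class, yourserver and youruser are hypothetical placeholders, and Demo1 is the database created above. Where the portal's values differ from this sketch, prefer the portal's.

```java
// Hypothetical helper; assumes the Microsoft JDBC Driver for SQL Server.
final class SqlAzureJdbc {
    /** JDBC URL for a SQL Azure server; SQL Azure listens on TCP port 1433. */
    static String url(String server, String database) {
        return "jdbc:sqlserver://" + server + ".database.windows.net:1433;"
                + "databaseName=" + database + ";"
                + "encrypt=true;"
                + "hostNameInCertificate=*.database.windows.net;"
                + "loginTimeout=30;";
    }

    /** SQL Azure logins take the form login@server. */
    static String login(String user, String server) {
        return user + "@" + server;
    }

    public static void main(String[] args) {
        String server = "yourserver"; // hypothetical server name
        System.out.println(url(server, "Demo1"));
        System.out.println(login("youruser", server));
        // With the driver on the classpath you would then call:
        // java.sql.DriverManager.getConnection(url(server, "Demo1"), login("youruser", server), password);
    }
}
```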

Connecting from Local system

To connect to SQL Azure from a local or networked system, you need to configure the local firewall: create an exception that allows outbound connections on TCP port 1433.
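To test whether outbound TCP port 1433 is open from your machine, you can attempt a plain socket connection. This is a hypothetical diagnostic (PortCheck is not part of any SQL Azure tooling) and yourserver is a placeholder. A successful connect only shows that the local firewall lets the traffic out. It does not show that the SQL Azure firewall will accept your IP address at login.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical connectivity check for the local-firewall exception described above.
final class PortCheck {
    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused, timed out, unresolvable host, or blocked by a firewall.
            return false;
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "yourserver.database.windows.net"; // placeholder
        System.out.println(host + ":1433 reachable = " + canConnect(host, 1433, 5000));
    }
}
```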

Connecting from Internet

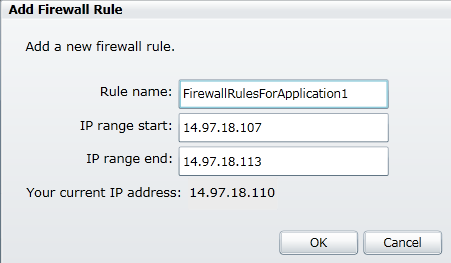

All requests to connect to SQL Azure from the Internet are blocked by the SQL Azure firewall by default. When a request comes from the Internet:

- SQL Azure checks the IP address of the system making the request.

- If the IP address falls within a range of IP addresses set as a firewall rule in the SQL Azure portal, the connection is established.
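The rule check described above boils down to an inclusive IP-range comparison. A minimal sketch, assuming IPv4 addresses. FirewallRule is a hypothetical class, the addresses are documentation examples, and this post does not describe how the service actually implements the check.

```java
import java.util.List;

// Hypothetical model of a SQL Azure firewall rule as an inclusive IPv4 range.
final class FirewallRule {
    final String name;
    final long start;
    final long end;

    FirewallRule(String name, String startIp, String endIp) {
        this.name = name;
        this.start = toLong(startIp);
        this.end = toLong(endIp);
    }

    /** Converts dotted IPv4 text such as "203.0.113.10" to an unsigned 32-bit value. */
    static long toLong(String ipv4) {
        String[] parts = ipv4.trim().split("\\.");
        if (parts.length != 4) {
            throw new IllegalArgumentException("Not an IPv4 address: " + ipv4);
        }
        long value = 0;
        for (String part : parts) {
            int octet = Integer.parseInt(part);
            if (octet < 0 || octet > 255) {
                throw new IllegalArgumentException("Bad octet in " + ipv4);
            }
            value = (value << 8) | octet;
        }
        return value;
    }

    /** True if ip falls inside [start, end], inclusive. */
    boolean allows(String ip) {
        long v = toLong(ip);
        return v >= start && v <= end;
    }

    /** A connection is accepted if any rule's range contains the client IP. */
    static boolean anyAllows(List<FirewallRule> rules, String ip) {
        for (FirewallRule rule : rules) {
            if (rule.allows(ip)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        List<FirewallRule> rules = List.of(new FirewallRule("Office", "203.0.113.10", "203.0.113.20"));
        System.out.println(anyAllows(rules, "203.0.113.15")); // inside the range
        System.out.println(anyAllows(rules, "198.51.100.7"));  // outside every range
    }
}
```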

Firewall rules can be added, updated and deleted in two ways:

- Using the SQL Azure Portal

- Using the SQL Azure API

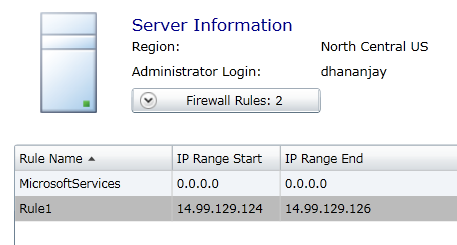

Manipulating Firewall rules using SQL Azure Portal

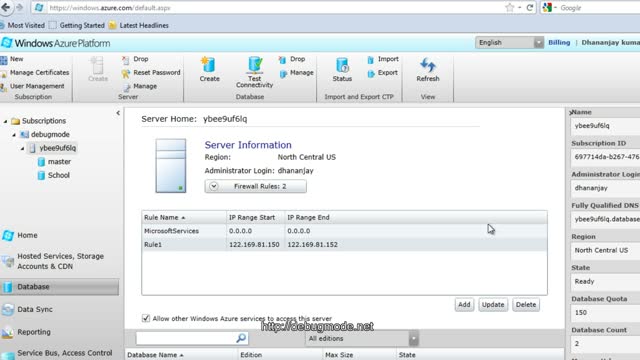

After logging in to the Windows Azure portal, click the Database option and select the database server from the left-hand tab. You can see its firewall rules listed there.

Add a new rule by clicking the Add button.

To allow connections from other Windows Azure applications in the same subscription, select the check box.

Existing firewall rules can be edited or deleted by selecting the Edit or Delete option, respectively.

Conclusion

In this part we discussed various elementary concepts of cloud databases and SQL Azure. In later parts we will go deeper into other essential concepts a developer needs to know.

<Return to section navigation list>

MarketPlace DataMarket, Social Analytics and OData

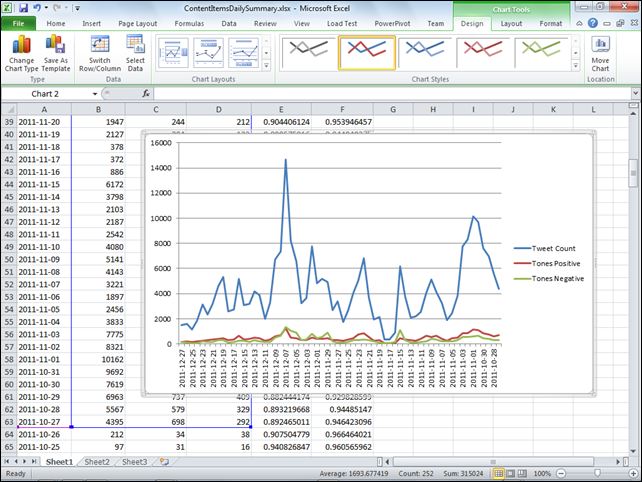

Updated my (@rogerjenn) Problems with Microsoft Codename “Data Explorer” - Aggregate Values and Merging Tables - Solved post with details of creating ContentItemsDailySummary.csv or ContentItemsDailySummary.xlsx and graphs from the DailySummary table with the Data Explorer Add-in for Excel on 12/30/2011:

Running the DataExplorer.msi file to install the Desktop Client also installs a Data Explorer Add-in for Excel 2007 or 2010. The add-in adds a Data Explorer group to Excel’s Data ribbon:

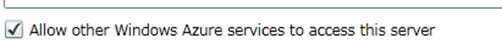

11. Click the Import Data button to open the eponymous dialog and select the mashup and resource to use, SocialAnalyticsMashup2 and Daily Summary for this example:

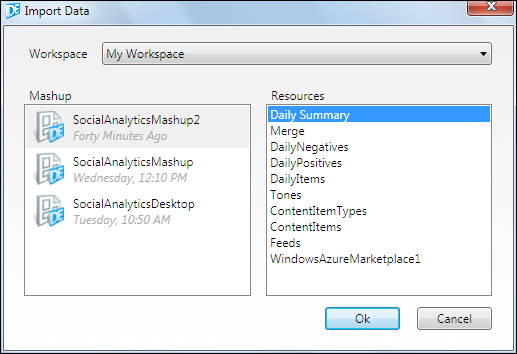

12. If you have Excel open, click OK and choose whether to import the data to an existing (open) worksheet or to a new worksheet:

13. Click OK to populate the worksheet with the mashup data:

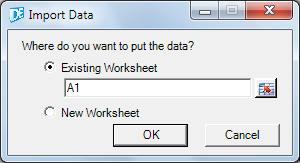

14. Click the File tab, choose Save As to open the Save As dialog and choose CSV (Comma Delimited) (*.csv) or Excel Workbook (*.xlsx) to save the file:

15. To make an overall comparison of the summary data with that of the WinForm client, create a graph of the Tweet Counts, Tones Positive and Tones Negative:

Note: The first valid data appears to occur on 10/27/2011, about two weeks after the Data Explorer team announced availability of the private CTP on 10/12/2011.

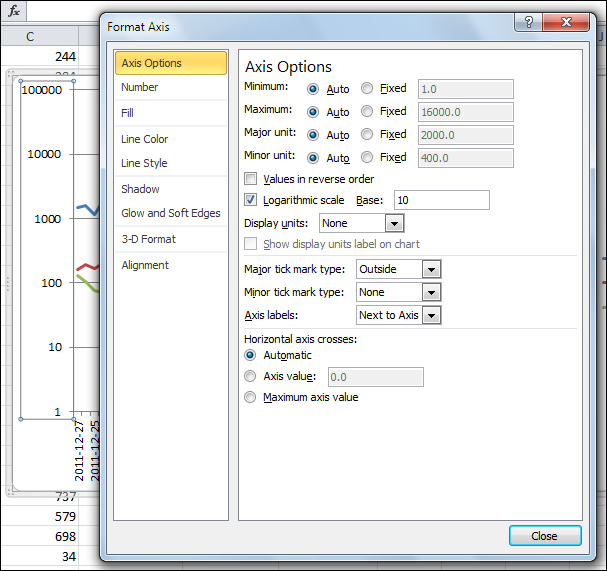

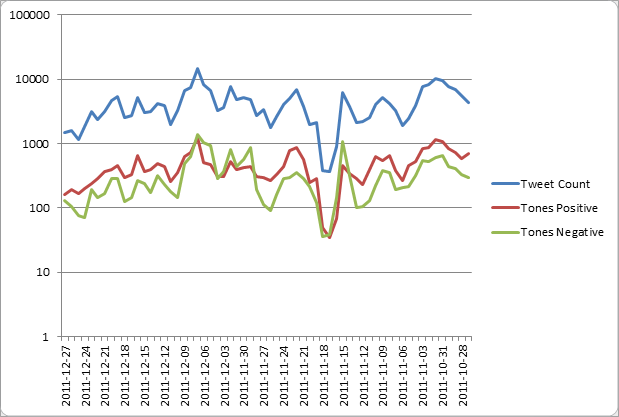

16. Double-click the graph’s ordinate (y-axis) to open the Format Axis dialog, and change its scale to logarithmic to match the WinForm client’s scale:

17. Click Close to display the reformatted graph:

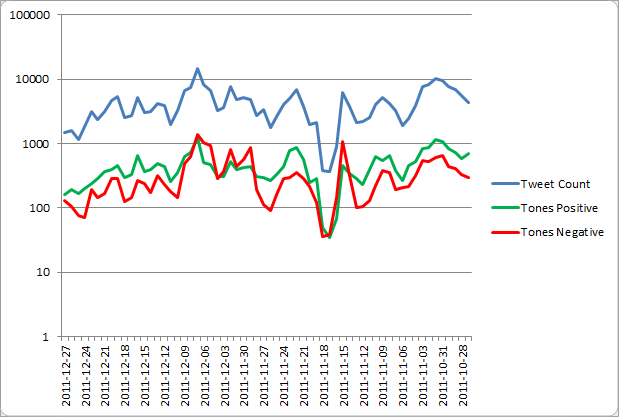

18. Optionally, select and right-click the Tones Positive line, choose Format Data Series to open the Format Data Series dialog, select the Line Color option, open the Color picker, and select Green. Do the same for the Tones Negative line, but select Red:

<Return to section navigation list>

Windows Azure Access Control, Service Bus and Workflow

• Thirumalai Muniswamy (@thirumalai_pm) started an Implementing Azure AppFabric Service Bus - Part 1 series on 12/31/2011:

Introduction

As a business expands into the global market, its requirements grow globally at every level of interaction with different types of customers. When a business interacts with many customers, the volume of data exchanged also grows heavily. So there is a big demand to collect the data that partners expose about our system and process it together with our company data to produce meaningful reports on how the business is doing. It is also worth exposing data through web services and consuming it programmatically, instead of re-feeding or importing the data manually into our system.

Microsoft Azure AppFabric Service Bus provides a seamless way to connect to other systems and exchange data with them. Service Bus uses WCF as its basic architecture for exposing and consuming data, so all the features available in WCF can be reused when developing with Service Bus.

Normally, connecting two systems that sit in different premises is a tedious job for the developer, requiring custom coding and testing. It may also violate the organization's security policies. With Service Bus, it is very easy to expose data to another organization through the Internet without breaking through the firewall, while keeping control of everything on-premises.

Some organizations, such as those in the financial and pharmaceutical sectors, hold very critical data and may not accept keeping the database in the cloud (in Azure Storage Services, SQL Azure or Amazon S3). But they may need to export some data publicly to meet business requirements and provide a secure mechanism for third-party vendors to access it. Service Bus fits this role perfectly: the database stays on-premises and the data is exposed to the public through a service call in the standard fashion defined by WCF.

Service Bus currently provides two different messaging capabilities:

- Relayed Messaging:

In Relayed Messaging, the service and the consumer must be online at the same time, and they communicate with each other synchronously through the relay service.

In Relayed Messaging, the on-premises service first reaches the Relay Service hosted in the Azure environment through an outbound port and creates a bidirectional socket connection between the Relay Service and the on-premises service. When a client wants to communicate with the service, it sends a message to the Relay Service, which connects the on-premises service with the requesting client. The Relay Service acts as the communication channel between client and service, so the client does not need to know where the service is running.

The Relay Service also supports various protocols and web service standards, including SOAP, WS-* and REST. It supports traditional one-way messaging, request/response messaging and peer-to-peer messaging, as well as event distribution scenarios such as publish/subscribe and bidirectional socket communication.

In this messaging mode, both the service and the client must be online for any communication.

- Brokered Messaging:

Brokered Messaging provides an asynchronous communication channel between service and client, so the service and the consumer do not need to be online at the same time.

When the service or client sends a message, the message is stored in the Brokered Messaging infrastructure (Queues, Topics and Subscriptions) until the other end is ready to receive it. This pattern allows applications to stay disconnected from each other and connect on demand.

The Brokered messaging enables asynchronous messaging scenarios such as temporal decoupling, publish/subscribe and load balancing.
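The brokered behavior described above, where a message waits in the broker until a receiver is ready and a topic fans copies out to each subscription, can be modeled in a few lines. This is a conceptual in-memory sketch, not the Service Bus API; `MessageQueue`, `Topic` and the message bodies are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;

// Conceptual in-memory model of brokered messaging (not the Service Bus API).
public class BrokeredMessagingSketch {

    // A queue: each message is delivered to one receiver, whenever that receiver asks.
    static final class MessageQueue {
        private final Queue<String> messages = new ArrayDeque<>();
        void send(String body) { messages.add(body); }
        String receive() { return messages.poll(); } // null when the queue is empty
        int size() { return messages.size(); }
    }

    // A topic: every subscription receives its own copy of each published message.
    static final class Topic {
        private final Map<String, MessageQueue> subscriptions = new LinkedHashMap<>();
        MessageQueue subscribe(String name) {
            return subscriptions.computeIfAbsent(name, n -> new MessageQueue());
        }
        void publish(String body) {
            for (MessageQueue q : subscriptions.values()) q.send(body);
        }
    }

    public static void main(String[] args) {
        // Temporal decoupling: the sender runs while no receiver is connected.
        MessageQueue orders = new MessageQueue();
        orders.send("order-1");
        orders.send("order-2");
        // Later, a receiver comes online and drains the queue in FIFO order.
        List<String> received = new ArrayList<>();
        String m;
        while ((m = orders.receive()) != null) received.add(m);
        System.out.println(received); // [order-1, order-2]

        // Publish/subscribe: both subscriptions get the same message.
        Topic prices = new Topic();
        MessageQueue audit = prices.subscribe("audit");
        MessageQueue billing = prices.subscribe("billing");
        prices.publish("price-changed");
        System.out.println(audit.receive() + " " + billing.receive()); // price-changed price-changed
    }
}
```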

In this post, we will see how Relayed Messaging works with a real time implementation.

How Relayed Messaging Works:

Relayed Messaging communication is accomplished through a central Relay Service, which provides connectivity between the service and the client. Below is the architecture of how Service Bus works.

As per the diagram, the service and client can sit in the same premises or in different premises. Communication happens as follows.

- When the service is hosted on an on-premises IIS server, it connects to the Azure relay service through an outbound port.

Some ports must be opened on the on-premises server for this connection, depending on the binding used with Service Bus. The following link describes the port configuration requirements for each binding:

http://msdn.microsoft.com/en-us/library/ee732535.aspx

- The service reaches the relay service through the on-premises firewall, so all the security policies applied to the firewall also apply to this connection.

- Once the relay service receives the request from the service, it creates a bidirectional socket connection with the service using a rendezvous address. The relay service uses this address for all communication with the service.

- When the client needs to communicate with the service, it first sends the request to the relay service.

- The relay service forwards the request to the service over the bidirectional socket connection already established at the rendezvous address.

- The service receives the request and returns the response to the relay service.

- The relay service returns the response to the client.

Here, the relay service acts as a mediator between service and client. Because the client never knows where the service is running, it cannot connect to the service directly on the initial request. But once an initial connection has been made through the relay service, the client can communicate with the service directly using the Hybrid connection mode.
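The steps above reduce to a lookup: the relay knows each service only by its rendezvous address and forwards client requests over the connection the service opened. A minimal in-memory sketch under that assumption; `RelayService`, the `sb://contoso/orders` address and the lambda standing in for the on-premises service are all hypothetical, and this is not the Service Bus or WCF API.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Conceptual model of the relayed-messaging flow (not the Service Bus/WCF API).
public class RelaySketch {

    // The cloud relay: it knows services by rendezvous address, never by network location.
    static final class RelayService {
        private final Map<String, Function<String, String>> connections = new HashMap<>();

        // The on-premises service connects outbound and registers under a rendezvous address.
        void register(String rendezvousAddress, Function<String, String> serviceConnection) {
            connections.put(rendezvousAddress, serviceConnection);
        }

        // The client sends to the relay, which forwards over the existing connection
        // and hands the service's response back to the client.
        String send(String rendezvousAddress, String request) {
            Function<String, String> service = connections.get(rendezvousAddress);
            if (service == null) {
                throw new IllegalStateException("No service listening at " + rendezvousAddress);
            }
            return service.apply(request);
        }
    }

    public static void main(String[] args) {
        RelayService relay = new RelayService();
        // Hypothetical on-premises service answering from data it holds locally.
        relay.register("sb://contoso/orders", req -> "status of " + req + ": shipped");
        // The client knows only the public address, not where the service runs.
        System.out.println(relay.send("sb://contoso/orders", "order-42")); // status of order-42: shipped
    }
}
```

Because the service dialed out first, nothing on the on-premises side has to accept inbound connections, which is why the firewall policies stay intact.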

This architecture provides more flexibility for controlling and managing the communications. With this model, it is easy to communicate with one-way messaging, request-response, publish-subscribe (multicast), and asynchronous messaging using message buffers.

Security in Service Bus

Because the service is published at a public endpoint and anyone can consume it over the Internet, how is it secured? And because it exposes critical data publicly, how can access be controlled? Several factors come into the picture here:

Service Bus services are exposed to the public with endpoint addresses, a secret name and a key. Third-party vendors consume the service at the public endpoint using the secret name and key values it was exposed with. If the organization suspects that somebody is consuming the service without its knowledge, it can immediately stop that access by changing the secret key and sending the new key value to the genuine vendors. The whole service can also be stopped by stopping the web site in the on-premises IIS console where the service is hosted, so no one can consume it.

Another important point related to security is the Access Control Service (ACS). ACS is part of Azure AppFabric and provides identity and security for services using standard identity providers such as Windows Live ID, Google, Yahoo, Facebook and the organization's Active Directory. So end users can access the services using any of several identity providers.
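The key-rotation remedy described above works because authorization depends only on the key currently on record. A conceptual sketch under that assumption, not the ACS or Service Bus API: `SharedSecretGate` and the name and key values are hypothetical, while `MessageDigest.isEqual` is the JDK's standard constant-time byte comparison.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.util.HashMap;
import java.util.Map;

// Conceptual model of secret-name/key access control (not the ACS or Service Bus API).
public class SharedSecretGate {
    private final Map<String, String> keysBySecretName = new HashMap<>();

    // Setting a new key for a name replaces (and therefore revokes) the old one.
    void setKey(String secretName, String key) { keysBySecretName.put(secretName, key); }

    // True only if the caller presents the current key for its secret name.
    boolean authorize(String secretName, String presentedKey) {
        String current = keysBySecretName.get(secretName);
        if (current == null || presentedKey == null) return false;
        // Constant-time comparison so response timing does not reveal key contents.
        return MessageDigest.isEqual(
                current.getBytes(StandardCharsets.UTF_8),
                presentedKey.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        SharedSecretGate gate = new SharedSecretGate();
        gate.setKey("vendor", "old-key");
        System.out.println(gate.authorize("vendor", "old-key")); // true
        // Suspected misuse: rotate the key and send the new one only to genuine vendors.
        gate.setKey("vendor", "new-key");
        System.out.println(gate.authorize("vendor", "old-key")); // false
        System.out.println(gate.authorize("vendor", "new-key")); // true
    }
}
```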

For example, if a service is secured by Windows Live ID, it can be accessed after a successful login with Live ID credentials. ACS also provides security through ADFS integration with on-premises Active Directory, so any employee or customer of the organization can access the service from anywhere with their organization's AD credentials. This avoids creating and managing separate login credentials for each application, and employees don't need to remember many credentials.

This post gives an introduction to Service Bus and how Relayed Messaging works. For more information on Azure AppFabric Service Bus, please refer to the following links:

- http://www.microsoft.com/windowsazure/features/servicebus/

- http://msdn.microsoft.com/en-us/library/ee732537.aspx

In the next post, I will walk through a real-world implementation of Relayed Messaging with Service Bus.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

• Ted Heng described how to Deploy a Windows Azure Geographical Failover Solution in a 12/29/2011 post to his EMC Consulting blog:

Windows Azure provides a rich platform with adequate built-in features for failover and load balancing. For instance, when you develop a Web Role, you can deploy multiple load-balanced instances of the Web Role for high availability. On top of that, you can create policies in Traffic Manager to manage routing of incoming user requests so they will be routed to the Web Role located in the closest data center. Combining the built-in load-balancing feature with the Traffic Manager policies, you can develop a world-class geographical failover solution very quickly. Let’s get started on how you can do that.

Prerequisites

1. Visual Studio 2010

2. Windows Azure SDK (http://www.windowsazure.com/en-us/develop/net/)

3. I assume that you are able to create a Web Role cloud application

Deploy Multiple Instances of the Web Role

Once you have the Web Role application, you can change the instance count to 2 or more in ServiceConfiguration.Cloud.cscfg. The content would be something like this.

```xml
<?xml version="1.0" encoding="utf-8"?>
<ServiceConfiguration serviceName="TestSQLAzureOData" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration" osFamily="1" osVersion="*">
  <Role name="WCFServiceWebRole1">
    <Instances count="2" />
    <ConfigurationSettings>
      ...
    </ConfigurationSettings>
    <Certificates>
      <Certificate name="Microsoft.WindowsAzure.Plugins.RemoteAccess.PasswordEncryption" thumbprint="ABEE1F9453061DBAE8B364743736622639D12DCE" thumbprintAlgorithm="sha1" />
    </Certificates>
  </Role>
</ServiceConfiguration>
```
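Because the load balancing described here only helps when a role runs two or more instances, it can be handy to check the `Instances` count before publishing. A minimal sketch using the JDK's standard DOM parser; the `InstanceCountCheck` helper is hypothetical, and it assumes each `Role` element carries an `Instances` child in the ServiceConfiguration namespace shown above.

```java
import java.io.ByteArrayInputStream;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical pre-publish check: reads <Instances count="..."/> for each <Role> in a .cscfg file.
public class InstanceCountCheck {
    static final String NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration";

    static Map<String, Integer> instanceCounts(InputStream cscfg) throws Exception {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        factory.setNamespaceAware(true); // the elements live in the ServiceConfiguration namespace
        Document doc = factory.newDocumentBuilder().parse(cscfg);
        Map<String, Integer> counts = new LinkedHashMap<>();
        NodeList roles = doc.getElementsByTagNameNS(NS, "Role");
        for (int i = 0; i < roles.getLength(); i++) {
            Element role = (Element) roles.item(i);
            Element instances = (Element) role.getElementsByTagNameNS(NS, "Instances").item(0);
            if (instances == null) continue; // skip roles without an Instances element
            counts.put(role.getAttribute("name"), Integer.parseInt(instances.getAttribute("count")));
        }
        return counts;
    }

    public static void main(String[] args) throws Exception {
        String xml = "<ServiceConfiguration serviceName=\"TestSQLAzureOData\" xmlns=\"" + NS + "\">"
                + "<Role name=\"WCFServiceWebRole1\"><Instances count=\"2\" /></Role>"
                + "</ServiceConfiguration>";
        Map<String, Integer> counts =
                instanceCounts(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        // A single instance leaves nothing for the load balancer to fail over to.
        counts.forEach((role, n) ->
                System.out.println(role + ": " + n + (n < 2 ? " (no redundancy)" : " (load-balanced)")));
    }
}
```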

Next, you can deploy the Web Role via Visual Studio by publishing it to Windows Azure or you can also create a package and deploy it using the Windows Azure Portal (https://windows.azure.com). To publish to Windows Azure, click “Publish…” in the context menu in Solution Explorer.

If you have not already created a hosted service, you will need to create a hosted service located in a datacenter when you deploy the service.

The next step is to deploy the Web Role to another hosted service located in a different data center like so.

If the deployment is successful, we should now have 4 instances of the Web Role in two geographical datacenters, something like this.

Create a Routing Policy

Now, we will create a routing policy using the Windows Azure Traffic Manager. You can choose among Performance, Failover, and Round Robin policies. As discussed earlier, the Performance policy routes users’ requests to the nearest datacenter, yielding the best performance from a bandwidth perspective, and also provides failover in case one datacenter is down.
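The three policy types can be pictured with a small model. This is a conceptual sketch of how each policy might choose among deployments, not how Traffic Manager is implemented: the `Endpoint` class, health flags and latency figures are hypothetical, and Failover here simply means "first healthy endpoint in a configured priority order".

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Conceptual model of Performance, Failover and Round Robin routing (not Traffic Manager itself).
public class RoutingPolicySketch {
    static final class Endpoint {
        final String name;
        final boolean healthy;
        final int latencyMs; // hypothetical latency measured from the user's location

        Endpoint(String name, boolean healthy, int latencyMs) {
            this.name = name;
            this.healthy = healthy;
            this.latencyMs = latencyMs;
        }
    }

    enum Policy { PERFORMANCE, FAILOVER, ROUND_ROBIN }

    private int nextIndex = 0; // round-robin cursor

    // Every policy skips unhealthy endpoints; that is what provides failover.
    Optional<Endpoint> choose(Policy policy, List<Endpoint> endpoints) {
        List<Endpoint> healthy = new ArrayList<>();
        for (Endpoint candidate : endpoints) if (candidate.healthy) healthy.add(candidate);
        if (healthy.isEmpty()) return Optional.empty();
        switch (policy) {
            case PERFORMANCE: // lowest latency wins
                return healthy.stream().min(Comparator.comparingInt(ep -> ep.latencyMs));
            case FAILOVER: // first healthy endpoint in the list's priority order
                return Optional.of(healthy.get(0));
            case ROUND_ROBIN:
            default: // rotate through healthy endpoints
                Endpoint picked = healthy.get(nextIndex % healthy.size());
                nextIndex++;
                return Optional.of(picked);
        }
    }

    public static void main(String[] args) {
        RoutingPolicySketch tm = new RoutingPolicySketch();
        List<Endpoint> normal = Arrays.asList(
                new Endpoint("north-central-us", true, 40),
                new Endpoint("north-europe", true, 120));
        System.out.println(tm.choose(Policy.PERFORMANCE, normal).get().name); // north-central-us
        List<Endpoint> outage = Arrays.asList(
                new Endpoint("north-central-us", false, 40),
                new Endpoint("north-europe", true, 120));
        System.out.println(tm.choose(Policy.PERFORMANCE, outage).get().name); // north-europe
    }
}
```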

Once you have created the Traffic Manager policy successfully, your solution is complete. The complete policy would look something like this.

Conclusion

Creating a geographical failover solution on the Windows Azure platform takes only a few simple steps, yet it provides a robust and viable solution that would otherwise be very difficult and expensive to build.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Arjen de Blok (@arjendeblok) posted a Windows Azure Diagnostics Viewer project to CodePlex on 12/21/2011 (missed when published):

Provides an easy UI for viewing Windows Azure Diagnostics tables in Windows Azure Table Storage.

There are two applications available: a Windows application and a console application.

Both applications need the full .NET 4.0 Framework.

The connection parameters for Windows Azure Table Storage must be set in the application.config files.

I use Cerebrata’s Azure Diagnostics Manager (I was given a free copy) and haven’t tried Arjen’s project yet.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

• Paul Patterson posted Microsoft LightSwitch – Championing the Citizen Developer on 12/31/2011:

I recently watched a great webcast by Rich Dudley in which Rich made some very interesting points about what Gartner Research calls “Citizen Developers”. I was immediately intrigued by this Gartner information so I dug a little deeper into this citizen developer thing, and here is what I found…

According to Gartner’s report titled “Citizen Developers Are Poised to Grow,” “Citizen developers will be building at least a quarter of new business applications by 2014…”.

Represented by about 6 million information workers, these “latent” application developers make up the same target market as Microsoft Visual Studio LightSwitch.

Very interesting, indeed!

“Citizen Developers” probably will be first to take advantage of Microsoft Codenames “Data Explorer” and “Social Analytics” also.

• Ayende Rahien (@ayende) panned the Northwind.net project’s Entity Framework code in an Application analysis: Northwind.NET post of 12/30/2011:

For an article I am writing, I wanted to compare a RavenDB model to a relational model, and I stumbled upon the following Northwind.NET project.

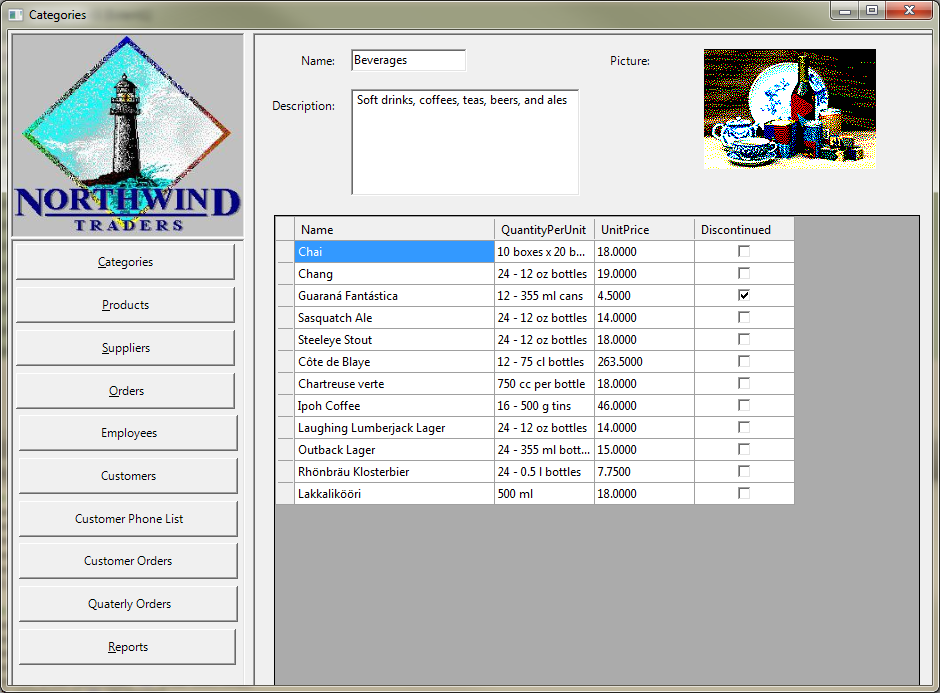

I plugged in the Entity Framework Profiler and set out to watch what was going on. To be truthful, I expected it to be bad, but I honestly did not expect what I got. Here is a question, how many queries does it take to render the following screen?

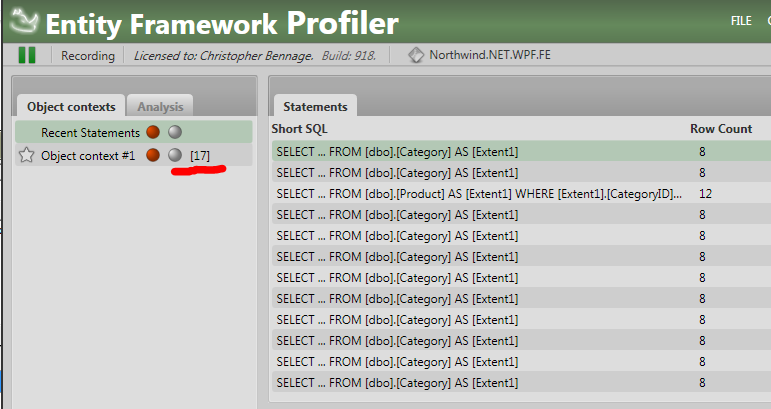

The answer, believe it or not, is 17:

You might have noticed that most of the queries look quite similar, and indeed, they are. We are talking about 16(!) identical queries:

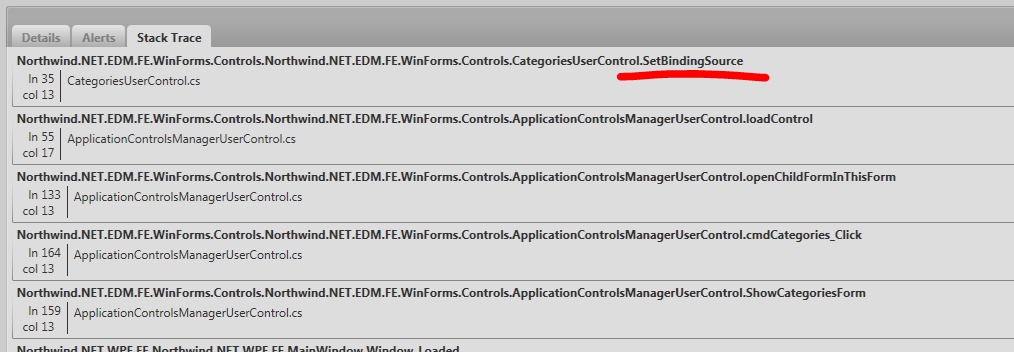

```sql
SELECT [Extent1].[ID] AS [ID],
       [Extent1].[Name] AS [Name],
       [Extent1].[Description] AS [Description],
       [Extent1].[Picture] AS [Picture],
       [Extent1].[RowTimeStamp] AS [RowTimeStamp]
FROM [dbo].[Category] AS [Extent1]
```

Looking at the stack trace for one of those queries led me to:

And to this piece of code:

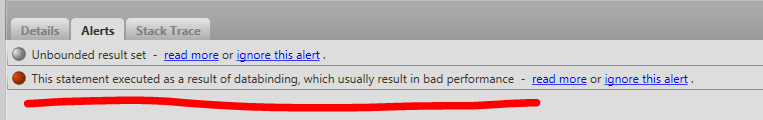

You might note that dynamic is used there, for what reason, I cannot even guess. Just to check, I added a ToArray() to the result of GetEntitySet, and the number of queries dropped from 17 to 2, which is more reasonable. The problem was that we passed an IQueryable to the data binding engine, which ended up evaluating the query multiple times.
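The same trap exists wherever a query is evaluated lazily. As a rough Java analogy (not Entity Framework code), the sketch below counts how often a hypothetical deferred query runs when a hypothetical binding routine enumerates it repeatedly, and how materializing it once, the equivalent of the ToArray() call, reduces that to a single execution. The pass count of 16 mirrors the identical queries above but is otherwise arbitrary.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Java analogy (not EF code): a lazily evaluated "query" runs again on every enumeration.
public class DeferredQuerySketch {
    static int queriesExecuted = 0;

    // Hypothetical stand-in for an IQueryable: each call to iterator() "hits the database".
    static Iterable<String> categoriesQuery() {
        return () -> {
            queriesExecuted++; // one round trip per enumeration
            return Arrays.asList("Beverages", "Condiments", "Seafood").iterator();
        };
    }

    // Hypothetical data-binding engine that walks its source several times.
    static void bind(Iterable<String> source, int passes) {
        for (int i = 0; i < passes; i++) {
            for (String ignored : source) {
                // render a row
            }
        }
    }

    // Materializing once (like ToArray()) turns later passes into in-memory reads.
    static List<String> materialize(Iterable<String> query) {
        List<String> result = new ArrayList<>();
        for (String s : query) result.add(s);
        return result;
    }

    public static void main(String[] args) {
        bind(categoriesQuery(), 16);
        System.out.println("lazy source: " + queriesExecuted); // 16
        queriesExecuted = 0;
        bind(materialize(categoriesQuery()), 16);
        System.out.println("materialized: " + queriesExecuted); // 1
    }
}
```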

And EF Prof actually warns about that, too:

At any rate, I am afraid that this project suffers from similar issues all around; it is actually too bad to serve as the bad example that I intended it to be.

Entity Framework Profiler is a product of Hibernating Rhinos, Ayende’s company.

Julie Lerman (@julielerman) reported Oracle Releases Provider with EF 4 Support (but not really EF 4.1 and EF4.2) in a 12/30/2011 post:

Oracle has finally released its version of ODP.NET that supports Entity Framework for use in production environments.

And they report that this release supports EF 4.1 and EF 4.2. That should mean Code First and DbContext, but thanks to Frans Bouma sending me the limitations doc (which is part of the installation), I found that they aren’t supported!

7. ODP.NET 11.2.0.3 does not support Code First nor the DbContext APIs.

So, that means “no EF 4.1 and EF 4.2 support” in my opinion.

I’ll follow up with Oracle on clarification.

More from Oracle here: http://www.oracle.com/technetwork/topics/dotnet/whatsnew/index.html

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

• Bart Robertson (@bartr) posted Another Availability Story on New Years Eve:

In our recently updated case study - http://aka.ms/sxp, we write about our great experiences with Windows Azure deployments and how confident we are in our ability to deploy updates quickly with no impact to our customers. Since our services are powering parts of Microsoft.com and are receiving millions of requests per day, any downtime is highly visible. In our case study, we write:

Availability

With Windows Azure, SXP service releases are literally push-button activities with zero planned downtime. The SXP team uses the Virtual IP (VIP) Swap feature on the Windows Azure Platform Management Portal to promote its staging environment to a production environment. Service releases have occurred approximately every six weeks since SXP launched in April 2010. The process on Windows Azure is so refined and so simple that the team no longer accounts for service releases when anticipating events that may cause downtime ...

We put this theory to the test earlier this week. Most of our engineering and operations team were on vacation for the holidays when the recent ASP.NET security advisory was published (http://technet.microsoft.com/en-us/security/advisory/2659883). This seemed like a pretty significant issue but fortunately had a straightforward workaround that we chose to implement. Engineering made the code changes, built the packages, and ran our test suite against the new services.

Operations was able to patch 3 services in 16 minutes without missing a single request. This is another data point in the ease of running services on Windows Azure and one more reason we prefer to deploy on Windows Azure whenever possible.
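Why a VIP swap misses no requests can be modeled simply: the public virtual IP always points at exactly one complete deployment, and the swap just exchanges which deployment sits behind production and which behind staging. The sketch below is a conceptual illustration under that assumption, not how Windows Azure performs the swap; the `Deployment` class and version labels are hypothetical.

```java
import java.util.concurrent.atomic.AtomicReference;

// Conceptual model of a VIP swap between staging and production (not the Windows Azure implementation).
public class VipSwapSketch {
    // Hypothetical deployment: a version label plus a request handler.
    static final class Deployment {
        final String version;
        Deployment(String version) { this.version = version; }
        String handle(String request) { return version + " handled " + request; }
    }

    private final AtomicReference<Deployment> production;
    private final AtomicReference<Deployment> staging;

    VipSwapSketch(Deployment prod, Deployment stage) {
        this.production = new AtomicReference<>(prod);
        this.staging = new AtomicReference<>(stage);
    }

    // Requests to the public VIP go to whichever complete deployment is in production right now.
    String route(String request) { return production.get().handle(request); }

    // Staging becomes production; the old production deployment stays in staging for rollback.
    synchronized void swap() {
        Deployment oldProduction = production.get();
        production.set(staging.get());
        staging.set(oldProduction);
    }

    public static void main(String[] args) {
        VipSwapSketch vip = new VipSwapSketch(new Deployment("v1"), new Deployment("v2-patched"));
        System.out.println(vip.route("GET /")); // v1 handled GET /
        vip.swap();
        System.out.println(vip.route("GET /")); // v2-patched handled GET /
    }
}
```

Since `production` never holds anything but a fully deployed version, a request arriving during the swap is served by either the old or the new version, never by neither.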

Bart is a cloud architect with Microsoft Corporation, where he is responsible for architecting and implementing highly scalable, on-premise and cloud-based software plus services solutions for one of the largest websites in the world.

Damon Edwards (@damonedwards) described the Value of DevOps Culture: It's not just hugs and kumbaya in a 12/29/2011 post to his dev2ops blog:

The importance of culture is a recurring theme in most DevOps discussions. It's often cited as the thing you should start with and the thing you should worry about the most.

But other than the rather obvious idea that it's beneficial for any company to have a culture of trust, communication, and collaboration... can using DevOps thinking to change your culture actually provide a distinct business advantage?

Let's take the example of Continuous Deployment (or its sibling, Continuous Delivery). This is an operating model that embodies a lot of the ideals that you'll hear about in DevOps circles and is impossible to properly implement if your org suffers from DevOps problems.

Continuous Deployment is not just a model where companies can release services quicker and more reliably (if you don't understand why that is NOT a paradox, please go read more about Continuous Deployment). Whether or not you think it could work for your organization, Continuous Deployment is a model that has been proven to unleash the creative and inventive potential of other organizations. Because of this, Continuous Deployment is a good proxy for examining the effects of solving DevOps problems.

Eric Ries sums it up better than I can when he describes the transformative effect that takes place the further you can reduce the cost, friction, and time between releases (i.e. tests to see if you can better please the customer).

"When you have only one test, you don’t have entrepreneurs, you have politicians, because you have to sell. Out of a hundred good ideas, you’ve got to sell your idea. So you build up a society of politicians and salespeople. When you have five hundred tests you’re running, then everybody’s ideas can run. And then you create entrepreneurs who run and learn and can retest and relearn as opposed to a society of politicians."

-Eric Ries

The Lean Startup (pg. 33)

That's a business advantage. That's value derived from a DevOps-style change in culture.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

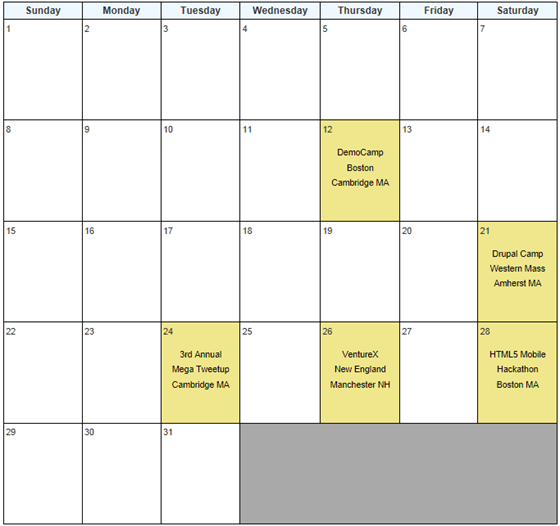

Jim O’Neil published his January Tech Calendar on 12/30/2011:

The calendar looks a bit lean at the moment, but I suspect a few more additions will occur as people (and organizers!) return from the holiday break. Keep in mind too there are plenty of user groups, meetups, and other regularly occurring events to keep you busy!

Read Jim’s entire post to gain access to live links.

Michael Collier (@MichaelCollier) reported that he’s Looking forward to CodeMash 2012 in a 12/29/2011 post:

I’ve spent some time this week looking back on 2011 and preparing for a few initiatives I want to get done in early 2012. As I was looking through my calendar for the next month, I noticed a nice gem coming up in a few weeks – CodeMash 2012!

I’ve been attending CodeMash since the conference’s start in 2007. It’s been really exciting to see the conference grow from a somewhat small regional conference to what is really now a fairly major national event. Heck, CodeMash sold out in around 20 minutes this year!! Attendees come from all over the U.S. I believe some speakers are even coming from overseas this year. All this for a technology conference in Sandusky, OH in January – wow!!!

So why am I so excited about CodeMash again this year? For starters, just take a look at the session line up! There are some great speakers and topics. I’m starting to prep my “must see” list for CodeMash, and a few sessions I’m really looking forward to checking out are:

- Introduction to SignalR (Brady Gaster)

- What the heck are they doing over there? Inside the Microsoft Web Stack of Love (Scott Hanselman)

- Introducing Heroku – The Polyglot Cloud Application Platform (Sandeep Bhanot)

- Effective Data Visualization: The Ideas of Edward Tufte (David Giard)

- Breaking the Barrier with Node.js on Windows and Azure (Glenn Block)

- Blazing Fast Backend Services using Node.js and MongoDB (Mark Gustetic)

- PHP with Windows Azure (Brent Stineman)

- Android: Where You Can Stick Your Data (Ted Neward & Jessica Kerr)

The keynote sessions by Ted Neward and Barry Hawkins also look to be really great! Besides the great sessions and keynotes, the side hallway conversations with the crazy smart CodeMash attendees are always invaluable! Some would go so far as to say it is the side conversations themselves that are the reason they attend CodeMash.

This year I’m happy to be attending CodeMash as a member of Neudesic. When I joined Neudesic earlier this year, I made it a point to spread the word about CodeMash. I’m very pleased to have a few of my Neudesic colleagues joining me at CodeMash this year. Joining me this year are Monish Nagisetty, Bryce Calhoun, and Ted Neward. All of us are really looking forward to meeting all the smart folks at CodeMash and experiencing all that CodeMash has to offer . . . like the bacon bar!

If you would like some quality one-on-one time at CodeMash with Ted Neward, or with other Neudesic rock stars who can answer your most pressing questions, I’d definitely encourage you to set it up. Ted’s always a blast to talk with! He’s a crazy smart guy with great insights into today’s technology issues. Kelli Piepkow is coordinating all the logistics for those meetings, and you can reach her here.

The CodeMash countdown is on. Less than 2 weeks to go!!

<Return to section navigation list>

Other Cloud Computing Platforms and Services

No significant articles today.

<Return to section navigation list>