A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles.

No significant articles today.

Dare Obasanjo suggested Learning from our Mistakes: The Failure of OpenID, AtomPub and XML on the Web (including OData) in a 1/30/2011 post:

I’ve now been working and blogging about web technology long enough to see technologies that we once thought were the best thing since sliced bread turn out to be rather poor solutions to the problem or even worse that they create more problems than they solve. Since I’ve written favorably about all of the technologies mentioned below this is also a mea culpa where I try to see what I can learn about judging the suitability of technologies to solving problems on the web without being blinded by the hype from the “cool kids” on the web.

The Failure of OpenID

According to Wikipedia, “OpenID is an open standard that describes how users can be authenticated in a decentralized manner, obviating the need for services to provide their own ad hoc systems and allowing users to consolidate their digital identities”. So the problem that OpenID solves is having to create multiple accounts on different websites; instead, you re-use an identity from the identity provider (i.e. website) of your choice. OpenID was originally invented in 2005 by Brad Fitzpatrick to solve the problem of bloggers having to create an account on a person’s weblog or blogging service before being able to leave a comment.

OpenID soon grew beyond its blog-centric origins and has had a number of the big name web companies either implement it in some way or be active in its community. Large companies and small companies alike have been lobbied to implement OpenID and accused of not being “open” when they haven’t immediately jumped on the bandwagon. However now that we’ve had five years of OpenID, there are a number of valid problems that have begun to indicate the emperor may either have no clothes or at least is just in his underwear.

The most recent set of hand wringing about the state of OpenID has been inspired by 37 Signals announcing they'll be retiring OpenID support but the arguments against OpenID have been gathering steam for months if not years.

First of all, there have been the arguments that OpenID is too complex and yet doesn't have enough features from people who’ve been supporting the technology for years like David Recordon. Here is an excerpt from David Recordon’s writings on the need for an OpenID Connect

In 2005 I don't think that Brad Fitzpatrick or I could have imagined how successful OpenID would become. Today there are over 50,000 websites supporting it and that number grows into the millions if you include Google FriendConnect. There are over a billion OpenID enabled URLs and production implementations from the largest companies on the Internet.

…

We've heard loud and clear that sites looking to adopt OpenID want more than just a unique URL; social sites need basic things like your name, photo, and email address. When Joseph Smarr and I built the OpenID/OAuth hybrid we were looking for a way to provide that functionality, but it proved complex to implement. So now there's a simple JSON User Info API similar to those already offered by major social providers.

We have also heard that people want OpenID to be simple. I've heard story after story from developers implementing OpenID 2.0 who don't understand why it is so complex and inevitably forgot to do something. With OpenID Connect, discovery no longer takes over 3,000 lines of PHP to implement correctly. Because it's built on top of OAuth 2.0, the whole spec is fairly short and technology easy to understand. Building on OAuth provides amazing side benefits such as potentially being the first version of OpenID to work natively with desktop applications and even on mobile phones.

50,000 websites sounds like a lot until you think about the fact that Facebook Connect which solves a similar problem had been adopted by 250,000 websites during the same time frame and had been around less than half as long as OpenID. It’s also telling to ask yourself how often you as an end user actually have used OpenID or even seen that it is available on a site.

The reason why you can count the instances you’ve had this occur on one or two hands is eloquently articulated in Yishan Wong’s answer to the question What's wrong with OpenID on Quora, which is excerpted below:

The short answer is that OpenID is the worst possible "solution" I have ever seen in my entire life to a problem that most people don't really have. That's what's "wrong" with it.

To answer the most immediate question of "isn't having to register and log into many sites a big problem that everyone has?," I will say this: No, it's not. Regular normal people have a number of solutions to this problem. Here are some of them:

- use the same username/password for multiple sites

- use their browser's ability to remember their password (enabled by default)

- don't register for the new site

- don't ever log in to the site

- log in once, click "remember me"

- click the back button on their browser and never come back to the site

- maintain a list of user IDs and passwords in an offline document

These are all perfectly valid solutions that a regular user finds acceptable. A nerd will wrinkle up his nose at these solutions and grumble about the "security vulnerabilities" (and they'll be right, technically) but the truth is that these solutions get people into the site and doing what they want and no one really cares about security anyways. On the security angle, no one is going to adopt a product to solve a problem they don't care about (or in many cases, even understand).

…

The fact that anyone even expects that OpenID could possibly see any amount of adoption is mind-boggling to me. Proponents are literally expecting people to sign up for yet another third-party service, in some cases log in by typing in a URL, and at best flip away to another branded service's page to log in and, in many cases, answer an obscurely-worded prompt about allowing third-party credentials, all in order to log in to a site. This is the height of irony - in order to ease my too-many-registrations woes, you are asking me to register yet again somewhere else?? Or in order to ease my inconvenience of having to type in my username and password, you are having me log in to another site instead??

Not only that, but in the cases where OpenID has been implemented without the third-party proxy login, the technical complexity behind what is going on in terms of credential exchange and delegation is so opaque that even extremely sophisticated users cannot easily understand it (I have literally had some of Silicon Valley's best engineers tell me this). At best, a re-directed third-party proxy login is used, which is the worst possible branding experience known on the web - discombobulating even for savvy internet users and utterly confusing for regular users. Even Facebook Connect suffers from this problem - people think "Wait, I want to log into X, not Facebook..." and needs to overcome it by making the brand and purpose of what that "Connect with Facebook" button ubiquitous in order to overcome the confusion.

I completely agree with Yishan’s analysis here. Not only does OpenID complicate the sign-in/sign-up experience for sites that adopt it, but it is also hard to confidently make the argument that end users actually consider the problem OpenID is trying to solve to be worth the extra complication.

The Failure of XML on the Web

At the turn of the last decade, XML could do no wrong. There was no problem that couldn’t be solved by applying XML to it and every technology was going to be replaced by it. XML was going to kill HTML. XML was going to kill CORBA, EJB and DCOM as we moved to web services. XML was a floor wax and a dessert topping. Unfortunately, after over a decade it is clear that XML has not and is unlikely to ever be the dominant way we create markup for consumption by browsers or how applications on the Web communicate.

James Clark has XML vs the Web where he talks about this grim realization

Twitter and Foursquare recently removed XML support from their Web APIs, and now support only JSON. This prompted Norman Walsh to write an interesting post, in which he summarised his reaction as "Meh". I won't try to summarise his post; it's short and well-worth reading.

From one perspective, it's hard to disagree. If you're an XML wizard with a decade or two of experience with XML and SGML before that, if you're an expert user of the entire XML stack (eg XQuery, XSLT2, schemas), if most of your data involves mixed content, then JSON isn't going to be supplanting XML any time soon in your toolbox.

…

There's a bigger point that I want to make here, and it's about the relationship between XML and the Web. When we started out doing XML, a big part of the vision was about bridging the gap from the SGML world (complex, sophisticated, partly academic, partly big enterprise) to the Web, about making the value that we saw in SGML accessible to a broader audience by cutting out all the cruft. In the beginning XML did succeed in this respect. But this vision seems to have been lost sight of over time to the point where there's a gulf between the XML community and the broader Web developer community; all the stuff that's been piled on top of XML, together with the huge advances in the Web world in HTML5, JSON and JavaScript, have combined to make XML be perceived as an overly complex, enterprisey technology, which doesn't bring any value to the average Web developer.

This is not a good thing for either community (and it's why part of my reaction to JSON is "Sigh"). XML misses out by not having the innovation, enthusiasm and traction that the Web developer community brings with it, and the Web developer community misses out by not being able to take advantage of the powerful and convenient technologies that have been built on top of XML over the last decade.

So what's the way forward? I think the Web community has spoken, and it's clear that what it wants is HTML5, JavaScript and JSON. XML isn't going away but I see it being less and less a Web technology; it won't be something that you send over the wire on the public Web, but just one of many technologies that are used on the server to manage and generate what you do send over the wire.

The fact that XML based technologies are no longer required tools in the repertoire of the Web developer isn’t news to anyone who follows web development trends. However it is interesting to look back and consider that there was once a time when the W3C and the broader web development community assumed this was going to be the case. The reasons for its failure on the Web are self evident in retrospect.

There have been many articles published about the failure of XML as a markup language over the past few years. My favorites being Sending XHTML as text/html Considered Harmful and HTML5, XHTML2, and the Future of the Web which do a good job of capturing all of the problems with using XML with its rules about draconian error handling on the web where ill-formed, hand authored markup and non-XML savvy tools rule the roost.

As for XML as the protocol for intercommunication between Web apps, the simplicity of JSON over the triumvirate of SOAP, WSDL and XML Schema is so obvious it is almost ridiculous to have to point it out.

The Specific Failure of the Atom Publishing Protocol

Besides the general case of the failure of XML as a data interchange format for web applications, I think it is still worthwhile to call out the failure of the Atom Publishing Protocol (AtomPub) which was eventually declared a failure by the editor of the spec, Joe Gregorio. AtomPub arose from the efforts of a number of geeks to build a better API for creating blog posts. The eventual purpose of AtomPub was to create a generic application programming interface for manipulating content on the Web. In his post titled AtomPub is a Failure, Joe Gregorio discussed why the technology failed to take off as follows

So AtomPub isn't a failure, but it hasn't seen the level of adoption I had hoped to see at this point in its life. There are still plenty of new protocols being developed on a seemingly daily basis, many of which could have used AtomPub, but don't. Also, there is a large amount of AtomPub being adopted in other areas, but that doesn't seem to be getting that much press, ala, I don't see any Atom-Powered Logo on my phones like Tim Bray suggested.

So why hasn't AtomPub stormed the world to become the one true protocol? Well, there are three answers:

- Browsers

- Browsers

- Browsers

…

Thick clients, RIAs, were supposed to be a much larger component of your online life. The cliche at the time was, "you can't run Word in a browser". Well, we know how well that's held up. I expect a similar lifetime for today's equivalent cliche, "you can't do photo editing in a browser". The reality is that more and more functionality is moving into the browser and that takes away one of the driving forces for an editing protocol. Another motivation was the "Editing on the airplane" scenario. The idea was that you wouldn't always be online and when you were offline you couldn't use your browser. The part of this cliche that wasn't put down by Virgin Atlantic and Edge cards was finished off by Gears and DVCS's.

…

The last motivation was for a common interchange format. The idea was that with a common format you could build up libraries and make it easy to move information around. The 'problem' in this case is that a better format came along in the interim: JSON. JSON, born of Javascript, born of the browser, is the perfect 'data' interchange format, and here I am distinguishing between 'data' interchange and 'document' interchange. If all you want to do is get data from point A to B then JSON is a much easier format to generate and consume as it maps directly into data structures, as opposed to a document oriented format like Atom, which has to be mapped manually into data structures and that mapping will be different from library to library.

As someone who has tried to both use and design APIs based on the Atom format, I have to agree that it is painful to have to map your data model to what is effectively a data format for blog entries instead of keeping your existing object model intact and using a better suited format like JSON.
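The mapping difference described above can be sketched in a few lines of C#. This is only an illustration under stated assumptions: the `Customer` type, the `InterchangeSketch` class and the choice to carry the number in `atom:id` and the name in `atom:title` are all hypothetical, and the JSON side assumes the `System.Text.Json` serializer that ships with current .NET versions (it did not exist when these posts were written).

```csharp
using System;
using System.Text.Json;
using System.Xml.Linq;

// Hypothetical data object used only for this illustration.
public sealed class Customer
{
    public string No { get; set; }
    public string Name { get; set; }
}

public static class InterchangeSketch
{
    // JSON: the payload's shape already matches the object, so the
    // serializer can populate it without any hand-written mapping.
    public static Customer FromJson(string json)
    {
        return JsonSerializer.Deserialize<Customer>(json);
    }

    // Atom: the data has to be squeezed into blog-entry elements, and the
    // reader must know which element carries which field. The id/title
    // convention below is an assumption; another library could pick another.
    public static Customer FromAtom(string atomEntryXml)
    {
        XNamespace atom = "http://www.w3.org/2005/Atom";
        XElement entry = XDocument.Parse(atomEntryXml).Root;
        return new Customer
        {
            No = (string)entry.Element(atom + "id"),
            Name = (string)entry.Element(atom + "title"),
        };
    }
}
```

Both methods return the same object for equivalent payloads; the point is that only the Atom version needs a per-format mapping convention that every consumer must agree on.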

The Common Pattern in these Failures

When I look at all three of these failures I see a common pattern which I’ll now be on the look out for when analyzing the suitability of technologies for my purposes. In each of these cases, the technology was designed for a specific niche with the assumption that the conditions that applied within that niche were general enough that the same technology could be used to solve a number of similar looking but very different problems.

- The argument for OpenID is a lot stronger when limiting the audience to bloggers who all have a personal URL for their blog AND where it would actually be a burden to sign up for an account on the millions of self hosted blogs out there. However it isn’t true that the same set of conditions applies universally when trying to log-in or sign-up for the handful of websites I use regularly enough to decide I want to create an account.

- XML arose from the world of SGML where experts created custom vocabularies for domain-specific purposes such as DocBook and EDGAR. The world of novices creating markup documents in a massively decoupled environment such as the Web needed a different set of underlying principles.

- AtomPub assumed that the practice of people creating blog posts via custom blog editing tools (like the one I’m using to write this post) would be a practice that would spread to other sorts of web content and that these forms of web content wouldn’t be much distinguishable from blog posts. It turns out that most of our content editing still takes place in the browser and in the places where we do actually utilize custom tools (e.g. Facebook & Twitter clients), an object-centric domain specific data format is better than an XML-centric blog based data format.

So next time you’re evaluating a technology that is being much hyped by the web development blogosphere, take a look to see that the fundamental assumptions that led to the creation of the technology actually generalize to your use case. One example that comes to mind, where developers should consider this sort of evaluation given the blogosphere hype, is NoSQL.

Fortunately, WCF Data Services supports both JSON and AtomPub formats for RESTful SQL Azure queries.

Freddy Kristiansen continued his NAV series with Connecting to NAV Web Services from the Cloud–part 4 out of 5 on 1/27/2011:

If you haven’t already read part 3 you should do so here, before continuing to read this post.

By now you have seen how to create a WCF Service Proxy connected to NAV with an endpoint hosted on the Servicebus (Windows Azure AppFabric). So far, I haven’t written anything about security, and the Proxy1 which is hosted on the Servicebus is available for everybody to connect to anonymously.

In the real world, I cannot imagine a lot of scenarios where a proxy which can be accessed anonymously will be able to access and retrieve data from your ERP system, so we need to look into this and there are a lot of different options – but unfortunately, a lot of these don’t work from Windows Phone 7.

ACS security on Windows Azure AppFabric

The following links point to documents describing how to secure services hosted on the Servicebus through the Access Control Service (ACS) built into Windows Azure AppFabric.

A lot of good stuff and if you (like me) try to dive deep into some of these topics, you will find that using this technology, you can secure services without changing the signature of your service. Basically you are using claim based security, asking a service for a token with which you can connect to a service. You get a token back (which will be valid for a given timeframe) and while this token is valid you can call the service by specifying this token when calling the service.
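The token pattern described here (ask for a token, reuse it while it is valid, ask again once it has expired) can be sketched roughly as follows. This is not the ACS or Servicebus API: `IssuedToken`, `TokenCache` and the two delegates are hypothetical names, and how the token is actually requested and attached to a call is left to the caller.

```csharp
using System;

// Hypothetical token value object: the token text plus its expiry time.
public sealed class IssuedToken
{
    public IssuedToken(string value, DateTime expiresUtc)
    {
        Value = value;
        ExpiresUtc = expiresUtc;
    }

    public string Value { get; }
    public DateTime ExpiresUtc { get; }
}

// Reuses a token for as long as it is valid and requests a new one afterwards.
public sealed class TokenCache
{
    private readonly Func<IssuedToken> _requestToken; // e.g. a call to the token-issuing service
    private readonly Func<DateTime> _utcNow;          // injectable clock, for testing
    private IssuedToken _current;

    public TokenCache(Func<IssuedToken> requestToken, Func<DateTime> utcNow)
    {
        _requestToken = requestToken ?? throw new ArgumentNullException(nameof(requestToken));
        _utcNow = utcNow ?? throw new ArgumentNullException(nameof(utcNow));
    }

    public string GetToken()
    {
        // Request a new token only when none is held or the held one has expired.
        if (_current == null || _utcNow() >= _current.ExpiresUtc)
            _current = _requestToken();
        return _current.Value;
    }
}
```

A caller would pass `() => DateTime.UtcNow` as the clock and wrap its real token request in the first delegate; the service signature itself stays unchanged, which is the benefit the paragraph above points out.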

This functionality has two problems:

- It doesn’t work with Windows Phone 7 (nor any other mobile device) – YET! In the future, it probably will – but nobody made a servicebus assembly for Windows Phone, PHP, Java, iPhone or any of those. See also: http://social.msdn.microsoft.com/Forums/en-US/netservices/thread/12660485-86fb-4b4c-9417-d37fe183b4e1

- Often we need to connect to NAV with different A/D users depending on which user is connecting from the outside.

I am definitely not a security expert – but whatever security system I want to put in place must support the following two basic scenarios:

- My employees, who are using Windows Phone 7 (or other applications), need to authenticate to NAV using their own domain credentials – to have the same permissions in NAV as they have when running the Client.

- Customers and vendors should NOT have a username and a password for a domain user in my network, but I still want to control which permissions they have in NAV when connecting.

Securing the access to NAV

I have created the following poor man’s authentication to secure the access to NAV and make sure that nobody gets past the Proxy unless they have a valid username and password. I will of course always host the endpoint on a secure connection (https://) and the easiest way around adding security is to extend the methods in the proxy to contain a username and a password – like:

    [ServiceContract]
    public interface IProxyClass
    {
        [OperationContract]
        string GetCustomerName(string username, string password, string No);
    }

This username and password could then be a domain user and a domain password, but depending on the usage this might not be the best of ideas.

In the case of the Windows Phone application, where a user is connecting to NAV through a proxy, I would definitely use the domain username and password (like it is the case with Outlook on the Windows Phone). In the case, where an application on a customer/vendor site should connect to your local NAV installation over the Servicebus – it doesn’t seem like a good idea to create a specific domain user and password for this customer/vendor and maintain that.

In my sample here – I will allow both. Basically I will just say, that if the username is of the format domain\user – then I will assume that this is a domain user and password – and use that directly as credentials on the service connection to NAV.

If the username does NOT contain a backslash, then I will look up this username and password combination in a table and find the domain, username and password that should be used for this connection. This table can either be a locally hosted SQL Express – or it could be a table in NAV and then I could access this through another web service. I have done the latter.

In NAV, I have created a table called AuthZ:

This table will map usernames and passwords to domain usernames and passwords.

As a special option I have added a UseDefault – which basically means that the Service will use default authentication to connect to NAV, which again would be the user which is running the Service Host Console App or Windows Service. I have filled in some data into this table:

and created a Codeunit called AuthZ (which I have exposed as a Web Service as well):

    GetDomainUserPassword(VAR Username : Text[80];VAR Password : Text[80];VAR Domain : Text[80];VAR UseDefault : Boolean) : Boolean
    IF NOT AuthZ.GET(Username) THEN
    BEGIN
      AuthZ.INIT;
      AuthZ.Username := Username;
      AuthZ.Password := CREATEGUID;
      AuthZ.Disabled := TRUE;
      AuthZ.Attempts := 1;
      AuthZ.AllTimeAttempts := 1;
      AuthZ.INSERT();
      EXIT(FALSE);
    END;
    IF AuthZ.Password <> Password THEN
    BEGIN
      AuthZ.Attempts := AuthZ.Attempts + 1;
      AuthZ.AllTimeAttempts := AuthZ.AllTimeAttempts + 1;
      IF AuthZ.Attempts > 3 THEN
        AuthZ.Disabled := TRUE;
      AuthZ.MODIFY();
      EXIT(FALSE);
    END;
    IF AuthZ.Disabled THEN
      EXIT(FALSE);
    IF AuthZ.Attempts > 0 THEN
    BEGIN
      AuthZ.Attempts := 0;
      AuthZ.MODIFY();
    END;
    UseDefault := AuthZ.UseDefault;
    Username := AuthZ.DomainUsername;
    Password := AuthZ.DomainPassword;
    Domain := AuthZ.DomainDomain;
    EXIT(TRUE);

As you can see, the method will log the number of attempts done on a given user and it will automatically disable a user if it has more than 3 failed attempts. In my sample, I actually create a disabled account for attempts to use wrong usernames – you could discuss whether or not this is necessary.
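For readers who do not read C/AL, the attempt counting and lockout rule can be restated as a small in-memory C# sketch. It only mirrors the logic of the Codeunit for an existing record; `AuthZRecord` and `LockoutSketch` are hypothetical names, nothing here talks to NAV, and the step that creates a disabled record for unknown usernames is left out.

```csharp
using System;

// Hypothetical in-memory stand-in for one row of the AuthZ table.
public sealed class AuthZRecord
{
    public string Password { get; set; }
    public bool Disabled { get; set; }
    public int Attempts { get; set; }
    public int AllTimeAttempts { get; set; }
}

public static class LockoutSketch
{
    public const int MaxFailedAttempts = 3;

    // Returns true only for a correct password on an account that is not disabled.
    public static bool TryLogin(AuthZRecord record, string password)
    {
        if (record.Password != password)
        {
            // Count the failure and disable the account after more than three in a row.
            record.Attempts++;
            record.AllTimeAttempts++;
            if (record.Attempts > MaxFailedAttempts)
                record.Disabled = true;
            return false;
        }

        if (record.Disabled)
            return false;

        // A successful login clears the consecutive-failure counter.
        record.Attempts = 0;
        return true;
    }
}
```

As in the Codeunit, a disabled account stays locked even when the correct password is supplied afterwards, so somebody has to re-enable it in the table.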

In my proxy (C#) I have added a method called Authenticate:

    private void Authenticate(SoapHttpClientProtocol service, string username, string password)
    {
        if (string.IsNullOrEmpty(username))
            throw new ArgumentNullException("username");
        if (username.Length > 80)
            throw new ArgumentException("username");
        if (string.IsNullOrEmpty(password))
            throw new ArgumentNullException("password");
        if (password.Length > 80)
            throw new ArgumentException("password");

        string[] creds = username.Split('\\');
        if (creds.Length > 2)
            throw new ArgumentException("username");

        if (creds.Length == 2)
        {
            // Username is given by domain\user - use this
            service.Credentials = new NetworkCredential(creds[1], password, creds[0]);
        }
        else
        {
            // Username is a simple username (no domain)
            // Use AuthZ web Service in NAV to get the Windows user to use for this user
            AuthZref.AuthZ authZservice = new AuthZref.AuthZ();
            authZservice.UseDefaultCredentials = true;
            string domain = "";
            bool useDefault = true;
            if (!authZservice.GetDomainUserPassword(ref username, ref password, ref domain, ref useDefault))
                throw new ArgumentException("username/password");
            if (useDefault)
                service.UseDefaultCredentials = true;
            else
                service.Credentials = new NetworkCredential(username, password, domain);
        }
    }

The only task for this function is to authenticate the user and set the Credentials on a NAV Web Reference (SoapHttpClientProtocol derived). If something goes wrong, this function will throw an exception, which is returned to the caller of the Service.

The implementation of GetCustomerName is only slightly different from the one implemented in part 3 – the only addition is to call Authenticate after creating the service class:

    public string GetCustomerName(string username, string password, string No)
    {
        Debug.Write(string.Format("GetCustomerName(No = {0}) = ", No));
        CustomerCard_Service service = new CustomerCard_Service();
        try
        {
            Authenticate(service, username, password);
        }
        catch (Exception ex)
        {
            Debug.WriteLine(ex.Message);
            throw;
        }
        CustomerCard customer = service.Read(No);
        if (customer == null)
        {
            Debug.WriteLine("Customer not found");
            return string.Empty;
        }
        Debug.WriteLine(customer.Name);
        return customer.Name;
    }

So, based on this we now have an endpoint on which we will have to specify a valid user/password combination in order to get the Customer Name – without having to create / give out users in the Active Directory.

Note that with security it is ALWAYS good to create a threat model and, depending on the data you expose, you might want to add more security – options could be:

- Use a local SQL Server for the AuthZ table, remove the useDefault flag and run the Windows Service as a user without access to NAV – so that every user in the table has a 1:1 relationship with a NAV user

- Host the endpoint on the servicebus with a random name, which is calculated based on an algorithm – and restart the proxy on a timer

- Securing the endpoint with RelayAuthenticationToken (which then disallows Windows Phone and other mobile devices to connect)

- Firewall options for IP protection are unfortunately not possible

and many many more…
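The option of hosting the endpoint under a name "calculated based on an algorithm" is not spelled out in the post. One way it might be done, purely as an assumption, is to derive the name from a shared secret and the current time window with an HMAC, so that the proxy and its trusted clients compute the same name independently. `RotatingEndpointName`, the `proxy-` prefix and the window length are hypothetical choices for illustration.

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;

public static class RotatingEndpointName
{
    // Derives an endpoint name that stays stable within one time window and
    // changes in the next. Anyone holding the shared secret can recompute it.
    public static string ForWindow(byte[] sharedSecret, DateTime utcNow, TimeSpan window)
    {
        if (sharedSecret == null) throw new ArgumentNullException(nameof(sharedSecret));
        if (window.Ticks <= 0) throw new ArgumentOutOfRangeException(nameof(window));

        // Index of the window that contains utcNow.
        long windowIndex = utcNow.Ticks / window.Ticks;
        byte[] message = Encoding.UTF8.GetBytes(windowIndex.ToString(CultureInfo.InvariantCulture));

        using (var hmac = new HMACSHA256(sharedSecret))
        {
            byte[] hash = hmac.ComputeHash(message);

            // The first 8 bytes as hex keep the name short but hard to guess.
            var name = new StringBuilder("proxy-");
            for (int i = 0; i < 8; i++)
                name.Append(hash[i].ToString("x2", CultureInfo.InvariantCulture));
            return name.ToString();
        }
    }
}
```

The proxy would restart on a timer that matches the window and register under the new name, while clients compute the same name before connecting. This obscures the endpoint rather than authenticating callers, so it complements the username and password check instead of replacing it.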

In the last post in this series, I will create a small useful Windows Phone 7 application, which uses the ServiceBus to connect directly to NAV and retrieve data. I will also make this application available for free download from Windows Phone marketplace.

Freddy is a PM Architect on the Microsoft Dynamics NAV Team

Christian Weyer posted Colorful Windows Azure Compute monitoring diagnostics with Azure Diagnostics Manager on 1/30/2011:

One of the open secrets of Windows Azure is that diagnostics is powerful but also very tedious and partly error-prone. In addition, there is no really good and useful tooling provided by Microsoft. We want to have a light-weight means to monitor applications, look at performance counters, see event logs etc.

There is the SCOM MP for Windows Azure, but this is more an enterprise-targeted solution which cannot really be called light-weight. This leaves us with 3rd party tools.

My 3rd party tool of choice for doing diagnostics and monitoring of Windows Azure applications is Cerebrata’s Azure Diagnostics Manager (ADM).

But before we use any tools to visualize data we need to set up and instruct our code to collect diagnostics data. In the following example we set up three performance counters and two event logs to be collected and be transferred over to the configured storage account:

    using System;
    using Microsoft.WindowsAzure.Diagnostics;
    using Microsoft.WindowsAzure.ServiceRuntime;

    public class WebRole : RoleEntryPoint
    {
        public override bool OnStart()
        {
            var diagConfig = DiagnosticMonitor.GetDefaultInitialConfiguration();

            // Performance counter 1: processor time, sampled every 5 seconds
            var procTimeConfig = new PerformanceCounterConfiguration();
            procTimeConfig.CounterSpecifier = @"\Processor(*)\% Processor Time";
            procTimeConfig.SampleRate = TimeSpan.FromSeconds(5.0);
            diagConfig.PerformanceCounters.DataSources.Add(procTimeConfig);

            // Performance counter 2: disk throughput
            var diskBytesConfig = new PerformanceCounterConfiguration();
            diskBytesConfig.CounterSpecifier = @"\LogicalDisk(*)\Disk Bytes/sec";
            diskBytesConfig.SampleRate = TimeSpan.FromSeconds(5.0);
            diagConfig.PerformanceCounters.DataSources.Add(diskBytesConfig);

            // Performance counter 3: working set of the current process
            var workingSetConfig = new PerformanceCounterConfiguration();
            workingSetConfig.CounterSpecifier =
                @"\Process(" +
                System.Diagnostics.Process.GetCurrentProcess().ProcessName +
                @")\Working Set";
            workingSetConfig.SampleRate = TimeSpan.FromSeconds(5.0);
            diagConfig.PerformanceCounters.DataSources.Add(workingSetConfig);

            // Transfer the collected counters to the storage account every 30 seconds
            diagConfig.PerformanceCounters.ScheduledTransferPeriod =
                TimeSpan.FromSeconds(30);

            // Event logs: everything from System and Application, same transfer period
            diagConfig.WindowsEventLog.DataSources.Add("System!*");
            diagConfig.WindowsEventLog.DataSources.Add("Application!*");
            diagConfig.WindowsEventLog.ScheduledTransferPeriod =
                TimeSpan.FromSeconds(30);

            DiagnosticMonitor.Start(
                "Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString",
                diagConfig);

            return base.OnStart();
        }
    }

When you look at your storage account after a while you see that for the above example two tables have been created (if they haven’t been already in place), namely WADPerformanceCountersTable and WADWindowsEventLogsTable:

Of course you can use your storage explorer of choice and look at the raw data collected.

Or we can use the above-mentioned ADM and its Dashboard feature to visualize our performance counters and event logs (note that ADM polls the data from the storage account and you can specify the poll interval):

If we like we can even drill into each of the diagnostics categories. The following picture shows a detailed view of three performance counters:

Again: the Azure Diagnostics Manager is a handy tool and is what I personally have been using in projects and recommending to our clients.

<Return to section navigation list>

Arthur Vickers continued his Entity Framework CTP series with a Using DbContext in EF Feature CTP5 Part 5: Working with Property Values on 1/30/2011:

Introduction

In December we released ADO.NET Entity Framework Feature Community Technology Preview 5 (CTP5). In addition to the Code First approach this CTP also contains a preview of a new API that provides a more productive surface for working with the Entity Framework. This API is based on the DbContext class and can be used with the Code First, Database First, and Model First approaches.

This is the fifth post of a twelve part series containing patterns and code fragments showing how features of the new API can be used. Part 1 of the series contains an overview of the topics covered together with a Code First model that is used in the code fragments of this post.

The posts in this series do not contain complete walkthroughs. If you haven’t used CTP5 before then you should read Part 1 of this series and also Code First Walkthrough or Model and Database First with DbContext before tackling this post.

Working with property values

The Entity Framework keeps track of two values for each property of a tracked entity. The current value is, as the name indicates, the current value of the property in the entity. The original value is the value that the property had when the entity was queried from the database or attached to the context.

There are two general mechanisms for working with property values:

- The value of a single property can be obtained in a strongly typed way using the Property method.

- Values for all properties of an entity can be read into a DbPropertyValues object. DbPropertyValues then acts as a dictionary-like object to allow property values to be read and set. The values in a DbPropertyValues object can be set from values in another DbPropertyValues object or from values in some other object, such as another copy of the entity or a simple data transfer object (DTO).

The sections below show examples of using both of the above mechanisms.

Getting and setting the current or original value of an individual property

The example below shows how the current value of a property can be read and then set to a new value:

using (var context = new UnicornsContext())

{

var unicorn = context.Unicorns.Find(3);

// Read the current value of the Name property

string currentName1 = context.Entry(unicorn).Property(u => u.Name).CurrentValue;

// Set the Name property to a new value

context.Entry(unicorn).Property(u => u.Name).CurrentValue = "Franky";

// Read the current value of the Name property using a

// string for the property name

object currentName2 = context.Entry(unicorn).Property("Name").CurrentValue;

// Set the Name property to a new value using a

// string for the property name

context.Entry(unicorn).Property("Name").CurrentValue = "Squeaky";

}

Use the OriginalValue property instead of the CurrentValue property to read or set the original value.

Note that the returned value is typed as “object” when a string is used to specify the property name. On the other hand, the returned value is strongly typed if a lambda expression is used.

Setting the property value like this will only mark the property as modified if the new value is different from the old value.

When a property value is set in this way the change is automatically detected even if AutoDetectChanges is turned off. (More about automatically detecting changes is covered in Part 12.)
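The rule that a property is only marked as modified when the new value differs from the old one can be pictured with a small stand-alone model. This is a minimal sketch, not the Entity Framework implementation: TrackedProperty and its demo class are hypothetical names invented for illustration, and the comparison simply uses the default equality comparer.

```csharp
using System;
using System.Collections.Generic;

// Toy stand-in for a tracked property; hypothetical, not an Entity Framework type.
public class TrackedProperty<T>
{
    private T _current;

    public TrackedProperty(T originalValue)
    {
        OriginalValue = originalValue;
        _current = originalValue;
    }

    // Value the property had when the entity was queried or attached.
    public T OriginalValue { get; private set; }

    // True once a value different from the current one has been assigned.
    public bool IsModified { get; private set; }

    public T CurrentValue
    {
        get { return _current; }
        set
        {
            // Only a genuinely different value flags the property as modified.
            if (!EqualityComparer<T>.Default.Equals(_current, value))
            {
                IsModified = true;
            }
            _current = value;
        }
    }
}

public static class TrackedPropertyDemo
{
    public static void Main()
    {
        var name = new TrackedProperty<string>("Binky");

        name.CurrentValue = "Binky";            // same value: stays unmodified
        Console.WriteLine(name.IsModified);     // False

        name.CurrentValue = "Franky";           // different value: marked modified
        Console.WriteLine(name.IsModified);     // True
        Console.WriteLine(name.OriginalValue);  // Binky
    }
}
```

Because the flag is set inside the setter itself, no separate change-detection pass is needed in this model, which mirrors the observation above that the change is picked up even when automatic detection is turned off.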

Getting and setting the current value of an unmapped property

The current value of a property that is not mapped to the database can also be read. For example:

using (var context = new UnicornsContext())

{

var lady = context.LadiesInWaiting.Find(1, "The EF Castle");

// Read the current value of an unmapped property

var name1 = context.Entry(lady).Property(p => p.Name).CurrentValue;

// Use a string to specify the property name

var name2 = context.Entry(lady).Property("Name").CurrentValue;

}

The current value can also be set if the property exposes a setter.

Reading the values of unmapped properties is useful when performing Entity Framework validation of unmapped properties. For the same reason current values can be read and set for properties of entities that are not currently being tracked by the context. For example:

using (var context = new UnicornsContext())

{

// Create an entity that is not being tracked

var unicorn = new Unicorn { Name = "Franky" };

// Read and set the current value of Name as before

var currentName1 = context.Entry(unicorn).Property(u => u.Name).CurrentValue;

context.Entry(unicorn).Property(u => u.Name).CurrentValue = "Franky";

var currentName2 = context.Entry(unicorn).Property("Name").CurrentValue;

context.Entry(unicorn).Property("Name").CurrentValue = "Squeaky";

}

Note that original values are not available for unmapped properties or for properties of entities that are not being tracked by the context.

Checking whether a property is marked as modified

The example below shows how to check whether or not an individual property is marked as modified:

using (var context = new UnicornsContext())

{

var unicorn = context.Unicorns.Find(1);

var nameIsModified1 = context.Entry(unicorn).Property(u => u.Name).IsModified;

// Use a string for the property name

var nameIsModified2 = context.Entry(unicorn).Property("Name").IsModified;

}

The values of modified properties are sent as updates to the database when SaveChanges is called.

Marking a property as modified

The example below shows how to force an individual property to be marked as modified:

using (var context = new UnicornsContext())

{

var unicorn = context.Unicorns.Find(1);

context.Entry(unicorn).Property(u => u.Name).IsModified = true;

// Use a string for the property name

context.Entry(unicorn).Property("Name").IsModified = true;

}

Marking a property as modified forces an update to be sent to the database for the property when SaveChanges is called even if the current value of the property is the same as its original value.

It is not currently possible to reset an individual property to be not modified after it has been marked as modified. This is something we plan to support in a future release.
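To make the effect of forcing the modified flag concrete, here is a rough sketch of how a save step might decide which properties to send. It assumes a hypothetical PropertyState record and UpdatePlanner helper (neither is part of the Entity Framework API), and it treats a property as needing an update when either its value changed or the flag was forced.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical record of one property's tracking state (illustrative names only).
public class PropertyState
{
    public string Name { get; set; }
    public object OriginalValue { get; set; }
    public object CurrentValue { get; set; }
    public bool ForcedModified { get; set; } // set when the caller marks the property explicitly
}

public static class UpdatePlanner
{
    // Returns the names of the properties a toy "save" would include in its update.
    public static List<string> PropertiesToSend(IEnumerable<PropertyState> properties)
    {
        var names = new List<string>();
        foreach (var property in properties)
        {
            bool valueChanged = !object.Equals(property.OriginalValue, property.CurrentValue);
            if (valueChanged || property.ForcedModified)
            {
                names.Add(property.Name);
            }
        }
        return names;
    }

    public static void Main()
    {
        var properties = new List<PropertyState>
        {
            // Unchanged value, but explicitly marked as modified
            new PropertyState { Name = "Name", OriginalValue = "Binky", CurrentValue = "Binky", ForcedModified = true },
            // Unchanged value and not marked
            new PropertyState { Name = "PrincessId", OriginalValue = 1, CurrentValue = 1 },
        };

        Console.WriteLine(string.Join(", ", PropertiesToSend(properties))); // Name
    }
}
```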

Reading current, original, and database values for all properties of an entity

The example below shows how to read the current values, the original values, and the values actually in the database for all mapped properties of an entity.

using (var context = new UnicornsContext())

{

var unicorn = context.Unicorns.Find(1);

// Make a modification to Name in the tracked entity

unicorn.Name = "Franky";

// Make a modification to Name in the database

context.Database.SqlCommand("update Unicorns set Name = 'Squeaky' where Id = 1");

// Print out current, original, and database values

Console.WriteLine("Current values:");

PrintValues(context.Entry(unicorn).CurrentValues);

Console.WriteLine("\nOriginal values:");

PrintValues(context.Entry(unicorn).OriginalValues);

Console.WriteLine("\nDatabase values:");

PrintValues(context.Entry(unicorn).GetDatabaseValues());

}

PrintValues is defined like so:

public static void PrintValues(DbPropertyValues values)

{

foreach (var propertyName in values.PropertyNames)

{

Console.WriteLine("Property {0} has value {1}",

propertyName, values[propertyName]);

}

}

Using the data set by the initializer defined in Part 1 of this series, running the code above will print out:

Current values:

Property Id has value 1

Property Name has value Franky

Property Version has value System.Byte[]

Property PrincessId has value 1

Original values:

Property Id has value 1

Property Name has value Binky

Property Version has value System.Byte[]

Property PrincessId has value 1

Database values:

Property Id has value 1

Property Name has value Squeaky

Property Version has value System.Byte[]

Property PrincessId has value 1

Notice how the current values are, as expected, the values that the properties of the entity currently contain—in this case the value of Name is Franky.

In contrast to the current values, the original values are the values that were read from the database when the entity was queried—the original value of Name is Binky.

Finally, the database values are the values as they are currently stored in the database. The database value of Name is Squeaky because we sent a raw command to the database to update it after we performed the query. Getting the database values is useful when the values in the database may have changed since the entity was queried such as when a concurrent edit to the database has been made by another user. (See Part 9 for more details on dealing with optimistic concurrency.)
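One way to picture why database values matter for concurrency is to compare them with the original values: any property where the two differ was changed in the database after the entity was queried. The sketch below uses plain dictionaries in place of DbPropertyValues, and ConcurrencyCheck is a hypothetical helper rather than anything the Entity Framework provides.

```csharp
using System;
using System.Collections.Generic;

public static class ConcurrencyCheck
{
    // Returns the names of properties whose database value no longer matches
    // the value originally read, which suggests a concurrent edit.
    public static List<string> ChangedInDatabase(
        IDictionary<string, object> originalValues,
        IDictionary<string, object> databaseValues)
    {
        var changed = new List<string>();
        foreach (var pair in originalValues)
        {
            object databaseValue;
            if (databaseValues.TryGetValue(pair.Key, out databaseValue)
                && !object.Equals(pair.Value, databaseValue))
            {
                changed.Add(pair.Key);
            }
        }
        return changed;
    }

    public static void Main()
    {
        // Values taken from the printed output above (Version omitted for brevity)
        var original = new Dictionary<string, object> { { "Id", 1 }, { "Name", "Binky" } };
        var database = new Dictionary<string, object> { { "Id", 1 }, { "Name", "Squeaky" } };

        foreach (var name in ChangedInDatabase(original, database))
        {
            Console.WriteLine(name); // Name
        }
    }
}
```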

Setting current or original values from another object

The current or original values of a tracked entity can be updated by copying values from another object. For example:

using (var context = new UnicornsContext())

{

var princess = context.Princesses.Find(1);

var rapunzel = new Princess { Id = 1, Name = "Rapunzel" };

var rosannella = new PrincessDto { Id = 1, Name = "Rosannella" };

// Change the current and original values by copying the values

// from other objects

var entry = context.Entry(princess);

entry.CurrentValues.SetValues(rapunzel);

entry.OriginalValues.SetValues(rosannella);

// Print out current and original values

Console.WriteLine("Current values:");

PrintValues(entry.CurrentValues);

Console.WriteLine("\nOriginal values:");

PrintValues(entry.OriginalValues);

}

This code uses the following DTO class:

public class PrincessDto

{

public int Id { get; set; }

public string Name { get; set; }

}

Using the data set by the initializer defined in Part 1 of this series, running the code above will print out:

Current values:

Property Id has value 1

Property Name has value Rapunzel

Original values:

Property Id has value 1

Property Name has value Rosannella

This technique is sometimes used when updating an entity with values obtained from a service call or a client in an n-tier application. Note that the object used does not have to be of the same type as the entity so long as it has properties whose names match those of the entity. In the example above, an instance of PrincessDto is used to update the original values.

Note that only properties that are set to different values when copied from the other object will be marked as modified.
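The name-matching behaviour described above can be approximated in a few lines of reflection. This is only a sketch of the idea, assuming a plain dictionary as the value store; ValueCopier is a hypothetical helper, the starting values are made up, and the real SetValues method may well work differently internally.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

public class PrincessDto
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public static class ValueCopier
{
    // Copies each readable public property of 'source' into 'target' when
    // 'target' already has an entry with the same name; entries without a
    // matching source property are left alone.
    public static void SetValues(IDictionary<string, object> target, object source)
    {
        foreach (PropertyInfo property in source.GetType().GetProperties())
        {
            if (property.CanRead && target.ContainsKey(property.Name))
            {
                target[property.Name] = property.GetValue(source, null);
            }
        }
    }

    public static void Main()
    {
        // Made-up starting values standing in for an entity's current values
        var currentValues = new Dictionary<string, object>
        {
            { "Id", 1 }, { "Name", "Cinderella" }, { "Version", 7 }
        };

        SetValues(currentValues, new PrincessDto { Id = 1, Name = "Rosannella" });

        Console.WriteLine(currentValues["Name"]);    // Rosannella
        Console.WriteLine(currentValues["Version"]); // 7
    }
}
```

Matching by name rather than by type is what lets a DTO stand in for the entity here; the Version entry is untouched because the DTO has no property with that name.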

Setting current or original values from a dictionary

The current or original values of a tracked entity can be updated by copying values from a dictionary or some other data structure. For example:

using (var context = new UnicornsContext())

{

var lady = context.LadiesInWaiting.Find(1, "The EF Castle");

var newValues = new Dictionary<string, object>

{

{ "FirstName", "Calypso" },

{ "Title", " Prima donna" },

};

var currentValues = context.Entry(lady).CurrentValues;

foreach (var propertyName in newValues.Keys)

{

currentValues[propertyName] = newValues[propertyName];

}

PrintValues(currentValues);

}

Use the OriginalValues property instead of the CurrentValues property to set original values.

Setting current or original values from a dictionary using Property

An alternative to using CurrentValues or OriginalValues as shown above is to use the Property method to set the value of each property. This can be preferable when you need to set the values of complex properties. For example:

using (var context = new UnicornsContext())

{

var castle = context.Castles.Find("The EF Castle");

var newValues = new Dictionary<string, object>

{

{ "Name", "The EF Castle" },

{ "Location.City", "Redmond" },

{ "Location.Kingdom", "Building 18" },

{ "Location.ImaginaryWorld.Name", "Magic Astoria World" },

{ "Location.ImaginaryWorld.Creator", "ADO.NET" },

};

var entry = context.Entry(castle);

foreach (var propertyName in newValues.Keys)

{

entry.Property(propertyName).CurrentValue = newValues[propertyName];

}

}

In the example above complex properties are accessed using dotted names. For other ways to access complex properties see the two sections below specifically about complex properties.
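For readers curious about how a dotted name can be resolved, the following sketch walks the path one segment at a time using reflection. It is an illustration under stated assumptions rather than a description of what the Property method does: the Castle, Location, and ImaginaryWorld classes are stand-ins that only mirror the property names used above, and DottedPath is a hypothetical helper.

```csharp
using System;
using System.Reflection;

// Stand-in classes that only mirror the property names used above.
public class ImaginaryWorld
{
    public string Name { get; set; }
    public string Creator { get; set; }
}

public class Location
{
    public string City { get; set; }
    public ImaginaryWorld ImaginaryWorld { get; set; }
}

public class Castle
{
    public string Name { get; set; }
    public Location Location { get; set; }
}

public static class DottedPath
{
    // Walks one property per dot-separated segment and returns the final value.
    public static object GetValue(object root, string path)
    {
        object current = root;
        foreach (string segment in path.Split('.'))
        {
            current = FindProperty(current, segment).GetValue(current, null);
        }
        return current;
    }

    // Resolves the owner of the last segment, then assigns the leaf property.
    public static void SetValue(object root, string path, object value)
    {
        int lastDot = path.LastIndexOf('.');
        object owner = lastDot < 0 ? root : GetValue(root, path.Substring(0, lastDot));
        FindProperty(owner, path.Substring(lastDot + 1)).SetValue(owner, value, null);
    }

    private static PropertyInfo FindProperty(object owner, string name)
    {
        if (owner == null)
        {
            throw new InvalidOperationException("No object to read '" + name + "' from.");
        }
        PropertyInfo property = owner.GetType().GetProperty(name);
        if (property == null)
        {
            throw new ArgumentException("Unknown property: " + name);
        }
        return property;
    }

    public static void Main()
    {
        var castle = new Castle
        {
            Name = "The EF Castle",
            Location = new Location { City = "Redmond", ImaginaryWorld = new ImaginaryWorld() }
        };

        SetValue(castle, "Location.ImaginaryWorld.Creator", "ADO.NET");

        Console.WriteLine(GetValue(castle, "Location.ImaginaryWorld.Creator")); // ADO.NET
        Console.WriteLine(GetValue(castle, "Location.City"));                   // Redmond
    }
}
```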

Creating a cloned object containing current, original, or database values

The DbPropertyValues object returned from CurrentValues, OriginalValues, or GetDatabaseValues can be used to create a clone of the entity. This clone will contain the property values from the DbPropertyValues object used to create it. For example:

using (var context = new UnicornsContext())

{

var unicorn = context.Unicorns.Find(1);

var clonedUnicorn = context.Entry(unicorn).GetDatabaseValues().ToObject();

}

Note that the object returned is not the entity and is not being tracked by the context. The returned object also does not have any relationships set to other objects.

The cloned object can be useful for resolving issues related to concurrent updates to the database, especially where a UI that involves data binding to objects of a certain type is being used. (See Part 9 for more details on dealing with optimistic concurrency.)

Getting and setting the current or original values of complex properties

The value of an entire complex object can be read and set using the Property method just as it can be for a primitive property. In addition you can drill down into the complex object and read or set properties of that object, or even a nested object. Here are some examples:

using (var context = new UnicornsContext())

{

var castle = context.Castles.Find("The EF Castle");

// Get the Location complex object

var location = context.Entry(castle)

.Property(c => c.Location)

.CurrentValue;

// Get the nested ImaginaryWorld complex object using chained calls

var world1 = context.Entry(castle)

.ComplexProperty(c => c.Location)

.Property(l => l.ImaginaryWorld)

.CurrentValue;

// Get the nested ImaginaryWorld complex object using a single lambda expression

var world2 = context.Entry(castle)

.Property(c => c.Location.ImaginaryWorld)

.CurrentValue;

// Get the nested ImaginaryWorld complex object using a dotted string

var world3 = context.Entry(castle)

.Property("Location.ImaginaryWorld")

.CurrentValue;

// Get the value of the Creator property on the nested complex object using

// chained calls

var creator1 = context.Entry(castle)

.ComplexProperty(c => c.Location)

.ComplexProperty(l => l.ImaginaryWorld)

.Property(w => w.Creator)

.CurrentValue;

// Get the value of the Creator property on the nested complex object using a

// single lambda expression

var creator2 = context.Entry(castle)

.Property(c => c.Location.ImaginaryWorld.Creator)

.CurrentValue;

// Get the value of the Creator property on the nested complex object using a

// dotted string

var creator3 = context.Entry(castle)

.Property("Location.ImaginaryWorld.Creator")

.CurrentValue;

}

Use the OriginalValue property instead of the CurrentValue property to get or set an original value.

Note that either the Property or the ComplexProperty method can be used to access a complex property. However, the ComplexProperty method must be used if you wish to drill down into the complex object with additional Property or ComplexProperty calls.

Using DbPropertyValues to access complex properties

When you use CurrentValues, OriginalValues, or GetDatabaseValues to get all the current, original, or database values for an entity, the values of any complex properties are returned as nested DbPropertyValues objects. These nested objects can then be used to get values of the complex object. For example, the following method will print out the values of all properties, including values of any complex properties and nested complex properties.

public static void WritePropertyValues(string parentPropertyName, DbPropertyValues propertyValues)

{

foreach (var propertyName in propertyValues.PropertyNames)

{

var nestedValues = propertyValues[propertyName] as DbPropertyValues;

if (nestedValues != null)

{

WritePropertyValues(parentPropertyName + propertyName + ".", nestedValues);

}

else

{

Console.WriteLine("Property {0}{1} has value {2}",

parentPropertyName, propertyName,

propertyValues[propertyName]);

}

}

}

To print out all current property values the method would be called like this:

using (var context = new UnicornsContext())

{

var castle = context.Castles.Find("The EF Castle");

WritePropertyValues("", context.Entry(castle).CurrentValues);

}

Using the data set by the initializer defined in Part 1 of this series, running the code above will print out:

Property Name has value The EF Castle

Property Location.City has value Redmond

Property Location.Kingdom has value Rainier

Property Location.ImaginaryWorld.Name has value Magic Unicorn World

Property Location.ImaginaryWorld.Creator has value ADO.NET

Summary

In this part of the series we looked at different ways to get information about property values and manipulate the current, original, and database property values of entities and complex objects.

As always we would love to hear any feedback you have by commenting on this blog post.

For support please use the Entity Framework Pre-Release Forum.

Arthur Vickers

Developer

ADO.NET Entity Framework

Arthur Vickers continued his Entity Framework CTP series with a Using DbContext in EF Feature CTP5 Part 4: Add/Attach and Entity States on 1/29/2011:

Introduction

In December we released ADO.NET Entity Framework Feature Community Technology Preview 5 (CTP5). In addition to the Code First approach this CTP also contains a preview of a new API that provides a more productive surface for working with the Entity Framework. This API is based on the DbContext class and can be used with the Code First, Database First, and Model First approaches.

This is the fourth post of a twelve part series containing patterns and code fragments showing how features of the new API can be used. Part 1 of the series contains an overview of the topics covered together with a Code First model that is used in the code fragments of this post.

The posts in this series do not contain complete walkthroughs. If you haven’t used CTP5 before then you should read Part 1 of this series and also Code First Walkthrough or Model and Database First with DbContext before tackling this post.

Entity states and SaveChanges

An entity can be in one of five states as defined by the EntityState enumeration. These states are:

- Added: the entity is being tracked by the context but does not yet exist in the database

- Unchanged: the entity is being tracked by the context and exists in the database, and its property values have not changed from the values in the database

- Modified: the entity is being tracked by the context and exists in the database, and some or all of its property values have been modified

- Deleted: the entity is being tracked by the context and exists in the database, but has been marked for deletion from the database the next time SaveChanges is called

- Detached: the entity is not being tracked by the context

SaveChanges does different things for entities in different states:

- Unchanged entities are not touched by SaveChanges. Updates are not sent to the database for entities in the Unchanged state.

- Added entities are inserted into the database and then become Unchanged when SaveChanges returns.

- Modified entities are updated in the database and then become Unchanged when SaveChanges returns.

- Deleted entities are deleted from the database and are then detached from the context.
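The state rules listed above amount to a small state machine, sketched here in isolation. ToyEntityState is a local enum that only mirrors the five state names; it is not the EntityState enumeration itself, and SaveTransitions is a hypothetical helper written purely to summarize the list.

```csharp
using System;

// Local enum mirroring the five state names listed above; illustrative only.
public enum ToyEntityState { Added, Unchanged, Modified, Deleted, Detached }

public static class SaveTransitions
{
    // Database action a toy save would take for an entity in the given state.
    public static string ActionFor(ToyEntityState state)
    {
        switch (state)
        {
            case ToyEntityState.Added: return "INSERT";
            case ToyEntityState.Modified: return "UPDATE";
            case ToyEntityState.Deleted: return "DELETE";
            default: return "none"; // Unchanged is not touched; Detached is not tracked at all
        }
    }

    // State the entity ends up in once the toy save has returned.
    public static ToyEntityState StateAfterSave(ToyEntityState state)
    {
        switch (state)
        {
            case ToyEntityState.Added:
            case ToyEntityState.Modified:
                return ToyEntityState.Unchanged;
            case ToyEntityState.Deleted:
                return ToyEntityState.Detached;
            default:
                return state;
        }
    }

    public static void Main()
    {
        foreach (ToyEntityState state in Enum.GetValues(typeof(ToyEntityState)))
        {
            Console.WriteLine("{0}: {1} -> {2}", state, ActionFor(state), StateAfterSave(state));
        }
    }
}
```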

The following examples show ways in which the state of an entity or an entity graph can be changed.

Adding a new entity to the context

A new entity can be added to the context by calling the Add method on DbSet. This puts the entity into the Added state, meaning that it will be inserted into the database the next time that SaveChanges is called. For example:

using (var context = new UnicornsContext())

{

var unicorn = new Unicorn { Name = "Franky" };

context.Unicorns.Add(unicorn);

context.SaveChanges();

}

Another way to add a new entity to the context is to change its state to Added. For example:

using (var context = new UnicornsContext())

{

var unicorn = new Unicorn { Name = "Franky" };

context.Entry(unicorn).State = EntityState.Added;

context.SaveChanges();

}

Finally, you can add a new entity to the context by hooking it up to another entity that is already being tracked. This could be by adding the new entity to the collection navigation property of another entity or by setting a reference navigation property of another entity to point to the new entity. For example:

using (var context = new UnicornsContext())

{

// Add a new princess by setting a reference from a tracked unicorn

var unicorn = context.Unicorns.Find(1);

unicorn.Princess = new Princess { Name = "Belle" };

// Add a new unicorn by adding to the collection of a tracked princess

var princess = context.Princesses.Find(2);

princess.Unicorns.Add(new Unicorn { Name = "Franky" });

context.SaveChanges();

}

Note that for all of these examples if the entity being added has references to other entities that are not yet tracked then these new entities will also be added to the context and will be inserted into the database the next time that SaveChanges is called.

Attaching an existing entity to the context

If you have an entity that you know already exists in the database but which is not currently being tracked by the context then you can tell the context to track the entity using the Attach method on DbSet. The entity will be in the Unchanged state in the context. For example:

var existingUnicorn = GetMyExistingUnicorn();

using (var context = new UnicornsContext())

{

context.Unicorns.Attach(existingUnicorn);

// Do some more work...

context.SaveChanges();

}

In the example above the GetMyExistingUnicorn method returns a unicorn that is known to exist in the database. For example, this object might have come from the client in an n-tier application.

Note that no changes will be made to the database if SaveChanges is called without doing any other manipulation of the attached entity. This is because the entity is in the Unchanged state.

Another way to attach an existing entity to the context is to change its state to Unchanged. For example:

var existingUnicorn = GetMyExistingUnicorn();

using (var context = new UnicornsContext())

{

context.Entry(existingUnicorn).State = EntityState.Unchanged;

// Do some more work...

context.SaveChanges();

}

Note that for both of these examples if the entity being attached has references to other entities that are not yet tracked then these new entities will also be attached to the context in the Unchanged state.

Attaching an existing but modified entity to the context

If you have an entity that you know already exists in the database but to which changes may have been made then you can tell the context to attach the entity and set its state to Modified. For example:

var existingUnicorn = GetMyExistingUnicorn();

using (var context = new UnicornsContext())

{

context.Entry(existingUnicorn).State = EntityState.Modified;

context.SaveChanges();

}

When you change the state to Modified all the properties of the entity will be marked as modified and all the property values will be sent to the database when SaveChanges is called. More about working with property values is covered in Part 5 of this series.

Note that if the entity being attached has references to other entities that are not yet tracked, then these new entities will be attached to the context in the Unchanged state; they will not automatically be made Modified. If you have multiple entities that need to be marked Modified you should set the state for each of these entities individually.

Changing the state of a tracked entity

You can change the state of an entity that is already being tracked by setting the State property on its entry. For example:

var existingUnicorn = GetMyExistingUnicorn();

using (var context = new UnicornsContext())

{

context.Unicorns.Attach(existingUnicorn); // Entity is in Unchanged state

context.Entry(existingUnicorn).State = EntityState.Modified;

context.SaveChanges();

}

Note that calling Add or Attach for an entity that is already tracked can also be used to change the entity state. For example, calling Attach for an entity that is currently in the Added state will change its state to Unchanged.

Insert or update pattern

A common pattern for some applications is to either Add an entity as new (resulting in a database insert) or Attach an entity as existing and mark it as modified (resulting in a database update) depending on the value of the primary key. For example, when using database generated integer primary keys it is common to treat an entity with a zero key as new and an entity with a non-zero key as existing. This pattern can be achieved by setting the entity state based on a check of the primary key value. For example:

public void InsertOrUpdate(DbContext context, Unicorn unicorn)

{

context.Entry(unicorn).State = unicorn.Id == 0 ?

EntityState.Added :

EntityState.Modified;

context.SaveChanges();

}

Note that when you change the state to Modified all the properties of the entity will be marked as modified and all the property values will be sent to the database when SaveChanges is called. More about working with property values is covered in Part 5 of this series.
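The key check in the pattern above can be generalized beyond integer keys by comparing against the default value of the key type. This is a hedged sketch of that idea only: InsertOrUpdateRule and its local ToyEntityState enum are hypothetical, and whether a default-valued key really means "new" depends on how keys are generated in a given application.

```csharp
using System;
using System.Collections.Generic;

// Local enum with just the two states this decision can produce; illustrative only.
public enum ToyEntityState { Added, Modified }

public static class InsertOrUpdateRule
{
    // A key still at its type's default value (0, Guid.Empty, null) is treated as "new".
    public static ToyEntityState StateForKey<TKey>(TKey key)
    {
        bool keyIsUnset = EqualityComparer<TKey>.Default.Equals(key, default(TKey));
        return keyIsUnset ? ToyEntityState.Added : ToyEntityState.Modified;
    }

    public static void Main()
    {
        Console.WriteLine(StateForKey(0));          // Added
        Console.WriteLine(StateForKey(42));         // Modified
        Console.WriteLine(StateForKey(Guid.Empty)); // Added
    }
}
```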

Summary

In this part of the series we talked about entity states and showed how to add and attach entities and change the state of an existing entity.

As always we would love to hear any feedback you have by commenting on this blog post.

For support please use the Entity Framework Pre-Release Forum.

Arthur’s posts are included in the Visual Studio LightSwitch section because LightSwitch is dependent on Entity Framework V4, but not on new feature CTPs (yet).

<Return to section navigation list>

No significant articles today.

No significant articles today.

<Return to section navigation list>

No significant articles today.

<Return to section navigation list>

See Tanya Forsheit of the InfoLaw Group asked readers to Please Tune In Monday, January 31, 2011 to hear Tanya be interviewed by KUCI-FM’s Mari Frank in the Cloud Computing Events section below.

<Return to section navigation list>

Tanya Forsheit of the InfoLaw Group asked readers to Please Tune In Monday, January 31, 2011 to hear Tanya be interviewed by KUCI-FM’s Mari Frank:

I hope you will tune in Monday, January 31, 2011, 8-9 am Pacific (11-12 Eastern), to Privacy Piracy, audio streaming on www.kuci.org (or locally in Southern California on KUCI 88.9 FM in Irvine, CA). Mari Frank will interview me about the following topics and more:


- If an organization has the time and resources to do only one thing to improve its privacy and data security compliance programs in 2011, what should that one thing be?

- What are the hottest topics in information law in 2011?

- What can an organization using or considering using cloud services do today to protect itself?

KUCI-FM broadcasts 24 hours per day from the campus of the University of California Irvine.

Bill Zack announced on 1/30/2011 a New York [City]–Azure Presentation to the New York City .NET Developers group to be held on 2/17/2011:

If you are a developer working for a software company in the New York metropolitan area you may be interested in this event.


On February 17th I will be presenting an overview of developing applications that run on Windows Azure to the New York City .NET Developers Group.

We will cover Windows Azure compute and storage capabilities and talk about upcoming features such as Azure Reporting Services and Windows Azure AppFabric Caching as well as the longer term Windows Azure Roadmap.

We will also give you guidance on how you can start learning and developing Windows Azure applications using Free Windows Azure resources.

See the New York City .NET Developers Group web site for additional details.

<Return to section navigation list>

No significant articles today.

<Return to section navigation list>

Technorati Tags: Windows Azure, Windows Azure Platform, Azure Services Platform, Azure Storage Services, Azure Table Services, Azure Blob Services, Azure Drive Services, Azure Queue Services, SQL Azure Database, SADB, Open Data Protocol, OData, Windows Azure AppFabric, Azure AppFabric, Windows Server AppFabric, Server AppFabric, Cloud Computing, Visual Studio LightSwitch, LightSwitch

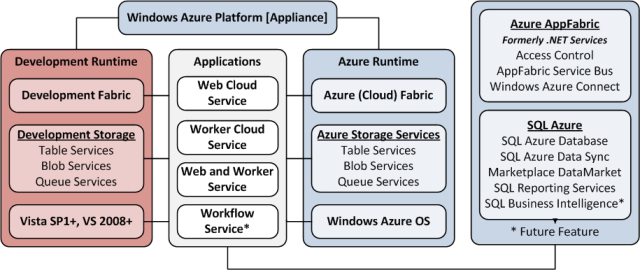

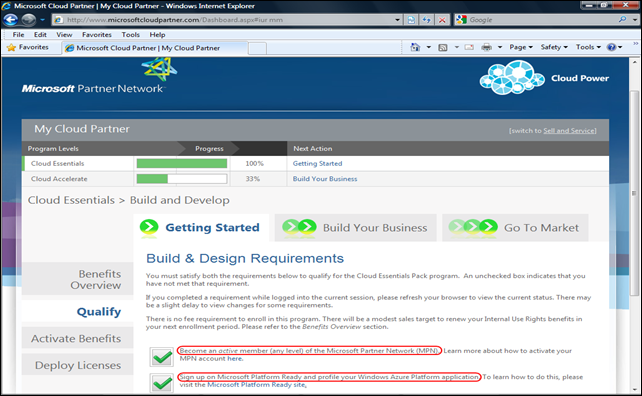

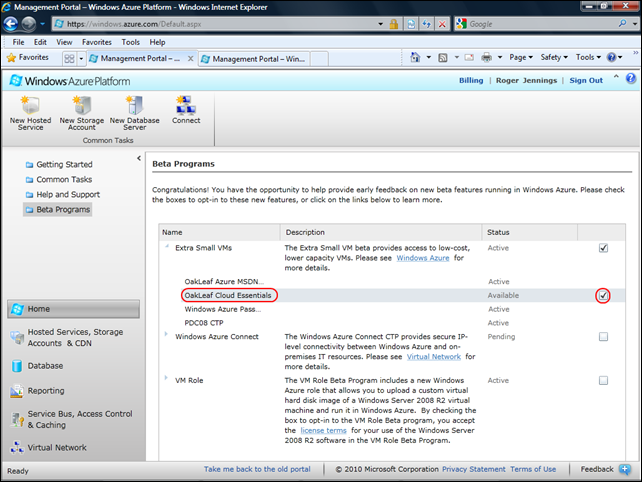

Figure 7

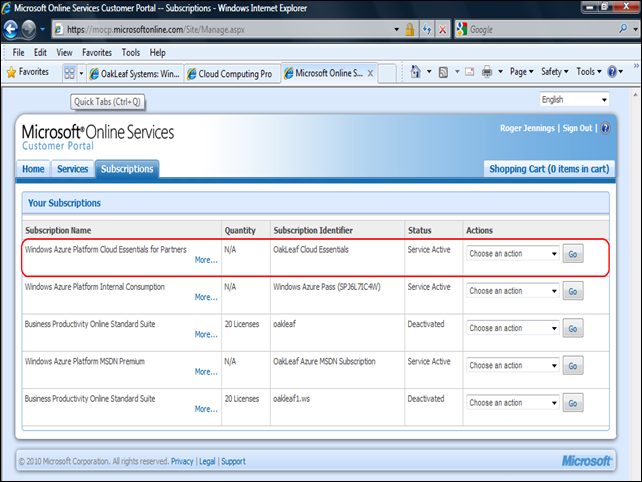

Figure 7 Figure 12

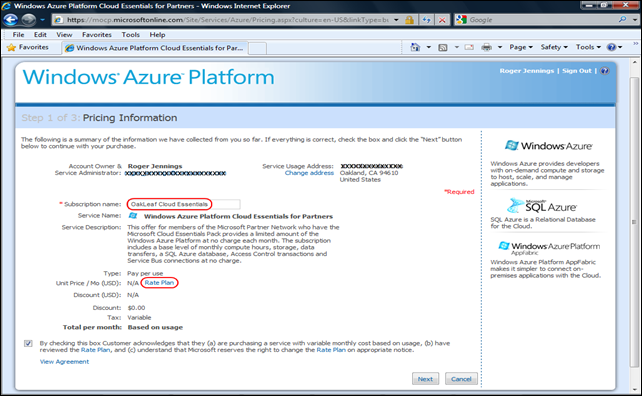

Figure 12

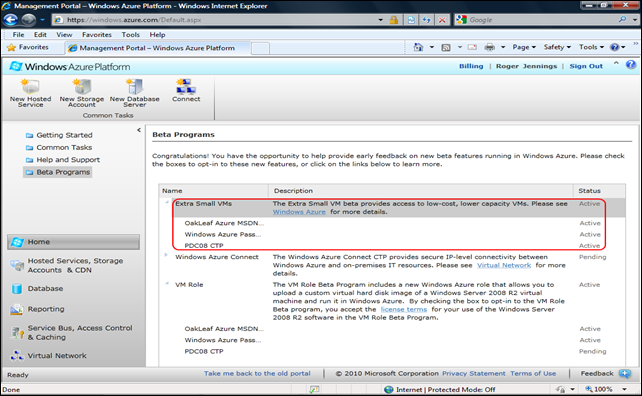

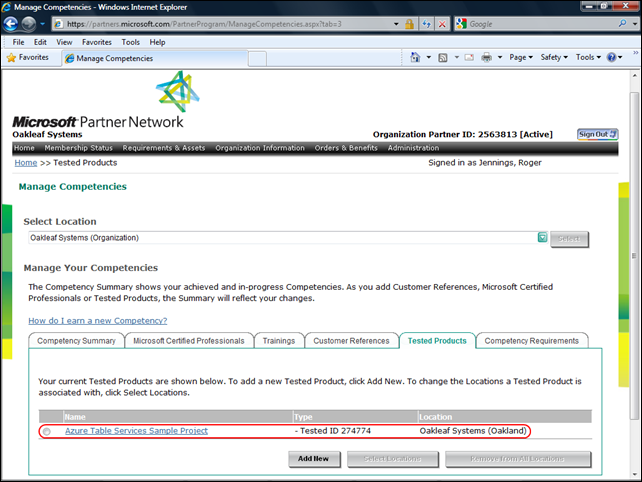

[Figure 19]

![image[80] image[80]](http://lh3.ggpht.com/_GdO7DQgAn3w/TUcY1-howmI/AAAAAAAAIko/tvvU1yEfol8/image%5B80%5D%5B3%5D.png?imgmax=800)

I’ve now been working and blogging about web technology long enough to see technologies that we once thought were the best thing since sliced bread turn out to be rather poor solutions to the problem or even worse that they create more problems than they solve. Since I’ve written favorably about all of the technologies mentioned below this is also a mea culpa where I try to see what I can learn about judging the suitability of technologies to solving problems on the web without being blinded by the hype from the “cool kids” on the web.

I’ve now been working and blogging about web technology long enough to see technologies that we once thought were the best thing since sliced bread turn out to be rather poor solutions to the problem or even worse that they create more problems than they solve. Since I’ve written favorably about all of the technologies mentioned below this is also a mea culpa where I try to see what I can learn about judging the suitability of technologies to solving problems on the web without being blinded by the hype from the “cool kids” on the web.  If you haven’t already read part 3 you should do so

If you haven’t already read part 3 you should do so

Arthur’s posts are included in the Visual Studio LightSwitch section because LightSwitch is depenedent on Entity Framework V4, but not new feature CTPs (yet/)No significant articles

Arthur’s posts are included in the Visual Studio LightSwitch section because LightSwitch is depenedent on Entity Framework V4, but not new feature CTPs (yet/)No significant articles  I hope you will tune in Monday, January 31, 2011, 8-9 am Pacific (11-12 Eastern), to Privacy Piracy, audio streaming on

I hope you will tune in Monday, January 31, 2011, 8-9 am Pacific (11-12 Eastern), to Privacy Piracy, audio streaming on  If you are a developer working for a software company in the New York metropolitan area you may be interested in this event.

If you are a developer working for a software company in the New York metropolitan area you may be interested in this event.