Windows Azure and Cloud Computing Posts for 12/9/2010+

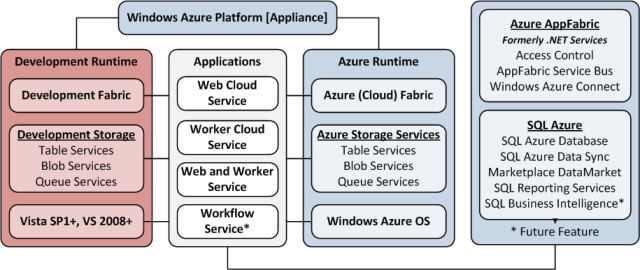

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Update 12/10/2010: Catching up on Thursday’s and Friday’s articles due to publishing/updating my Preliminary Cost Comparison of Database.com and SQL Azure Databases for 3 to 500 Users and Preliminary Comparison of Database.com and SQL Azure Features and Capabilities articles on 12/8 and 12/9/2010.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

My (@rogerjenn) updated Preliminary Cost Comparison of Database.com and SQL Azure Databases for 3 to 500 Users analysis of 12/10/2010 shows Database.com is about 50 times more expensive than SQL Azure for 100 and 500 typical users:

Steve Fisher’s Introducing Database.com post of 12/6/2010 states:

- Last quarter there were 25B transactions against our cloud database.

- Last year there were 10B records in our cloud database. … This year it’s 20B.

The Multitenant Architecture of Database.com states:

Database.com is the most proven, reliable, and secure cloud database offering today that serves 2 million users:

- 20 billion records / 2 million users = 10,000 records per user.

- 25 billion transactions / 2 million users / quarter = 12,500 / 3 = 4,167 transactions/user/month

New users are likely to be at least twice as active as average Salesforce.com users because of initial ETL (Extract, Transform, Load) operations, more participatory apps and newly added social networking features. This results in an estimated 20,000 records/user and 8,333 transactions/user/month, which populate this alternative comparison:

Giving the benefit of the doubt to Database.com, the above still looks to me like more than a 50:1 cost advantage for SQL Azure in the range of 100 to 500 users. You can open a SkyDrive copy of the second comparison here.
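The per-user figures above are straightforward division, and they can be reproduced with a few lines of Python. This is a minimal sketch: the totals are the Salesforce.com numbers quoted above, the 2x multiplier is the post's estimate for new Database.com users rather than measured data, and the function name is hypothetical.

```python
# Salesforce.com totals quoted above.
RECORDS = 20e9                   # records in the cloud database this year
TRANSACTIONS_PER_QUARTER = 25e9  # transactions last quarter
USERS = 2e6                      # users served
NEW_USER_MULTIPLIER = 2          # the post's assumption for new Database.com users


def per_user_estimates(multiplier: float = 1.0) -> tuple[int, int]:
    """Return (records per user, transactions per user per month), rounded."""
    records = RECORDS / USERS * multiplier
    # Divide by 3 to turn the quarterly count into a monthly rate.
    tx_per_month = TRANSACTIONS_PER_QUARTER / USERS / 3 * multiplier
    return round(records), round(tx_per_month)


print(per_user_estimates())                     # (10000, 4167): average Salesforce.com user
print(per_user_estimates(NEW_USER_MULTIPLIER))  # (20000, 8333): estimated new Database.com user
```

Rounding only at the end is why the doubled estimate is 8,333 rather than 2 × 4,167 = 8,334.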

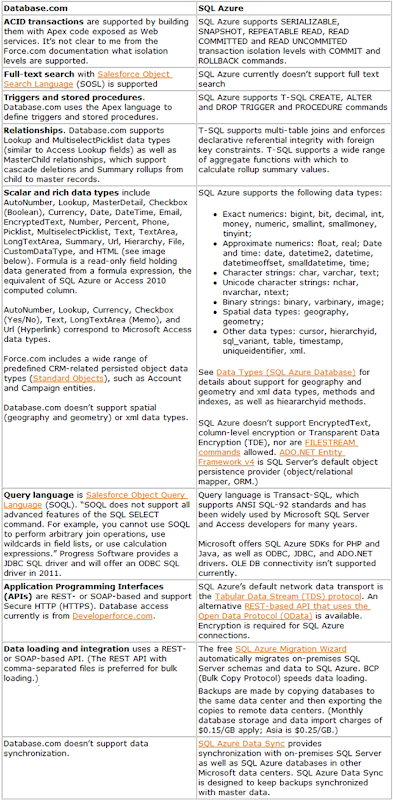

My (@rogerjenn) updated Preliminary Comparison of Database.com and SQL Azure Features and Capabilities of 12/10/2010 includes a comparison of:

… Relational Features and Capabilities

You can read more detailed information about Force.com’s current database features in An Introduction to the Force.com Database. The Multitenant Architecture of Database.com describes Database.com’s design and implementation.

Database.com’s support for encrypted text and full-text search might prompt the SQL Azure team to allocate more resources to implementing those features. I’ve been requesting full-text search since it was promised for SQL Azure’s predecessor, SQL Server Data Services, in late 2008. I requested Transparent Data Encryption (TDE) immediately after the transition from SQL Data Services to SQL Azure. …

Srinivasan Sundara Rajan posted Microsoft SQL Azure Reporting: Enterprise Reporting on Cloud as PaaS on 12/10/2010:

Enterprise application needs more or less boil down to reporting needs, because reporting drives business users' acceptance of an enterprise application. There are different kinds of reporting needs:

- Ad hoc reporting: Reporting-tool support for creating simple queries and reports. Casual or novice users use these kinds of tools to create self-serve reports that answer simple business questions. With minimal steps, you can view data, author basic reports, change the report layout, filter and sort data, add formatting, and create charts.

- Custom reporting: Beyond ad hoc reporting, most enterprises need sophisticated, multipage, dynamically altered reports for high-value items such as organizational dashboards and legal documents. Enterprise reporting also needs facilities to publish and schedule reports and to make the output available securely to multiple users in multiple formats.

As with other enterprise needs, reporting delivered as SaaS for ad hoc reporting and as PaaS for custom reporting will be a major need of future enterprises.

Microsoft SQL Azure Reporting

SQL Azure Reporting enables developers to enhance their applications by embedding cloud-based reports on information stored in a SQL Azure database. Developers can author reports using familiar SQL Server Reporting Services tools and then use these reports in their applications, which may be on-premises or in the cloud. SQL Azure Reporting is not yet commercially available, but you can register to be invited to the community technology preview (CTP).

Currently, SQL Azure Reporting can connect only to SQL Azure databases.

Despite these limitations, once it becomes commercially available this platform will be a very valuable cloud platform that delivers rich reporting without requiring you to maintain an infrastructure.

Enterprise Reporting Features Likely to Be Available in SQL Azure Reporting

The following features are likely to be available in SQL Azure Reporting, based on an analysis of its on-premises counterpart, SQL Server Reporting Services (SSRS):

- SSRS reports use a multitiered reporting model, and Reporting Services includes rendering extensions

- Rendering Extensions Supported in SSRS 2008: HTML, CSV, XML, PDF, Excel, Word

- SSRS supports the following types of reports:

- Out of the Box Reports

- Server Based Reports

- User Defined Ad hoc Reports

- Reports integrated into Web applications (ASP.NET)

- Reports integrated into SharePoint portals using Web Parts

- Custom-built application features that render reports using programming code (C#)

- The following features also exist as part of Report Builder:

- Filters

- Sort order

- Adding calculations with expressions

- Formatting considerations

- Pagination and printing considerations

- End-user perspective:

- The Report Builder 2.0 designer looks much like the Office 2007 applications: large icon buttons are arranged on ribbons that you access by using tabs

- You set properties for different objects in the designer using three different methods

Dynamic formatting:

- Dynamic grouping based on expressions

- Dynamic Fields & Columns

- Dynamically hiding and showing a row or column

- Links and drill-through reports are powerful features that enable a text box or image to be used as a link to another report by passing parameter values to the target report
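In on-premises SSRS, drill-through links and the choice of rendering extension both come down to parameters passed to the report server, and URL access exposes them directly. The Python sketch below builds such a URL. It assumes, without confirmation from the CTP, that SQL Azure Reporting will accept the same URL-access syntax (`rs:Command`, `rs:Format`) as SSRS 2008; the server name, report path and `OrderID` parameter are hypothetical.

```python
from urllib.parse import quote

# rs:Format names used by SSRS 2008 URL access (assumed to carry over to the cloud).
RENDER_FORMATS = {"html": "HTML4.0", "csv": "CSV", "xml": "XML",
                  "pdf": "PDF", "excel": "EXCEL", "word": "WORD"}


def report_url(server: str, report_path: str, fmt: str = "html", **params: str) -> str:
    """Return a URL that renders report_path in the requested format,
    passing report parameters the way a drill-through link does."""
    parts = [f"{server.rstrip('/')}/ReportServer?{quote(report_path)}",
             "rs:Command=Render",
             f"rs:Format={RENDER_FORMATS[fmt]}"]
    # Each report parameter becomes a percent-encoded name=value pair.
    parts += [f"{quote(name)}={quote(str(value))}" for name, value in params.items()]
    return "&".join(parts)
```

For example, `report_url("https://reports.example.com", "/Sales/Order Detail", "pdf", OrderID="42")` returns `https://reports.example.com/ReportServer?/Sales/Order%20Detail&rs:Command=Render&rs:Format=PDF&OrderID=42`.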

Summary

With the features described above for both ad hoc and custom reporting, SQL Azure Reporting, which will be available shortly, is positioned to lead reporting platforms in the cloud. There are drawbacks, such as its current ability to connect only to SQL Azure databases, but it will provide a viable alternative for enterprise reporting needs. The Windows Azure platform is expected to scale Reporting instances up and down automatically, providing dynamic elasticity along with performance and fault tolerance.

This will also ensure that enterprises need not spend effort and money on laborious upgrades to the reporting platform, which generally amount to rewriting all or part of the reporting application.

Microsoft execs said at the company's October 2010 PDC that Microsoft will deliver the final version of SQL Azure Reporting Services in the first half of 2011.

<Return to section navigation list>

Marketplace DataMarket and OData

Alex James (@adjames) presented a 2:06:14 OData - Open Data for the Open Web video presentation to Microsoft Research on 12/9/2010:

There is a vast amount of data available today and data is now being collected and stored at a rate never seen before. Much, if not most, of this data, however, is locked into specific applications or formats and difficult to access or to integrate into new uses.

The Open Data Protocol (OData) is a web protocol for querying and updating data that provides a way to unlock your data and free it from silos that exist in applications today. OData is being used to expose and access information from a variety of sources including, but not limited to, relational databases, file systems, content management systems and traditional websites.

Join us in this tutorial to learn how OData can enable a new level of data integration and interoperability across a broad range of clients, servers, services, and tools. Bring your laptop and you will have a chance to work OData into your own projects on whatever platform you choose.

This might be a new record for length of an OData presentation.
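OData queries are plain HTTP GETs against a resource path, refined with system query options such as `$filter`, `$select`, `$orderby`, `$top` and `$skip`. A minimal Python sketch of composing such a URL follows; the service root and the `Products` entity set are hypothetical placeholders, and a given service may not support every option.

```python
from typing import Optional, Sequence
from urllib.parse import quote, urlencode


def odata_query(service_root: str, entity_set: str, *,
                filter_: Optional[str] = None,
                select: Optional[Sequence[str]] = None,
                orderby: Optional[str] = None,
                top: Optional[int] = None,
                skip: Optional[int] = None) -> str:
    """Compose an OData query URL from the standard system query options."""
    options = {}
    if filter_ is not None:
        options["$filter"] = filter_          # e.g. "Price gt 10"
    if select is not None:
        options["$select"] = ",".join(select)
    if orderby is not None:
        options["$orderby"] = orderby         # e.g. "Price desc"
    if top is not None:
        options["$top"] = top
    if skip is not None:
        options["$skip"] = skip
    url = f"{service_root.rstrip('/')}/{entity_set}"
    if not options:
        return url
    # Percent-encode values (spaces become %20) but leave '$' and ',' readable.
    return f"{url}?{urlencode(options, safe='$,', quote_via=quote)}"
```

With the hypothetical root `https://example.com/odata.svc`, asking for the five most expensive products priced over 10 yields `https://example.com/odata.svc/Products?$filter=Price%20gt%2010&$select=Name,Price&$orderby=Price%20desc&$top=5`.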

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

Shashi Ranjan posted Integrating Microsoft Dynamics CRM via App Fabric (using SAML token) to the Microsoft Dynamics CRM blog on 12/10/2010:

In an earlier article called Integrating Microsoft Dynamics CRM via App Fabric, we saw how we can integrate Microsoft Dynamics CRM 2011 with an external application via the Windows Azure platform App Fabric. We converted a classical pull model of integration to a more efficient push model. The data was queried only when needed.

I would like to draw your attention to the mechanism that was employed to authenticate with the Access Control Service (ACS). We used the management key approach, which implicitly assumed that the key could be shared between the CRM system and the owner of the syncing module. In many integration scenarios, however, the CRM system and the external application (including the syncing module) are not owned by the same business, and sharing the ACS account’s management key is not possible.

Editor’s Note: For more overview material on AppFabric’s ACS there is an excellent article here: Access Control in the Cloud: Windows Azure AppFabric’s ACS.

Consider the scenario where an ISV has set up an endpoint on AppFabric to which it wants its customers' CRM systems to post data so that it can provide the appropriate service. The ISV owns the Azure account and the rules on the ACS. It needs to enable its customers' CRM systems to post data to its endpoint, and it also needs to be able to easily control and filter who is allowed to do so.

ACS supports authentication with SAML tokens, which is a good fit for our needs. Our goal is to allow the CRM system to authenticate with a SAML token and to allow the ISV to configure rules in ACS based on the token issuer's signature.

We start by procuring an X.509 certificate; a self-signed certificate also works. Generate both the private (.pfx) and public (.cer) parts of the certificate. Because the plug-in runs asynchronously, add the .pfx certificate to the certificate store of the Async server (the machine that runs the CRM asynchronous service) under Computer account -> Local computer -> Personal -> Certificates.

Update the RetrieveAuthBehavior() method shown in the earlier blog sample with the code below.

```csharp
// Using directives the snippet needs (assumes WIF 1.0, AppFabric SDK v1.0 and the CRM 2011 SDK).
using System;
using System.Globalization;
using System.IO;
using System.Security.Cryptography.X509Certificates;
using System.Text;
using System.Xml;
using Microsoft.IdentityModel.SecurityTokenService; // X509SigningCredentials
using Microsoft.IdentityModel.Tokens.Saml2;         // Saml2Assertion and related types
using Microsoft.ServiceBus;                          // TransportClientEndpointBehavior, ServiceBusEnvironment
using Microsoft.Xrm.Sdk;                             // InvalidPluginExecutionException

// SolutionName and RetrieveCertificate() come from the earlier blog sample.
private TransportClientEndpointBehavior RetrieveAuthBehavior()
{
    // Authenticate to the Service Bus with a SAML token instead of the management key.
    TransportClientEndpointBehavior behavior = new TransportClientEndpointBehavior();
    behavior.CredentialType = TransportClientCredentialType.Saml;
    behavior.Credentials.Saml.SamlToken = GetTokenString();
    return behavior;
}

private string GetTokenString()
{
    // Generate the SAML assertion; the name identifier is the issuer name.
    string issuerName = "localhost";
    Saml2NameIdentifier saml2NameIdentifier = new Saml2NameIdentifier(issuerName);
    Saml2Assertion saml2Assertion = new Saml2Assertion(saml2NameIdentifier);

    // Restrict the audience to the ACS URI and limit the token's validity window.
    Uri acsScope = ServiceBusEnvironment.CreateAccessControlUri(SolutionName);
    saml2Assertion.Conditions = new Saml2Conditions();
    saml2Assertion.Conditions.AudienceRestrictions.Add(new Saml2AudienceRestriction(acsScope));
    saml2Assertion.Conditions.NotOnOrAfter = DateTime.UtcNow.AddHours(1);
    saml2Assertion.Conditions.NotBefore = DateTime.UtcNow.AddHours(-1);

    // Sign the assertion with the certificate installed in LocalMachine\My.
    X509Certificate2 localCert = RetrieveCertificate(StoreLocation.LocalMachine, StoreName.My,
        X509FindType.FindBySubjectName, issuerName);
    if (!localCert.HasPrivateKey)
    {
        throw new InvalidPluginExecutionException("Certificate should have private key.");
    }
    saml2Assertion.SigningCredentials = new X509SigningCredentials(localCert);

    // Add organization assertion.
    saml2Assertion.Statements.Add(new Saml2AttributeStatement(
        new Saml2Attribute("http://schemas.microsoft.com/crm/2007/Claims", "Org1")));

    // The submitter should always be a bearer.
    saml2Assertion.Subject = new Saml2Subject(
        new Saml2SubjectConfirmation(Saml2Constants.ConfirmationMethods.Bearer));

    // Wrap it into a security token.
    Saml2SecurityTokenHandler tokenHandler = new Saml2SecurityTokenHandler();
    Saml2SecurityToken securityToken = new Saml2SecurityToken(saml2Assertion);

    // Serialize the security token; disposing the writer flushes it to sb.
    StringBuilder sb = new StringBuilder();
    using (XmlWriter writer = XmlWriter.Create(new StringWriter(sb, CultureInfo.InvariantCulture)))
    {
        tokenHandler.WriteToken(writer, securityToken);
    }
    return sb.ToString();
}
```

The code generates a self-signed token with which to authenticate to AppFabric. We can add any claims to the generated token; for example, the organization claim added above is quite useful.
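The code above attaches Conditions to the assertion: an audience restriction naming the ACS URI, plus a validity window running from one hour before to one hour after the token is generated (the backdated NotBefore presumably tolerates clock skew between servers). A minimal Python sketch of how a relying party evaluates such conditions under SAML 2.0 rules (NotBefore inclusive, NotOnOrAfter exclusive) follows; it is not ACS's actual implementation, and the ACS URI is a hypothetical placeholder.

```python
from datetime import datetime, timedelta, timezone


def saml_conditions_ok(now: datetime, not_before: datetime, not_on_or_after: datetime,
                       audiences: list[str], expected_audience: str) -> bool:
    """SAML 2.0 semantics: NotBefore is inclusive, NotOnOrAfter is exclusive,
    and the recipient must be named in an AudienceRestriction."""
    return not_before <= now < not_on_or_after and expected_audience in audiences


# Mirror the plug-in code: a window of one hour either side of the issue time.
issued = datetime(2010, 12, 10, 12, 0, tzinfo=timezone.utc)
window = (issued - timedelta(hours=1), issued + timedelta(hours=1))
ACS_URI = "https://example-sb.accesscontrol.windows.net/"  # hypothetical namespace

print(saml_conditions_ok(issued, *window, [ACS_URI], ACS_URI))     # True
print(saml_conditions_ok(window[1], *window, [ACS_URI], ACS_URI))  # False: expired
```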

Configuring new rules in AppFabric

Since we are authenticating with a signed SAML token, we need to add the issuer and rules to ACS so that it recognizes the token and issues a “Send” claim in response. I am using acm.exe, which ships with the AppFabric SDK, to configure rules in ACS.

First create the issuer entry in ACS-SB.

>Acm.exe create issuer -name:LocalhostIssuer -issuername:localhost -certfile:localhost.cer -algorithm:X509

Object created successfully (ID:'iss_334389048b872a533002b34d73f8c29fd09efc50')

Next, retrieve the base scope of the Service Bus:

>Acm.exe getall scope

Use the scope id and issuer id to create the following rule.

>Acm.exe create rule -scopeid:scp_b693af91ede5d4767c56ef3df8de8548784e51cb -inclaimissuerid:iss_334389048b872a533002b34d73f8c29fd09efc50 -inclaimtype:Issuer -inclaimvalue:localhost -outclaimtype:net.windows.servicebus.action -outclaimvalue:Send -name:RuleSend

The rule says to output a “Send” claim when the input claim (any claim) is signed by the specified issuer.

With the above rule in place, any organization in the customer’s CRM system will be allowed to post. If the CRM system is multitenant, i.e. has multiple organizations, we can make the rule more granular to allow only certain organizations.

A rule using the organization claim:

>Acm.exe create rule -scopeid:scp_b693af91ede5d4767c56ef3df8de8548784e51cb -inclaimissuerid:iss_334389048b872a533002b34d73f8c29fd09efc50 -inclaimtype:http://schemas.microsoft.com/crm/2007/Claims -inclaimvalue:Org1 -outclaimtype:net.windows.servicebus.action -outclaimvalue:Send -name:RuleOrgSend
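To make the two rules' semantics concrete, the following Python sketch models ACS rule matching as a simple lookup: a rule fires when an input claim's issuer, type and value all match, and it emits the configured output claim. The modeling choices are assumptions, not ACS internals; in particular, the sketch treats a token signed by the registered issuer as carrying an implicit `Issuer` claim whose value is the issuer name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    issuer_id: str  # ACS issuer whose certificate signed the token
    type: str
    value: str


@dataclass(frozen=True)
class Rule:
    in_issuer_id: str
    in_type: str
    in_value: str
    out_type: str
    out_value: str


def evaluate(token_claims: list[Claim], rules: list[Rule]) -> set[tuple[str, str]]:
    """Return the (type, value) output claims produced by every matching rule."""
    return {(r.out_type, r.out_value)
            for r in rules for c in token_claims
            if (c.issuer_id, c.type, c.value) == (r.in_issuer_id, r.in_type, r.in_value)}


ISS = "iss_334389048b872a533002b34d73f8c29fd09efc50"
ORG = "http://schemas.microsoft.com/crm/2007/Claims"
SEND = ("net.windows.servicebus.action", "Send")
RULE_SEND = Rule(ISS, "Issuer", "localhost", *SEND)  # models RuleSend
RULE_ORG_SEND = Rule(ISS, ORG, "Org1", *SEND)        # models RuleOrgSend


def token(org: str, issuer_id: str = ISS, issuer_name: str = "localhost") -> list[Claim]:
    # Modeling assumption: an implicit Issuer claim plus the organization claim.
    return [Claim(issuer_id, "Issuer", issuer_name), Claim(issuer_id, ORG, org)]
```

Under this model, RuleSend admits a token from any organization signed by the registered issuer, while RuleOrgSend admits only Org1, matching the behavior described above.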

Wade Wegner (@wadewegner) posted a reminder about his Article: Re-Introducing the Windows Azure AppFabric Access Control Service (co-authored with @vibronet) in MSDN Magazine’s 12/2010 issue:

I had the great pleasure of co-authoring an article with my teammate Vittorio Bertocci on the September LABS release of the Windows Azure AppFabric Access Control. This article, entitled Re-Introducing the Windows Azure AppFabric Access Control Service, walks you through the process of authenticating and authorizing users on your Web site by leveraging existing identity providers such as Windows Live ID, Facebook, Yahoo!, and Google. Specifically, this article covers:

Outsourcing Authentication of a Web Site to the Access Control Service (ACS)

- Creating the Initial Visual Studio Solution

- Configuring an ACS Project

- Choosing the Identity Providers You Want

- Getting the ACS to Recognize Your Web Site

- Adding Rules

- Collecting the WS-Federation Metadata Address

- Configuring the Web Site to Use the ACS

- Testing the Authentication Flow

- ACS: Structure and Features

Sounds like a lot, but hopefully you will find the article straightforward and helpful.

Bill Zack posted BizTalk–The Road Ahead to his Architecture & Stuff MSDN blog on 12/8/2010:

If you are an ISV concerned with the long-term future of BizTalk you should be aware of the BizTalk Roadmap which charts the future of the product.

The future for BizTalk holds plans for achieving more On-Premise Server and Cloud Service Symmetry. This symmetry will facilitate the connection from on-premise applications to cloud services on the Windows Azure platform and from cloud services to on-premise applications, including the option to deploy some integration services as cloud services on the Windows Azure platform.

In the releases beyond 2010, BizTalk Server will continue to be Microsoft’s Integration Server, further facilitating the implementation of solutions such as Enterprise Application Integration (EAI), Enterprise Service Bus (ESB) and Business to Business (B2B) Integration. Efficiently managing large scale deployments and ensuring business continuity for mission-critical workloads is going to continue to be crucial for EAI, ESB and B2B solutions.

We are planning to invest in the following main areas:

Deep Microsoft Application Platform Alignment

Enterprise Connectivity for Windows Server AppFabric

On-Premise Server and Cloud Service Symmetry

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Windows Azure Team reported Microsoft Shares Cloud Technology with Top Australian Research Organizations in a 12/10/2010 press release:

Microsoft has announced new partnerships with three of Australia's top research organisations as part of the company's Global Cloud Research Engagement Initiative launched earlier this year. Partnerships with National ICT Australia (NICTA), The Australian National University (ANU), and the Commonwealth Scientific and Industrial Research Organisation (CSIRO) will give scientific researchers across the continent access to advanced client plus cloud computing resources and technical support.

These grants will provide researchers in these organizations with three years of free access to the Windows Azure platform, as well as access to technical support and client tools from Microsoft. This will enable researchers to easily access the power of the cloud from their desktop to explore a range of topics including the analysis of online social networks, a cloud-based geophysical imaging platform, computational chemistry and other eScience applications.

This program will also help Australia's university research infrastructure better align with recommendations of Cloud computing: opportunities and challenges for Australia, a study by the Academy of Technological Sciences and Engineering.

Read the full press release to learn more about how each of these organizations will benefit from these grants.

Earlier this year, Microsoft announced similar partnerships with the National Science Foundation (NSF) in the U.S., the National Institute of Informatics (NII) in Japan and in Europe with the European Commission's VENUS-C project, INRIA in France and the University of Nottingham in the U.K.

These grants are related to Microsoft's efforts to bring technical computing to the mainstream with Windows Azure. To learn more about technical computing in the cloud, read this recent blog post by Bill Hilf, general manager, Technical Computing, Microsoft.

Chris J. T. Auld described what you need to get ready for Windows Azure development in his Getting Started with the Windows Azure SDK v1.3 post of 12/9/2010:

Had some questions in my Windows Azure training course here in Melbourne as to what resources need to be installed to get going with Windows Azure Development.

So, herewith my guide (links) of what you need.

- .NET Framework 4.0 (Windows Update)

- Visual Studio 2010 (Trial here, get Ultimate so you have Intellitrace)

- ASP.NET MVC 2.0 (here)

- Windows Powershell (Get Windows 7 or Windows Server 2008 R2)

- Microsoft Internet Information Services 7 (Get Windows 7 or Windows Server 2008 R2)

- Windows Azure Tools for Microsoft Visual Studio 1.3 (here)

- Windows Azure platform AppFabric SDK V1.0 - October Update (here)

- Windows Azure platform AppFabric SDK V2.0 CTP - October Update (here)

- Microsoft SQL Server Express 2008 (or later) (http://www.microsoft.com/express/Database/InstallOptions.aspx; get the installer with Management Tools)

- SQL Server Management Studio 2008 R2 Express Edition (see above)

- Microsoft Windows Identity Foundation Runtime (here)

- Microsoft Windows Identity Foundation SDK (as above)

The Windows Azure Team posted Real World Windows Azure: Interview with Kevin Lam, President, IMPACTA on 12/9/2010:

The Real World Windows Azure series spoke to Kevin Lam, the Founder and President of IMPACTA, about using the Windows Azure platform to deliver LOCKBOX SFT, a cloud-based, fully managed secure file transfer (SFT) service for the company's customers:

MSDN: Can you give us a quick summary of what IMPACTA does and who you serve?

Lam: We help companies assess the security of their information systems, find potential weaknesses, and then address those issues. Then we've taken the lessons we learned from dealing with our customers' security threats to develop custom technology solutions that can help organizations address common security problems and cope with emerging threats.

MSDN: Was there a particular challenge you were trying to overcome that led you to use the Windows Azure platform?

Lam: Many of our customers were just transferring their sensitive files with email and FTP web services. So their data was being potentially exposed to malicious hackers, identity thieves, and other online threats. But most of the available solutions required firms to purchase, install, and manage expensive hardware and software. The entry point for some solutions can be more than U.S.$24,000, plus we could see first-hand the challenges our customers faced trying to manage their growing infrastructures.

We wanted to develop a competitively priced SFT solution, but we were a startup, and acquiring the data center space and the array of powerful servers we would need just wasn't feasible. We thought we could use cloud computing to overcome the existing cost barriers and provide our customers with a higher level of protection. Basically, when we looked at the costs, and the pain our customers were going through, and then saw what we could do with cloud computing, bringing an on-premises solution to market was just not compelling for us.

MSDN: Can you describe the solution that you developed with Windows Azure?

Lam: In July 2010, we introduced LOCKBOX SFT, a fully managed SFT service built on Windows Azure, the latest Microsoft .NET Framework, the ASP.NET web application framework, Windows Communication Foundation, Internet Information Services (IIS) 7.0, and Microsoft SQL Server 2008. As the LOCKBOX SFT database grows, we will transfer it to the Microsoft SQL Azure self-managed database.

To use LOCKBOX SFT, companies only need Internet access and a web browser, and they can subscribe to the service online in just minutes, with no setup fee to pay and no hardware or software to buy. When users log on, they can monitor an inbox and a sent folder, or click a tab to send a new data package. When the sender clicks send, LOCKBOX SFT encrypts the data, transfers it to Windows Azure for storage, and notifies the recipient by email that they have received an encrypted data package.

MSDN: How will using Windows Azure help IMPACTA deliver more advanced solutions to its customers?

Lam: With Windows Azure we could keep our operation costs low enough to provide customers with enterprise-level reliability and data protection in a solution that's affordable for the non-enterprise. Any type of organization can use LOCKBOX SFT to store and transfer sensitive data while protecting it from online threats and from physical threats such as theft or damage of hardware. And we can use Windows Azure to deliver the service our customers need when they need it, without making them pay for computing resources they're not using.

In 2010, IMPACTA introduced LOCKBOX SFT, built on Windows Azure from Microsoft. To send a data package safeguarded from malicious software, identity thieves, and other online threats, registered LOCKBOX SFT users simply log on to the application interface to create a package name, select files for the package, and specify a recipient.

Read the full story at: http://www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000008570

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

<Return to section navigation list>

Visual Studio LightSwitch

No significant articles today.


<Return to section navigation list>

Windows Azure Infrastructure

David Linthicum asserted “With TV commercials focused on the consumer, Redmond's simplistic definition is obscuring the cloud's full value” in a deck for his How Microsoft is hijacking the cloud post of 12/9/2010 to InfoWorld’s Cloud Computing blog:

We've all seen the commercials. Some problem is occurring, and the exuberant Windows 7 user shouts, "To the cloud!" From there, he or she accesses services on the (presumably) Microsoft cloud to render photos with the family or share documents with others at a startup company. While this commercial campaign may seem like innocuous brand marketing, it is building the perception among the masses that Microsoft invented the cloud.

By now, nearly everyone has heard of the cloud, but most people have no idea what it really is. Case in point: The media's reaction to Amazon.com expelling WikiLeaks from its cloud file-serving service. Over and over, I heard puzzled reporters in the popular press who didn't understand what a bookseller had to do with WikiLeaks document hosting.

Microsoft, though traditionally bad at marketing, is perhaps on to something here. It saw a gap between the popularity of the term "the cloud" and the rank and file's understanding of the cloud, and the company took the opportunity to fill in the gap. Microsoft has defined it in easy-to-understand examples.

The downside is that the general public now considers cloud computing as simple document sharing and photo processing, and it has no idea that the cloud also encompasses elastic, on-demand enterprise-grade storage and compute. Indeed, I've been in a few meetings where businesspeople have learned the Microsoft commercial definition and tell me, when I explain why they should use the cloud, that "we don't need to share photos."

Of course, Microsoft is offering more than photo and document sharing, as a look at the beta version of Office 365 attests. But Microsoft is smart to start with the simple and personal for this commercial campaign. That could reap some real benefits for Microsoft, though it could also lead to more confusion, as an oversimplified definition of cloud computing obscures all its potential.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

Patrick Joubert (@Patricksclouds) posted Session – Implement your Private Cloud with Microsoft in English and French on 12/8/2010:

What about moving to a private cloud with Microsoft?

Microsoft’s journey to the private cloud is split into five steps:

1- Standardize on scalable servers,

2- Improve your skills in the virtualization area,

3- Standardize your administration on a unified admin platform,

4- Lean your processes,

5- Architect your resources in pools.

Let’s detail each part.

1- Standardize on scalable servers

Microsoft built strong partnerships with Fujitsu, IBM, NEC, Dell, Hitachi and HP.

Cisco will soon follow with UCS. Microsoft has a special site here.

2- Improve your skills in the virtualization area

The virtualization solution is Hyper-V.

Service Pack 1 adds two new features; the next version is tied to the Windows Server roadmap, and its release is estimated within 18 to 24 months (H2 2012). The service pack's new features are Dynamic Memory, for dynamic memory allocation to virtual machines, and RemoteFX, for improved VDI video. More details here.

3- Standardize your administration on a unified admin platform

Your standardized, unified administration will be based on:

- System Center Configuration Manager,

- System Center Virtual Machine Manager,

- System Center Operations Manager,

- System Center Data Protection Manager.

Each product is closely integrated with Hyper-V and with other Microsoft components, such as Active Directory.

4- Lean your processes

Your processes can be defined in the System Center tools. You first have to work on the service definition and then on the SLAs. Virtualization introduces new roles such as VM Admin. Within the defined processes, you can delegate different parts of the process: a VM Admin, Delegated Admin, Network Admin and Storage Admin can all be actors in the process.

A recent Microsoft acquisition will help you with run book automation:

Opalis will strengthen the automation side of the System Center suite.

5- Architect your resources in pools

Microsoft offers the Virtual Machine Manager Self-Service Portal (VMM SSP) for private cloud implementation. You can download it for free here.

It could be the first building block of your IaaS private cloud.

Microsoft’s partners can help you build your internal private cloud with professional services support: Microsoft Consulting Services with “DCS” and Avanade with “Storm”.

It was an interesting session. I would suggest three improvements:

- Before starting the session, check the attendees’ needs, concerns and issues. This could help highlight some key points better.

- Introduce a “question and share” segment to encourage more interaction with attendees.

- Unfortunately, the world does not use only Microsoft’s products. Interoperability is a key success factor for introducing Microsoft’s technology, so spend time on it.

Are you moving forward with your private cloud implementation with Microsoft?

Feel free to share with me!

<Return to section navigation list>

Cloud Security and Governance

Lydia Leong (@cloudpundit) posted Just enough privacy on 12/9/2010:

For a while now, I’ve been talking to Gartner clients about what concerns keep them off public cloud infrastructure, and the diminishing differences between private and public cloud from service providers. I’ve been testing a thesis with our clients for some time, and I’ve been talking to people here at Gartner’s data center conference about it, as well.

That thesis is this: People will share a data center network, as long as there is reasonable isolation of their traffic, and they are able to get private non-Internet connectivity, and there is a performance SLA. People will share storage, as long as there is reasonable assurance that nobody else can get at their data, which can be handled via encryption of the storage at rest and in flight, perhaps in conjunction with other logical separation mechanisms, and again, there needs to be a performance SLA. But people are worried about hypervisor security, and don’t want to share compute. Therefore, you can meet most requirements for private cloud functionality by offering temporarily dedicated compute resources.

Affinity rules in provisioning can address this very easily. Simply put, the service provider could potentially maintain a general pool of public cloud compute capacity, but set a rule for ‘pseudo-private cloud’ customers that says that if a VM is provisioned on a particular physical server for customer X, then that physical server can only be used to provision more VMs for customer X. (Once those VMs are de-provisioned, the hardware becomes part of the general pool again.) For a typical customer who has a reasonable number of VMs (most non-startups have dozens, usually hundreds, of VMs), the wasted capacity is minimal, especially if live VM migration techniques are used to optimize the utilization of the physical servers; therefore the additional price uplift for this should be modest.
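The affinity rule Leong describes can be sketched as a small placement routine. This is a minimal illustration, not any provider's scheduler: the `Host` and `AffinityPool` names, the fixed per-host VM slot count and the first-fit placement order are all assumptions made for the example, and it ignores the live migration she mentions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Host:
    name: str
    capacity: int                # VM slots per physical server (simplifying assumption)
    owner: Optional[str] = None  # customer the host is temporarily dedicated to, if any
    vms: list = field(default_factory=list)

    @property
    def free(self) -> int:
        return self.capacity - len(self.vms)


class AffinityPool:
    """Shared compute pool with a per-customer host-affinity rule (illustrative only)."""

    def __init__(self, hosts):
        self.hosts = hosts

    def provision(self, customer: str, vm: str, private: bool) -> str:
        if private:
            # First choice: a host already dedicated to this customer that still has room.
            for h in self.hosts:
                if h.owner == customer and h.free > 0:
                    h.vms.append((customer, vm))
                    return h.name
            # Otherwise claim a completely empty host from the general pool.
            for h in self.hosts:
                if h.owner is None and not h.vms:
                    h.owner = customer
                    h.vms.append((customer, vm))
                    return h.name
        else:
            # Ordinary public-cloud VMs may share any host that is not dedicated.
            for h in self.hosts:
                if h.owner is None and h.free > 0:
                    h.vms.append((customer, vm))
                    return h.name
        raise RuntimeError("no capacity available")

    def deprovision(self, customer: str, vm: str) -> None:
        for h in self.hosts:
            if (customer, vm) in h.vms:
                h.vms.remove((customer, vm))
                if not h.vms:
                    h.owner = None  # empty host returns to the general pool
                return
        raise KeyError(vm)

    def stranded_slots(self, customer: str) -> int:
        # Slots reserved for the customer but unused: the "wasted" capacity.
        return sum(h.free for h in self.hosts if h.owner == customer)
```

For example, with three hosts of four slots each, five pseudo-private VMs for customer X fill one host and claim a second, leaving three stranded slots; a public VM for another customer lands on the third host, and de-provisioning X's fifth VM returns the second host to the general pool.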

That gets you public cloud compute scale, while still assuaging customer fears about server security. (Interestingly, Amazon salespeople sometimes tell prospects that you can use Amazon as a private cloud — you just have to use only the largest instances, which eat the resources of the full physical server.)

Lydia Leong is an analyst at Gartner, where she covers Web hosting, colocation, content delivery networks, cloud computing, and other Internet infrastructure services.

Scott N. Godes posted My Chapter on Insurance Coverage for Cybersecurity and Intellectual Property Claims Now Available in the New Appleman Law of Liability Insurance Treatise on 12/8/2010:

Looking for a treatise on insurance coverage? How about one that has an entire chapter on insurance coverage for cybersecurity and intellectual property claims and risks?

Remember when I wrote that I had written a chapter on insurance coverage for cybersecurity and intellectual property claims for the New Appleman Law of Liability Insurance Treatise? Of course you do.

And you probably were wondering, “When will I be able to buy that treatise, so that I can have it on my bookshelf and refer to it regularly for all of my questions about insurance coverage for cybersecurity and intellectual property claims?!?” Well, here’s your answer. The treatise is available on the Lexis website. That’s right! Although you really will want to race right to Chapter 18 – Insurance Coverage for Intellectual Property and Cybersecurity Risks, so that you can read about insurance for data breaches, DDoS attacks, viruses, hackers, cybercrime, and IP losses, you’ll get the whole treatise, too. It’s a five-volume looseleaf set that gets updated with supplements.

So what are you waiting for? Click here to order your very own treatise.

Randy Sklar asked The Cloud – Will my data be safe? on 12/8/2010:

Once you put your corporate data in the hands of a cloud provider, you lose control over how much care is put into keeping the data secure and handled correctly. Here are some suggestions and ideas to help ensure that your data is handled carefully.

1. Personnel – Get as much information as you can about the people who are going to be handling your data. Ask about hiring procedures and whether thorough background checks are performed; maybe even interview some of the employees.

2. Compliance – Request a risk assessment or some other type of security assessment. If the provider won’t provide one, that is an indicator that this provider should only be used to host non-critical or non-sensitive data. In the end, you are responsible for the security and integrity of the data, not the provider.

3. Visit the site – Ask the provider for documentation stating where the data will be stored, and require that you be notified if it is going to be moved so you can decide whether you are comfortable with the change.

4. Data separation – The provider is obviously hosting data and applications for many other clients. It is important that your information is stored separately and securely from these other clients. You should be able to have your own virtual, or even physical, server dedicated to your use.

5. Recovery – A few years ago I was visiting a local data center where many local providers shared space from Cavalier Telephone to provide hosted services to their clients or for themselves. This space is called a bunker and is located right behind Cavalier Telephone here in Richmond, Va. As I walked down the aisle, I couldn’t believe how many tape backup systems I saw in the enclosures. There were other people there, and it seemed like most of them were there to change out tapes. Tapes! Are you kidding? Don’t they know how unreliable tape backup is and how long it takes to recover from a failure with tape? It is vital to ask about the recovery process: how much data could be lost, and how long the system will be down should it fail.

6. Business stability – Ask the provider to show you that they are going to be around. Ask for financial information, ask for references, do a credit check (this is very inexpensive) and see if this is a stable business. Ask them how you will get your data back if you decide to pull it out later, what is the procedure for this, how long will it take?

Take these measures to be sure that your company’s most valuable assets aren’t going to be at risk.

<Return to section navigation list>

Cloud Computing Events

Microsoft Worldwide Events reminded potential Windows Azure developers about the three-day Developing Cloud Applications with Windows Azure Jump Start on 12/15 through 12/17/2010:

- Event ID: 103247278

- Language(s): English.

- Product(s): Windows Azure.

- Audience(s): Pro Dev/Programmer.

Event Overview: Developing Cloud Applications with Windows Azure Jump Start (Session 1)

Whether you’re in the midst of moving applications to the cloud or just wondering how and why to leverage the Windows Azure Platform, Microsoft Learning is excited to enable you and your team. The Windows Azure Platform Jump Start provides live access to world-class instructors, hands-on labs, and samples that will help enable you and your team to build applications that utilize Windows Azure, SQL Azure & Windows Azure platform AppFabric.

Join us December 14-16, 2010 at 12pm PST for 12 hours of game-changing virtual classroom training tailored for development on the Windows Azure platform.


Please register separately for each session (course outline below):

- Session 1 | Dec. 15, 2010 12pm-4pm PST

- Session 2 | Dec. 16, 2010 12pm-4pm PST

- Session 3 | Dec. 17, 2010 12pm-4pm PST

Who is teaching?

- David S. Platt | A Microsoft Software Legend since 1992, David is a developer, consultant, author, and “.NET Professor” at Harvard University. He’s written nearly a dozen books on web services and various aspects of programming, and is an accomplished instructor and presenter.

- Manu Cohen-Yashar | Manu is a top global expert in Microsoft Cloud technologies, Distributed Systems and Application Security. Through his hands-on development with enterprise customers and his position as professor at SELA Technical College in Tel Aviv, Manu brings a unique pragmatic approach to software development. He is the author of many courses and is a regular top-rated speaker at Microsoft TechEd & Metro Early Adoption Programs.

What is a Jump Start?

Jump Start training classes are specifically designed for developers and product leaders who need to know how to best leverage a new Microsoft technology. Typically, they assume a certain level of development expertise and domain knowledge, so they move quickly and cover topics in a fashion that enables teams to recognize progress quickly. Not sure you’re ready? Prerequisite skills include experience with the .NET Framework and Visual Studio.

Who should attend?

- Application Developers

- Software Engineers

- Application & Solutions Architects

- Software Product Managers

- CTOs & Enterprise Architects

Course Requirements / Prerequisites

This course is designed for experienced developers and software architects as listed in the “Who should attend” section above. In order to effectively practice these new skills, you will need access to the Windows Azure platform to complete most of the labs. If you do not have access currently, here are your options:

- US-based students: Free Windows Azure Platform 30-day pass. No credit card required, just enter this promo code: MSL001

- MSDN Subscribers: Take advantage of the special offer for MSDN subscribers: http://msdn.microsoft.com/subscriptions/ee461076.aspx

- CPLS, Partners: Take advantage of this offer to try a base amount of the Windows Azure platform at no charge. https://partner.microsoft.com/40118760

- All others: Choose from the following offers (must have Windows Azure core): http://www.microsoft.com/windowsazure/offers

Here is a list of the software used in the Hands-on Labs: .NET Framework 4.0, Visual Studio 2010, ASP.NET MVC 2.0, Windows PowerShell, IIS7, Windows Azure Tools for Microsoft Visual Studio 1.3, Windows Azure platform AppFabric SDK V1.0 - July Update, Windows Azure platform AppFabric SDK V2.0 CTP - October Update, Microsoft SQL Server Express 2008 (or later), SQL Server Management Studio 2008 R2 Express Edition, Microsoft Windows Identity Foundation Runtime, Microsoft Windows Identity Foundation SDK.

Course Schedule | Windows Azure Platform Jump Start (Subject to Change)

Session One: Dec. 14, 2010 12pm-4pm PST

Windows Azure Platform Overview

Windows Azure Compute

Windows Azure Storage

Intro to SQL Azure

Intro to the Windows Azure AppFabric

Session Two: Dec. 15, 2010 12pm-4pm PST

Scalability, Caching and Elasticity

Asynchronous Workloads

Asynchronous Work and Azure Instance Control

Storage Strategies and Data Modeling

Exploring Windows Azure Storage

Session Three: Dec. 16, 2010 12pm-4pm PST

The Azure Platform Application Lifecycle

Deploying Applications in Windows Azure

Windows Azure Diagnostics

Building ASP.NET Applications for Windows Azure

Building Web Forms/MVC Apps with Windows Azure

AppFabric Deep Dive

Service Remoting with Windows AppFabric Service Bus

Introduction to the Azure Marketplace

Mithun Dhar reminded developers on 12/8/2010 about Redmond Startup Weekend…be sure to be there! on 12/10/2010:

Entrepreneurs:

If you are looking for a co-founder or a new hire, or have an idea you are kicking around, check out Startup Weekend. It’s being hosted at Microsoft in Redmond on December 10th-12th. This Startup Weekend is a Mobile and Gaming themed event, so if you have an idea brewing for a mobile app, new game or both, this is a great time to pitch it.

There are great prizes for teams including $15,000 in prototyping help from Microsoft.

We have seen several Startup Weekend teams go on to get funding, including FoodSpotting, MemoLane, Score.ly, and Seattle’s own Giant Thinkwell, so think big!

Friends of Microsoft get a discount; just use the code "microsoft". Hope to see you in Redmond!

Developers:

I wanted to invite you to the next Startup Weekend in Redmond, December 10th-12th. It's a great place to connect with developers, designers and marketers who want to work on cool projects or applications.

Redmond Startup Weekend is a Mobile and Gaming themed event so if you have an idea brewing for a mobile app, new game or both, this is a great time to pitch it and find some people who would love to work on it with you. You can find out more about the event at http://redmond.startupweekend.org and if you use the code "microsoft" you get a discount.

Note that we will be raffling off about three Windows Phone 7 devices at this event! We will also bring a few codes that waive your Marketplace fees. Among other things, we’ll have T-shirts, posters and a whole lot of developer love from Microsoft.

Tune in this Friday at Startup Weekend…

See you there!

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Jeff Barr (@jeffbarr) posted Amazon S3 - Object Size Limit Now 5 TB to his Amazon Web Services blog on 12/9/2010:

A number of our customers want to store very large files in Amazon S3 -- scientific or medical data, high resolution video content, backup files, and so forth. Until now, they have had to store and reference the files as separate chunks of 5 gigabytes (GB) or less. So, when a customer wanted to access a large file or share it with others, they would either have to use several URIs in Amazon S3 or stitch the file back together using an intermediate server or within an application.

No more.

We've raised the limit by three orders of magnitude. Individual Amazon S3 objects can now range in size from 1 byte all the way to 5 terabytes (TB). Now customers can store extremely large files as single objects, which greatly simplifies their storage experience. Amazon S3 does the bookkeeping behind the scenes for our customers, so you can now GET that large object just like you would any other Amazon S3 object.

In order to store larger objects you would use the new Multipart Upload API that I blogged about last month to upload the object in parts. This opens up some really interesting use cases. For example, you could stream terabytes of data off of a genomic sequencer as it is being created, store the final data set as a single object and then analyze any subset of the data in EC2 using a ranged GET. You could also use a cluster of EC2 Cluster GPU instances to render a number of frames of a movie in parallel, accumulating the frames in a single S3 object even though each one is of variable (and unknown at the start of rendering) size.
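A ranged GET is an ordinary HTTP GET carrying a `Range` header, whose byte positions are zero-based and inclusive. The helpers below are a minimal sketch that only builds header values; the chunk size and the `byte_ranges` and `subset_range` names are illustrative assumptions, and actually sending (and signing) the S3 requests is left out.

```python
def byte_ranges(object_size: int, chunk_size: int):
    """Yield HTTP Range header values that together cover an object of object_size bytes."""
    if object_size <= 0 or chunk_size <= 0:
        raise ValueError("sizes must be positive")
    for start in range(0, object_size, chunk_size):
        end = min(start + chunk_size, object_size) - 1  # Range end positions are inclusive
        yield f"bytes={start}-{end}"


def subset_range(offset: int, length: int) -> str:
    """Range header value for `length` bytes starting at `offset`, e.g. one slice of a data set."""
    if offset < 0 or length <= 0:
        raise ValueError("offset must be >= 0 and length > 0")
    return f"bytes={offset}-{offset + length - 1}"
```

For example, `byte_ranges(10, 4)` yields `bytes=0-3`, `bytes=4-7` and `bytes=8-9`; each such chunk of a large object could be fetched by a separate EC2 worker without downloading the whole object.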

The limit has already been raised, so the race is on to upload the first 5 terabyte object!

Was the “number of customers” = 1 = Netflix?

Werner Vogels (@werner) added background with Big Just Got Bigger - 5 Terabyte Object Support in Amazon S3 on 12/9/2010:

Today, Amazon S3 announced a new breakthrough in supporting customers with large files by increasing the maximum supported object size from 5 gigabytes to 5 terabytes. This allows customers to store and reference a large file as a single object instead of smaller 'chunks'. When combined with the Amazon S3 Multipart Upload release, this dramatically improves how customers upload, store and share large files on Amazon S3.

Who has files larger than 5GB?

Amazon S3 has always been a scalable, durable and available data repository for almost any customer workload. However, as use of the cloud has grown, so have the file sizes customers want to store in Amazon S3 as objects. This is especially true for customers managing HD video or data-intensive instruments such as genomic sequencers. For example, a 2-hour movie on Blu-ray can be 50 gigabytes. The same movie stored in an uncompressed 1080p HD format is around 1.5 terabytes.
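As a rough sanity check on the 1.5 TB figure, assume 8-bit RGB (3 bytes per pixel) at 30 frames per second for 7,200 seconds, ignoring audio and container overhead; these parameters are assumptions, not stated in the post:

```latex
\[
\begin{aligned}
\text{bytes per frame} &= 1920 \times 1080 \times 3 = 6\,220\,800 \\
\text{total} &= 6\,220\,800 \times 30\ \tfrac{\text{frames}}{\text{s}} \times 7200\ \text{s}
  = 1\,343\,692\,800\,000\ \text{B} \approx 1.34\ \text{TB}
\end{aligned}
\]
```

At 24 frames per second the same arithmetic gives about 1.07 TB, so the quoted figure is the right order of magnitude; either way it is roughly 21 to 27 times the 50 GB Blu-ray copy.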

By supporting such large object sizes, Amazon S3 better enables a variety of interesting big data use cases. For example, a movie studio can now store and manage their entire catalog of high definition origin files on Amazon S3 as individual objects. Any movie or collection of content could be easily pulled into Amazon EC2 for transcoding on demand and moved back into Amazon S3 for distribution through edge locations throughout the world with Amazon CloudFront. Or, BioPharma researchers and scientists can stream genomic sequencer data directly into Amazon S3, which frees up local resources and allows scientists to store, aggregate, and share human genomes as single objects in Amazon S3. Any researcher anywhere in the world then has access to a vast genomic data set with the on-demand compute power for analysis, such as Amazon EC2 Cluster GPU Instances, previously only available to the largest research institutions and companies.

Multipart Upload and moving large objects into Amazon S3

To make uploading large objects easier, Amazon S3 also recently announced Multipart Upload, which allows you to upload an object in parts. You can create parallel uploads to better utilize your available bandwidth and even stream data into Amazon S3 as it’s being created. Also, if a given upload runs into a networking issue, you only have to restart the part, not the entire object, allowing you to recover quickly from intermittent network errors.
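The "restart only the part" behavior can be sketched with a thread pool that retries each part independently. This is an illustration under stated assumptions, not the AWS SDK: `send_part` is a hypothetical callable standing in for whatever uploads one part and returns its ETag, and treating `ConnectionError` as the transient network failure is also an assumption.

```python
from concurrent.futures import ThreadPoolExecutor


def upload_parts(parts, send_part, max_attempts=3, workers=4):
    """Upload (part_number, data) pairs in parallel, retrying failed parts individually.

    send_part is a hypothetical callable (part_number, data) -> etag that raises
    ConnectionError on a transient network failure.
    Returns the ETags ordered by part number.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")

    def upload_one(part):
        number, data = part
        for attempt in range(1, max_attempts + 1):
            try:
                return number, send_part(number, data)
            except ConnectionError:
                if attempt == max_attempts:
                    raise  # give up on this part; the error surfaces to the caller

    # Each worker handles whole parts, so a retry re-sends one part, never the object.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        etags = dict(pool.map(upload_one, parts))
    return [etags[number] for number in sorted(etags)]
```

In S3's Multipart Upload API, the final "complete" request lists each part number with its ETag, which is why the sketch returns ETags in part-number order.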

Multipart Upload isn't just for customers with files larger than 5 gigabytes. With Multipart Upload, you can upload any object larger than 5 megabytes in parts. So, we expect customers with objects larger than 100 megabytes to extensively use Multipart Upload when moving their data into Amazon S3 for a faster, more flexible upload experience.
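Raising the object limit to 5 TB interacts with the limits on individual parts. The sketch below plans part byte ranges and finds the smallest whole-MiB part size that fits. The limits it encodes (parts of 5 MiB to 5 GiB except the last, at most 10,000 parts per upload, 5 TiB per object) are assumptions taken from the Amazon S3 Developer Guide rather than from the posts above, and the function names are illustrative.

```python
MiB = 1024 ** 2
GiB = 1024 ** 3
TiB = 1024 ** 4

# Assumed S3 limits (from the Amazon S3 Developer Guide, not from the post):
MIN_PART = 5 * MiB    # every part except the last must be at least this large
MAX_PART = 5 * GiB
MAX_PARTS = 10_000
MAX_OBJECT = 5 * TiB


def plan_parts(object_size: int, part_size: int):
    """Return (part_number, first_byte, last_byte) tuples for a multipart upload."""
    if not 0 < object_size <= MAX_OBJECT:
        raise ValueError("object size out of range")
    if not MIN_PART <= part_size <= MAX_PART:
        raise ValueError("part size out of range")
    count = -(-object_size // part_size)  # ceiling division
    if count > MAX_PARTS:
        raise ValueError(f"{count} parts exceeds the {MAX_PARTS}-part limit")
    return [
        (n + 1, n * part_size, min((n + 1) * part_size, object_size) - 1)
        for n in range(count)
    ]


def smallest_part_size(object_size: int) -> int:
    """Smallest whole-MiB part size that keeps the upload within MAX_PARTS parts."""
    if not 0 < object_size <= MAX_OBJECT:
        raise ValueError("object size out of range")
    needed = -(-object_size // MAX_PARTS)  # bytes per part, rounded up
    return max(MIN_PART, -(-needed // MiB) * MiB)
```

With these assumed limits, 10,000 parts of the 5 MiB minimum cover only about 49 GiB, so a 5 TiB object needs parts of at least 525 MiB, which works out to 9,987 parts.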

More information

For more information on Multipart Upload and managing large objects in Amazon S3, see Jeff Barr's blog posts on Amazon S3 Multipart Upload and Large Object Support as well as the Amazon S3 Developer Guide.

James Governor published Software CEOs talking fluent dork: its the developers, stupid. New Kingmakers to his Monkchips blog on 12/9/2010:

A little after I wrote up the news yesterday that salesforce.com was acquiring Heroku I came across a quote from Marc Benioff, tweeted by @timanderson:

“Ruby is the language of the cloud 2”

Computerweekly has a fuller version:

“Ruby is the language of Cloud 2 [applications for real-time mobile and social platforms]. Developers love Ruby. It’s a huge advancement,” said Benioff. “It offers rapid development, productive programming, mobile and social apps and massive scale. We could move the whole industry to Ruby on Rails.”

Apart from conflating Ruby on Rails the development framework with Ruby the programming language, something which many Ruby developers hate, but which it’s easy to slip into (so easy I slipped into it yesterday), Benioff was clearly not talking the language of the line of business, but rather of technology.

It seems to me we’re witnessing a sea-change at the moment. Many of the commentariat, industry analysts and so on, still seem to think the purchaser is king – that IT will simply be bypassed by savvy business users. But what about developers? We are currently emerging, blinking, from a dark period when many enterprises, and their advisors, truly believed we could just draw up a business process diagram and ship it offshore for coding by commodity developers. There was a Taylorist view of software development, an artefact of Waterfall development. But the model was broken. Innovation comes from code, and code comes from software developers. Coding is social. The idea developers are a commodity is as broken as the notion that a free market consists of independent actors making independent decisions that always lead to better outcomes. We are people, we are herds, we might as well be wildebeests. Well Benioff wants to be the alpha male, showing the way to the next watering hole. He isn’t alone.

I wrote a couple of months ago about a bravura performance from VMware CEO Paul Maritz. He said:

“Developers are moving to Django and Rails. Developers like to focus on what’s important to them. Open frameworks are the foundation for new enterprise application development going forward. By and large developers no longer write Windows or Linux apps. Rails developers don’t care about the OS – they’re more interested in data models and how to construct the UI.”

And this was an IT Operations audience!

The bottom line is this – if we’re really going to consumerise enterprise IT then that means developerising it (please excuse the hideous neologism). Just try and find me a successful consumer tech company which hasn’t fostered amazing, more often than not local, relationships with software developers. The old model was busted. Great user experiences generally come from developers and designers working closely together. That’s the bottom line. We all owe Apple a debt for helping business owners to understand the value of developers.

It seems to me that Benioff and Maritz are reflecting a powerful change. As I said yesterday:

Salesforce avoids IT to sell to the business, while Heroku avoids IT to sell to developers.

Powerful macro forces are at work, driven by the cloud, the appstore, open source, social media and so on. CEOs need to get a lot more developer savvy. It’s not enough to pimp your own apps and APIs, you need to embrace the herd. Maritz and Benioff are wise to that. Developerforce. Yup.

Disclosure: salesforce and vmware are both clients. Further notes: in RedMonk parlance dorks, geeks and nerds are good things. We are all about the makers.

Tim Anderson (@timanderson) posted The Salesforce.com platform play on 12/9/2010:

I’ve been mulling over the various Salesforce.com announcements here at Dreamforce, which taken together attempt to transition Salesforce.com from being a cloud CRM provider to becoming a cloud platform for generic applications. Of course this transition is not new – it began years ago with Force.com and the creation of the Apex language – and it might not be successful; but that is the aim, and this event is a pivotal moment with the announcement of database.com and the Heroku acquisition.

One thing I’ve found interesting is that Salesforce.com sees Microsoft Azure as its main competition in the cloud platform space – even though alternatives such as Google and Amazon are better known in this context. The reason is that Azure is perceived as an enterprise platform whereas Google and Amazon are seen more as commodity platforms. I’m not convinced that there is any technical justification for this view, but I can see that Salesforce.com is reassuringly corporate in its approach, and that customers seem generally satisfied with the support they receive, whereas this is often an issue with other cloud platforms. Salesforce.com is also more expensive of course.

The interesting twist here is that Heroku, which hosts Ruby applications, is more aligned with the Google/Amazon/open source community than with the Salesforce.com corporate culture, and this divide has been a topic of much debate here. Salesforce.com says it wants Heroku to continue running just as it has done, and that it will not interfere with its approach to pricing or the fact that it hosts on Amazon’s servers – though it may add other options. While I am sure this is the intention, the Heroku team is tiny compared to that of its acquirer, and some degree of change is inevitable.

The key thing from the point of view of Salesforce.com is that Heroku remains equally attractive to developers, small or large. While Force.com has not failed exactly, it has not succeeded in attracting the diversity of developers that the company must have hoped for. Note that the revenue of Salesforce.com remains 75%-80% from the CRM application, according to a briefing I had yesterday.

What is the benefit to Salesforce.com of hosting thousands of Ruby developers? If they remain on Heroku as it is at the moment, probably not that much – other than the kudos of supporting a cool development platform. But I’m guessing the company anticipates that a proportion of those developers will want to move to the next level, using database.com and taking advantage of its built-in security features which require user accounts on Force.com. Note that features such as row-level security only work if you use the Force.com user directory. Once customers take that step, they have a significant commitment to the platform and integrating with other Salesforce.com services such as Chatter for collaboration becomes easy.

The other angle on this is that the arrival of Heroku and VMForce gives existing Salesforce.com customers the ability to write applications in full Java or Ruby rather than being restricted to tools like Visualforce and the Apex language. Of course they could do this before by using the web services API and hosting applications elsewhere, but now they will be able to do this entirely on the Salesforce.com cloud platform.

That’s how the strategy looks to me; and it will be fascinating to look back a year from now and see how it has played out. While it makes some sense, I am not sure how readily typical Heroku customers will transition to database.com or the Force.com identity platform.

There is another way in which Salesforce.com could win. Heroku knows how to appeal to developers, and in theory has a lot to teach the company about evangelising its platform to a new community.


Jeff Barr (@jeffbarr) posted New AWS SDKs for Mobile Development (Android and iOS) to his Amazon Web Services blog on 12/8/2010:

We want to make it easier for developers to build AWS applications on mobile devices. Today we are introducing new SDKs for AWS development on devices that run Google’s Android and Apple’s iOS operating systems (iPhones, iPads, and the iPod Touch).

The AWS SDK for Android and the AWS SDK for iOS provide developers with access to storage (Amazon S3), database (Amazon SimpleDB), and messaging facilities (Amazon SQS and Amazon SNS). Both SDKs are lean and mean to allow you to make the most of the limited memory found on a mobile device. The libraries take care of a number of low-level concerns such as authentication, retrying of requests, and error handling.

Both of the SDKs include libraries, full documentation and some sample code. Both of the libraries are available in source form on GitHub (iOS and Android) and we will be more than happy to accept external contributions.

In order to allow code running on the mobile device to make calls directly to AWS, we've also outlined a number of ways to store and protect the AWS credentials needed to make the calls in our new Credential Management in Mobile Applications document.

I am really looking forward to seeing all sorts of cool and creative (not to mention useful) AWS-powered applications show up on mobile devices in the future. If you build such an app, feel free to leave a comment on this post or send email to awseditor@amazon.com.

Dennis Howlett answered “no” to his Salesforce's Database.com as a game changer now they've acquired Heroku? question posted to ZDNet’s Irregular Enterprise blog on 12/8/2010:

Yesterday, Salesforce.com pre-announced a cloud-based database. On its face this sounds exciting. Or at least you’d think that from the drooling Tweets I saw. As Marc Benioff, CEO of Salesforce, bounced around on stage extolling the ‘amazingness’ of Database.com, I just could not get excited about what to me seems like a breaking down of the Force.com platform into bite-sized pieces for individual consumption. If that’s what you want to do. For me, the more pressing problem comes in figuring out what a ‘record’ or ‘transaction’ means in Mr Benioff’s cloudy world, especially when the hook sounds like a generous free tier for three users plus up to 100,000 records and 50,000 transactions per month. More to the point, when does a user hit the trigger point for pay to use?

Bob Warfield has a partial answer:

Who will be the first to put up a service on Amazon AWS that delivers exactly the same function using MySQL and for a lot less money? You see, Salesforce’s initial pricing on the thing is their Achilles’ heel. I won’t even delve into their by-the-transaction and by-the-record pricing. $10 a month to authenticate the user is a deal killer. How can I afford to give up that much of my monthly SaaS billing just to authenticate? The answer is I won’t, but Salesforce won’t care, because they want bigger fish who will. I suspect their newfound Freemium interest for Chatter is just their discovery that they can’t get a per seat price for everything, or at least certainly not one as expensive as they’ve tried in the past.
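Howlett’s question of when a user hits the “pay to use” trigger can be framed as a simple check against the free allowances quoted above (3 users, 100,000 records and 50,000 transactions per month), plus the $10 per user per month that Warfield cites for authentication. This is a sketch only: it assumes the three free users are exempt from that charge, and because the posts give no overage prices for records or transactions, it only reports which limits are exceeded. The `Usage` type and function names are hypothetical.

```python
from dataclasses import dataclass

# Figures quoted in the posts above; overage prices for records and
# transactions are NOT given there, so they are not modeled here.
FREE_USERS = 3
FREE_RECORDS = 100_000
FREE_TRANSACTIONS = 50_000
AUTH_PRICE_PER_USER = 10  # USD per user per month, as cited by Bob Warfield


@dataclass
class Usage:
    users: int
    records: int
    transactions_per_month: int


def exceeded_limits(u: Usage) -> list:
    """Return the free-tier limits this usage exceeds (an empty list means still free)."""
    over = []
    if u.users > FREE_USERS:
        over.append("users")
    if u.records > FREE_RECORDS:
        over.append("records")
    if u.transactions_per_month > FREE_TRANSACTIONS:
        over.append("transactions")
    return over


def monthly_auth_cost(u: Usage) -> int:
    """Authentication charge only, assuming the first FREE_USERS users are exempt."""
    return max(0, u.users - FREE_USERS) * AUTH_PRICE_PER_USER
```

For instance, under these assumptions a SaaS application with 500 end users would pay $4,970 a month just to authenticate them, which is the cost Warfield calls a deal killer.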

I was more blunt about it: It won’t fly. Period. Such trifles aside, Phil Wainewright reckons Salesforce.com just steamrollered many of the situational app vendors:

Of course they have established customer bases who will remain loyal, and which they will continue to serve, for many years to come. But the impact of Database.com on their ability to attract new prospects must be highly damaging.

He backs up the contention with a comment from Matt Robinson, CEO of Rollbase:

“Regarding the impact of this on Rollbase and others like LongJump, we will likely feel it in the form of less prospects for our respective hosted offerings,” he told me. Indeed, the momentum of Force.com has already pushed Rollbase towards providing its platform as an installable package for use by ISVs or within individual enterprises.

OK - so Phil caught one CEO with his eyeballs caught in the Salesforce headlights. That does not make a wholly convincing case.

Despite Phil’s reporting from a visit to the oracle (sic) I think he’s way off base. Bob Warfield offers the open source card as evidence that Database.com is not the killer Phil believes. I’ll offer different fare: the millions of Microsoft and SAP developers who are not going to abandon what they know (and make plenty of money upon) any time soon. While I am willing to bet that as outlined below, many of those will take a sniff at Database.com, few will bite in the short to medium term unless they see significant development advantage in going the Database.com route as tied to Salesforce. Right now, the cost model alone won’t cut it.

It took an early evening shared beer with Parker Harris, Salesforce.com co-founder, to get a bead on what this means to the company.

When you strip away all the hype and attempts at nuancing this announcement it’s all a bit prosaic but with a clear intent. Mr Harris says that internally to Salesforce.com, this is about its own people finding a way to connect to the outside world of developers: “We’ve got this Force.com platform and that’s amazing but what we really need is to make a connection to the vast number of developers who don’t know who we are or what we do.” That makes sense given growth over the last few years at SAP’s Community Network. But that’s very much a first step.

Database.com doesn’t let you get access to ‘The Database’ underpinning Salesforce.com. That would be a step into territory almost certain to break the multitenancy upon which the company has built so much of its business model. Instead, it allows access to a meta layer that leads you to think you are interacting with the database. The difference is subtle but important in that Salesforce.com will allow you to create tables with rows and columns - sort of - that are in fact objects which inherit stuff like Salesforce.com’s security and inherent search. Is that a database or not? More to the point is it a new database or simply a rehashing of something that’s 11 years old? Geeks will decide the answer to that question. So is it a big deal or what?
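To make the “meta layer” distinction concrete, here is a deliberately simplified, hypothetical sketch of logical tables defined as metadata over one shared physical store, with tenant isolation and an owner-based row-level security rule applied on every query. It does not describe how Salesforce.com actually implements Database.com; the class, the method names and the security rule are assumptions for illustration.

```python
class MetadataStore:
    """Hypothetical multitenant meta layer: logical tables over one shared physical store."""

    def __init__(self):
        self.schemas = {}  # (tenant, table) -> set of column names (the metadata)
        self.rows = []     # a single physical store shared by every tenant

    def create_table(self, tenant, table, columns):
        # "Creating a table" only records metadata; no physical table is created.
        self.schemas[(tenant, table)] = set(columns)

    def insert(self, tenant, table, owner, values):
        cols = self.schemas.get((tenant, table))
        if cols is None:
            raise KeyError(f"{table} is not defined for {tenant}")
        unknown = set(values) - cols
        if unknown:
            raise ValueError(f"unknown columns: {sorted(unknown)}")
        self.rows.append({"tenant": tenant, "table": table,
                          "owner": owner, "values": dict(values)})

    def select(self, tenant, table, user):
        # Every query is filtered by tenant (isolation) and by owner (row-level security).
        return [r["values"] for r in self.rows
                if r["tenant"] == tenant and r["table"] == table and r["owner"] == user]
```

Two tenants can each define an `Invoice` table with the same columns, yet a query only ever sees the rows belonging to the caller's tenant and, within that tenant, the rows the caller owns.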

I suggested to Mr Harris that from what I saw, Database.com will make an ideal proving ground for situational applications of the kind Phil describes but only in the context of Salesforce. By that I mean the kind of app that has a temporary use case and needs lashing up over days, doesn’t need to be bulletproof in the first instance and which can be easily embedded into Salesforce for testing and limited runtime use. He got that: “I’m sure that’s where we’re likely to see a lot of early adoption. Make a Facebook app, deploy, run but you know that can quickly become the place where people start to build out business critical apps.” That’s essentially Phil’s argument.

Salesforce.com has been careful to make sure that developers don’t see this as a way to build high-volume, transaction-based systems. Database.com won’t be capable of doing that. At least not for the next few iterations. Even so, the fact that Database.com is being touted as open (build in whatever you like, run on whatever device you like) doesn’t change the fact that this is another form of vendor lock-in on an as yet untested (and unavailable) database platform.

See also:

- Salesforce.com builds out its cloud stack, plots Appforce, Siteforce

- Salesforce revs up the cloud data tier era with Database.com

- Dreamforce: Salesforce-Oracle rivalry heats up with launch of Database.com

Later, I spoke with Anshu Sharma who is the operational brains behind the marketing of this initiative. Anshu is no slouch. I asked him about the community aspects of Database.com. He said: “We don’t think there should be any difference whether a person is asking a support style question, posting a query or offering a code sample. We should be able to use Chatter as a way of figuring out which is which and allowing our people access to the community to deal with whatever is occurring in the stream. We’ve got the scale to do that but we want to scale much more. Community should help us all.” An excellent point and one that is slightly differentiated from SAP’s approach but along similar lines.

So…is this a big deal? That’s hard to tell. A clue comes from Mr Harris’s last words with me: “Last year when we launched Chatter, plenty of people kinda looked at it and shrugged. Adoption has been phenomenal [I can attest to that] and it’s going in directions we didn’t think at that time. Who’s to say about Database.com? We use Dreamforce to throw out these ideas and then see what happens.”

That seemed a fitting point at which to end our conversation. And then what do you know? Salesforce goes and acquires Heroku. That gives it an instant leg up in the community stakes, with a claimed 1 million developers using the Ruby platform and 105,000 Heroku-built applications. Or put another way, if you’re not sure you can build a community, then why not buy into one, especially one that ticks all the buzzword-compliant marketing boxes with cool companies like Twitter, Groupon and 37 Signals? Add in the enterprise pull of companies like Best Buy as Heroku users and you can see where this goes. Larry Dignan certainly sees it:

The game plan here is pretty clear. Salesforce.com wants to ramp its platform-as-a-service efforts. Salesforce.com expects that it will combine Heroku with VMforce, an enterprise Java platform, to offer a broad platform.

Fast forward a year to Dreamforce 2011. Salesforce.com lines up developers who do a kind of Demo Jam where they showcase some of the stuff they’ve built and the direction they are taking Salesforce.com. If that happens then everyone else better watch out.

Despite my stated reservations, Salesforce.com has an uncanny knack of building extraordinary momentum behind what it does, orchestrated by Marc Benioff, the PT Barnum of the enterprise apps world. That just got ramped up a notch. Provided Salesforce.com finds the right way to set up valuable two-way communication with developers, preserves the Heroku community AND delivers what enterprise developers really want, I see no reason to think Database.com will be any different. And if you think that’s nutty, then check this: during a conversation about Mr Benioff, I said he’s on the cusp of becoming an adjective, as in “doing a Benioff.” Anyone disagree?

Dennis’s Salesforce and SAP: an obvious comparison post of 12/10/2010 is an equally interesting read.

Paul Krill claimed “The company lays out its platform-as-a-service plans in hopes of appealing to next-generation app developers” as a preface to his Salesforce.com looks to be a developer destination with Heroku Ruby buy article of 12/8/2010 for InfoWorld’s Cloud Computing blog:

Salesforce.com, with its addition of Heroku's Ruby cloud application platform, wants to position itself as a destination for next-generation application developers. But rival cloud vendor Engine Yard believes the Heroku/Salesforce.com pairing, while validating Ruby on the cloud, is the wrong way to go.

Salesforce.com's $212 million acquisition of Heroku was one of several announcements made this morning at the company's Dreamforce 2010 conference in San Francisco. The company also promoted its Cloud 2 concept involving social, mobile, and real-time computing and announced a partnership for IT service management with BMC. Salesforce.com also enunciated its vision of eight different Salesforce.com clouds, which includes the Database.com platform announced this week as well as Heroku.

"Heroku is really designed by developers for developers. It's a true development environment," Salesforce.com CEO Marc Benioff said. He also cited Ruby as "the true language of Cloud 2." Ruby on Rails is supported by Heroku as well. The acquisition, added Parker Harris, Salesforce.com executive vice president, "puts us solidly in the platform-as-a-service market."

Heroku powers more than 105,000 Web applications. Salesforce.com argues that its Heroku property, combined with its VMforce Java cloud, makes the company the "unparalleled" platform provider for Cloud 2 applications. But at Engine Yard, which also offers cloud services for Ruby on Rails applications, an official was dismissive of Salesforce's grand plans.

"No respectable developer wants to be on Salesforce.com. This could drive even more developers [to Engine Yard's platform]," said Tom Mornini, Engine Yard CTO and co-founder, in an email. "Ruby is the language for the cloud. If you are building apps, and you are building on the cloud, you have to build with Ruby," Mornini said. The deal validates that, he said. "However, both Salesforce.com and Heroku's multi-tenancy approach is the wrong way to go. We believe virtualization is a better value for any type of company that has applications."

The acquisition, said analyst Al Hilwa of IDC, is about Salesforce.com's desire to build a fuller platform. "Firms that provide a hosted platform for Ruby represented the kind of new application workloads that Salesforce.com hopes to attract to its own cloud offering. In theory, Heroku customers will be candidates for Database.com, and so the synergies for cross-selling are one of the main attractions," Hilwa said.

Heroku CEO Byron Sebastian argued that Ruby presents a more contemporary option for developers than Java. "I think there's a lot that we've learned over the last 10 years in terms of what's needed. Ruby sort of comes from that new generation of programming languages, frameworks and, frankly, of developers," Sebastian said.

Darren Cunningham posted Migrating On-Premise Databases To Database.com to Informatica’s Perspectives blog on 12/7/2010:

I noted in a 2006 blog post that, “in many organizations getting access to information locked away in the data warehouse is still considered to be ‘in demand’ not on demand.” Four years later, the terminology has changed, and adoption of cloud applications and platforms like Salesforce CRM and Force.com has skyrocketed, but for many developers the same challenge persists. Gaining access to corporate databases to build mobile, social or even analytical applications is next to impossible. And if access is granted, too much time and too many resources are required to implement, manage and maintain software and hardware infrastructure, instead of building innovative applications. That’s what’s so exciting about today’s Database.com announcement.

As Marc Benioff notes in this TechCrunch article: “We see cloud databases as a massive market opportunity that will power the shift to real-time enterprise applications that are natively cloud, mobile and social.”

Fantastic!

Now what if there were a way to easily migrate your current databases to this multitenant database in the sky? That’s where Informatica Cloud comes in. Today more than 1,000 companies, from large enterprises to SMBs, rely on Informatica Cloud to integrate over 10 billion records a month between cloud and on-premise applications. Our goal is to make it easy for organizations to migrate to Salesforce CRM and Force.com and then synchronize their cloud data with other systems. This ensures that Salesforce is integrated tightly with their business processes and ultimately ensures that organizations get the most value from all of their business applications and platforms.

Many companies rely on Informatica to deliver real-time integration between Salesforce and back office systems such as Oracle or SAP. Replicating cloud-based data with on-premise data warehouses is also a very common use case for Informatica Cloud. Today, we announced that we are extending Informatica Cloud to make it even easier to migrate any legacy database – the schema AND the data – to the cloud, with Database.com. And the best part is you can do this without setting up any new hardware or software – or even writing any code.

For those of you at Dreamforce 2010, Informatica will be demonstrating the ease with which data can be replicated automatically from on-premise Oracle and Microsoft SQL Server databases to Database.com with Informatica Cloud. On Wednesday, December 8 at 3:15pm in the Database.com First Look session (room West 2001), we will show the demo of this new cloud integration capability. We’ll also be running the demo today at Informatica’s booth #500 of the partner expo. Stay tuned for the recording for those of you not attending the conference.

As Juan Carlos Soto, senior vice president and general manager, Cloud and B2B, put it in Informatica’s supporting announcement for Database.com:

“With its self-service approach to cloud integration, Informatica Cloud provides a powerful and cost-effective means to integrate Database.com with enterprise applications and information using just a few clicks of a mouse.”

At Informatica, we’re excited about Database.com, and we want to make it as easy as possible for developers to take advantage of it.

Darren Cunningham is the VP of Marketing for Informatica Cloud.

Derrick Harris posted Benioff Delivers on Promise to Democratize Databases to GigaOm’s Structure blog on 12/7/2010:

Salesforce.com today gave the world true cloud data portability. Kind of. As its name suggests, Salesforce.com’s new Database.com offering is a cloud database, one that’s designed for enterprise, social and mobile applications. What the name doesn’t tell you is that users can tie applications written in almost any language and running atop almost any public cloud to Database.com. Yes, users are tied to Database.com to achieve this capability, but it’s another step toward true cloud choice.

Technologically, Database.com should be very familiar to anyone who has used Salesforce.com. As Gordon Evans, senior director of public relations, told me in an email, it’s the “same infrastructure that powers our Salesforce apps and Force.com platform, now available as a standalone service.” What’s new, however, is the “social data model” designed to handle things like feeds, status updates and user profiles, and development kits for everything from Java to iOS to Windows Azure. Furthermore, as Evans clarified, “Database.com can serve as the database for any cloud application connected to the Internet.”

But it’s the openness – or the choice, at least – that’s the real story here. If developers are willing to commit to Database.com as the data layer, they can have their choice as to what types of applications they run and atop which clouds they run them. It’s not the Promised Land of true interoperability for which openness advocates are calling, but early on, at least, we’ll have to live with proprietary innovation in cloud computing. That makes choice the best alternative to true openness.

Oh, and Database.com marks the fulfillment of Marc Benioff’s suggestion last month that he would democratize databases in the cloud. The suggestion seemed strange at the time considering how only Salesforce.com CRM customers and Force.com developers could access the company’s database infrastructure, but, clearly, Benioff had a plan in place. Part of democratization is a low price, and with free introductory pricing and $10-per-month increments for additional users and usage tiers, pricing shouldn’t prove too big a hindrance.

Where Database.com might suffer is for webscale applications requiring NoSQL tools to handle massive scale and mountains of unstructured data, but no product can be everything to everyone.


Just how Database.com “democratizes databases in the cloud” remains a mystery to me. Equating Database.com’s current user, storage, and transaction fees with “a low price” is ludicrous (see my Preliminary Comparison of Database.com and SQL Azure Features and Capabilities post of 12/10/2010).

Eric Knorr asserted “The new Database.com service unveiled by Salesforce aspires to be the database engine for cloud application developers” in a deck for his What Salesforce's Database.com really means article of 12/7/2010 for InfoWorld’s Cloud Computing blog: