Windows Azure and Cloud Computing Posts for 9/1/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as HTTP downloads at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Phil Ruppert attempted to explain Why SOAP Makes For Good Cloud Storage in this 9/1/2010 post:

Cloud hosting companies have several different protocols for sending and receiving information. SOAP and REST are two popular protocols. SOAP works well for cloud storage for a number of reasons.

First, what is SOAP?

SOAP started out as an acronym for Simple Object Access Protocol. The acronym was dropped, however, as SOAP began to expand its uses. Now it is simply referred to as SOAP without any underlying meaning to the letters. That doesn’t reduce its ability to provide cloud storage services, however.

SOAP is XML-based: its messages are XML documents. This makes it easy to use, versatile, flexible and scalable. Because XML has become widely used for many purposes, including data feed distribution, it is a powerful format for delivering data files to and from remote locations.
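To make the "XML-based" point concrete, here is a minimal C# sketch that wraps a request in a SOAP 1.1 envelope with System.Xml.Linq. The `GetBlob` operation and the `urn:example:storage` namespace are hypothetical placeholders; Windows Azure storage itself exposes a REST interface, not a SOAP one.

```csharp
using System;
using System.Xml.Linq;

public static class SoapSketch
{
    // SOAP 1.1 envelope namespace.
    static readonly XNamespace Soap = "http://schemas.xmlsoap.org/soap/envelope/";

    // Hypothetical service namespace, used only for this illustration.
    static readonly XNamespace Svc = "urn:example:storage";

    // Builds an envelope whose Body carries a single hypothetical GetBlob operation.
    public static XDocument BuildGetBlobRequest(string container, string blobName)
    {
        return new XDocument(
            new XElement(Soap + "Envelope",
                new XAttribute(XNamespace.Xmlns + "soap", Soap.NamespaceName),
                new XElement(Soap + "Body",
                    new XElement(Svc + "GetBlob",
                        new XElement(Svc + "Container", container),
                        new XElement(Svc + "BlobName", blobName)))));
    }
}
```

Calling `SoapSketch.BuildGetBlobRequest("photos", "cat.jpg").ToString()` yields the envelope text, which a client would POST over HTTP or HTTPS. The equivalent REST call needs no envelope at all: the container and blob name go in the URL.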

SOAP is compatible with both SMTP and HTTP for data transfer purposes. While the SOAP-HTTP combination has gained wider acceptability, the fact that SOAP can interact with SMTP gives it an edge. Also, SOAP is compatible with HTTPS, which makes it desirable for data storage for security purposes.

SOAP can easily pass through firewalls and proxies because it travels over protocols, such as HTTP, that those devices already allow.

SOAP is also compatible with Java.

In a word, SOAP is versatile and flexible, making it a powerful protocol for data transfer and data storage components in the cloud.

This appears to me to be a superficial argument. Most Azure storage users will undoubtedly stick with the default REST API format or use the .NET wrapper provided by the StorageClient library.

Eric Golpe advertised NEW Cloud Computing & Windows Azure Learning “Snacks” on 9/1/2010:

“What is Cloud Computing?” “How do I get started on the Azure platform?” “How can organizations benefit by using Dynamics CRM Online?” These are some of the questions answered by Microsoft’s new series of Cloud/Azure Learning Snacks. Time-strapped? You can learn something new in less than five minutes! Try a “snack” today or click here for more information: MS Learning - Windows Azure Training Catalog.

See Ellen Messmer’s Trend Micro brings encryption to the cloud, an 8/31/2010 NetworkWorld story posted by the San Francisco Chronicle’s SF Gate blog, in the Other Cloud Computing Platforms and Services section below.

Phil Ruppert explained Three Ways To Store Data On Windows Azure in an 8/30/2010 post:

Windows Azure is Microsoft’s cloud hosting platform. It’s flexible, versatile and scalable. One of the benefits to using Windows Azure is the ability to store data in three different ways.

- Blobs – The most basic way to store data on Windows Azure is in blobs. Blobs can be large or small. They consist of binary data and are grouped into larger units called containers. Blobs also underlie Windows Azure Drives (XDrives), which let an application mount a blob as an NTFS volume.

- Tables – Beyond blobs, you can store data in tables. These are not like tabulated data in HTML, and they are not SQL-based. Instead, you access table data through ADO.NET Data Services (a REST interface). Because table data is partitioned across many machines in Microsoft’s data centers, you can store billions of entities totaling terabytes of data.

- Queues – A queue lets Web roles communicate easily with Worker roles. For example, a user on your website may submit data through a form to request a computing task. The Web role writes the request into a queue, and a Worker role polling that queue picks it up and carries out the requested task.
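The Web role/Worker role hand-off in the Queues item can be modeled in-process. This is a sketch only: it uses a .NET ConcurrentQueue as a stand-in for a Windows Azure queue (it is not the StorageClient API), and the `WorkRequest` type and its "square a number" task are hypothetical.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical message: a compute task submitted through a web form.
public sealed class WorkRequest
{
    public string UserId { get; }
    public int Number { get; }
    public WorkRequest(string userId, int number) { UserId = userId; Number = number; }
}

// In-process stand-in for a Windows Azure queue (NOT the StorageClient API).
public sealed class WorkQueueModel
{
    private readonly ConcurrentQueue<WorkRequest> _queue = new ConcurrentQueue<WorkRequest>();

    // "Web role": accept the form post, enqueue it, and return without doing the work.
    public void Submit(WorkRequest request) => _queue.Enqueue(request);

    // "Worker role": drain pending requests and compute a result for each one.
    public List<string> ProcessPending()
    {
        var results = new List<string>();
        while (_queue.TryDequeue(out WorkRequest request))
        {
            long square = (long)request.Number * request.Number;
            results.Add($"{request.UserId}: {request.Number}^2 = {square}");
        }
        return results;
    }
}
```

The point of the pattern is decoupling: the Web role stays responsive no matter how long the work takes. With a real Windows Azure queue, a worker must also explicitly delete each message after processing it; a message that isn't deleted becomes visible again after a timeout, so processing should be safe to repeat.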

With Windows Azure, when your data is stored it is replicated three times, so a single disk or server failure doesn’t lose it. You can also keep a backup copy of your data in another data center in another part of the world so that if something is lost it can easily be retrieved.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Jayaram Krishnaswamy asserted Who said you cannot have design view of an SQL Azure table? in this 9/1/2010 post:

It is true that if you are connecting to SQL Azure from SSMS, you only have recourse to T-SQL for the most part (with Delete, however, you do get a user interface). Well, this has been one of the sore points, as some believe that a GUI is more productive than T-SQL. T-SQL and SSMS are really the two sides of a doppelgänger, and you know who the evil one is.

But Project 'Houston' has changed that for good. It has a lovely (if I may so call it) user interface blessed with Silverlight. You even get animation effects. It is a web-based database management tool and a nice offspring of the Windows Azure platform. Although new for Microsoft, web-based database management tools have been in vogue for quite some time.

Houston is presently hosted on the SQL Azure developer portal. Why wait? Sign up for a Windows Live ID, follow it up with a Windows Azure account, and you are all set and ready to roll. Roll baby roll!

Here is a screen shot of something you always wanted to do with SQL Azure. See all the (gory) details of your table in design view and make changes to it and save.

I am sure that if Microsoft adds a dash of CSS and a bit more Silverlight shine, you could have syntax highlighting and IntelliSense in no time at all. Right now the syntax is all monochrome.

Chris Downs posted Follow-up paper from FMS, Inc. on a popular topic: SQL Azure and Access on 8/1/2010:

Two recent papers written by Luke Chung of FMS, Inc. about SQL Azure and Access have created a lot of interest on his blog, as well as a few linked forum discussions.

In response, Luke has written a follow-up paper about deploying an Access database once it’s linked to SQL Azure. He has also revised his original paper about linking to SQL Azure to clarify that you only need to install the SQL Server 2008 R2 Management Studio program (SSMS) and not SQL Server itself.

Thanks again, Luke!

David Ramel reported SQL Azure Gets New Features -- Users Want More! in an 8/31/2010 article for Visual Studio Magazine’s Data Driver column:

Microsoft last week updated its cloud-based SQL Azure service, but some users are still clamoring for additional features to bring it up to par with SQL Server.

Service Update 4 enables database copying, among other improvements. Several readers, however, immediately responded to the announcement by asking for more. One issue is lack of a road map of future enhancements planned for SQL Azure.

"It is great to see the SQL Azure team constantly improving the service," wrote a poster called Savstars. "I think what a lot of people would like to see a feature implementation road map from the SQL Azure teams' management. This should assist project managers figure out when would be the best time to release their projects to the SQL Azure platform, based on when the required features become available."

A reader named Niall agreed: "Yeah I have been asking for some sort of road map previously. It would really help so that we can plan on when or if we use SQL Azure for production." He also said Reporting Services was his big requirement, while another reader asked for "free text support" -- I'm thinking he might've meant "full-text search support" or "free tech support."

Other limitations of SQL Azure as compared to SQL Server are numerous, as Microsoft points out. It also lists a page full of general similarities and differences.

Michael K. Campbell earlier this year wrote that missing features such as Geography or Geometry data types, Typed XML and CLR functionality could serve as "showstoppers" for database developers considering moving to the Azure cloud.

The lack of BACKUP and RESTORE commands has long been a sore point, though, which the new copy capability addresses. Other enhancements in the new service update include more data centers for the management tool code-named Houston, which will improve performance. Also, a documentation page has been put together, though for now its topics cover only connecting to SQL Azure through various means such as ADO.NET, ASP.NET, Entity Framework and PHP.
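A minimal sketch of how the new copy capability is invoked, assuming the `CREATE DATABASE ... AS COPY OF ...` T-SQL syntax that the update introduced, executed while connected to the master database. The helper names are hypothetical; the helper only builds the statement text with bracket-quoted identifiers.

```csharp
using System;

public static class DatabaseCopySketch
{
    // Quotes an identifier the way T-SQL QUOTENAME does with brackets: wrap in [ ] and double any ].
    public static string QuoteName(string identifier)
    {
        if (string.IsNullOrEmpty(identifier))
            throw new ArgumentException("Identifier must be non-empty.", nameof(identifier));
        return "[" + identifier.Replace("]", "]]") + "]";
    }

    // Builds the database-copy statement; run it against master.
    // sourceServer is optional and only needed when copying from another logical server.
    public static string BuildCopyStatement(string newDatabase, string sourceDatabase, string sourceServer = null)
    {
        string source = sourceServer == null
            ? QuoteName(sourceDatabase)
            : QuoteName(sourceServer) + "." + QuoteName(sourceDatabase);
        return $"CREATE DATABASE {QuoteName(newDatabase)} AS COPY OF {source};";
    }
}
```

You would pass the resulting string to a SqlCommand on a master connection. The copy runs asynchronously, so you then poll `sys.dm_database_copies` (or the `state_desc` column of `sys.databases`) to see when it completes.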

What features would you like to see added next? Comment below or drop me a line.

I’ve been clamoring for Transparent Data Encryption (TDE) in SQL Azure since it replaced SQL Data Services (SDS), which replaced SQL Server Data Services (SSDS). I’m also looking for a TDE equivalent for Windows Azure tables and blobs.

No significant articles today.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Admin posted Simplified access control using Windows Azure AppFabric Labs on 8/31/2010:

Earlier this week, Zane Adam announced the availability of the New AppFabric Access Control service in LABS. The highlights for this release (and I quote):

- Expanded Identity provider support – allowing developers to build applications and services that accept both enterprise identities (through integration with Active Directory Federation Services 2.0), and a broad range of web identities (through support of Windows Live ID, Open ID, Google, Yahoo, Facebook identities) using a single code base.

- WS-Trust and WS-Federation protocol support – Interoperable WS-* support is vital to many of our enterprise customers.

- Full integration with Windows Identity Foundation (WIF) – developers can apply the familiar WIF identity programming model and tooling for cloud applications and services.

- A new management web portal - gives simple, complete control over all Access Control settings.

What will we be doing?

In essence, I’ll be “outsourcing” the access control part of my application to the ACS. When users come to the application, they will be asked to present certain “claims”, for example a claim that states the user’s role. Of course, the application will only trust claims that have been signed by a trusted party, which in this case is the ACS.

The fun thing is that my application only has to know about the ACS. As an administrator, I can then tell the ACS to trust claims provided by Windows Live ID or Google Accounts, and this is reflected in my application automatically: users will be able to authenticate through any identity provider I configure in the ACS, without my application having to know. This is very flexible, as I can tell the ACS to trust, for example, my company’s Active Directory and perhaps also the Active Directory of a customer who uses the application.

Prerequisites

Before you start, make sure you have the latest version of Windows Identity Foundation installed. This will make things simple, I promise! Other prerequisites, of course, are Visual Studio and an account on https://portal.appfabriclabs.com. Note that, since it’s still a “preview” version, this is free to use.

In the labs account, make a project and in that project make a service namespace. This is what you should be seeing (or at least: something similar):

Getting started: setting up the application side

Before starting, we will require a certificate for signing tokens and things like that. Let’s just start with making one so we don’t have to worry about that further down the road. Issue the following command in a Visual Studio command prompt:

```
MakeCert.exe -r -pe -n "CN=<your service namespace>.accesscontrol.appfabriclabs.com" -sky exchange -ss my
```

This will create a certificate that is valid for your ACS service namespace and install it in the local certificate store on your computer. Make sure to export both the public key (.cer) and the private key (.pfx).
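If MakeCert isn't at hand, a roughly equivalent self-signed certificate can be produced in code. This is a sketch assuming .NET Framework 4.7.2 or later (or .NET Core 2.0+), whose CertificateRequest class postdates this post; the namespace and password are placeholders. It writes nothing to disk or to the certificate store; it only returns the .cer and .pfx bytes, which you could save with File.WriteAllBytes.

```csharp
using System;
using System.Security.Cryptography;
using System.Security.Cryptography.X509Certificates;

public static class CertSketch
{
    // Creates a self-signed RSA certificate for the given ACS service namespace and
    // returns its public (.cer) and password-protected private (.pfx) exports.
    public static (byte[] Cer, byte[] Pfx) CreateAndExport(string serviceNamespace, string pfxPassword)
    {
        string subject = $"CN={serviceNamespace}.accesscontrol.appfabriclabs.com";
        using (RSA rsa = RSA.Create(2048))
        {
            var request = new CertificateRequest(subject, rsa, HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1);

            // Allow signing and key encipherment (similar in spirit to MakeCert's -sky exchange).
            request.CertificateExtensions.Add(new X509KeyUsageExtension(
                X509KeyUsageFlags.DigitalSignature | X509KeyUsageFlags.KeyEncipherment, critical: false));

            // Backdate NotBefore so a slightly fast local clock doesn't produce a "not yet valid" cert.
            DateTimeOffset notBefore = DateTimeOffset.UtcNow.AddMinutes(-10);
            using (X509Certificate2 cert = request.CreateSelfSigned(notBefore, notBefore.AddYears(1)))
            {
                byte[] cer = cert.Export(X509ContentType.Cert);             // public key only
                byte[] pfx = cert.Export(X509ContentType.Pfx, pfxPassword); // includes the private key
                return (cer, pfx);
            }
        }
    }
}
```

The .cer file is what you upload where only the public key is needed; the .pfx (private key included) is the one that must be protected.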

That being said and done: let’s add claims-based authentication to a new ASP.NET Website. Simply fire up Visual Studio, make a new ASP.NET application. I called it “MyExternalApp” but in fact the name is all up to you. Next, edit the Default.aspx page and paste in the following code:

```aspx
<%@ Page Title="Home Page" Language="C#" MasterPageFile="~/Site.master" AutoEventWireup="true"
    CodeBehind="Default.aspx.cs" Inherits="MyExternalApp._Default" %>

<asp:Content ID="HeaderContent" runat="server" ContentPlaceHolderID="HeadContent">
</asp:Content>
<asp:Content ID="BodyContent" runat="server" ContentPlaceHolderID="MainContent">
    <p>Your claims:</p>
    <%-- One row per claim: the claim type and its value --%>
    <asp:GridView ID="gridView" runat="server" AutoGenerateColumns="false">
        <Columns>
            <asp:BoundField DataField="ClaimType" HeaderText="ClaimType" ReadOnly="true" />
            <asp:BoundField DataField="Value" HeaderText="Value" ReadOnly="true" />
        </Columns>
    </asp:GridView>
</asp:Content>
```

Next, edit Default.aspx.cs and add the following Page_Load event handler:

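```csharp
// At the top of Default.aspx.cs:
using System;
using System.Linq;
using System.Threading;
using Microsoft.IdentityModel.Claims;   // Microsoft.IdentityModel.dll (WIF)

// Inside the _Default page class:
protected void Page_Load(object sender, EventArgs e)
{
    // After WIF processes the sign-in, the current principal is an IClaimsPrincipal.
    IClaimsPrincipal principal = Thread.CurrentPrincipal as IClaimsPrincipal;
    IClaimsIdentity claimsIdentity =
        principal == null ? null : principal.Identities.FirstOrDefault();

    if (claimsIdentity != null)
    {
        // Bind the claims issued by ACS to the GridView declared in Default.aspx.
        gridView.DataSource = claimsIdentity.Claims;
        gridView.DataBind();
    }
}
```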

So far, so good. Once everything is configured and running, Default.aspx will simply show the claims we received from ACS. To configure the application to use ACS, there are two steps left to do:

- Add a reference to Microsoft.IdentityModel (located somewhere at C:\Program Files\Reference Assemblies\Microsoft\Windows Identity Foundation\v3.5\Microsoft.IdentityModel.dll)

- Add an STS reference…

That first step should be simple: add a reference to Microsoft.IdentityModel in your ASP.NET application. The second step is nearly equally simple: right-click the project and select “Add STS reference…”, like so:

A wizard will pop up. Here’s a secret: this wizard will do a lot for us! On the first screen, enter the full URL of your application. I have mine hosted on IIS with SSL enabled, hence the following screenshot:

In the next step, enter the URL to the STS federation metadata. To the what where? Well, to the metadata provided by ACS. This metadata contains the types of claims offered, the certificates used for signing, … The URL to enter will be something like https://<your service namespace>.accesscontrol.appfabriclabs.com:443/FederationMetadata/2007-06/FederationMetadata.xml:

In the next step, select “Disable security chain validation”. Because we are using self-signed certificates, enabling chain validation would fail: it requires certificates issued by a trusted certificate authority.

From now on, it’s just “Next”, “Next”, “Finish”. If you now look at your Web.config file, you’ll see that the wizard has configured the application to use ACS as the federated authentication provider. Furthermore, a new folder called “FederationMetadata” has been created, which contains an XML file that specifies which claims this application requires, along with some other details about the application that you don’t need to worry about at this point.

Our application has now been configured: off to the ACS side!

Getting started: setting up the ACS side

First of all, we need to register our application with the Windows Azure AppFabric ACS. This can be done by clicking “Manage” on the management portal at https://portal.appfabriclabs.com. Next, click “Relying Party Applications” and then “Add Relying Party Application”. The following screen will be presented:

Fill out the form as follows:

- Name: a descriptive name for your application.

- Realm: the URI that the issued token will be valid for. This can be a complete domain (e.g. www.example.com) or the full path to your application. For now, enter the full URL of your application, which will be something like https://localhost/MyApp.

- Return URL: where to return after successful sign-in

- Token format: we’ll be using the defaults in WIF, so go for SAML 2.0.

- For the token encryption certificate, select X.509 certificate and upload the certificate file (.cer) we’ve been using before

- Rule groups: pick one; it’s best to create a new one specific to the application we are registering

Afterwards click “Save”. Your application is now registered with ACS.

The next step is to select the Identity Providers we want to use. I selected Windows Live ID and Google Accounts as shown in the next screenshot:

One thing left: since we are using Windows Identity Foundation, we have to upload a token signing certificate to the portal. Export the previously created certificate including its private key (.pfx) and upload it to the “Certificates and Keys” part of the management portal. Make sure to specify that the certificate is to be used for token signing.

Alright, we’re nearly done. Well, in fact, we are done! An optional next step would be to edit the rule group we created before. This rule group describes the claims that will be presented to the application asking for the user’s claims. This is very powerful, because it also supports so-called claims transformations: if an identity provider provides ACS with a claim that says “the user is part of a group named Administrators”, the rules can transform it into a new claim stating “the user has administrative rights”.
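The transformation idea can be sketched in a few lines. This is a simplified model, not the ACS or WIF object model: `SimpleClaim`, `TransformationRule` and `RuleGroupSketch` are hypothetical types, and claim types are shortened to plain strings such as "group" and "role".

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical claim type for illustration (not Microsoft.IdentityModel.Claims.Claim).
public sealed class SimpleClaim
{
    public string Type { get; }
    public string Value { get; }
    public SimpleClaim(string type, string value) { Type = type; Value = value; }
}

// A rule: when an incoming claim matches Input (type and value), emit Output.
public sealed class TransformationRule
{
    public SimpleClaim Input { get; }
    public SimpleClaim Output { get; }
    public TransformationRule(SimpleClaim input, SimpleClaim output) { Input = input; Output = output; }
}

public static class RuleGroupSketch
{
    // Applies every matching rule. In this sketch, incoming claims with no matching
    // rule are dropped; a pass-through rule would be needed to forward them unchanged.
    public static List<SimpleClaim> Apply(IEnumerable<SimpleClaim> incoming, IEnumerable<TransformationRule> rules)
    {
        List<TransformationRule> ruleList = rules.ToList();
        return incoming
            .SelectMany(c => ruleList
                .Where(r => r.Input.Type == c.Type && r.Input.Value == c.Value)
                .Select(r => r.Output))
            .ToList();
    }
}
```

For example, a rule mapping ("group", "Administrators") to ("role", "Admin") turns the identity provider's group membership into the application-level right the application actually checks.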

Testing our setup

With all this information and configuration in place, press F5 inside Visual Studio and behold… Your application now redirects to the STS in the form of ACS’ login page.
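Under the hood, this redirect is a WS-Federation passive sign-in request. The sketch below builds such a URL by hand to show its shape; WIF generates it for you from the issuer in Web.config. The `wa`, `wtrealm` and `wctx` parameters are standard WS-Federation; the `/v2/wsfederation` path of the ACS labs endpoint is an assumption here, and `WsFedSketch` is a hypothetical helper.

```csharp
using System;

public static class WsFedSketch
{
    // Builds a WS-Federation passive sign-in URL like the one WIF redirects to.
    // The "/v2/wsfederation" endpoint path is an assumption about the ACS labs issuer.
    public static string BuildSignInUrl(string serviceNamespace, string realm, string context = null)
    {
        string issuer = $"https://{serviceNamespace}.accesscontrol.appfabriclabs.com/v2/wsfederation";
        string url = issuer
            + "?wa=wsignin1.0"                                // WS-Federation sign-in action
            + "&wtrealm=" + Uri.EscapeDataString(realm);      // relying party realm registered in ACS
        if (!string.IsNullOrEmpty(context))
            url += "&wctx=" + Uri.EscapeDataString(context);  // opaque state echoed back after sign-in
        return url;
    }
}
```

After sign-in, ACS posts the token back to the return URL in a form field named `wresult`, which is exactly the field that ASP.NET request validation trips over in the next step.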

So far, so good. Now sign in using one of the identity providers listed. After a successful sign-in, you will be redirected back to ACS, which will in turn redirect you back to your application. And then: failure.

ASP.NET request validation kicked in because it detected potentially dangerous content: the XML security token that ACS posts back in a form field. Let’s fix that. There are two possible approaches:

- Disable request validation, but I’d prefer not to do that

- Make a custom RequestValidator

Let’s go with the latter option… Here’s a class that you can copy-paste in your application:

```csharp
using System;
using System.Web;
using System.Web.Util;
using Microsoft.IdentityModel.Protocols.WSFederation;   // WIF

namespace MyExternalApp.Modules
{
    // Lets the WS-Federation sign-in response (the XML token posted in the "wresult"
    // form field) through ASP.NET request validation; everything else is validated normally.
    public class WifRequestValidator : RequestValidator
    {
        protected override bool IsValidRequestString(HttpContext context, string value,
            RequestValidationSource requestValidationSource, string collectionKey,
            out int validationFailureIndex)
        {
            validationFailureIndex = 0;

            if (requestValidationSource == RequestValidationSource.Form &&
                string.Equals(collectionKey, WSFederationConstants.Parameters.Result, StringComparison.Ordinal))
            {
                // Accept the field only if it parses as a WS-Federation sign-in response.
                SignInResponseMessage message =
                    WSFederationMessage.CreateFromFormPost(context.Request) as SignInResponseMessage;

                if (message != null)
                {
                    return true;
                }
            }

            return base.IsValidRequestString(context, value, requestValidationSource,
                collectionKey, out validationFailureIndex);
        }
    }
}
```

Basically, it lets the request through ASP.NET request validation (by returning true) when the posted form field contains a WS-Federation sign-in response message (SignInResponseMessage); everything else is validated as usual. One thing left to do: register this validator with ASP.NET. Add the following line to the <system.web> section of Web.config:

```xml
<!-- Inside <system.web>; the requestValidationType attribute requires ASP.NET 4.0 -->
<httpRuntime requestValidationType="MyExternalApp.Modules.WifRequestValidator" />
```

If you now load the application again, you should see the claims provided by ACS:

There, that’s it. We have now successfully delegated access control to ACS. Obviously, the next step would be to specify which claims are required for specific actions in your application, provide the necessary claims transformations in ACS, … All of that is easy to find with Bing or Google.

Conclusion

To be honest: I’ve always found claims-based authentication and Windows Azure AppFabric Access Control a good match in theory, but an ugly and cumbersome beast to work with. With this labs release, things get interesting and nearly self-explanatory, making it simpler to implement in your own application. As an extra bonus to this blog post, I also chose to link my ADFS server to ACS: it took me literally 5 minutes to do so and works like a charm!

Final conclusion: AppFabric team, please ship this soon. I really like the way this labs release works, and I reckon many users who find the step up to using ACS too big today may well take that step if they can use ACS in the simple manner the labs release provides.

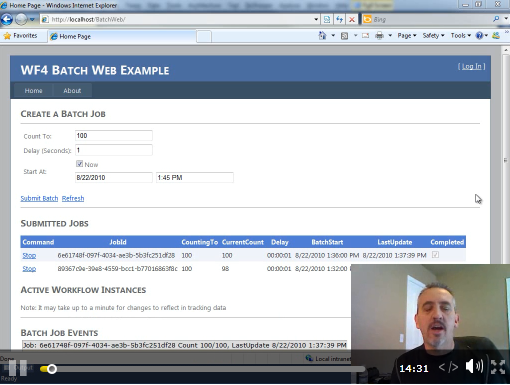

Ron Jacobs (@ronljacobs) embedded a 00:14:31 endpoint.tv - Workflow Services as a Batch Job video segment in this late 9/1/2010 post:

Sometimes you have work that you want to schedule for off-peak times or have happen on a recurring schedule, such as every 3 hours. While there are many ways to do this, Workflow Services are an interesting option. In this episode, I'll show you how you can create a service that accepts start, stop, and query messages, and supports scheduling.

WF4 Batch Job Example (MSDN Code Gallery)
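For comparison with the Workflow Services approach, the start/stop/query contract with a recurring schedule can be sketched as a plain C# class. This is not WF4 code and not the MSDN sample; `BatchJobSchedule` is hypothetical. It only answers "when is the next run due?" for a fixed interval such as every 3 hours, and the current time is passed in so the logic is easy to test.

```csharp
using System;

// Hypothetical schedule object answering Start, Stop and Query "messages".
public sealed class BatchJobSchedule
{
    private readonly TimeSpan _interval;
    private DateTime? _startedUtc;   // null while the job is stopped

    public BatchJobSchedule(TimeSpan interval)
    {
        if (interval <= TimeSpan.Zero) throw new ArgumentOutOfRangeException(nameof(interval));
        _interval = interval;
    }

    public void Start(DateTime nowUtc) => _startedUtc = nowUtc;

    public void Stop() => _startedUtc = null;

    // Returns null when stopped; otherwise the next run time strictly after nowUtc.
    // Runs are due at start + k * interval for k = 1, 2, 3, ...
    public DateTime? QueryNextRun(DateTime nowUtc)
    {
        if (_startedUtc == null) return null;
        DateTime start = _startedUtc.Value;
        long elapsedTicks = Math.Max(0L, (nowUtc - start).Ticks);
        long k = elapsedTicks / _interval.Ticks + 1;   // whole intervals elapsed, plus one
        return start + TimeSpan.FromTicks(_interval.Ticks * k);
    }
}
```

A durable workflow adds what this class lacks: the schedule survives process restarts, and the start, stop and query operations arrive as service messages rather than method calls.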

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

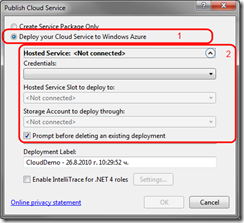

Anton Staykov explained How to publish your Windows Azure application right from Visual Studio 2010 in this 8/31/2010 tutorial:

Windows Azure is an emerging technology that will gain a bigger share of our lives as software developers or IT pros. With earlier releases of the Windows Azure Tools for Visual Studio, deploying an application to the Azure environment was an almost painful process. The standard Publish process created an Azure package and opened the Windows Azure portal so that we could publish the package manually. This option still exists in Visual Studio 2010, and is the only option in Visual Studio 2008. However, there is a new, slick option that lets us publish / deploy our Azure package right from within Visual Studio. This post is about that particular option.

Before we begin, let’s make sure we have installed the most recent version of Windows Azure Tools for Visual Studio.

For the purpose of the demo I will create a very simple CloudDemo application. Just select “File” –> “New” –> “Project”, and then choose “Cloud” from “Installed Templates”. The only available template is “Windows Azure Cloud Service C#”:

A new window will pop up: a wizard for the initial configuration of our service’s roles. Just add one ASP.NET Web Role:

Assuming this is the cloud project we want to deploy, let’s first go through the Windows Azure Web Role deployment checklist before we continue (a common mistake is forgetting to configure the DiagnosticsConnectionString setting of our WebRole).

Now it is time to publish our Windows Azure service with that single ASP.NET WebRole. There is some initial configuration that must be performed once. After that, every time we publish a new version, it will be just a single click away!
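The DiagnosticsConnectionString item lends itself to an automated pre-publish check. This is a hypothetical helper, not part of the Windows Azure Tools; it assumes the 2010-era ServiceConfiguration.cscfg schema namespace shown in the code and flags roles whose setting is missing or still points at local development storage (`UseDevelopmentStorage=true`), which doesn't work once deployed.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

public static class DeploymentChecklistSketch
{
    // Namespace of ServiceConfiguration.cscfg files in the 2010-era Windows Azure SDK (assumed).
    static readonly XNamespace Cfg = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration";

    // Returns the names of roles whose DiagnosticsConnectionString is missing or
    // still points at local development storage.
    public static string[] FindUnconfiguredRoles(string cscfgXml)
    {
        XDocument doc = XDocument.Parse(cscfgXml);
        return doc.Root.Elements(Cfg + "Role")
            .Where(role =>
            {
                XElement setting = role.Descendants(Cfg + "Setting")
                    .FirstOrDefault(s => (string)s.Attribute("name") == "DiagnosticsConnectionString");
                string value = (string)setting?.Attribute("value");
                return string.IsNullOrWhiteSpace(value)
                    || value.IndexOf("UseDevelopmentStorage=true", StringComparison.OrdinalIgnoreCase) >= 0;
            })
            .Select(role => (string)role.Attribute("name"))
            .ToArray();
    }
}
```

Before publishing, the value should be a real storage account connection string of the form `DefaultEndpointsProtocol=https;AccountName=...;AccountKey=...`.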

Right click on the Windows Azure Service project from our solution and choose “Publish” from the context menu:

This will pop up a new window that helps us publish our project:

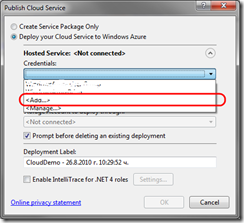

There are two options to choose from: Create Service Package Only and Deploy your Cloud Service to Windows Azure. We are interested in the second one, Deploy your Cloud Service to Windows Azure. Now we have to configure our credentials for deploying to Windows Azure. The deployment process uses the Windows Azure Service Management API, which works with client certificate authentication, and there is a neat option for generating client certificates for use with Windows Azure. In the Publish Cloud Service window, which is still open, open the drop-down list right below “Credentials” and choose “Add …”:
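The wizard hides the HTTP details, but a sketch of a certificate-authenticated management call shows what the credentials are for. This assumes the List Hosted Services operation and an `x-ms-version` value of 2010-04-01; `ServiceManagementSketch` is hypothetical, and the subscription ID and certificate are the ones obtained in the portal steps. The method only prepares the request; calling `GetResponse()` on it would send it.

```csharp
using System;
using System.Net;
using System.Security.Cryptography.X509Certificates;

public static class ServiceManagementSketch
{
    // Prepares (but does not send) a "List Hosted Services" request to the
    // Windows Azure Service Management API, authenticated with a client certificate.
    public static HttpWebRequest CreateListHostedServicesRequest(string subscriptionId, X509Certificate2 managementCert)
    {
        var uri = new Uri($"https://management.core.windows.net/{subscriptionId}/services/hostedservices");
        var request = (HttpWebRequest)WebRequest.Create(uri);
        request.Method = "GET";
        request.Headers.Add("x-ms-version", "2010-04-01");   // API version header (assumed value)
        if (managementCert != null)
            request.ClientCertificates.Add(managementCert);   // the certificate uploaded to the portal
        return request;
    }
}
```

This is why the certificate must be uploaded before publishing works: the service authenticates the caller solely by the client certificate presented on the TLS connection.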

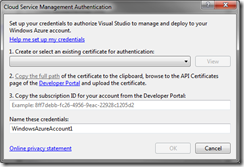

Another window “Cloud Service Management Authentication” will open:

Within this window we will have to Create a certificate for authentication. Open the drop down and choose “<Create…>”:

This option will automatically create a certificate for us (we only have to name it). Once the certificate is created, we select it from the drop-down menu and proceed to step (2) of the wizard, which is uploading our certificate to the Windows Azure Portal. The wizard makes this easy by copying the certificate to a temp folder. Clicking the “Copy the full path” link copies that full path to our clipboard:

Now we have to log in to the Windows Azure portal (http://windows.azure.com/) (but don’t close any Visual Studio 2010 window, as we will be coming back to it) and upload the certificate to the appropriate project. First we must select the project to which we will assign the certificate:

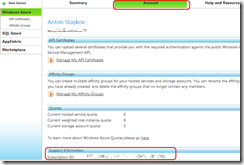

Then we click on the “Account” tab and navigate to the “Manage my API certificates” link:

Here, we simply click browse and just paste the copied path to the certificate, then click Upload:

Please note that there is a small chance of encountering a “The certificate is not yet valid” error during the upload. If that happens, wait a minute or two and try uploading again. The reason for this error is that your computer’s clock might not be as accurate and synchronized as the Windows Azure servers’ clocks. If your clock is a minute or more ahead of actual time, your generated certificate is valid from a point in time that has not yet occurred on the Windows Azure servers. When the upload succeeds, you will see the certificate in the list of installed certificates:
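The clock-skew explanation reduces to a simple comparison. In this hypothetical helper, the server time is an input; one assumed way to obtain it is the `Date` header of any HTTP response from the portal. The result tells you how long to wait before retrying the upload.

```csharp
using System;
using System.Security.Cryptography.X509Certificates;

public static class ClockSkewSketch
{
    // How long until a certificate becomes valid according to a reference (server) clock.
    // Returns TimeSpan.Zero if it is already valid.
    public static TimeSpan TimeUntilValid(DateTime notBeforeUtc, DateTime serverNowUtc)
    {
        TimeSpan wait = notBeforeUtc - serverNowUtc;
        return wait > TimeSpan.Zero ? wait : TimeSpan.Zero;
    }

    // Convenience overload; X509Certificate2.NotBefore is expressed in local time.
    public static TimeSpan TimeUntilValid(X509Certificate2 cert, DateTime serverNowUtc)
        => TimeUntilValid(cert.NotBefore.ToUniversalTime(), serverNowUtc);
}
```

If the local clock runs two minutes fast, the certificate's NotBefore lies two minutes in the server's future, and the helper returns that two-minute wait.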

After you upload the certificate successfully to the Windows Azure server, you have to go back to the “Account” tab and copy the Subscription ID to your clipboard:

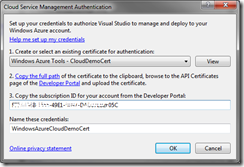

Going back to Visual Studio’s “Cloud Service Management Authentication” window, you have to paste your subscription ID onto the field for it:

As the last step of configuring our account, we have to give it a meaningful name, so that when we see it in the drop-down list of installed credentials, we know which service the account is for. For this project I chose the name “WindowsAzureCloudDemoCert”. When we are ready and hit the OK button, we go back to the “Publish Cloud Service” window and select “WindowsAzureCloudDemoCert” from the Credentials drop-down. An authentication attempt will be made to the Azure service to validate the credentials. If everything is fine, we will see details for our account, such as the account name, slots for deployment (production & staging), and storage accounts associated with that service account:

When you hit OK, the publish process will start. A successful publish takes about 10 minutes to finish. A friendly “Windows Azure Activity Log” window within Visual Studio shows the process steps and history:

Well, as I said, there is an initial process of configuring credentials. Once you have set everything up correctly, publishing will be just a matter of choosing the credentials and the Hosted Service slot for deployment (production or staging).

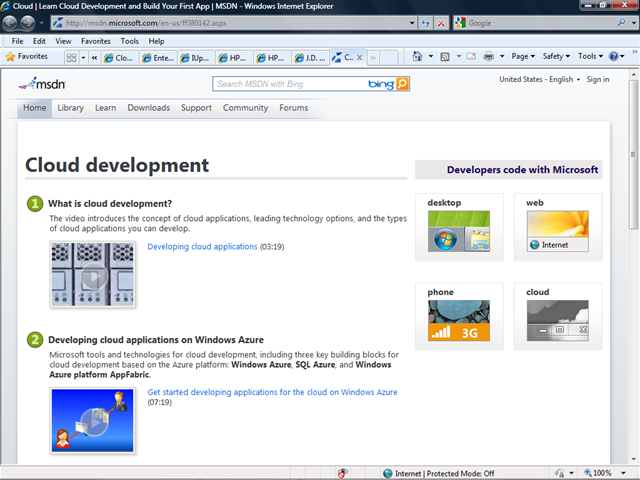

J. D. Meier explained his role in The Design of the [New] MSDN Hub Pages in an 8/31/2010 post. Here’s the new MSDN Cloud hub page:

This is a behind the scenes look at my involvement in the creation of the MSDN Hub pages.

You get to the MSDN Hub pages from the main “buttons” on the MSDN home page (pictured above). Specifically, these are the actual pages:

The intent of the MSDN Hub pages is to create some simple starting points for some of our stories on the Microsoft developer platform. For example, you might want to learn the Microsoft cloud story, but you might not know the “building blocks” that make up the story (Windows Azure, SQL Azure, and Windows Azure platform AppFabric). A Hub page would be a way to share a simple overview of the story, a way to get started with the technology, common application paths and roadmaps, and where to go for more (usually the specific Developer Centers that would be a drill down for a specific technology).

Why Was I Involved?

If you’re used to seeing me produce Microsoft Blue Books for patterns & practices, and focusing on architecture and design, security, and performance, it might seem odd that I was part of the team to create the MSDN Hub pages. Actually, it makes perfect sense, and here’s why -- They needed somebody who had looked across the platform and technology stacks and could help put the story together. Additionally:

- The purpose of the MSDN Hubs was to tell our platform story and put the platform Legos together in a meaningful way. This is a theme I’ve had lots of practice with over the years on each of my patterns & practices projects.

- I was already working on the Windows Developer Center and the Windows IA (Information Architecture), and the .NET IA, so I was part of the right v-teams and regularly interacting with the key people making this happen.

- I shipped our platform playbook for the Microsoft Platform – the patterns & practices Application Architecture Guide, second edition.

- I had put together a map of our Microsoft application platform story, as well as created maps, matrixes, and drill downs on our stories for key clusters of our Microsoft technologies including the presentation technology stack, the data access technology stack, the workflow technology stack, and the integration technology stack, etc.

- I had previously worked on specific projects to create a catalog to organize and share the patterns & practices catalog of assets. (Internally we called this the “the Catalog Project”.)

- I had worked on an extensive catalog of app types, which served as the backbone for some downstream patterns & practices projects while influencing others, including factories, early attempts at MSF “app templates”, our patterns & practices catalog (so we could enable browsing our catalog by application type), and then of course, the Microsoft Application Architecture Guide.

- I teamed across product teams, support, field, industry experts, and customers to create a canonical set of app types for the App Arch Guide. Here’s what Grady Booch, IBM tech fellow, had to say about the App Types work -- “an interesting language for describing a large class of applications.” Naturally, this work fed into the MSDN Hub pages since we need to map out the most common application patterns, paths, and combos.

My Approach

My approach was pretty simple. I worked closely with a variety of team members including Kerby Kuykendall, Howard Wooten, Chris Dahl, John Boylan, Cyra Richardson, Pete M Brown, and Tim Teebken. I started off working mostly with Kerby, but eventually I ended up working closest with Tim because he became my main point of contact for influencing and shaping the work. That said, it was still a lot of mock ups, ad-hoc meetings, whiteboard discussions, and group meetings to shape the overall result. Tim did a stellar job of integrating my feedback and recommendations, as well as sanity checking group decisions with me. I also sanity checked things with customers, and I worked closely with folks on the Microsoft Developer Platform Evangelism team including Tim Sneath and Jaime Rodriguez. They were passionate about having a way to tell our platform story, show common app paths, show how to put our Legos together, and make technology choices. I tried to surface this in the design and information model for the Hub pages.

The Hubs

For the Hubs, at one of our early meetings in November of 2009, I recommended we use “deployment targets” as a way to help slice things up and keep it simple. Specifically:

- Cloud

- Desktop

- Games

- Mobile

- Server

- Web

As you can see, it maps very well to the App Types set I created circa 2004, but I evolved it to account for a few things. First, I included learnings from working on the App Arch Guide (such as moving away from “Rich Client” to just “Desktop”). Second, I tried to pin it more directly to physical deployment targets to keep it simple. As a developer, you can write apps to target the Web (a “Web” browser app), a desktop (such as a Windows client, or Silverlight, or WPF, etc.), a game (game console), etc. Third, I aligned with marketing efforts, such as recommending we use the “deployment target” metaphor, and I renamed the “Mobile” bucket to “Phone” (which worked, because it extended the “deployment target” metaphor, was still easy to follow, and kept things simple).

I also kept the physical aspect of the “deployment target” metaphor loose. For example, a “Web” app could run on a server or on a “desktop.” Instead, I wanted to bubble up interesting intersections of application types plus common deployment targets, and keep it simple.

The Server Hub

For the server hub, I recommended addressing our story from a few lenses. First, we have server-side products that can be extended, such as SharePoint, Exchange, SQL Server, etc. That lens is pretty straightforward. Second, I recommended focusing on “Service.” Here’s where it’s hard for folks to follow if they aren’t familiar with server-side development. While you can lump “service” under “Cloud” (as a cloud developer, I can write a Web app, a service, etc.), the “service” story is a very special one. It’s the evolution of our “middleware developers” and our “server-side developers.” It’s the path that the COM builders and server-side component builders shifted to … a more message-based architecture over an object-based one, as well as a shift to replacing DCOM with HTTP. So if we had a Server Hub, it realistically should address building on our server-side products/technologies (SharePoint, Exchange, SQL Server, AppFabric, etc.) and it should address “Services.” Sure, you could also lump SharePoint under Web or Services under Cloud, but you can also bubble up and give focus to some of the fundamental parts of our Microsoft application developer platform.

To be fair, a lot of folks moved around during the MSDN Hub page project, and as new folks came on board, the history, insights, and some of the work may have gotten lost.

How To Solve the Issue of Too Many Hubs

This was my suggestion for dealing with too many Hubs: “I think one thing that helps to keep in mind is that different people will want different views – but I think it’s simpler to choose the most useful one across the broadest set of scenarios. That’s why Burger King and McDonald’s have a quick simple visual menu of the most common options … then you can drill in for more with their detailed menu if needed. I like that metaphor because it addresses “simple” + “complete.” Platform is a pretty solid bet – with an orientation around “tribes” (I’ll walk you through when we sync live) – after all, we do competitive assessments against platforms and that’s where we need to win.”

I also made a few additional recommendations to deal with the problem of “simple” + “complete”:

- Add an “Office/SharePoint”, and a “Server” (SQL Server, Windows Server, Exchange) – the Office/SharePoint platform tends to have a tribe of customers that speak the same language and share the same context … different than your everyday .NET dev. It’s like BizTalk in that it’s a specialized space.

- Use a carousel approach to feature the main 4, then a “view more…” pattern to show the full 6 or so top-level items – and leave breathing room. I would go to a page that shows the full set at the top, but then shows the full set of products against a durable backdrop. This would address the “AND” solution of both “Simple” and “Complete.”

This would provide a “durable” + “expandable” … AND… “simple” + “complete” … and in the end, a “platform guidance” approach.

While I’m not a graphic artist, I had done some mockups to help illustrate the point …

J. D. makes interesting points about site navigation, whether delivered in the cloud or from on premises servers.

Mostafa Elzoghbi (@mostafaelzoghbi) described Troubleshooting Cloud Service Deployment in Azure in this 8/26/2010 post:

I spent the last few days deploying a Sitefinity CMS website to the cloud on Windows Azure, and I hit several problems and stopping points while trying to deploy my cloud service to Azure.

In this post I will share the main checkpoints to go through before you start deploying your application to the cloud. If you skip them, you may get runtime errors, be unable to start your service in the cloud, or hit other problems caused by missing files or by verification steps you didn't perform in your development fabric environment before deploying.

- Make sure that your web or worker role compiles with no errors on your development machine.

- Make sure that all custom DLLs you reference are copied to the output directory. To do this, right-click each of your custom or third-party DLLs, open the Properties window, and set Copy to Output Directory to Copy always (for assembly references, set Copy Local to True).

- Run your service and make sure that you are not getting any runtime errors in the local development fabric before you start deploying.

- If you published your service and it keeps stopping when you try to start it, there's a problem with your service package file.

- If you are having any problem starting your cloud service, use IntelliTrace in Visual Studio 2010 Ultimate edition (see http://blogs.msdn.com/b/jnak/archive/2010/05/27/using-intellitrace-to-debug-windows-azure-cloud-services.aspx).

- If you face any problem, you can submit a support ticket from the Azure web portal. Tip: include your subscription ID and deployment ID in the ticket to get a fast, reasonable resolution with analysis.

- If you are using VS2010, use Server Explorer to browse your Azure account components.

- Using SQL Server 2008 R2, script the database (schema + data), and then connect to SQL Azure with SQL Server Management Studio to run the script.

- IMPORTANT: Before deploying your website, make sure to update web.config to point to the SQL Azure database. If you miss this step, the application may fail when you try to start it after deployment and keep moving to the stopped state because it is trying to connect to the database.

- Advice: If your application keeps moving to the stopped state, submit a ticket to the support team; they can check your VM event log and guide you to a fix, since there are a lot of parameters to look at when you deploy.
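The web.config step is the one most likely to be missed, so a quick pre-deployment check can help. The following is a minimal sketch, not part of Mostafa's post: it assumes a standard `<configuration><connectionStrings><add .../>` layout and treats any connection string whose host does not end in `.database.windows.net` as still pointing at a local database. The function name, the `myserver` server name, and the `Sitefinity` database name are placeholders.

```python
import xml.etree.ElementTree as ET
from typing import List

# Assumption: SQL Azure servers are addressed as <server>.database.windows.net
AZURE_HOST_SUFFIX = ".database.windows.net"


def non_azure_connection_strings(web_config_xml: str) -> List[str]:
    """Return the names of connection strings that do not target SQL Azure."""
    root = ET.fromstring(web_config_xml)  # root element is <configuration>
    flagged: List[str] = []
    for entry in root.findall("./connectionStrings/add"):
        conn = entry.get("connectionString", "")
        if AZURE_HOST_SUFFIX not in conn.lower():
            flagged.append(entry.get("name", "<unnamed>"))
    return flagged


# Placeholder web.config: one local entry that was not updated, one SQL Azure entry.
sample = """<configuration>
  <connectionStrings>
    <add name="Local" connectionString="Data Source=.\\SQLEXPRESS;Initial Catalog=Sitefinity;Integrated Security=True" />
    <add name="Cloud" connectionString="Server=tcp:myserver.database.windows.net;Database=Sitefinity;User ID=admin@myserver;Password=...;Trusted_Connection=False;Encrypt=True;" />
  </connectionStrings>
</configuration>"""

print(non_azure_connection_strings(sample))  # ['Local']
```

Running a check like this before packaging flags the entry that would otherwise leave the deployed role cycling into the stopped state.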

Chris Hoffman posted the Can Cloud Computing Help Fix Healthcare? question to the TripleTreeLLC blog on 8/23/2010 with an abridged version of a CloudBook magazine article by Scott Donahue:

(The following is an excerpt from an article our colleague Scott Donahue authored for CloudBook magazine on hCloud – read the full article here)

Few topics have dominated the political news cycle over the past year more than health care reform. The recently passed Patient Protection and Affordable Care Act is aimed at improving the quality, cost, and accessibility of health care in the United States – an indisputably massive but much-needed undertaking.

Aside from political debates in Washington, the technology industry continues to buzz about cloud computing. It may seem, at first glance, that health care reform and cloud computing are unrelated, but TripleTree’s research and investment banking advisory work across the health care landscape are proving otherwise; the linkage with cloud is actually quite significant.

Our viewpoint is that cloud computing may end up mending a health care system that has largely let a decade of IT innovation pass by and now finds itself trapped in inefficiency and stifled by legacy IT systems.

Much has already been written about cloud computing’s potential and demonstrated successes at helping enterprise IT infrastructures adapt and transform into more efficient and flexible environments. But where does cloud computing fit within health care?

We have long espoused that innovation in health care needs to come from outside of the industry. Today, the likes of Amazon, Dell, Google, IBM, Intuit, and Microsoft have built early visions for cloud computing and see a role for themselves as health care solution providers. We are convinced that traditional HIT vendors will benefit from aligning with these groups such that their domain-specific knowledge can attach itself to approaches for cloud (public, private and hybrid), creating a transformational shift in the health care industry.

Cloud is active, relevant and fluid… see our colleague Jeff Kaplan’s recent blog post on the changing competitive landscape.

Chris Hoffman is shown in the photo above. Microsoft’s HealthVault is an example of a commercial cloud-based healthcare application.


Visual Studio LightSwitch

Brad Becker of Microsoft’s Silverlight Team attempted to dispel recent rumors of Silverlight’s demise at the hands of HTML5 in his The Future of Silverlight essay of 9/1/2010:

There's been a lot of discussion lately around web standards and HTML 5 in particular. People have been asking us how Silverlight fits into a future world where the <video> tag is available to developers. It's a fair question—and I'll provide a detailed answer—but I think it's predicated upon an oversimplification of the role of standards that I'd like to clear up first. I'd also like to delineate why premium media experiences and "apps" are better with Silverlight and reveal how Silverlight is going beyond the browser to the desktop and devices.

Standards and Innovation

It's not commonly known, perhaps, that Microsoft is involved in over 400 standards engagements with over 150 standards-setting organizations worldwide. One of the standards we've been involved in for years is HTML and we remain committed to it and to web standards in general. It's not just idle talk; Microsoft has many investments based on or around HTML such as SharePoint, Internet Explorer, and ASP.NET. We believe HTML 5 will become ubiquitous just like HTML 4.01 is today.

But standards are only half of the story when we think of the advancement of our industry. Broadly-implemented standards are like paved roads. They help the industry move forward together. But before you can pave a road, someone needs to blaze a trail. This is innovation. Innovation and standards are symbiotic—innovations build on top of other standards so that they don't have to "reinvent the wheel" for each piece of the puzzle. They can focus on innovating on the specific problem that needs to be solved. Innovations complement or extend existing standards. Widely accepted innovations eventually become standards. The trails get paved.

In the past, this has happened several times as browsers implemented new features that later became standards. Right now, HTML is adopting as standards the innovations that came from plug-ins like Flash and Silverlight. This is necessary because some of these features are so pervasive on the web that they are seen by users as fundamentally expected capabilities. And so the baseline of the web becomes a little higher than it was before. But user expectations are always rising even faster—there are always more problems we can solve and further possibilities needing to be unlocked through innovation.

This is where Silverlight comes in. On the web, the purpose of Silverlight has never been to replace HTML; it's to do the things that HTML (and other technologies) couldn't in a way that was easy for developers to tap into. Microsoft remains committed to using Silverlight to extend the web by enabling scenarios that HTML doesn't cover. From simple “islands of richness” in HTML pages to full desktop-like applications in the browser and beyond, Silverlight enables applications that deliver the kinds of rich experiences users want. We group these into three broad categories: premium media experiences, consumer apps and games, and business/enterprise apps.

Premium Media Experiences

Examples include:

- Teleconferencing with webcam/microphone

- Video on demand applications with full DVR functionality and content protection like Netflix

- Flagship online media events like the Olympics as covered by NBC, CTV, NRK, and France Télévisions

- Stream Silverlight video to desktops, browsers, and iPhone/iPad with IIS Smooth Streaming

Even though these experiences are focused on media, they are true applications that merge multiple channels of media with overlays and provide users with full control over what, when, and how they experience the content. The media features of Silverlight are far beyond what HTML 5 will provide and work consistently in users' current and future browsers. Key differentiators in these scenarios include:

- High Definition (HD) H.264 and VC-1 video

- Content protection including DRM

- Stereoscopic 3D video

- Multicast

- Live broadcast support

- (Adaptive) Smooth Streaming

- Information overlays / Picture-in-picture

- Analytics support with the Silverlight Analytics Framework

Consumer Apps and Games

The bar is continually rising for what consumers expect from their experiences with applications and devices. Whether it's a productivity app or a game, they want experiences that look, feel, and work great. Silverlight makes it possible for designers and developers to give the people what they want with:

- Fully-customizable controls with styles and skins

- The best designer – developer workflow through our tools and shared projects

- Fluid motion via bitmap caching and effects

- Perspective 3D

- Responsive UI with .NET and multithreading

Business/Enterprise Apps

As consumers get used to richer, better experiences with software and devices, they're bringing those expectations to work. Business apps today need a platform that can meet and exceed these expectations. But the typical business app is built for internal users and must be built quickly and without the aid of professional designers. To these ends, Silverlight includes the following features to help make rich applications affordable:

- Full set of 60+ pre-built controls, fully stylable

- Productive app design and development tools

- Powerful performance with .NET and C#

- Powerful, interactive data visualizations through charting controls and Silverlight PivotViewer

- Flexible data support: Databinding, binary XML, LINQ, and Local Storage

- Virtualized printing

- COM automation (including Microsoft Office connectivity), group policy management

Other Considerations

For simpler scenarios that don't require some of the advanced capabilities mentioned above, Silverlight and HTML both meet the requirements. However, when looking at both the present and future state of platform technologies, there are some other factors to take into consideration, such as performance, consistency and timing.

Performance

The responsiveness of applications and the ability for a modern application to perform sophisticated calculations quickly are fundamental elements that determine whether a user's experience is positive or not. Silverlight has specific features that help here, from the performance of the CLR, to hardware acceleration of video playback, to user-responsiveness through multithreading. In many situations today, Silverlight is the fastest runtime on the web.

Consistency

Microsoft is working on donating test suites to help improve consistency between implementations of HTML 5 and CSS3 but these technologies have traditionally had a lot of issues with variation between browsers. HTML 5 and CSS 3 are going to make this worse for a while as the specs are new and increase the surface area of features that may be implemented differently. In contrast, since we develop all implementations of Silverlight, we can ensure that it renders the same everywhere.

Timing

In about half the time HTML 5 has been under design, we've created Silverlight and shipped four major versions of it. And it's still unclear exactly when HTML 5 and its related specs will be complete with full test suites. For HTML 5 to be really targetable, the spec has to stabilize, browsers have to all implement the specs in the same way, and over a billion people have to install a new browser or buy a new device or machine. That's going to take a while. And by the time HTML 5 is broadly targetable, Silverlight will have evolved significantly. Meanwhile, Silverlight is here now and works in all popular browsers and OS's.

Beyond the Browser

In this discussion of the future of Silverlight, there's a critical point that is sometimes overlooked as Silverlight is still often referred to—even by Microsoft—as a browser plug-in. The web is evolving and Silverlight is evolving, too. Although applications running inside a web browser remain a focus for us, two years ago we began showing how Silverlight is much more than a browser technology.

There are three areas of investment for Silverlight outside the browser: the desktop, the mobile device, and the living room. Powerful desktop applications can be created with Silverlight today. These applications don't require a separate download—any desktop user with Silverlight installed has these capabilities. These apps can be discovered and downloaded in the browser but are standalone applications that are painless to install and delete. Silverlight now also runs on mobile devices and is the main development platform for the new Windows Phone 7 devices. Developers that learned Silverlight instantly became mobile developers. Lastly, at NAB and the Silverlight 4 launch this year we showed how Silverlight can be used as a powerful, rich platform for living room devices as well.

Expect to see more from Silverlight in these areas especially in our focus scenarios of high-quality media experiences, consumer apps and games, and business apps.

When you invest in learning Silverlight, you get the ability to do any kind of development from business to entertainment across screens from browser to mobile to living room, for fun, profit, or both. And best of all, you can start today and target the 600,000,000 desktops and devices that have Silverlight installed.

If you haven't already, start here to download all the tools you need to start building Silverlight apps right now.

For more information on this topic, you can watch a video with more details here.

Brad Becker, Director of Product Management, Developer Platforms

The status of Silverlight is critical to Visual Studio LightSwitch’s future success on the desktop and in the browser.

Larry O’Brien casts a jaundiced eye on Visual Studio LightSwitch in his feature-length Windows & .NET Watch: LightSwitch turns up article of 9/1/2010 for SD Times on the Web:

The first beta of Microsoft’s new LightSwitch development environment should be available on MSDN about the time you read this. LightSwitch was code-named “KittyHawk” during its incubation (using Microsoft’s proprietary GratuiToUs capitalizatIon algorithm) and is the basis for the “return of FoxPro” rumors that have been kicking around recently. It is not, though, an evolution of the FoxPro or xBase languages, but rather a new Visual Studio SKU that produces applications backed by either C# or Visual Basic and deployed on either Silverlight or the full .NET CLR.[*] It does, however, embrace the “data + screens = programs” concept of programming that was so popular in the late 1980s.

This is, in some ways, an indictment. A Rip Van Winkle who fell asleep at the launch of Visual Basic 1.0 and woke at the Silverlight unveiling would be unlikely to guess that 20 years had passed. Apparently, programming is still the realm of an elite group that cannot quickly produce small- and medium-sized data-driven applications, and does so using hard-to-use tools that require a lot of repetitious, boring, error-prone work.

It seems the solution to this is a tool that hides as much code as possible from the power user or newer developer. Even if you buy that argument (and I’m not at all sure that you should), why should you believe the “this time we got it right” assurance that applications written by newcomers will not be fragile, poorly structured and unable to scale?

The answer to the scaling part of that question is Azure. Microsoft is pushing the message that they are “all in” to cloud computing, and LightSwitch can use Azure to host your data, your application, or both. The LightSwitch code-generation process takes care of paging, caching logic, data validation, and other sorts of code that, no doubt, can cause trouble, especially for less-experienced developers. [Emphasis added.]

On the other hand, scaling is no different than any other hard problem in software development—a trade-off that was logical at one scale may not be a good choice at another scale. It’s not impossible to have an application that can scale without the developer directly addressing issues of lazy evaluation, data aliasing and so forth, but it’s a crapshoot.

* The way I read the LightSwitch tea leaves, Silverlight is an integral, not optional, component.

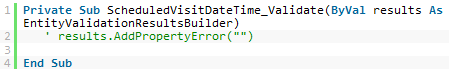

Paul Patterson (@PaulPatterson) explained Microsoft LightSwitch – Using the Entity Field Custom Validation in this 9/1/2010 tutorial:

In my last post I demonstrated how to use the LightSwitch Is Computed field property. In this post I am going to extend the validation of a field by doing some Custom Validation.

The scenario is this: I want to schedule the times to go and visit customers, be it for a customer sales call, some training, or whatever. I want to build some scheduling into my application to help better manage my time.

Here is a look at my Customer table that I created earlier:

The Customer Table

What I am going to do is add a new field to my Customer entity. The new field is going to be a DateTime field, rather than just a Date type, because I want to keep track of both the date and the time that I schedule.

Adding the DateTime Field to my Customer Entity

Now, with my new ScheduledVisitDateTime field added to my Customer entity, I am going to add it to a screen so that I can start adding some scheduling.

I already have a List and Details type screen that I created earlier. I open the CustomerList screen designer.

Over on the left-hand side of the designer I see the listing for the CustomersCollection that my screen is using. In the list I see the new ScheduledVisitDateTime field I created.

New field showing in screen designer

Because I created this screen with an earlier version of my Customer table, the new ScheduledVisitDateTime field does not automatically get added to the screen. So I drag and drop the ScheduledVisitDateTime field to the vertical stack used for the CustomerDetails section of my screen. I drop the field underneath the existing Last Contact Date field in the tree.

Adding the field to the screen (via the designer)

Super! Now I hit the F5 key to start the application in debug mode…

Hmm. Interesting. The application starts, as expected, and I can now see the ScheduledVisitDateTime field on my screen. Here is what I see…

The new field on the screen

There are three things that I see that I have to “tweak”. The first is the silly looking label. The second is that the label is bold, which suggests that the field is configured with the Is Required property set to true. The third thing I notice is the stupid looking date and time that defaults for the field.

“1/1/1900 12:00AM” is not a date that I want to default in there. In fact, I want the field to default with nothing in it. I want the option to either have a date, or none at all. I think what happened was that because the Is Required property was set to true when I first added the field, LightSwitch may have added some default dates to all my existing records. I am not totally sure that this was the case; however, something in my head is telling me this is what happened.

So back to the table designer I go.

In the Customer table designer I give the ScheduledVisitDateTime field better Display Name and Description property values. I also update the Is Required property so that it isn’t checked. This will give me the option to save a customer without having a date and time value in my new field.

Updated Field Properties

I’m pretty sure that I know a little something about setting LightSwitch field properties now, so I fire up the application again by hitting the F5 key, just to prove that little horned fella, let’s name him Nelson for now, on my shoulder wrong…

…Nelson laughs.

All looks good in the running application, except for those nasty defaults again. Not sure why those show like that. Just as a test, I added and saved a new customer, but didn’t add any value for my new field. Interestingly, the record saved without a value, and when I view the new customer via my CustomerList screen, the new field contains an empty value.

No worries. I delete the ScheduledVisitDateTime value for all my customers, to start from a clean slate.

Moving on…

With my new field, I now want to add some simple validation for any data that I enter in it. The validation, or business rule is that I want to only allow a date and time that is in the future. It would be silly to add a past date into a place where I want to store dates for the future.

“What kind of scheduling is that!?! Dumb Ass! Haw Haw” …Nelson exclaims.

Back to the Customer table designer in LightSwitch.

With the ScheduledVisitDateTime field selected, I head over to the Properties panel. At the bottom of the Properties panel is a link titled Custom Validation. I click it.

The Custom Validation link

Clicking the Custom Validation link opens the code designer for my Customer entity. Specifically, the code designer opens with a procedure stub already created and titled ScheduledVisitDateTime_Validate()…

This is where I am going to add some logic that will validate that the date and time I enter into the ScheduledVisitDateTime field are in the future. So, that’s what I do…
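The validation code itself appeared as a screenshot that isn't preserved here. As a hedged, language-neutral sketch of the same business rule (not LightSwitch's API), the Python function below returns an error message when a scheduled visit is not in the future and accepts an empty value, since Is Required was unchecked. The function name and message text are hypothetical; in LightSwitch the equivalent check goes inside the generated ScheduledVisitDateTime_Validate() stub. Passing `now` explicitly keeps the rule testable.

```python
from datetime import datetime, timedelta
from typing import Optional

ERROR_MESSAGE = "The next scheduled visit must be in the future."  # hypothetical text


def validate_scheduled_visit(value: Optional[datetime],
                             now: Optional[datetime] = None) -> Optional[str]:
    """Return an error message if the visit is not in the future, else None."""
    if value is None:
        # Empty is allowed: the field is no longer required.
        return None
    current = now if now is not None else datetime.now()
    if value <= current:
        return ERROR_MESSAGE
    return None


now = datetime(2010, 9, 1, 12, 0)
print(validate_scheduled_visit(None, now))                     # None
print(validate_scheduled_visit(now - timedelta(days=1), now))  # The next scheduled visit must be in the future.
print(validate_scheduled_visit(now + timedelta(days=7), now))  # None
```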

So I run the application by hitting the F5 key.

KaBLAMMO. {Insert Roger Daltrey “yeeeaaaahhhh…..” Won’t Get Fooled Again scream here}

…Nelson has fallen off my shoulder. Not sure where he went to.

Meanwhile, back at the LightSwitch ranch… the application launches and displays my CustomerList screen. I select the first customer record, purposely click the field labelled Next Scheduled Visit, and select a date in the past.

Validation errors showing on screen.

Looks like my custom validation is working! LightSwitch shows a notification in the tab for the CustomerList screen, as well as a red border around my new field. Clicking the message in the tab brings up the error message that I defined in my validation code…

The tab for the screen showing the error message.

Hovering over the corner of the field’s red-border error indicator causes LightSwitch to display the same error message as a tooltip…

Same error message as a ToolTip.

Cool!

Now, I update the value to a future datetime value and presto, the error messages go away. I save the record. I now am able to better manage my time by scheduling my next visit with my customers.

In my next post, I am going to extend this scheduling stuff by adding a new table with a relationship with this one. Doing so will let me add more granular scheduling, as well as set me up to better visualize my schedule by using queries.

Stay tuned!

Beth Massi (@bethmassi) explained Validating Collections of Entities (Sets of Data) in LightSwitch in this 8/31/2010 tutorial:

One of the many challenging things in building n-tier applications is designing a validation system that allows running rules on both the client and the server and sending messages and displaying them back on the client. I’ve built a couple application frameworks in my time and so I know how tricky this can be. I’ve been spending time digging into the validation framework for LightSwitch and I have to say I’m impressed. LightSwitch makes it easy to write business rules in one place and run them in the appropriate tiers. Prem wrote a great article detailing the validation framework that he posted on the LightSwitch Team Blog yesterday that I highly recommend you read first:

Overview of Data Validation in LightSwitch Applications.

Most validation rules you write are rules that you want to run on both the client and the server (middle-tier) and LightSwitch does a great job of handling that for you. For instance when you put a validation rule on an entity property this rule will run first on the client. If there is an error the data must be corrected before it can be saved to the middle-tier. This gives the user an immediate response but also makes the application scale better because you aren’t unnecessarily bothering the middle-tier. Once the validation passes on the client, it is run again on the middle-tier. This is best practice when building middle-tiers - don’t ever assume data coming in is valid.
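To make the two-tier flow concrete, here is a minimal sketch in Python rather than LightSwitch code: one set of rules is defined once, run by the client before it sends anything, and run again by the middle tier, which never trusts incoming data. The entity shape (a dict), the `quantity_is_positive` rule, and the `client_save`/`server_save` names are all hypothetical.

```python
from typing import Callable, Dict, List

# An entity is modeled as a plain dict; a rule returns a list of error messages.
Rule = Callable[[dict], List[str]]


def quantity_is_positive(entity: dict) -> List[str]:
    # Hypothetical property rule, used only for illustration.
    if entity.get("quantity", 0) > 0:
        return []
    return ["Quantity must be greater than zero."]


RULES: List[Rule] = [quantity_is_positive]


def run_rules(entity: dict) -> List[str]:
    errors: List[str] = []
    for rule in RULES:
        errors.extend(rule(entity))
    return errors


def server_save(entity: dict, store: Dict[int, dict]) -> List[str]:
    # Middle tier: re-run the same rules; never assume incoming data is valid.
    errors = run_rules(entity)
    if not errors:
        store[entity["id"]] = entity
    return errors


def client_save(entity: dict, store: Dict[int, dict]) -> List[str]:
    # Client tier: fail fast so invalid data never costs a round-trip.
    errors = run_rules(entity)
    if errors:
        return errors
    return server_save(entity, store)


db: Dict[int, dict] = {}
print(client_save({"id": 1, "quantity": 0}, db))   # ['Quantity must be greater than zero.']
print(server_save({"id": 2, "quantity": -5}, db))  # same error: the middle tier re-checks
print(client_save({"id": 3, "quantity": 4}, db))   # []
print(sorted(db))                                  # [3]
```

Writing the rule once and calling it from both tiers is the point: the client check buys responsiveness, and the server check buys safety.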

Validating sets (or collections) of data can get tricky. You usually want to validate the set on the client first but then you have to do it again on the middle-tier, not only because you don’t trust the client, but also because the set of data can change in a multi-user environment. You need to take the change set of data coming in from the client, merge it with the set of data stored in the database, and then proceed with validation. Dealing with change sets and merging yourself can get pretty tricky sometimes. What I didn’t realize at first is that LightSwitch also handles this for you.
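Why the merge matters can be shown with a small sketch. LightSwitch does this merge for you; the Python below only illustrates the idea under stated assumptions: rows are dicts keyed by ID, a change set maps IDs to new rows (or `None` for a delete), and the set-level rule is a hypothetical credit limit on the order total. The client's own copy passes, but the merged set, which includes a line another user added, fails.

```python
from typing import Dict, Optional

Rows = Dict[int, dict]


def merge_change_set(stored: Rows, changes: Dict[int, Optional[dict]]) -> Rows:
    """Apply a client change set to the stored rows.

    A dict value inserts or updates that key; None deletes it.
    """
    merged = dict(stored)  # leave the stored rows untouched
    for key, row in changes.items():
        if row is None:
            merged.pop(key, None)
        else:
            merged[key] = row
    return merged


def total_within_limit(rows: Rows, limit: float) -> bool:
    # Hypothetical set-level rule: the order lines must not exceed a credit limit.
    return sum(row["amount"] for row in rows.values()) <= limit


# The database now holds line 3, added by another user after this client loaded the order.
stored = {1: {"amount": 40.0}, 2: {"amount": 30.0}, 3: {"amount": 25.0}}
# This client saw lines 1 and 2 only, edited line 2, and added line 4.
client_view = {1: {"amount": 40.0}, 2: {"amount": 35.0}, 4: {"amount": 20.0}}
changes = {2: {"amount": 35.0}, 4: {"amount": 20.0}}

print(total_within_limit(client_view, 100.0))                        # True  (total 95.0)
print(total_within_limit(merge_change_set(stored, changes), 100.0))  # False (total 120.0)
```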

Example – Preventing Duplicates

Let’s take an example that I was working on this week. I have the canonical OrderHeader --< OrderDetails >-- Product data model. I want a rule that makes sure no duplicate products are chosen across OrderDetail line items on any given order. So if a user enters the same product twice on an order, validation should fail. Here I have an orders screen that lets me edit all the orders for a selected customer. For each order I should not be allowed to enter the same product more than once:

Where Do the Rules Go?

You can write rules in xxx_Validate methods for entity properties (fields) and the entity itself. From the Entity Designer select the property name then click the arrow next to the “Write Code” button to drop down the list of available methods. The property methods will display for the selected property. The entity methods are under “General Methods”. In my example if you select the Product property and drop down the list of methods you see two validation methods Product_Validate and OrderDetails_Validate.

The Property Methods change as you select an entity property (field) in the designer, but the General Methods are always displayed for the entity you are working with. Property _Validate methods run both on the client and then again on the middle-tier. Entity _Validate methods run on the server; these are called DataService validations.

In my order entry scenario I was first tempted to write code in the DataService on the OrderHeader entity and check the collection of OrderDetails there. When I select the OrderHeader entity in the Entity Designer, click the arrow next to the “Write Code” button, and select OrderHeaders_Validate, a method stub is generated for me in the ApplicationDataService class. This is where I was thinking I could validate my set of OrderDetails and return an error if there were duplicates.

Public Class ApplicationDataService

    Private Sub OrderHeaders_Validate(ByVal entity As OrderHeader, ByVal results As EntitySetValidationResultsBuilder)
        Dim isValid = False
        'Write code to validate entity.OrderDetails collection
        '....
        If Not isValid Then
            results.AddPropertyError("There are duplicated products on the order")
        End If
    End Sub

End Class

However, I quickly realized that this wouldn't work, because the OrderHeader entity itself would have to change for this validation to fire. If a user is editing the line items (OrderDetails) of an existing order, only the OrderDetail validation fires, not the OrderHeader validation. A second problem with putting the rule in the ApplicationDataService class is that the user would have to click Save before the rule fired, which means an unnecessary round-trip to the middle-tier. We want to be able to check this set for problems on the client first. A third problem is that any error found would be presented only as a general validation message on the order, so the user would have to stare at the screen to figure out which line was wrong.

I think I went this route in the first place because I believed I needed to merge the change set coming from the client with the data in the database and then validate that myself. It turns out that LightSwitch handles this for you. When you validate a set of data (an entity collection) on the client, you are validating what is on the user's screen. When the validation runs on the server, you are validating the merged set of data. NICE!

(Note that you can still access the change set via the DataWorkspace object, but we'll dive into that in a future post.)
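To make "validating the merged set" concrete, here is a minimal, language-neutral sketch of what that merge amounts to. It is not LightSwitch code and not how LightSwitch implements it. The dict-based rows and the functions merge_change_set and duplicated_products are hypothetical names chosen for illustration. The example shows a duplicate that is invisible on one user's screen but shows up once that user's change set is overlaid on what another user already saved.

```python
from typing import Dict, List, Optional, Set

# Hypothetical shapes, NOT LightSwitch types: a line item is a dict with
# "id" and "product_id"; an order's stored lines are keyed by line-item id.
Row = Dict[str, Optional[int]]


def merge_change_set(stored: Dict[int, Row], added: List[Row],
                     modified: List[Row], deleted: Set[int]) -> Dict[int, Row]:
    """Overlay a client change set on the rows currently stored on the server."""
    merged = {row_id: dict(row) for row_id, row in stored.items()}  # leave `stored` untouched
    for row_id in deleted:
        merged.pop(row_id, None)          # deletions remove stored rows
    for row in modified + added:
        merged[row["id"]] = dict(row)     # edits replace rows, additions append them
    return merged


def duplicated_products(rows: Dict[int, Row]) -> Set[int]:
    """Set-level check: product ids that appear on more than one line item."""
    seen: Set[int] = set()
    dupes: Set[int] = set()
    for row in rows.values():
        product_id = row.get("product_id")
        if product_id is None:            # a line with no product chosen yet
            continue
        if product_id in seen:
            dupes.add(product_id)
        seen.add(product_id)
    return dupes


# Another user saved line 2 (product 20) after this client loaded the order.
stored = {1: {"id": 1, "product_id": 10}, 2: {"id": 2, "product_id": 20}}
# This client only sees line 1 and adds line 3, also for product 20.
screen = {1: {"id": 1, "product_id": 10}, 3: {"id": 3, "product_id": 20}}

print(duplicated_products(screen))   # set(): passes on the client
merged = merge_change_set(stored, added=[{"id": 3, "product_id": 20}],
                          modified=[], deleted=set())
print(duplicated_products(merged))   # {20}: caught on the server
```

The sketch assumes new rows already carry unique ids. A real framework would map temporary client keys to server keys before merging.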

The Right Way to Write this Rule

Because LightSwitch does all the heavy lifting for me, this rule is a whole lot easier to implement. Since we're checking for duplicate products on each OrderDetail, we need to put the code in the Product_Validate method of the OrderDetail entity (see screenshot above). Now we can write a simple LINQ query to check for duplicates.

Public Class OrderDetail

    Private Sub Product_Validate(ByVal results As EntityValidationResultsBuilder)
        If Me.Product IsNot Nothing Then
            'Look at all the OrderDetails that:
            ' 1) have a product specified (detail.Product IsNot Nothing)
            ' 2) have the same product ID as this entity (detail.Product.Id = Me.Product.Id)
            ' 3) are not this entity (detail IsNot Me)
            Dim dupes = From detail In Me.OrderHeader.OrderDetails
                        Where detail.Product IsNot Nothing AndAlso
                              detail.Product.Id = Me.Product.Id AndAlso
                              detail IsNot Me

            'If Count is greater than zero then we found a duplicate
            If dupes.Count > 0 Then
                results.AddPropertyError(Me.Product.ProductName + " is a duplicate product")
            End If
        End If
    End Sub

End Class

This validation fires for every line item we add or update on the order. It fires first on the client, where Me.OrderHeader.OrderDetails is the collection of line items displayed on the screen. If the rule passes on the client, it fires again on the middle-tier, where Me.OrderHeader.OrderDetails is the collection of line items sent from the client merged with the data on the server. This means that even if another user has modified the line items on the order, we can still validate this set of data properly. Also notice that the error message is attached to the Product property of the OrderDetail entity, so when the user clicks the message in the validation summary at the top of the screen, the offending row in the grid is highlighted.
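The per-row behavior of that rule can be mimicked outside LightSwitch to show why it matters which collection it runs against. This Python sketch applies the same three conditions as the VB query (has a product, same product, not this row) to every line and returns one message per offending line, keyed by line id. The line id stands in for the grid row that LightSwitch highlights. LineItem, product_errors, and the sample product names are hypothetical. The two calls contrast the client's screen set with a merged set that includes another user's saved line.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class LineItem:                  # hypothetical stand-in for an OrderDetail row
    line_id: int
    product_id: Optional[int]
    product_name: str = ""


def product_errors(items: List[LineItem]) -> Dict[int, str]:
    """Run the per-row rule on every line; map line_id -> message for offending rows."""
    errors: Dict[int, str] = {}
    for item in items:
        if item.product_id is None:
            continue
        dupes = [other for other in items
                 if other.product_id is not None            # 1) has a product
                 and other.product_id == item.product_id    # 2) same product
                 and other is not item]                     # 3) not this row
        if dupes:
            errors[item.line_id] = f"{item.product_name} is a duplicate product"
    return errors


screen = [LineItem(1, 10, "Chai"), LineItem(3, 20, "Tofu")]
merged = screen + [LineItem(2, 20, "Tofu")]   # adds another user's saved line
print(product_errors(screen))   # {}
print(product_errors(merged))   # {3: 'Tofu is a duplicate product', 2: 'Tofu is a duplicate product'}
```

Like the original query, this compares each row with every other row, so it is quadratic in the number of lines. That is a reasonable trade for the size of a typical order.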

Stay tuned for more How Do I videos on writing business rules.

Enjoy!

<Return to section navigation list>

Windows Azure Infrastructure

James Urquhart went Exploring a healthy cloud-computing job market in his 9/1/2010 post to CNet News’ The Wisdom of Clouds blog:

While much of the global economy struggles with creating jobs, the high-tech industry has had a better record than most. Yes, there are conflicting reports about IT job growth overall. But in general, the market remains quite strong for technologists.

Within high tech itself, there is one standout opportunity for experienced, innovative people: cloud computing.

Even with the roar of VMworld-related cloud announcements--and counter-announcements--there have been a few really interesting data points that have come out this week with respect to cloud-related work.

For instance, Boto creator Mitch Garnaat noted last week that there were 181 jobs listed for Amazon Web Services in the U.S. alone. Rob La Gesse countered that Rackspace, the second-largest infrastructure-as-a-service provider, had 175 jobs available in the States. Conversations with sources at Terremark, IBM, and others indicate that they are quickly expanding their cloud teams in response to increasing market demand.

Those providing cloud infrastructure are also seeking very unusual skill sets that combine infrastructure and data center architecture and operations with application architecture and operations. Knowledge of server, network, and storage, and of the application of converged infrastructures to virtualized environments (or at least the ability to understand what that means), seems to be a baseline for the large systems vendors these days, such as my own employer, Cisco Systems, as well as IBM, Hewlett-Packard, Dell, Oracle, EMC, NetApp, and most others.

Perhaps the most surprising news about cloud jobs this week, however, came in an employment report from the freelance contractor job site Elance.

While development of applications to run in the cloud has largely been associated with Amazon Web Services to date, there appears to have been a sudden burst of interest in Google App Engine developers--in fact, a 10x increase since last quarter.

This burst of demand put App Engine ahead of AWS in Elance's ranking of top overall skills in demand, according to the Elance report.

I find this surprising only in that I haven't seen a lot of evidence of App Engine in the marketplace. But Krishnan Subramanian has a great post exploring why this might be. The combination of VMware's Spring framework with the App Engine cloud model seems to have attracted a large number of small Web applications, a claim that is reportedly substantiated by VMware's Jian Zhen's research on Alexa data.

The bottom line is that IT use of the cloud is growing very quickly, and demand for skills to enable that growth is climbing as a result. If you have skills related to IT operations, application administration/operations, or software development, now may be the time to dive into the cloud.

Graphic credit: CC pingnews/Flickr

Lori MacVittie’s (@lmacvittie) The Impossibility of CAP and Cloud essay of 9/1/2010 for F5’s DevCentral blog addresses Brewer’s CAP theorem and NP-Completeness in cloud computing:

It comes down to this: the on-demand provisioning and elastic scalability systems that make up "cloud" are addressing NP-Complete problems for which there are no known efficient exact solutions.

At the heart of what cloud computing provides – in addition to compute-on-demand – is the concept of elastic scalability. It is through the ability to rapidly provision resources and applications that we can achieve elastic scalability and, one assumes, through that high availability of systems. Obviously, given my relationship to F5 I am strongly interested in availability. It is, after all, at the heart of what an application delivery controller is designed to provide. So when a theorem is presented that basically says you cannot build a system that is Consistent, Available, and Partition-Tolerant I get a bit twitchy.

Just about the same time that Rich Miller was reminding me of Brewer's CAP Theorem, someone from HP Labs claimed to have resolved the P versus NP problem by proving P ≠ NP (a claim shortly thereafter determined not to be a valid proof). That got me thinking about NP-Completeness in problem sets, and the problem of creating a distributed CAP-compliant system certainly appears to be one of them.

CLOUD RESOURCE PROVISIONING is NP-COMPLETE

A core conflict with cloud and CAP-compliance is on-demand provisioning. There is, after all, a finite set of resources available (cloud is not infinitely scalable), with, one assumes, each resource having a variable amount of compute available. For example, most cloud providers use a "large", "medium", and "small" sizing approach to "instances" (which are, in almost all cases, virtual machines). Each "size" has a defined amount of reserved compute (RAM and CPU). Customers of cloud providers provision instances by size.

At first glance this should not be a problem. The provisioning system is given an instruction, i.e. "provision instance type X." The problem begins when you consider what happens next: the provisioning system must find a hardware resource with enough available capacity on which to launch the instance.

In theory this certainly appears to be a variation of the bin packing problem (which is NP-complete). It is (one hopes) resolved by the cloud provider either by removing the variability of location (parameterization) or by using approximation (the greedy "first-fit" approximation algorithm, for example). In a pure on-demand provisioning environment, the management system would search out, in real time, a physical server with enough physical resources available to support the requested instance. It would also try to do so in a way that minimizes the utilization of physical resources on each machine, so as to better guarantee availability for future requests and to be more efficient (and thus cost-effective).
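For readers who haven't met it, first-fit is easy to sketch. This is a minimal illustration, not any provider's actual placement logic. The small (4 GB, 1 CPU) and large (16 GB, 4 CPU) sizes come from the essay's own example further on. The two 32 GB, 8-CPU hosts and the Host and first_fit names are hypothetical. Each request makes one pass over the hosts and takes the first one with room. That keeps placement fast, but it never reconsiders earlier choices, which is why it is an approximation rather than an exact bin-packing solution.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Instance sizes from the essay's example: (RAM in GB, CPUs).
SIZES: Dict[str, Tuple[int, int]] = {"small": (4, 1), "large": (16, 4)}


@dataclass
class Host:                      # hypothetical physical server
    name: str
    free_ram: int
    free_cpu: int
    placed: List[str] = field(default_factory=list)


def first_fit(hosts: List[Host], size: str) -> Optional[str]:
    """Greedy first-fit: put the instance on the first host that has room.
    Fast (one pass over the hosts) but with no guarantee of an optimal packing."""
    ram, cpu = SIZES[size]
    for host in hosts:
        if host.free_ram >= ram and host.free_cpu >= cpu:
            host.free_ram -= ram
            host.free_cpu -= cpu
            host.placed.append(size)
            return host.name
    return None   # no host has room: the request cannot be satisfied


hosts = [Host("A", 32, 8), Host("B", 32, 8)]
requests = ["small", "large", "large", "large", "small", "large"]
print([first_fit(hosts, r) for r in requests])   # ['A', 'A', 'B', 'B', 'A', None]
```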

It is impractical, of course, to query each physical server in real time to determine an appropriate location, so no doubt there is a centralized "inventory" of available resources that is updated whenever an instance is successfully provisioned. Note that this does not avoid the problem of NP-Completeness and the resulting lack of an efficient exact solution, as data replication/synchronization is also an NP-Complete problem. Now, because variability in size and an inefficient provisioning algorithm could result in a fruitless search, providers might (and probably do) partition each machine based on the available instance sizes and the capacity of the machine. If you are looking for anecdotal evidence of this, note that most providers size instances as multiples of the smallest. If a large instance is 16 GB RAM and 4 CPUs, then a physical server with 32 GB of RAM and 8 CPUs can support exactly two large instances. If a small instance is 4 GB RAM and 1 CPU, that same server could ostensibly support a mix of sizes: 8 small instances, or 4 small instances and 1 large instance, etc. However, that would make it difficult to keep track of resource availability by instance size and would eventually result in a failure of capacity availability (which makes the system non-CAP compliant).
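The sizing arithmetic can be checked mechanically. This sketch uses only the essay's figures: small = 4 GB and 1 CPU, large = 16 GB and 4 CPUs, host = 32 GB and 8 CPUs. The function name full_mixes is hypothetical. For each possible count of large instances, it fills the remaining RAM and CPU with as many small instances as fit.

```python
from typing import List, Tuple

SMALL = (4, 1)    # (RAM GB, CPUs), per the essay
LARGE = (16, 4)
HOST = (32, 8)


def full_mixes(host: Tuple[int, int] = HOST, small: Tuple[int, int] = SMALL,
               large: Tuple[int, int] = LARGE) -> List[Tuple[int, int]]:
    """For each possible number of large instances, fill the rest with small ones.
    Returns (large_count, small_count) pairs."""
    mixes = []
    max_large = min(host[0] // large[0], host[1] // large[1])
    for n_large in range(max_large + 1):
        ram_left = host[0] - n_large * large[0]
        cpu_left = host[1] - n_large * large[1]
        n_small = min(ram_left // small[0], cpu_left // small[1])
        mixes.append((n_large, n_small))
    return mixes


print(full_mixes())   # [(0, 8), (1, 4), (2, 0)]
```

Because both sizes keep the same 4 GB-per-CPU ratio, RAM and CPU run out together. Two large instances already consume the whole host, so any mix of two large plus some small would need more than 32 GB.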

However, not restricting the instances that can be deployed on a physical server returns us to a bin packing-like algorithm that is NP-complete, which necessarily introduces unknown latency that could impact availability. This method also introduces the possibility that, while the system is searching for an appropriate location, another consumer requests an instance and it is provisioned on a server that could have supported the first consumer's request. That is a failure to achieve CAP-compliance by violating the consistency constraint (and likely the availability constraint as well).
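The race described above can be replayed step by step. This sketch deliberately swaps in a technique the essay does not name: a version-checked ("optimistic") commit against the central inventory. Inventory, choose_host, and the single 16 GB host are hypothetical. Two consumers search the same snapshot and pick the same host. The version check stops the second commit from double-booking the host, which protects consistency. The losing consumer then finds nothing left, which is the availability cost.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class Inventory:
    """Hypothetical central inventory: free RAM (GB) per host plus a version counter."""
    free_ram: Dict[str, int]
    version: int = 0

    def snapshot(self) -> Tuple[Dict[str, int], int]:
        return dict(self.free_ram), self.version

    def commit(self, host: str, ram: int, read_version: int) -> bool:
        """Reserve only if nothing has changed since `read_version` was read."""
        if read_version != self.version or self.free_ram[host] < ram:
            return False
        self.free_ram[host] -= ram
        self.version += 1
        return True


def choose_host(free: Dict[str, int], ram: int) -> Optional[str]:
    """The search step: first host in the snapshot with enough free RAM."""
    for host, available in free.items():
        if available >= ram:
            return host
    return None


# Two consumers each want a 16 GB instance; the only host has room for one.
inv = Inventory({"A": 16})
free1, ver1 = inv.snapshot()        # consumer 1 starts searching
free2, ver2 = inv.snapshot()        # consumer 2 reads the same, soon-stale, state
host1 = choose_host(free1, 16)      # both searches pick host "A"
host2 = choose_host(free2, 16)
print(inv.commit(host2, 16, ver2))  # True: consumer 2 commits first
print(inv.commit(host1, 16, ver1))  # False: consumer 1's read is stale
free1, ver1 = inv.snapshot()        # consumer 1 retries against fresh state
print(choose_host(free1, 16))       # None: no capacity left for consumer 1
```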

The provisioning will never be "perfect" because there is no known efficient exact algorithm for an NP-complete problem. That means the solution is basically the fastest/best it can be given the constraints, which we often distill down to "good enough." That in turn means there are cases where either availability or consistency will be violated, making cloud in general non-CAP compliant.

The core conflict is the definition of "highly available" as "working with minimal latency." Or perhaps the real issue is the definition of "minimal". A management system that leverages opportunistic locking and shared data systems could certainly alleviate the problem of consistency, but never that of availability. Eliminating the consistency problem by ensuring that every request has exclusive access to the "database" of instances while it searches for an appropriate physical location introduces latency while others wait. This is the "good enough" solution used by CPU schedulers: the CPU scheduler is the one and only authority for CPU time-slice management. It works more than well enough on a per-machine basis, but it is not scalable, and in larger systems it would result in higher rates of non-availability as the number of requests grows.
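The "single authority" approach can be sketched as one lock around the whole inventory. SerializedAllocator, run, and the two 32 GB hosts are hypothetical. The sketch shows the consistency half of the trade: however the threads interleave, no host is ever over-committed, and exactly as many 4 GB requests succeed as the capacity allows. It does not measure latency. The waiting the essay describes is simply every other thread blocking on the same lock while one search runs.

```python
import threading
from typing import Dict, List, Optional, Tuple


class SerializedAllocator:
    """Hypothetical single-authority allocator: one lock guards the whole
    inventory, so each search-and-reserve runs alone (consistent) while
    every other request waits for the lock (added latency)."""

    def __init__(self, free_ram: Dict[str, int]):
        self._free = dict(free_ram)
        self._lock = threading.Lock()

    def allocate(self, ram: int) -> Optional[str]:
        with self._lock:                      # exclusive access during the search
            for host, available in self._free.items():
                if available >= ram:
                    self._free[host] = available - ram
                    return host
            return None                       # refused: nothing fits

    def free(self) -> Dict[str, int]:
        with self._lock:
            return dict(self._free)


def run(n_requests: int, ram: int) -> Tuple[Dict[str, int], List[Optional[str]]]:
    """Fire n_requests concurrent allocations of `ram` GB each."""
    alloc = SerializedAllocator({"A": 32, "B": 32})
    results: List[Optional[str]] = [None] * n_requests

    def worker(i: int) -> None:
        results[i] = alloc.allocate(ram)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_requests)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return alloc.free(), results


final_free, results = run(20, 4)
print(final_free)              # {'A': 0, 'B': 0}
print(results.count(None))     # 4 requests refused once capacity is exhausted
```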

WHY SHOULD YOU CARE