Windows Azure and Cloud Computing Posts for 4/27/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated for the January 4, 2010 commercial release in April 2010.

Azure Blob, Table and Queue Services

Scott Densmore brings you up to date on Paging with Windows Azure Table Storage in this 4/27/2010 post:

Steve Marx has a great post on paging over data that uses the Storage Client Library shipped with previous versions of the Windows Azure SDK. You can update this code and make it work with the current SDK. It uses the DataServiceQuery and the underlying REST header calls to get the next partition and row key for the next query. In the current SDK, the CloudTableQuery<TElement> now handles continuation tokens for you. If you execute queries, you will not need to deal with the 1000-entity limitation. You can read more about this in Neil Mackenzie’s post on queries.

If you just execute your query, you will not deal with the continuation token and will simply run through your results. This could be bad if you have a large result set (blocking IIS threads, etc.). To handle this, you use the Async version to execute the query so you can get to the ResultSegment<TElement> and the ResultContinuation, depending on your query.

In the sample, we are displaying 3 entities per page. To get to the next or previous page of data we create a stack of tokens to allow you to move forward and back. The sample stores this stack in session so the tokens can persist between post backs. Since the ResultContinuation object is serializable, you can store it anywhere to persist between post backs. The stack is just an implementation detail to keep up with where you are in the query. The following is a diagram of what is going on in the page:

This is basically the same as what Steve did in his post but using the tokens and adding back functionality.
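The token-stack idea can be sketched independently of the Storage Client Library. The following Python sketch is only an illustration under stated assumptions: `fetch_segment`, `TokenPager` and the `ENTITIES` list are hypothetical stand-ins for a segmented table query, and a plain integer offset plays the role of the continuation token. It is not the code from Scott's sample.

```python
from typing import List, Optional, Tuple

# Hypothetical stand-in for a table of entities; not real Azure data.
ENTITIES = [f"entity-{i}" for i in range(1, 9)]


def fetch_segment(token: Optional[int], page_size: int) -> Tuple[List[str], Optional[int]]:
    """Return one page of results plus the token for the next page.

    The token is None when there is nothing left to read, which mimics
    a segmented query that hands back a continuation token.
    """
    start = token or 0
    items = ENTITIES[start:start + page_size]
    next_start = start + page_size
    next_token = next_start if next_start < len(ENTITIES) else None
    return items, next_token


class TokenPager:
    """Keeps a stack of the tokens used so far so the user can page back."""

    def __init__(self, page_size: int = 3) -> None:
        self.page_size = page_size
        self.history: List[Optional[int]] = []  # top of the stack = current page
        self.next_token: Optional[int] = None

    def _load(self, token: Optional[int]) -> List[str]:
        items, self.next_token = fetch_segment(token, self.page_size)
        return items

    def first(self) -> List[str]:
        self.history = [None]  # the first page needs no token
        return self._load(None)

    def forward(self) -> List[str]:
        if self.next_token is None:
            raise IndexError("already on the last page")
        token = self.next_token
        self.history.append(token)  # remember how we got to this page
        return self._load(token)

    def back(self) -> List[str]:
        if len(self.history) < 2:
            raise IndexError("already on the first page")
        self.history.pop()  # drop the current page's token
        return self._load(self.history[-1])
```

In a Web page, the `history` list and `next_token` would be the state kept in session between post backs; here they simply live on the object.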

My live OakLeaf Systems Azure Table Services Sample Project demonstrates paging with continuation tokens.

i-NewsWire published a Manage Azure Blob Storage with CloudBerry Explorer press release on 4/27/2010:

CloudBerry Lab has released CloudBerry Explorer v1.1, an application that allows users to manage files on Windows Azure blob storage just as they would on their local computers.

CloudBerry Explorer allows end users to accomplish simple tasks without special technical knowledge and to automate time-consuming tasks to improve productivity. Among the new features are Development Storage and $root container support and availability of the professional version of CloudBerry Explorer for Azure.

Development storage is a local implementation of Azure storage that is installed along with the Azure SDK. Users can use it to test and debug their Azure applications locally before deploying them to Azure. The newer version of CloudBerry Explorer allows working with the Development Storage the same way you work with the online storage.

The $root container is a default container in an Azure Blob Storage account. With the newer release of CloudBerry Explorer, users can work with the $root container just like with any other container.

With the release 1.1 CloudBerry Lab also introduces the PRO version of CloudBerry Explorer for Azure Blob storage. This version will have all the features of CloudBerry S3 Explorer PRO but designed exclusively for Windows Azure. CloudBerry Explorer PRO for Azure will be priced at $39.99 but for the first month CloudBerry Lab offers an introductory price of $29.99.

In addition CloudBerry Lab as a part of their strategy to support leading Cloud Storage Providers is working on the version of CloudBerry Backup designed to work with Azure Blob Storage. This product is expected to be available in May 2010.

For more information & to download your copy, visit our Web site at:

SQL Azure Database, Codename “Dallas” and OData

Johannes Kebeck’s Bing Maps & Codename “Dallas” post of 4/28/2010 to the MapForums.com community site shows you how to integrate weather information from Weather Central from Codename “Dallas” with Bing Maps:

You can run the sample application here. Johannes’ original post of 4/28/2010 with all screen captures visible is here.

Ritz Covan offers source code and a live demo for his Netflix browser: OData, Prism, MVVM and Silverlight 4 project of 4/28/2010:

During one of the keynotes at MIX 2010, Doug Purdy discussed OData and explained that Netflix, working in conjunction with Microsoft, had created and launched an OData feed. Armed with that information and being a big Netflix fan, I decided to whip up a small demo application taking advantage of this new feed while showcasing some of the cool stuff that is baked into Prism as well. If you don’t know Prism, check out this previous post where I provide some resources for getting up to speed with it. I’m considering doing a few screen casts that walk through how I created the Netflix browser if there is an appetite for it, so let me know.

Here is a screen shot of the final app or you can run the real thing here

Mike Flasko announces the availability of a Deep Fried OData podcast on 4/27/2010:

I had the pleasure of sitting down with Chris Woodruff, a host of the Deep Fried Bytes podcast, at MIX 2010 to talk about OData and some of the announcements around OData at the conference.

The podcast is available at: http://deepfriedbytes.com/podcast/episode-53-a-lap-around-odata-with-mike-flasko/.

Mike is Lead Program Manager, Data Services.

The OData Team confirms that The Open Data Protocol .NET Framework Client Library – Source Code Available for Download in this 4/26/2010 post to the OData blog:

We are happy to announce that we have made the source code for the .NET Framework 3.5 SP1 and Silverlight 3.0 Open Data Protocol (OData) client libraries available for download on the CodePlex website. This release represents the OData team's continued commitment to the OData protocol and the ecosystem that has been built around it. We have had requests for assistance in building new client libraries for the OData protocol and we are releasing the source for the .NET Framework and Silverlight client libraries to assist in that process. We encourage anyone who is interested in the OData ecosystem and building OData client libraries to download the code.

The source code has been made available under the Apache 2.0 license and is available for download by anyone with a CodePlex account. To download the libraries, visit the OData CodePlex site at http://odata.codeplex.com.

It’s interesting that an Apache 2.0 license covers the source code, rather than CodePlex’s traditional Microsoft Public License (Ms-PL).

Jeff Barnes provides a link to Phani Raju’s post of the same name in Server Driven Paging With WCF Data Services of 4/27/2010 to the InnovateShowcase blog:

Looking for an easy way to page thru your result sets ->server-side?

Check-out this blog post for an easy walk-thru of how to easily enable data paging with WCF Data Services.

WCF Data Services - enables the creation and consumption of OData services for the web (formerly known as ADO.NET Data Services).

This feature is a server driven paging mechanism which allows a data service to gracefully return partial sets to a client.
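From the client's side, server-driven paging amounts to following "next" links until the service stops sending one. The Python sketch below is a hedged illustration, not Phani Raju's code: the payload shape follows the OData verbose JSON convention of a `d` object with `results` and an optional `__next` link, while the URLs, the `PAGES` dictionary and the `read_all` helper are hypothetical and stand in for real HTTP calls.

```python
from typing import Callable, Dict, Iterator, List

# Hypothetical in-memory "service": each URL maps to one partial result set.
START = "http://example.invalid/Service.svc/Titles"
PAGES: Dict[str, dict] = {
    START: {"d": {"results": ["A", "B"], "__next": START + "?$skiptoken=2"}},
    START + "?$skiptoken=2": {"d": {"results": ["C", "D"], "__next": START + "?$skiptoken=4"}},
    START + "?$skiptoken=4": {"d": {"results": ["E"]}},  # no "__next": last page
}


def read_all(url: str, fetch: Callable[[str], dict]) -> Iterator[str]:
    """Yield every result, following the server's next links page by page."""
    next_url = url
    while next_url is not None:
        body = fetch(next_url)["d"]
        yield from body["results"]
        next_url = body.get("__next")  # absent when the set is complete


requested: List[str] = []


def fake_fetch(url: str) -> dict:
    """Stand-in for an HTTP GET; records which URLs were requested."""
    requested.append(url)
    return PAGES[url]


titles = list(read_all(START, fake_fetch))
```

The client never chooses the page size here; the service decides how much to return and tells the client where to continue.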

Andy Novick gives you a guided tour of the SQL Azure Migration Wizard in this 4/21/2010 post to the MSSQLTips.com blog, which Google Alerts missed when published:

Problem

SQL Azure provides relational database capability in the cloud. One of the features that is missing is a way to move databases up or down from your in-house SQL server. So how do you move a database schema and data up to the cloud? In this tip I will walk through a utility that will help make the migration much easier.

Solution

SQL Azure is Microsoft's relational database that is part of its Windows Azure Cloud Platform as a Service offering. While it includes most of the features of SQL Server 2008 it doesn't include any backup or restore capabilities that allow for hoisting schema and data from an on-premises database up to SQL Azure. The documentation refers to using SQL Server Management Studio's (SSMS) scripting capability for this task.

While SSMS has the ability to script both schema and data there are several problems with this approach:

- SSMS scripts all database features, but there are some features that SQL Azure doesn't support

- SSMS doesn't always get the order of objects correct

- SSMS scripts data as individual insert statements, which can be very slow

Recognizing that the SSMS approach doesn't work very well, two programmers, George Huey and Wade Wegner, created the SQL Azure Migration Wizard (SAMW) and posted it on CodePlex. You'll find the project on the SQL Azure Migration Wizard page where you can download the program including the source code, engage in discussions and even post a patch if you're interested in contributing to the project.

You'll need the SQL Server 2008 R2 client tools (November CTP or later) and the .NET Framework on the machine where you unzip the download file. There's no install program, at least not yet, just the SQLAzureMW.exe program and configuration files.

Andy continues with a post that’s similar to my Using the SQL Azure Migration Wizard v3.1.3/3.1.4 with the AdventureWorksLT2008R2 Sample Database of 1/23/2010.

AppFabric: Access Control and Service Bus

John Fontana questions whether Microsoft’s ADFS 2.0 [Glass is] Half-empty? [or] Half-full? and concludes it’s half-empty in this 4/28/2010 post to Ping Identity’s Ping Talk blog:

In the next few days, Microsoft says it will RTM Active Directory Federation Services 2.0, a piece the software giant needs to extend Active Directory to create single sign-on between local network resources and cloud services.

Back in October 2008, I was the first reporter to write about the impending arrival of ADFS 2.0, then code-named Geneva, and Microsoft’s plan to storm the identity federation market with its claims-based model. I followed Geneva and wrote about its evolution, including the last nail in the project – support for the SAML 2.0 protocol to go along with Microsoft’s similar protocol WS-Federation.

But what will arrive this week is more of a glass half-full, glass half-empty story, one end-users should closely evaluate.

Half-full. Microsoft validates a market when they move into it with the sort of gusto that is behind ADFS 2.0, a Security Token Service, even though smaller companies such as Ping have been providing federation technology since 2002. That validation should help IT, HR and others more easily push their federation projects. And more than a few companies should join those, such as Reardon, already enjoying identity federation and Internet SSO.

ADFS 2.0 is “free” for Active Directory users, which is a word that resonates with CIOs. And Microsoft has been running ADFS 2.0 on its internal network since May 2009, giving it nearly a year to vet bugs and other issues.

But potential users should look deeper.

Half-empty. ADFS 2.0 was slated to ship a year ago, what were the issues that caused it to slip and have they been corrected?

Microsoft’s support for the full SAML spec is first generation. Late last year was the first time Microsoft participated in and passed an independent SAML 2.0 interoperability test, an eight-day affair put on by Liberty Alliance and Kantara. Ping, which had participated previously, also passed and was part of the testing against Microsoft.

Microsoft's testing during the event focused on SAML's Service Provider Lite, Identity Provider Lite and eGovernment profiles. The “lite” versions of those are a significant sub-set of the full profiles. Microsoft says it plans to support other SAML profiles based on demand. After the testing, Burton Group analysts said Microsoft had “covered the core bases” for SAML 2.0 support. For some deploying SAML that might be enough, for others it could fall short. …

The “Geneva” Team’s Update on Windows CardSpace post of 4/27/2010 announced:

We have decided to postpone the release of Windows CardSpace 2.0. This is due to a number of recent and exciting developments in technologies such as U-Prove and Open ID that can be used for Information Cards and other user-centric identity applications. We are postponing the release to get additional customer feedback and engage with the industry on these technologies. We will communicate additional details at a later time.

As part of our continued investment in these areas, we will deliver a Community Technology Preview in Q2 2010 that will enable the soon-to-be-released Active Directory Federation Services 2.0 (AD FS 2.0) in Windows Server to issue Information Cards.

Microsoft remains committed to the development of digital identity technologies, interoperable identity standards, the claims-based identity model, and Information Cards. AD FS 2.0 is on track for release shortly. We also continue to actively participate in industry groups such as the Information Card Foundation, the OpenID Foundation, and standards bodies such as OASIS.

Dave Kearns recommends that you Invest in federated identity management now in this 4/27/2010 post to the NetworkWorld Security blog:

Now truly is the time for organizations to invest in federated identity management. Not surprising to hear that from me, but it's actually a paraphrase of a statement by Tom Smedinghoff, partner at Chicago law firm Wildman Harrold, in an interview with Government Information Security News. …

Now Mr. Smedinghoff uses a somewhat broader definition of federated identity than, say, the Kantara Initiative. He offers this analogy:

"The best example I like to use is the process that you go through when you board an airplane at the airport and you go through security. The TSA could go through a process of identifying all passengers, issuing them some sort of a credential or an identification document and then maintaining a database, so as passengers go through they would check them against that database and so forth.

“But what they do instead is really a whole lot more efficient and a whole lot more economical, and that is to rely on an identification process done by somebody else -- in this case it is a government entity typically that issues driver's licenses at a state level or passports at the federal level. But by relying on this sort of identification of a third party, it is much more economical, much more efficient and works better for everybody involved and of course the passengers don't need to carry an extra identification document."

But the important part of the interview is his explanation of the four legal challenges federation partners face:

- Privacy and security – "there is a fair amount of concern about what level of security are we providing for that information, and what are the various entities doing with it?"

- Liability – "what is their [the identity provider's] liability if they are wrong?"

- Rules and enforcement – "We need everybody who is participating to know what everybody else is responsible for doing, and need some assurance that they really are going to do it correctly."

- Existing laws – "And as you do this across borders, of course, it complicates it even more." …

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Fabrice Marguerie announced Sesame update du jour: SL 4, OOB, Azure, and proxy support on 4/28/2010:

I’ve just published a new version of Sesame Data Browser.

Here’s what’s new this time:

- Upgraded to Silverlight 4

- Can run out-of-browser (OOB), with elevated permissions. This gives you an icon on your desktop and enables new scenarios. Note: The application is unsigned for the moment.

- Support for Windows Azure authentication

- Support for SQL Azure authentication

- If you are behind a proxy that requires authentication, just give Sesame a new try after clicking on “If you are behind a proxy that requires authentication, please click here”

- An icon and a button for closing connections are now displayed on connection tabs

- Some less visible improvements

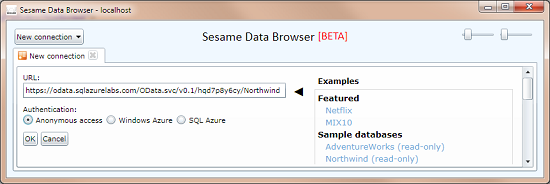

Here is the connection view with anonymous access:

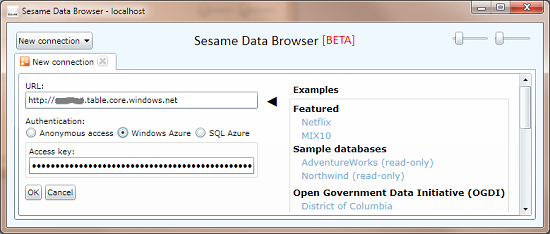

If you want to access Windows Azure tables as OData, all you have to do is use your table storage endpoint as the URL, and provide your access key:

A Windows Azure table storage address looks like this: http://<your account>.table.core.windows.net/
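As a rough illustration of that address format, the Python sketch below composes the endpoint and an OData-style entity query URL. The account name `myaccount`, the table name `Customers` and the helper names are hypothetical. A real request also needs authentication headers built from the access key, which this sketch deliberately leaves out.

```python
from typing import Optional
from urllib.parse import quote, urlencode


def table_endpoint(account: str) -> str:
    """Build the table storage endpoint for a storage account name."""
    return f"http://{account}.table.core.windows.net/"


def entity_query_url(account: str, table: str,
                     top: Optional[int] = None,
                     filter_expr: Optional[str] = None) -> str:
    """Build an OData-style query URL for the entities of one table."""
    params = {}
    if filter_expr:
        params["$filter"] = filter_expr
    if top is not None:
        params["$top"] = str(top)
    # Keep "$" readable in the option names; percent-encode everything else.
    query = urlencode(params, safe="$", quote_via=quote)
    url = f"{table_endpoint(account)}{table}()"
    return f"{url}?{query}" if query else url


# Hypothetical account and table names, for illustration only.
url = entity_query_url("myaccount", "Customers", top=3,
                       filter_expr="PartitionKey eq 'a'")
```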

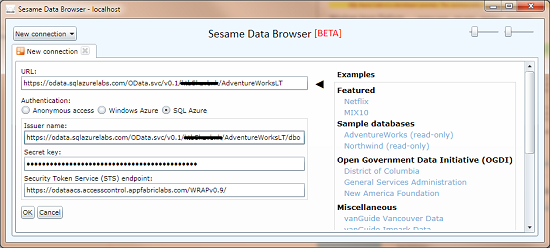

If you want to browse your SQL Azure databases with Sesame, you have to enable OData support for them at https://www.sqlazurelabs.com/ConfigOData.aspx.

You can choose to enable anonymous access or not. When you don’t enable anonymous access, you have to provide an Issuer name and a Secret key, and optionally a Security Token Service (STS) endpoint:

Jim O’Neill and Brian Hitney are running a couple of live instances of their Folding@home Windows Azure protein-folding project at http://distributed.cloudapp.net and http://distributedjoneil2.cloudapp.net.

For more information on this project, see Jim’s Feeling @Home with Windows Azure, at Home blog post of 4/24/2010.

Abel Avram quotes Bill Zack’s posts in Scenarios and Solutions for Using Windows Azure in this InfoQ article of 4/27/2010:

Bill Zack, Architect Evangelist for Microsoft, has detailed in an online presentation key scenarios for using the cloud and solutions provided by Windows Azure.

There are applications with a usage pattern that makes them appropriate for the cloud, but there are also applications that are better not deployed onto a cloud because the owner ends up spending more to run them.

Workloads

- On and Off – applications that are used sporadically during certain periods of time over the day or the year. Many batch jobs that run at the end of the day or the month fall in this category. Providing the required capacity for such applications is more expensive than running them in the cloud because much of the time the respective capacity lies unused.

- Growing Fast or Failing Fast – a workload pattern encountered by startups which cannot accurately predict the rate of success of their new business and, consequently, the actual capacity needs. Startups usually start small, increasing their capacity over time when demand rises. Such applications are fit for the cloud because the cloud can accommodate the growing resource needs quickly.

- Unpredictable Bursting – this happens, for example, when the usual load on a web server is temporarily increased by a large value, so large that the system does not cope with the transient traffic. The owners should have provided enough capacity to absorb such loads, but they did not expect such peak of traffic. Even if they did anticipate it, the added capacity would sit mostly unused. This is another good candidate for the cloud.

- Predictable Bursting – the load constantly varies in a predictable way over time. The owner could buy the necessary equipment and software having it on-premises without having to rely on a cloud provider.
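The cost argument behind these patterns is simple arithmetic: owned capacity is paid for at peak size every hour, while pay-per-use capacity is paid for only when consumed. The Python sketch below works through it with entirely hypothetical numbers (10 servers, a 30-day month, 10 cents per owned server-hour, 12 cents per cloud server-hour). None of these figures come from Zack's presentation or from Windows Azure pricing.

```python
from typing import List

HOURS_PER_MONTH = 24 * 30  # a 30-day month, for simplicity

# Hypothetical rates in cents per server-hour; integers avoid rounding noise.
OWNED_RATE = 10
CLOUD_RATE = 12


def owned_cost_cents(peak_servers: int, hours: int, rate: int) -> int:
    """On-premises: pay for peak capacity every hour, used or not."""
    return peak_servers * hours * rate


def cloud_cost_cents(hourly_demand: List[int], rate: int) -> int:
    """Pay-per-use: pay only for the server-hours actually consumed."""
    return sum(hourly_demand) * rate


def utilization(hourly_demand: List[int]) -> float:
    """Fraction of peak capacity that is actually used over the period."""
    return sum(hourly_demand) / (max(hourly_demand) * len(hourly_demand))


# "On and Off": a batch job that needs 10 servers for 2 hours each day.
on_and_off = ([10, 10] + [0] * 22) * 30
# A steady workload that keeps all 10 servers busy around the clock.
steady = [10] * HOURS_PER_MONTH

owned = owned_cost_cents(10, HOURS_PER_MONTH, OWNED_RATE)  # 72,000 cents
cloud_on_and_off = cloud_cost_cents(on_and_off, CLOUD_RATE)  # 7,200 cents
cloud_steady = cloud_cost_cents(steady, CLOUD_RATE)  # 86,400 cents
```

Under these assumptions the on-and-off job uses about 8% of its owned capacity and costs a tenth as much on pay-per-use, while the steady workload costs more in the cloud than on owned hardware. That steady workload is the kind of application that is better not moved.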

Zack continues by describing scenarios for computation, storage, communications, deployment and administration along with the solutions provided by Windows Azure. …

Eric Nelson offers a 45-minute video on introduction to Windows Azure and running Ruby on Rails in the cloud in this 4/27/2010 post:

Last week I presented at Cloud and Grid Exchange 2010. I did an introduction to Windows Azure and a demo of Ruby on Rails running on Azure.

My slides and links can be found here – but just spotted that the excellent Skills Matter folks have already published the video.

Watch the video at http://skillsmatter.com/podcast/cloud-grid/looking-at-the-clouds-through-dirty-windows. …

Bruce Kyle posted From Paper to the Cloud Part 2 – Epson’s Windows 7 Touch Kiosk to the US ISV Developer blog on 4/27/2010:

In Part 2 of From Paper to the Cloud, Epson Software Engineer Kent Sisco shows how Windows 7 Touch can be used in a kiosk setting with a printer and scanner. Epson Imaging Technology Center (EITC) team has created an application called Marksheets that converts marks on paper forms into user data on Windows Azure Platform.

Mark sheets are forms that can now be printed on standard printers and marked similar to the optically scanned standardized tests that we've all used.

You can apply this technology to create your own data input form or mark sheet. Users can print the form on demand then mark, scan, access their data in the cloud.

The demo is a prototype for printing, scanning, and data storage applications for education, medicine, government, and business.

Other Videos in This Series

Channel 9 Videos in this series:

Next up: Marksheets for medical input.

Toddy Mladenov answers users’ Windows Azure Diagnostics–Where Are My Logs? questions in this 4/27/2010 tutorial:

Recently I noticed that a lot of developers who are just starting to use Windows Azure hit issues with diagnostics and logging. It seems I didn’t go down the same path other people do, because I was able to get diagnostics running the first time. Therefore I decided to investigate what could possibly be the problem.

I created a quite simple Web application with only one Web Role and one instance of it. The only purpose of the application was to write a trace message every time a button is clicked on a web page.

In the OnStart() method of the Web role I commented out the following line:

    DiagnosticMonitor.Start("DiagnosticsConnectionString");

and added my custom log configuration:

    DiagnosticMonitorConfiguration dmc =
        DiagnosticMonitor.GetDefaultInitialConfiguration();
    dmc.Logs.ScheduledTransferPeriod = TimeSpan.FromMinutes(1);
    dmc.Logs.ScheduledTransferLogLevelFilter = LogLevel.Verbose;
    DiagnosticMonitor.Start("DiagnosticsConnectionString", dmc);

Here is also the event handler for the button:

    protected void BtnSmile_Click(object sender, EventArgs e)
    {
        // Toggle the label text and write a trace message on every click.
        if (string.IsNullOrEmpty(this.lblSmile.Text))
        {
            this.lblSmile.Text = ":)";
            System.Diagnostics.Trace.WriteLine("Smiling...");
        }
        else
        {
            this.lblSmile.Text = "";
            System.Diagnostics.Trace.WriteLine("Not smiling...");
        }
    }

This code worked perfectly, and I was able to get my trace messages after about a minute running the app in DevFabric.

After confirming that the diagnostics infrastructure works as expected, my next goal was to see under what conditions I would see no logs generated by the Windows Azure Diagnostics infrastructure. I reverted all the changes in the OnStart() method and ran the application again. Not very surprisingly, I saw no logs after a minute of waiting. Somewhere in my mind popped the value 5 mins, and I decided to wait. But even after 5 or 10, or 15 mins I saw nothing in the WADLogsTable. Apparently the problem comes from the default configuration of the DiagnosticMonitor, done through the following line:

    DiagnosticMonitor.Start("DiagnosticsConnectionString"); …

Toddy continues with more details about the fix.

Eugenio Pace announced Windows Azure Guidance – New Code & Doc drop on CodePlex on 4/26/2010:

We are almost content complete for our first Windows Azure Architecture Guide (the most probable name for our book). Available for download today:

- New updated samples, including all file processing and background tasks (lots of small nuggets in there, such as use of multiple tasks in a single Worker, continuation tokens, data model optimization, etc). Most of this has been discussed in previous posts.

- 7 Chapters of the guide are now available. Again, I’ve been covering most of this in previous blog posts, but these are much nicer thanks to the work of our technical writing team: Dominic Betts, Colin Campbell and Roberta Leibovitz.

Let us know what you think. I hope to hand this over to the production team so we can move on to the next scenario. More on this soon.

I’m anxious to see the book.

tbtechnet provides the answer to I’m In The Cloud – Now What? in this 4/26/2010 post:

There are some great resources available to guide a developer to create cloud applications.

See for example, these:

- For USA developers, no-cost phone and email support during and after the Windows Azure virtual boot camp with the Front Runner for Windows Azure program.

- For non-USA developers - sign up for Green Light https://www.isvappcompat.com/global

- Learn how to put up a simple application on to Windows Azure

- Take the Windows Azure virtual lab

- View the series of Web seminars designed to quickly immerse you in the world of the Windows Azure Platform

- Why Windows Azure - learn why Azure is a great cloud computing platform with these fun videos

- Download the Windows Azure Platform Training Kit

- PHP on Windows Azure

So your application is now in the cloud? Now what? How do you tell the world about your application? How do you connect with a market and selling channel?

Check out these “market/sell” resources:

- www.pinpoint.com - this is a directory of sorts where you can get a profile of your company and your application professionally written, at no cost.

- Connect with channel and sales partners such as resellers, distributors that can help you reach a huge audience via the Channel Development Toolkit

So you’re not only in the cloud, but you’re now connecting with customers and partners to help them help you be successful.

Colin Melia presents a 00:55:00 dnrTV show #170, Colin Melia on Azure:

Colin Melia shows how to develop applications in the cloud with Windows Azure, including PHP!

Colin Melia is the Principal Architect for DreamDigital, a technical speaker and trainer on Microsoft technologies, as well as a user group leader and academic advisory committee member in Ottawa. He has been a hands-on Architect for over 17 years having developed award-winning rich desktop simulation technology, cloud-based learning portals and workflow-driven performance tracking BI systems as well as creating the first streaming video community site with Windows Media. He has worked in the finance, telecoms, e-learning, Internet communications and gaming industries, with his latest business solutions currently in use by thousands of users world-wide in corporations like GE, HP, O2, Cisco, IBM, Microsoft & Reuters.

Windows Azure Infrastructure

John Treadway’s Enterprise Cloud Musings post of 4/27/2010 from The State of the Cloud conference in Boston compares the TCO of private and public clouds:

The enterprise market is a bit like a riddle wrapped in a mystery inside an enigma. On the one hand, the investment by service providers in “enterprise class” cloud services continues to accelerate. On the other hand, pretty much all I hear from enterprise customers is how they are primarily interested in private clouds. What to make of this, I wonder?

At “The State of the Cloud” conference in Boston today, most of the users talked about their concerns over public clouds and their plans for (or experience with) private clouds. There was some openness to low-value applications, and for specific cases such as cloud analytics. We do hear about “a lot” of enterprise cloud usage these days, but most of that is dev/test or unified communications, and not strategic business applications. So where’s the disconnect?

One of the speakers said it best — “we’re just at the beginning stages here and the comfort level will grow.” So enterprise IT is getting comfortable with the operational models of clouds while the technologies and providers mature to the point that “we can trust them.” It’s understandable that this would be the case. If enterprises fully adopt cloud automation models and cost optimization techniques internally, any scale benefits for external cloud providers will take longer to become meaningful. IT can be far more efficient than it is today in most companies, and if the private cloud model gets the job done, it will delay what is likely the inevitable shift to public cloud utilities.

Stated another way, the more successful we are at selling private clouds to the enterprise, the longer it will take for the transition to public clouds to occur.

As you see in the chart above, it is likely that the TCO gap between traditional IT and the public cloud will narrow as enterprises implement private clouds. Some enterprises are already at or below the TCO of many public cloud providers – especially the old-line traditional hosting companies who don’t have the scale of an Amazon or Google. Over time, the survivors in the public cloud space, including those with enterprise class capabilities, will gain the scale to increase their TCO advantage over in-house IT.

It may take a long time to see this out, and this is a general model. Individual companies and cloud providers won’t fit this chart, but it’s likely that the overall market will trend this way. TCO is not the only factor – but where costs matter the public cloud model will eventually win out.

The Microsoft TechNet Wiki added the Windows Azure Survival Guide on 4/27/2010:

This article is a stub for the list of resources you need to join the Windows Azure community of IT Pros, feel free to add to it - it is the wiki way!

To check whether the wiki was working, I opened an account and completed my profile, but the wiki wouldn’t save it. So I added an article to check whether authoring worked: It did.

Lori MacVittie claims Infrastructure can be a black box only if its knobs and buttons are accessible in her They’re Called Black Boxes Not Invisible Boxes post of 4/27/2010 from Interop:

I spent hours at Interop yesterday listening to folks talk about “infrastructure.” It’s a hot topic, to be sure, especially as it relates to cloud computing. After all, it’s a keyword in “Infrastructure as a Service.” The problem is that when most people say “infrastructure” it appears what they really mean is “server” and that just isn’t accurate.

If you haven’t been in a data center lately, there is a whole lot of other “stuff” that falls under the infrastructure moniker in a data center that isn’t a server. You might also have a firewall, anti-virus scanning solutions, a web application firewall, a load balancer, WAN optimization solutions, identity management stores, routers, switches, storage arrays, a storage network, an application delivery network, and other networky-type devices. Oh there’s more than that but I can’t very well just list every possible solution that falls under the “infrastructure” umbrella or we’d never get to the point.

In information technology and on the Internet, infrastructure is the physical hardware used to interconnect computers and users. Infrastructure includes the transmission media, including telephone lines, cable television lines, and satellites and antennas, and also the routers, aggregators, repeaters, and other devices that control transmission paths. Infrastructure also includes the software used to send, receive, and manage the signals that are transmitted.

In some usages, infrastructure refers to interconnecting hardware and software and not to computers and other devices that are interconnected. However, to some information technology users, infrastructure is viewed as everything that supports the flow and processing of information.

-- TechTarget definition of “infrastructure”

The reason this is important to remember is that people continue to put forth the notion that cloud should be a “black box” with regards to infrastructure. Now in a general sense I agree with that sentiment but if – and only if – there is a mechanism to manage the resources and services provided by that “black boxed” infrastructure. For example, “servers” are infrastructure and today are very “black box” but every IaaS (Infrastructure as a Service) provider offers the means by which those resources can be managed and controlled by the customer. The hardware is the black box, not the software. The hardware becomes little more than a service. …

Lori continues her essay with a “STRATEGIC POINTS of CONTROL” topic.

<Return to section navigation list>

Cloud Security and Governance

David Linthicum asserts that “The recent bricking of computers by McAfee should not be used to shine a bad light on cloud computing” in his The imperfect cloud versus the imperfect data center post of 4/28/2010 to InfoWorld’s Cloud Computing blog:

While I enjoyed fellow InfoWorld blogger Paul Venezia's commentary "McAfee's blunder, cloud computing's fatal flaw," I once again found myself in the uncomfortable position of defending cloud computing. Paul is clearly reaching a bit by stating that McAfee's ability to brick many corporate PCs reflects poorly on the concept of cloud computing.

Paul is suspicious that the trust we're placing in centralized resources -- using McAfee as an example -- could someday backfire, as central failures within cloud computing providers become massive business failures and as we become more dependent on the cloud.

However, I'm not sure those in the cloud computing space would consider poorly tested profile updates that come down from central servers over the Internet as something that should be used to knock cloud computing. Indeed, were this 15 years ago, the poorly tested profile updates would have come on a disk in the mail. No cloud, but your computer is toast nonetheless. …

I agree with Dave. As I noted in this item at the end of my Windows Azure and Cloud Computing Posts for 4/26/2010+ post:

Paul Venezia posits “McAfee's update fiasco shows even trusted providers can cause catastrophic harm” in his McAfee's blunder, cloud computing's fatal flaw post of 4/26/2010 to InfoWorld’s The Deep End blog:

“Paul’s arguments fall far short of proving organizations that use off-premises PaaS are more vulnerable to amateurish quality control failures than those who run all IT operations in on-premises data centers. This is especially true of an upgrade bug that obliterated clients’ network connectivity.”

See John Fontana questions whether Microsoft’s ADFS 2.0 [Glass is] Half-empty? [or] Half-full? and concludes it’s half-empty in the AppFabric: Access Control and Service Bus section. Also see the “Geneva” Team’s related Update on Windows CardSpace post of 4/27/2010 in that section.

David Linthicum claims “A recent Harris Poll shows that cloud computing's lack of security -- or at least its perception -- is making many Americans uneasy about the whole idea” in his Cloud security's PR problem shouldn't be shrugged off post of 4/27/2010 to InfoWorld’s Cloud Computing blog:

"One of the main issues people have with cloud computing is security. Four in five online Americans (81 percent) agree that they are concerned about securing the service. Only one-quarter (25 percent) say they would trust this service for files with personal information, while three in five (62 percent) would not. Over half (58 percent) disagree with the concept that files stored online are safer than files stored locally on a hard drive and 57 percent of online Americans would not trust that their files are safe online."

That's the sobering conclusion from a recent Harris poll conducted online between March 1 and 8 among 2,320 adults.

Cloud computing has a significant PR problem. I'm sure there will be comments below about how cloud computing, if initiated in the context of a sound security strategy, is secure -- perhaps more so than on-premise systems. While I agree to some extent, it's clear that the typical user does not share that confidence, which raises a red flag for businesses seeking to leverage the cloud.

If you think about it, users' fears are logical, even though most of us in the know understand them to be unfounded. For a typical user, it's hard to believe information stored remotely can be as safe as or safer than systems they can see and touch.

Of course, you can point out the number of times information walks out the door on USB thumb drives, stolen laptops, and other ways that people are losing information these days. However, there continues to be a mistrust of resources that are not under your direct control, and that mindset is bad for the cloud. …

See the James Quin will conduct a Security and the Cloud Webinar on 5/12/2010 at 9:00 to 9:45 AM PDT in the Cloud Computing Events section.

North Carolina State News reports New Research Offers Security For Virtualization, Cloud Computing on 4/27/2010:

Virtualization and cloud computing allow computer users access to powerful computers and software applications hosted by remote groups of servers, but security concerns related to data privacy are limiting public confidence—and slowing adoption of the new technology. Now researchers from North Carolina State University have developed new techniques and software that may be the key to resolving those security concerns and boosting confidence in the sector.

"What we've done represents a significant advance in security for cloud computing and other virtualization applications," says Dr. Xuxian Jiang, an assistant professor of computer science and co-author of the study. "Anyone interested in the virtualization sector will be very interested in our work." …

One of the major threats to virtualization—and cloud computing—is malicious software that enables computer viruses or other malware that have compromised one customer's system to spread to the underlying hypervisor and, ultimately, to the systems of other customers. In short, a key concern is that one cloud computing customer could download a virus—such as one that steals user data—and then spread that virus to the systems of all the other customers.

"If this sort of attack is feasible, it undermines consumer confidence in cloud computing," Jiang says, "since consumers couldn't trust that their information would remain confidential."

But Jiang and his Ph.D. student Zhi Wang have now developed software, called HyperSafe, that leverages existing hardware features to secure hypervisors against such attacks. "We can guarantee the integrity of the underlying hypervisor by protecting it from being compromised by any malware downloaded by an individual user," Jiang says. "By doing so, we can ensure the hypervisor's isolation."

For malware to affect a hypervisor, it typically needs to run its own code in the hypervisor. HyperSafe utilizes two components to prevent that from happening. First, the HyperSafe program "has a technique called non-bypassable memory lockdown, which explicitly and reliably bars the introduction of new code by anyone other than the hypervisor administrator," Jiang says. "This also prevents attempts to modify existing hypervisor code by external users."

Second, HyperSafe uses a technique called restricted pointer indexing. This technique "initially characterizes a hypervisor's normal behavior, and then prevents any deviation from that profile," Jiang says. "Only the hypervisor administrators themselves can introduce changes to the hypervisor code."

The research was funded by the U.S. Army Research Office and the National Science Foundation. The research, "HyperSafe: A Lightweight Approach to Provide Lifetime Hypervisor Control-Flow Integrity," will be presented May 18 at the 31st IEEE Symposium On Security And Privacy in Oakland, Calif. [Emphasis added.]

In Oakland???

See the 31st IEEE Symposium On Security And Privacy post in the Cloud Computing Events section.
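
The "restricted pointer indexing" idea quoted in the HyperSafe item above can be pictured with a toy sketch. This is not HyperSafe's code, and it runs nowhere near a hypervisor; it only illustrates the general notion of fixing the set of legitimate indirect-call targets ahead of time and letting callers hold an index into that set rather than a raw pointer. All names here (`TARGET_TABLE`, `indirect_call`, the handlers) are hypothetical and chosen for illustration.

```python
from typing import Callable, Tuple


# Hypothetical handlers standing in for legitimate indirect-call targets.
def handle_read(arg: int) -> str:
    return f"read {arg}"


def handle_write(arg: int) -> str:
    return f"write {arg}"


# Assumption: the profile of allowed targets is built once, up front.
# A tuple is used so the table cannot be extended or reassigned in place.
TARGET_TABLE: Tuple[Callable[[int], str], ...] = (handle_read, handle_write)


def indirect_call(index: int, arg: int) -> str:
    """Dispatch through the fixed table; callers supply an index, never a function."""
    if not isinstance(index, int) or not 0 <= index < len(TARGET_TABLE):
        # Anything outside the precomputed profile is refused.
        raise PermissionError(f"control-flow target {index!r} is outside the profile")
    return TARGET_TABLE[index](arg)


if __name__ == "__main__":
    print(indirect_call(0, 7))
    print(indirect_call(1, 3))
    try:
        indirect_call(2, 0)  # index 2 was never part of the profile
    except PermissionError as err:
        print("blocked:", err)
```

In this toy version an attacker who can only corrupt the index can still reach nothing but the pre-approved handlers, which is the intuition behind preventing "any deviation from that profile." The real system enforces this at the machine-code level and pairs it with the memory lockdown described in the quote.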

Chris Hoff (@Beaker) wrote Introducing The HacKid Conference – Hacking, Networking, Security, Self-Defense, Gaming & Technology for Kids & Their Parents on 4/26/2010:

This is mostly a cross-post from the official HacKid.org website, but I wanted to drive as many eyeballs to it as possible.

The gist of the idea for HacKid (sounds like “hacked,” get it?) came about when I took my three daughters aged 6, 9 and 14 along with me to the Source Security conference in Boston.

It was fantastic to have them engage with my friends, colleagues and audience members as well as ask all sorts of interesting questions regarding the conference.

It was especially gratifying to have them in the audience when I spoke twice. There were times the iPad I gave them was more interesting, however.

The idea really revolves around providing an interactive, hands-on experience for kids and their parents which includes things like:

- Low-impact martial arts/self-defense training

- Online safety (kids and parents!)

- How to deal with CyberBullies

- Gaming competitions

- Introduction to Programming

- Basic to advanced network/application security

- Hacking hardware and software for fun

- Build a netbook

- Make a podcast/vodcast

- Lockpicking

- Interactive robot building (Lego Mindstorms?)

- Organic snacks and lunches

- Website design/introduction to blogging

- Meet law enforcement

- Meet *real* security researchers

We’re just getting started, but the enthusiasm and offers from volunteers and sponsors have been overwhelming!

If you have additional ideas for cool things to do, let us know via @HacKidCon (Twitter) or better yet, PLEASE go to the Wiki and read about how the community is helping to make HacKid a reality and contribute there!

Hoping to drive some more eyeballs.

<Return to section navigation list>

Cloud Computing Events

The Windows Azure Team’s Live in the UK and Want to Learn More About Windows Azure? Register Today For A FREE Windows Azure Self-paced Learning Course post of 4/27/2010 promotes Eric Nelson’s and David Gristwood’s Windows Azure Self-paced Learning Course:

Do you live in the UK and want to learn more about Windows Azure? Then you'll want to register today for the Windows Azure Self-paced Learning Course, a 6-week virtual technical training course developed by Microsoft Evangelists Eric Nelson and David Gristwood. The course, which will run from May 10 to June 18 2010, provides interactive, self-paced, technical training on the Windows Azure platform - Windows Azure, SQL Azure and the Windows Azure Platform AppFabric.

Designed for programmers, system designers, and architects who have at least six months of .NET framework and Visual Studio programming experience, the course provides training via interactive Live Meetings sessions, on-line videos, hands-on labs and weekly coursework assignments that you can complete at your own pace from your workplace or home.

The course will cover:

- Week 1 - Windows Azure Platform

- Week 2 - Windows Azure Storage

- Week 3 - Windows Azure Deep Dive and Codename "Dallas"

- Week 4 - SQL Azure

- Week 5 - Windows Azure Platform AppFabric Access Control

- Week 6 - Windows Azure Platform AppFabric Service Bus

Don't miss this chance to learn much more about Windows Azure, space is limited so register today!

Finally, tell your friends and encourage them to sign up via Twitter using the suggested twitter tag #selfpacedazure

The Windows Azure AppFabric Team announces Application Infrastructure: Cloud Benefits Delivered, a Microsoft Webinar to be presented on 5/20/2010 at 8:30 AM PDT:

http://www.appinfrastructure.com

We would like to highlight an exciting upcoming event which brings a fresh view on the latest trends and upcoming product offerings in the Application Infrastructure space. This is a Virtual Event that focuses on bringing some of the benefits of the cloud to customers’ current IT environments while also enabling connectivity between enterprise, partners, and cloud investments. Windows Azure AppFabric is a key part of this event.

Want to bring the benefits of the cloud to your current IT environment? Cloud computing offers a range of benefits, including elastic scale and never-before-seen applications. While you ponder your long-term investment in the cloud, you can harness a number of cloud benefits in your current IT environment now.

Join us on May 20 at 8:30 A.M. Pacific Time to learn how your current IT assets can harness some of the benefits of the cloud on-premises—and can readily connect to new applications and data running in the cloud. As part of the Virtual Launch Event, Gartner vice president and distinguished analyst Yefim Natis will discuss the latest trends and biggest questions facing the Application Infrastructure space. He will also speak about the role Application Infrastructure will play in helping businesses benefit from the cloud. Plus, you’ll hear some exciting product announcements and a keynote from Abhay Parasnis, GM of Application Server Group at Microsoft. Parasnis will discuss the latest Microsoft investments in the Application Infrastructure space aimed at delivering on-demand scalability, highly available applications, a new level of connectivity, and more. Save the date!

Dom Green, Rob Fraser, and Rich Bower will present RiskMetrics – a UK Azure Community presentation on 5/27/2010 at the Microsoft London office:

RiskMetrics is one of the leading providers of financial risk analysis and I have had the pleasure to be working with them over the past couple of months to deliver their RiskBurst platform.

The RiskMetrics guys, Rob Fraser (Head of Cloud Computing) and Rich Bower (Dev Lead) and myself will be delivering a presentation on the platform, our lessons learned and how this integrates with the current data centre RiskMetrics have.

The session will be taking place on May 27th from the Microsoft London office and is sure to be one not to miss, as these guys are doing some great stuff with Windows Azure and really pushing the platform.

Here is an outline of our session:

High Performance Computing across the Data Centre and the Azure Cloud

RiskMetrics, the leading provider of financial risk analytics, is engaged in building RiskBurst, an elastic high performance computing capability that spans the data centre and the Azure cloud. The talk will describe the design and implementation of the solution, experiences and lessons learnt from working on Azure, and the operational issues associated with running a production capability using a public “cloud bursting” architecture.

Sign up for the event here: http://ukazurenet-powerofthree.eventbrite.com

Steve Fox will present W13 Integrating SharePoint 2010 and Azure: Evolving Towards the Cloud on 8/4/2010 at VSLive! on the Microsoft campus:

SharePoint 2010 provides a rich developer story that includes an evolved set of platform services and APIs. Combine this with the power of Azure, and you’ve got a great cloud story that integrates custom, hosted services in the cloud with the strength of the SharePoint platform. In this session, you’ll see how you can leverage SQL Azure data in the cloud, custom Azure services, and hosted Azure data services within your SharePoint solutions. Specific integration points with SharePoint are custom Web parts, Business Connectivity Services, and Silverlight.

Steve is a Microsoft Senior Technical Evangelist.

James Quin will conduct a Security and the Cloud Webinar on 5/12/2010 at 9:00 to 9:45 AM PDT:

Security is not about eliminating risks to the enterprise, it is about mitigating these risks to acceptable levels. As organizations increase their use of software-as-a-service, some question the security risks associated to the business. Is our information at risk from unauthorized use or deletion? Is security the same with the internal and external cloud? In this webinar, Info-Tech’s senior analyst James Quin will discuss the challenges and concerns the market faces today regarding security and cloud-based technologies.

This webinar will cover the following:

- Why companies associate business risk with cloud-based technologies

- What you can do to minimize risks associated with cloud computing

- Communicating security issues to non-IT business leaders

- The future of security and the cloud

Who should attend this webinar:

- IT leaders from both small and large enterprises who are thinking about or have just started leveraging the cloud

- IT leaders who have questions they’d like answered about security risks associated with cloud computing

The webinar will include a 30 minute presentation and a 15 minute Q&A.

Quin is a Lead Research Analyst with Info-Tech Research Group. James has held a variety of roles in the field of Information Technology for over 10 years with organizations including Secured Services Inc., Arqana Technologies, and AT&T Canada.

The 31st IEEE Symposium On Security And Privacy, which will take place 5/16 thru 5/19/2010 at the Claremont Hotel in Oakland, CA, is sold out. The Program is here.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Chad Catacchio reports Amazon CTO Vogels Plays with Internet Building Blocks in the Cloud in this post of 4/28/2010 to The Next Web blog:

Werner Vogels, Amazon’s CTO and Information Week’s 2009 Chief of the Year, gave a keynote here at The Next Web Conference about “The Future Building Blocks of the Internet”.

According to Vogels, in Q1 2010, Amazon Web Services served over 100 billion objects. “If you go to a VC and say you aren’t using the scale of these services they will think your head is not on right”.

Vogels insists that in the future, all apps will need to include a core set of functionalities: rich media experience; multi-device access; location context aware; real-time presence driven; social graph based; user generated content; virtual goods economy; recommendations; integrated with social networks; and advertisement and premium support.

“Think of it as an international startup competition where I choose the winners.” Vogels went through a number of infrastructure cloud-based services including Drop.io (file sharing), Panda (security), SimpleGeo (location), Animoto (video), Twilio (VoIP), Echo (real-time conversation), Amazon Mechanical Turk (crowdsourced human labor), Social Gold (virtual currency), Chargify (payments), OpenX (advertising), 80Legs and github (development).

Vogels concluded by saying that all apps need to “have a lot of stuff” – basically, all apps need to include many if not all of the above functionalities offered in the above or similar services.

Michael Coté offers a detailed look at VMforce’s Spring and tooling in his The Java cloud? VMforce – Quick Analysis post of 4/28/2010:

“The new thing is that force.com now supports an additional runtime, in addition to Apex. That new runtime uses the Java language, with the constraint that it is used via the Spring framework. Which is familiar territory to many developers. That’s it. That’s the VMforce announcement for all practical purposes from a user’s perspective.”

–William Vambenepe, Cloud Philosopher-at-Large, Oracle

Later this year, Salesforce will have an additional, more pure-Java friendly way to deliver applications in their cloud. The details of pricing and packaging are to be ironed out and announced later, so there’s no accounting for that. Presumably, it will be cheap-ish, esp. compared to some list price WebSphere install run on-prem with high-end hardware, storage, networking, and death-by-nines ITSM.

For developers, etc.

The key attributes for developers are the ability to use Java instead of Salesforce’s custom APEX language, access to Salesforce’s services, and easier integration and access to the Salesforce customer base.

Spring

Partnering with VMware to use Spring is an excellent move. It brings in not only the Spring Framework, but the use of Tomcat and one of the strongest actors in the Java world at the moment. There’s still a feel of proprietariness, less than “pure” Java to the platform in the same way that Google AppEngine doesn’t feel exactly the same as an anything goes Java Virtual Machine. You can’t bring your own database, for example, and one wonders what other kinds of restrictions there would be with respect to bringing any Java library you wanted – like a Java based database, web server, etc. But, we soothe our tinkering inner-gnome that, perhaps, there are trade-offs to be made, and they may be worth it.

(Indeed, in my recent talks on cloud computing for developers I try to suggest that the simplicity a PaaS brings might be worth it if it speeds up development, allowing you to deliver features more frequently and with less ongoing admin hassle to your users.)

Tools, finishing them out

The attention given to the development tool-chain is impressive and should be a good reference point for others in this area. Heroku is increasingly heralded as a good way of doing cloud development, and key to their setup is a tight integration – like, really tight – between development, deployment, and production. The Heroku way (seems to) shoot simplicity through all that, which looks to make it possible. The “dev/ops” shift is a big one to make – like going from Waterfall to Agile – but so far signs show that it’s not just cowboy-coder-crap.

Throw in some VMforce integration with github and jam in some SaaS helpdesk (hello, Salesforce!), configuration management, and cloud-based dev/test labs…and you’re starting to warm the place up, addressing the “85 percent of [IT] budget just keeping the lights on” that Salesforce’s Anshu Sharma wags a finger at.

PaaS as a plugin framework, keeping partners alive

“In theory what it means for Java developers is that there’s sort of a ready marketplace community for them to develop their applications,” said RedMonk analyst Michael Cote. “Because there is that tighter integration between the Salesforce application and ecosystem, it kind of helps accelerate the market for these [applications].”

Many PaaSes are shaking out to be the new way to write plugins for an existing, large install-base. Of course, Salesforce will protect its core revenue stream, and without any anti-trust action against Apple, the sky’s the limit when it comes to using fine print to compete on your own platform by shutting out “plugins” (or “apps”) you see as too competitive. That’s always a risk for a PaaS user, but I suspect a manageable one here and in many cases. …

Don’t miss William Vambenepe’s Analyzing the VMforce announcement (linked above) and be sure to read Carl Brooks’ (@eekygeeky’s) comment to the post.

VMforce announced VMforce: The trusted cloud for enterprise Java developers on 4/27/2010:

Salesforce.com and VMware introduce VMforce—the first enterprise cloud for Java developers. With VMforce, Java developers can build apps that are instantly social and available on mobile devices in real time. And it’s all in the cloud, so there’s no hardware to manage and no software stack to install, patch, tune, or upgrade. Building Java apps on VMforce is easy!

- Use the standard Spring Eclipse-based IDE

- Code your app with standard Java, including POJOs, JSPs, and Servlets

- Deploy your app to VMforce with 1 click

We take care of the rest. With VMforce, every Java developer is now a cloud developer. …

Sounds to me like serious PaaS competition for Azure.

Tim Anderson’s VMforce: Salesforce partners VMware to run Java in the cloud analyzes this new Windows Azure competitor in a 4/27/2010 post:

Salesforce and VMware have announced VMforce, a new cloud platform for enterprise applications. You will be able to deploy Java applications to VMforce, where they will run on a virtual platform provided by VMware. There will be no direct JDBC database access on the platform itself, but it will support the Java persistence API, with objects stored on Force.com. Applications will have full access to the Salesforce CRM platform, including new collaboration features such as Chatter, as well as standard Java Enterprise Edition features provided by Tomcat and the Spring framework. Springsource is a division of VMware.

A developer preview will be available in the second half of 2010; no date is yet announced for the final release.

There are a couple of different ways to look at this announcement. From the perspective of a Force.com developer, it means that full Java is now available alongside the existing Apex language. That will make it easier to port code and use existing skills. From the perspective of a Java developer looking for a hosted deployment platform, it means another strong contender alongside others such as Amazon’s Elastic Compute Cloud (EC2).

The trade-off is that with Amazon EC2 you have pretty much full control over what you deploy on Amazon’s servers. VMforce is a more restricted platform; you will not be able to install what you like, but have to run on what is provided. The advantage is that more of the management burden is lifted; VMforce will even handle backup.

I could not get any information about pricing or even how the new platform will be charged. I suspect it will compete more on quality than on price. However I was told that smooth scalability is a key goal.

More information here.

You can watch a four-part video of Paul Maritz’ and Marc Benioff’s VMforce launch here.

Bob Warfield analyzes VMforce: Salesforce and VMWare’s Cool New Platform as a Service in this 4/27/2010 post to the Enterprise Irregulars blog:

Salesforce and VMware have big news today with the pre-announcement of VMforce. Inevitably it will be less big than the hype that’s sure to come, but that’s no knock on the platform, which looks pretty cool. Fellow Enterprise Irregular and Salesforce VP Anshu Sharma provides an excellent look at VMforce.

What is VMforce and how is it different from Force.com?

There is a lot to like about Force.com and a fair amount to dislike. Let’s start with Force.com’s proprietary not-quite-Java language. Suppose we could dump that language and write vanilla Java? Much better, and this is exactly what VMforce offers. Granted, you will need to use the Spring framework with your Java, but that’s not so bad. According to Larry Dignan and Sam Diaz, Spring is used with over half of all Enterprise Java projects and 95% of all bug fixes to Apache Tomcat. That’s some street cred for sure.

Okay, that eliminates the negative of the proprietary language, but where are the positives?

Simply put, there is a rich set of generic SaaS capabilities available to your application on this platform. Think about all the stuff that’s in Salesforce.com’s applications that isn’t specific to the application itself. These are capabilities any SaaS app would love to have on tap. They include:

- Search: Ability to search any and all data in your enterprise apps

- Reporting: Ability to create dashboards and run reports, including the ability to modify these reports

- Mobile: Ability to access business data from mobile devices ranging from BlackBerry phones to iPhones

- Integration: Ability to integrate new applications via standard web services with existing applications

- Business Process Management: Ability to visually define business processes and modify them as business needs evolve

- User and Identity Management: Real-world applications have users! You need the capability to add, remove, and manage not just the users but what data and applications they can have access to

- Application Administration: Usually an afterthought, administration is a critical piece once the application is deployed

- Social Profiles: Who are the users in this application so I can work with them?

- Status Updates: What are these users doing? How can I help them and how can they help me?

- Feeds: Beyond user status updates, how can I find the data that I need? How can this data come to me via Push? How can I be alerted if an expense report is approved or a physician is needed in a different room?

- Content Sharing: How can I upload a presentation or a document and instantly share it in a secure and managed manner with the right set of co-workers?

Pretty potent stuff. The social features, reporting, integration, and business process management are areas that seem to be just beyond the reach of a lot of early SaaS apps. It requires a lot of effort to implement all that, and most companies just don’t get there for quite a while. I know these were areas that particularly distinguished my old company Helpstream against its competition. Being able to have them all in your offering because the platform provides them is worth quite a lot.

There is also a lot of talk about how you don’t have to set up the stack, but I frankly find that a lot less compelling than these powerful “instant features” for your program. The stack just isn’t that hard to manage any more. Select the right machine image and spin it up on EC2 and you’re done.

That’s all good to great. I’m not aware of another Platform that offers all those capabilities, and a lot of the proprietary drawbacks to Force.com have been greatly reduced, although make no mistake, there is still a lot to think about before diving into the platform without reservation. Force.com has had some adoption problems (I’m sure Salesforce would dispute that), and I have yet to meet a company that wholeheartedly embraced the platform rather than just trying to use it as an entrée to the Salesforce ecosystem (aka customers and demand generation).

Bob continues with “What are the caveats?”

<Return to section navigation list>